- Why Do Enterprises Need an AI Strategy That Scales?

- Siloed Pilots That Never Connect

- Growing Data Debt

- Lack Of Governance and Accountability

- Unclear ROI Tracking

- What Are the Guiding Principles for an Enterprise AI Strategy?

- Business-First, Outcome-Driven Planning

- Data As A First-Class Asset

- Governance By Design

- Iterative, Measurement-Driven Scaling

- Platform Thinking

- What Is the Step-By-Step Framework for Building an Enterprise AI Strategy?

- Step 1: Executive Alignment And Sponsorship

- Step 2: Readiness Assessment

- Step 3: Use-Case Selection And Prioritization

- Step 4: Data And Platform Architecture

- Step 5: Pilot Implementation

- Step 6: Governance, Ethics And Compliance

- Step 7: Scale And Sustain

- Top Enterprise AI Use Cases Defining 2026

- Enterprise AI Use Cases Delivering the Highest ROI

- What Do Enterprise AI Technical Architectures Look Like in Practice?

- Real-Time Customer Personalization Architecture

- Back-Office Decision Intelligence Architecture

- Sample MLOps Pipeline

- Example KPI And Model Metric Mapping

- What Is the Cost of Building an AI Strategy?

- Enterprise AI Solution Types And Investment Ranges

- Program Phase Cost And Timeline

- Key Cost Drivers

- How Do Enterprises Measure Success in AI Strategy?

- Business And Model Performance Signals

- Operational And ROI Tracking

- What Are Real Enterprise Examples of Successful AI Strategy?

- J.P. Morgan: Contract Automation (COIN)

- UPS: Route Optimization (ORION)

- Walmart: Demand Forecasting And Inventory Optimization

- How Does Appinventiv Help Enterprises Build AI Strategy?

- FAQs

Key takeaways:

- Enterprise AI fails without a structured strategy, governance, and shared data foundations.

- Readiness assessment and phased pilots turn AI ambition into scalable execution.

- Data architecture and MLOps platforms are critical to enterprise AI scalability.

- Governance, compliance, and lifecycle controls build trust in enterprise AI systems.

- Measuring KPIs and tracking ROI keeps AI investments aligned with business outcomes.

Most enterprise teams hit a moment where AI efforts start to feel scattered. One business unit launches a virtual assistant. Another experiments with AI-driven predictive analytics for forecasting. A third signs up for a generative AI tool. Useful, yes. Strategic, not yet. This is usually when leadership starts asking a sharper question. How do we turn AI investment into consistent business results instead of isolated wins?

This guide is built for leaders shaping an AI strategy for business at enterprise scale. The focus is practical. Driving measurable ROI. Reducing operational and regulatory risk. Building AI capabilities that grow across applications, departments, and markets without rebuilding everything from scratch. A strong enterprise AI strategy does not chase experimentation. It creates repeatable execution, governed delivery, and technology foundations that support long-term growth.

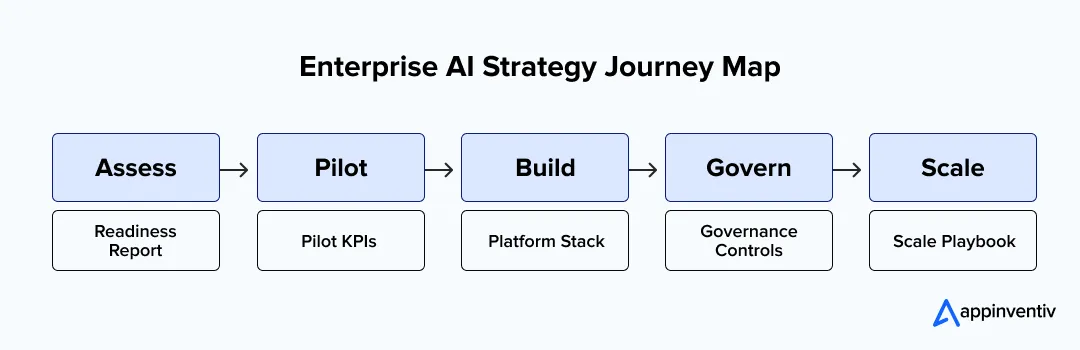

The path follows a clear progression. You assess readiness across data, infrastructure, skills, and risk posture. You pilot priority use cases with defined success metrics. You build shared data and MLOps platforms. You govern models with security, compliance, and lifecycle controls. Then you scale AI across the organization with accountability and cost discipline.

The sections ahead walk through each phase in depth so your team can move from ambition to enterprise-grade implementation.

Why Do Enterprises Need an AI Strategy That Scales?

Most large organizations do not fail at experimenting with AI. They fail at turning experiments into dependable business capabilities. The risks and AI adoption challenges in enterprise solutions rarely show up on day one. They surface later, when pilots stall, costs rise, and leadership cannot trace AI investment to real outcomes.

Only 37% of enterprises without a formal AI strategy report successful AI adoption, while success rises to 80% when structured strategy and governance are in place. An AI adoption strategy for enterprise applications exists to prevent that slow breakdown.

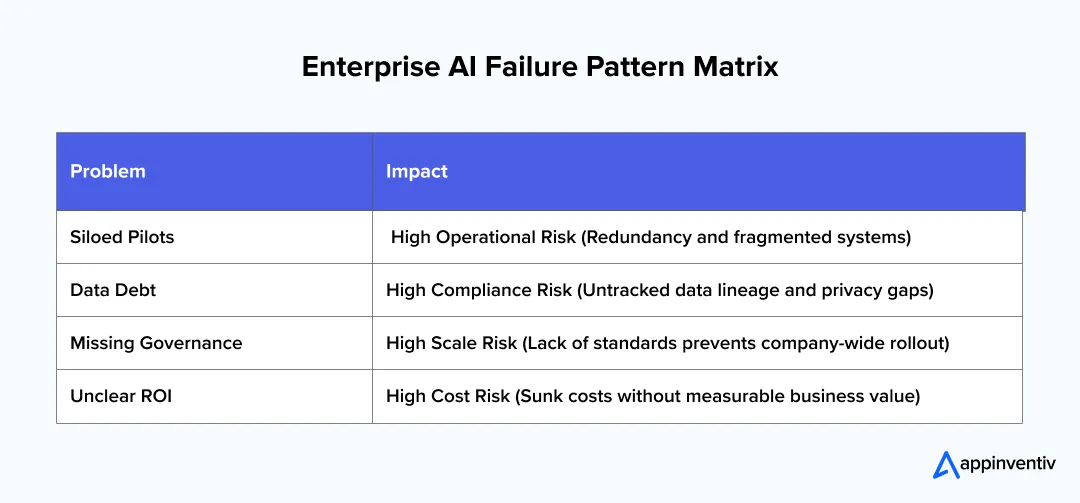

Below are the most common failure patterns seen across enterprise AI programs.

Siloed Pilots That Never Connect

Teams often launch AI initiatives inside individual departments. Each group selects its own tools, data sources, and deployment methods. Progress looks fast at first. Still, integration becomes painful later.

Typical symptoms:

- Duplicate data pipelines across teams

- Models built without shared standards

- No clear path from pilot to production

- Reinvention of the same use cases in multiple units

Growing Data Debt

AI systems depend on reliable data flows. Without a coordinated data strategy, technical debt accumulates quietly. Over time, models degrade, and trust erodes.

Common signals:

- Inconsistent data definitions across systems

- Missing lineage and audit trails

- Poor data quality controls

- Delays in accessing enterprise datasets

Lack Of Governance and Accountability

Many enterprises deploy models without formal ownership, review cycles, or risk controls. This becomes a serious exposure as AI touches regulated or customer-facing processes.

Frequent gaps:

- No model lifecycle policies

- Limited bias and explainability testing

- Unclear responsibility for model failures

- Weak security and access controls

Unclear ROI Tracking

AI investments often proceed without a measurement framework. Leadership then struggles to justify further scaling.

Typical issues:

- Technical metrics tracked without business KPIs

- No cost visibility across model training and inference

- Benefits reported anecdotally instead of financially

The business impact is tangible. Missed revenue opportunities. Compliance exposure. Operational risk. A scalable enterprise AI strategy addresses these failure points before they become expensive constraints.

What Are the Guiding Principles for an Enterprise AI Strategy?

Most enterprise AI programs do not break because of weak technology. They break because decisions are made in isolation. One team optimizes for speed. Another optimizes for compliance. A third optimizes for innovation. Without shared principles, these efforts pull in different directions. A practical AI strategy framework keeps everyone aligned. It gives your AI business strategy consistency, even as teams move at different speeds.

Business-First, Outcome-Driven Planning

If AI initiatives start with model selection, misalignment shows up later. Strong programs begin with a simple question. What business result are we committing to deliver?

This business-first approach is what separates effective AI for business strategy from technology-driven experiments that fail to deliver ROI.

In real enterprise environments, this means:

- Business leaders define target outcomes first

- Technical teams map models to those outcomes

- Executive sponsors stay accountable for results

Data As A First-Class Asset

Every scaled AI system eventually runs into a data bottleneck. Enterprises that plan ahead treat data pipelines like core infrastructure, not side projects.

Teams that do this well:

- Know where critical data originates

- Track how data moves across systems

- Fix data quality issues before models break

- Make datasets easy to find across departments

Governance By Design

Governance is often seen as a brake on innovation. In practice, it is what allows innovation to survive in regulated environments.

Mature organizations:

- Review models before and after deployment

- Test for bias and explainability early

- Control who can access sensitive data

- Align AI operations with compliance teams

Iterative, Measurement-Driven Scaling

No enterprise scales AI in one leap. Progress happens in short cycles, with lessons carried forward.

Teams that succeed:

- Run pilots with clear pass or fail criteria

- Monitor model performance over time

- Adjust plans based on real results

Platform Thinking

Scaling becomes easier when teams stop building everything from scratch. Shared platforms reduce friction.

This usually includes:

- Common data pipelines

- Shared model repositories

- Standard deployment and monitoring workflows

These principles keep an enterprise AI strategy practical, resilient, and ready for long-term growth.

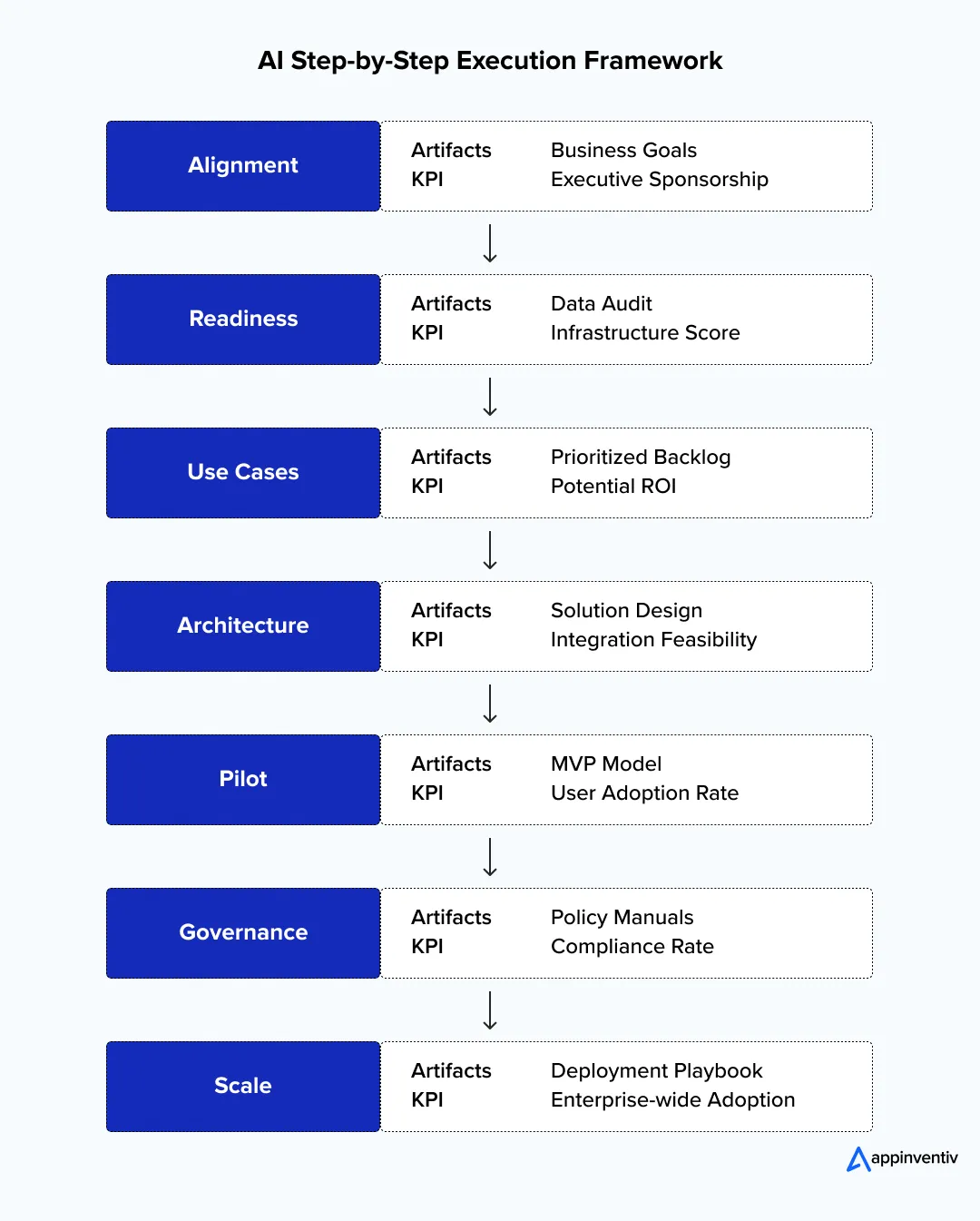

What Is the Step-By-Step Framework for Building an Enterprise AI Strategy?

In large organizations, AI strategy planning only becomes real when it changes how budgets are approved, how systems are built, and how teams make decisions.

An AI strategy consulting plan works the same way. It is not a conceptual roadmap sitting in a drive folder. It is a sequence of practical moves that guide AI investment planning for enterprises from intent to operating reality.

Each step below creates a concrete output, a decision point, and a signal that it is safe to move forward.

Step 1: Executive Alignment And Sponsorship

Most AI initiatives slow down when ownership becomes unclear. One team funds the pilot. Another team owns the data. A third team is asked to deploy the model. This matters because AI adoption is already widespread. 88% of organizations now use AI in at least one business function, which means leadership alignment is no longer optional; it is a competitive necessity.

The first step is avoiding that situation.

Start by defining a clear AI vision through AI-powered market research consulting that integrates AI and business strategy, aligning technology initiatives with strategic business priorities. This means setting specific business objectives, target KPIs, and expected ROI before any model or platform decisions are made.

Typical objectives include faster approvals, lower servicing costs, and better retention. Once objectives are clear, leadership selects an executive sponsor who carries decision authority across departments. Budget framing follows next, so funding is planned, not requested in emergencies halfway through a pilot.

Artifacts that typically come out of this step:

- A short executive brief tying AI initiatives to business priorities

- A backlog of testable AI hypotheses

- A ranked list of outcomes the organization commits to deliver

Early KPI signals often include:

- Estimated cost savings per workflow

- Reduction in time to reach decisions

- Customer experience improvements such as NPS movement

This is where the AI strategy plan stops being abstract and starts having accountable owners. This step also establishes enterprise AI literacy at the C-level and creates a shared understanding of current AI maturity and data readiness levels.

Step 2: Readiness Assessment

Enterprises rarely start from zero. They have data warehouses, analytics teams, legacy systems, and security frameworks. The real question is whether these pieces are ready to support scaled AI. This step answers that before money is spent on development. It is also where AI strategy consulting for enterprises typically accelerates progress.

The enterprise AI integration assessment looks at five areas. Data maturity. Infrastructure. Talent. Operating processes. Risk posture. The goal is to find friction points early by following an AI readiness guide.

Tools commonly used:

- Data inventories that map where critical datasets live

- Capability matrices across data engineering, ML, and DevOps

- Technical debt scoring for legacy platforms

The core deliverable is an AI readiness report. It outlines foundational gaps, recommends pilot candidates, and estimates potential performance lift once blockers are removed.

Quick scoring checks often review:

- Data access and reliability

- Integration effort with existing systems

- Skill availability inside teams

- Security and compliance preparedness
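The quick scoring checks above can be rolled into a single number for comparison across business units. The sketch below is one possible way to do that, not a prescribed method: the area names mirror the four checks, while the weights and the 1 to 5 rating scale are illustrative assumptions.

```python
# Hypothetical weights for the four quick checks; tune them to your own priorities.
WEIGHTS = {
    "data_access_reliability": 0.35,
    "integration_effort": 0.25,
    "skill_availability": 0.20,
    "security_compliance": 0.20,
}


def readiness_score(ratings: dict) -> float:
    """Convert 1-5 ratings per area into a weighted 0-100 readiness score."""
    missing = sorted(set(WEIGHTS) - set(ratings))
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    for area in WEIGHTS:
        if not 1 <= ratings[area] <= 5:
            raise ValueError(f"{area} must be rated 1-5, got {ratings[area]}")
    weighted = sum(weight * ratings[area] for area, weight in WEIGHTS.items())
    # Rescale the 1-5 weighted average onto a 0-100 scale.
    return round((weighted - 1) / 4 * 100, 1)


example = {
    "data_access_reliability": 2,
    "integration_effort": 3,  # rated so that 5 means "easy to integrate"
    "skill_availability": 4,
    "security_compliance": 4,
}
print(readiness_score(example))
```

A low score in a heavily weighted area, such as data access, is usually a stronger signal than the overall number, so it helps to report the per-area ratings alongside the total.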

This step grounds AI strategy development in the current state of the enterprise, not optimism. It also exposes common AI adoption barriers early, including weak data foundations, skills gaps, scarcity of AI talent, and misalignment between AI initiatives and business objectives.

Identifying these risks upfront allows leadership to secure support, introduce standard protocols, strengthen analytics solutions, and plan targeted enablement before launching AI-powered automation or business-grade AI products.

Step 3: Use-Case Selection And Prioritization

Once readiness is understood, attention shifts to deciding where to start. Knowing how to build an AI strategy in an enterprise means picking battles that matter and can actually be won. The output of this step becomes the working AI roadmap for enterprises.

Use cases are weighed through professional AI integration consulting for business to balance value with feasibility. Value covers revenue growth, efficiency gains, automation opportunities, and customer feedback analysis.

Feasibility evaluates data governance readiness, workflow documentation, model quality metrics, and responsible AI practices. This ensures the AI portfolio stays aligned with stakeholder priorities and enterprise risk management processes.
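As a rough illustration of weighing value against feasibility, the sketch below ranks candidate use cases by the product of two 1 to 5 ratings. The scoring rule, the tie-break on value, and the example ratings are all assumptions for illustration; real programs often use richer criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    value: int        # 1-5: revenue, efficiency, automation potential
    feasibility: int  # 1-5: data readiness, documentation, risk fit


def rank_use_cases(cases):
    """Order use cases by value x feasibility, breaking ties on value."""
    return sorted(
        cases,
        key=lambda c: (c.value * c.feasibility, c.value),
        reverse=True,
    )


# Hypothetical ratings, not taken from any real assessment.
backlog = rank_use_cases([
    UseCase("Personalized engagement", value=4, feasibility=2),
    UseCase("Back-office automation", value=3, feasibility=5),
    UseCase("Fraud detection", value=5, feasibility=3),
])
for case in backlog:
    print(case.name, case.value * case.feasibility)
```

Multiplying the two ratings penalizes use cases that are strong on one axis but weak on the other, which matches the goal of picking battles that both matter and can be won.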

High-impact enterprise clusters often fall into:

- Personalized customer engagement with generative AI for business

- Decision support for planning and operations

- Automation of repetitive back-office work by building an AI app for internal tasks

- Fraud and anomaly detection

Guardrails are set before pilots begin. Teams agree on acceptable data usage, explainability expectations, and integration boundaries.

ROI modeling stays simple on purpose:

- Estimated implementation cost

- Expected annual benefit

- Payback period

- Adoption sensitivity assumptions
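The four inputs above are enough for a back-of-the-envelope payback calculation. The sketch below assumes benefits accrue evenly over the year and scale linearly with adoption; both are simplifications, and the function name and figures are illustrative.

```python
def payback_period_months(implementation_cost: float,
                          annual_benefit: float,
                          adoption_rate: float = 1.0) -> float:
    """Months needed for realized benefit to cover the implementation cost."""
    if implementation_cost < 0:
        raise ValueError("implementation_cost must be non-negative")
    if annual_benefit <= 0:
        raise ValueError("annual_benefit must be positive")
    if not 0 < adoption_rate <= 1:
        raise ValueError("adoption_rate must be in (0, 1]")
    # Assumption: benefit is spread evenly across 12 months.
    realized_monthly_benefit = annual_benefit * adoption_rate / 12
    return implementation_cost / realized_monthly_benefit


# Adoption sensitivity: how payback stretches if fewer teams use the system.
for rate in (1.0, 0.75, 0.5):
    months = payback_period_months(120_000, 240_000, adoption_rate=rate)
    print(f"adoption {rate:.0%}: payback in {months:.1f} months")
```

Running the same calculation at several adoption rates makes the sensitivity assumption explicit instead of burying it in a single headline number.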

The result is a ranked backlog that becomes the AI strategy roadmap for enterprises, outlining priority use cases, required AI capabilities, and sequencing across teams. This roadmap is then communicated to C-level stakeholders and business units to secure stakeholder buy-in, align operating models, and keep strategic planning consistent across the organization.

In mature programs, an appointed AI communicator-in-chief or transformation lead ensures the roadmap stays visible, progress is tracked, and AI maturity assessments are revisited as capabilities evolve.

Step 4: Data And Platform Architecture

Most scaling problems in AI are architecture problems in disguise. This step defines the technical backbone that supports the enterprise AI implementation strategy.

Data architecture choices come first, including semantic AI for business data structures. Some organizations centralize data into a lakehouse. Others adopt data mesh aligned to business domains. Feature stores standardize how models access inputs. Streaming pipelines power real-time use cases. Batch pipelines handle analytical workloads. Model serving layers control how predictions reach enterprise applications, often utilizing RAG or fine-tuning approaches to optimize performance.

MLOps foundations are then laid down:

- Automated pipelines for model deployment

- A central model registry with version control

- Continuous monitoring for drift and degradation

- Explainability views for business and risk teams

Deployment patterns are chosen based on operational reality. Some workloads run fully in cloud environments. Others remain hybrid or on-prem to meet security or latency needs. US enterprises also factor in encryption standards, identity controls, audit logging, and data residency constraints.

At this stage, teams often introduce a visual reference, such as an enterprise AI platform stack diagram.

This step anchors the AI strategy framework for enterprise apps in engineering structures that can grow over time.

At this stage, many enterprises move from planning to execution. Appinventiv’s AI development services help convert reference architectures into production-ready systems, covering model engineering, platform integration, and secure deployment aligned with enterprise governance requirements.

Step 5: Pilot Implementation

This is where plans meet real systems. Pilots are designed to learn quickly without disrupting core operations. They are the practical expression of how to build an AI strategy for enterprise apps.

Pilots are time-boxed, following proven AI integration examples for business applications to define success. MVP models are built and tested against existing processes through controlled A/B comparisons. Every assumption is documented.

Typical technical checks include:

- Reproducible data pipelines

- Versioned training datasets

- Baseline model benchmarking

- Feature engineering standards

Operational planning covers how the pilot runs in production conditions. Some models deploy in cloud environments. Others sit closer to internal systems or edge locations to meet latency and SLA expectations.

The main deliverable is a pilot outcome report:

- Business KPIs achieved

- Model performance results

- Integration and data lessons

- A clear recommendation to scale or rework

This step validates the AI adoption strategy for enterprise applications before major expansion.

Step 6: Governance, Ethics And Compliance

As soon as AI touches customer data, financial decisions, or regulated processes, governance stops being optional. A durable artificial intelligence strategy bakes AI-powered data governance and risk control into everyday operations.

Model governance defines ownership and accountability. Model cards and data cards document training sources, intended use, and limitations so no system becomes a black box.

Risk control practices usually include:

- Bias and fairness testing routines

- Privacy impact assessments

- Access and activity audit trails

As AI systems take on decision-making roles, enterprises must also ensure responsible and ethical AI use. This means putting accountability, transparency, and fairness practices in place so models remain trustworthy under regulatory and public scrutiny.

Additional governance controls include:

- Bias detection and fairness assessment routines

- Responsible AI dashboards for continuous oversight

- Data governance and responsible data practices

- Transparency practices for model decisions

- Clear accountability for AI-driven outcomes

- Alignment with emerging AI regulations and ethical guidelines

Regulatory alignment is mapped into the roadmap. US enterprises often connect controls to HIPAA obligations, FTC consumer protection guidance, and state-level privacy laws. The focus is operational compliance, not legal theory.

Monitoring and incident response plans define:

- Threshold alerts for performance drops

- Rollback procedures when models misbehave

- Escalation paths for risk and compliance teams
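A minimal sketch of how threshold alerts and rollback triggers might be encoded is shown below. The 5% and 15% relative-drop thresholds and the `evaluate_model_health` name are hypothetical; appropriate values depend on the metric and the risk tolerance of the process.

```python
from enum import Enum


class Action(Enum):
    OK = "ok"
    ALERT = "alert"        # notify the model owner
    ROLLBACK = "rollback"  # revert to the previous approved version and escalate


def evaluate_model_health(current: float, baseline: float,
                          alert_drop: float = 0.05,
                          rollback_drop: float = 0.15) -> Action:
    """Map the relative drop of a quality metric to a response action."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    relative_drop = (baseline - current) / baseline
    if relative_drop >= rollback_drop:
        return Action.ROLLBACK
    if relative_drop >= alert_drop:
        return Action.ALERT
    return Action.OK


print(evaluate_model_health(current=0.84, baseline=0.90))
```

Keeping the thresholds as explicit parameters makes them easy to review with risk and compliance teams and to record alongside the model's documentation.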

This step keeps the AI strategy for business credible under internal and external scrutiny.

Step 7: Scale And Sustain

Scaling is where AI becomes part of everyday enterprise muscle memory. This step converts isolated wins into an enterprise AI transformation roadmap.

Successful pilots are productized. Shared pipelines and services are reused across departments. Platform capacity is expanded in controlled increments rather than sudden overhauls.

Organizational design matters here. Some enterprises form a centralized AI Center of Excellence. Others adopt federated execution with shared standards. RACI models clarify who owns data, models, deployment, and risk.

Cost discipline keeps scaling sustainable:

- Resource tagging and internal chargeback

- Retiring underused models

- Optimizing inference and compute consumption

Continuous improvement closes the loop:

- Retraining schedules

- Performance and uptime SLAs

- Ongoing tracking of business KPIs

At scale, enterprises also establish continuous evaluation practices. AI operating models are reviewed through regular internal assessments, design reviews, and risk assessments. Model and agent monitoring, responsible AI dashboards for bias evaluation, and system metrics tracking ensure policy enforcement, incident response readiness, and adaptation to regulatory changes.

This final stage ensures scaling AI in enterprise applications becomes repeatable, accountable, and ready for long-term evolution.

Top Enterprise AI Use Cases Defining 2026

By 2026, AI will operate as a core enterprise capability embedded across customer experience, operations, finance, and infrastructure. Leading organizations are scaling AI into governed, repeatable business systems rather than isolated pilots.

| Industry | Priority AI Use Cases |
|---|---|
| Healthcare | Predictive diagnostics, AI imaging, personalized treatment, patient automation |
| Banking & Finance | Fraud detection, credit risk scoring, automated reporting, personalized wealth insights |
| Retail & E-Commerce | Demand forecasting, dynamic pricing, recommendation engines, and visual search |
| Manufacturing | Predictive maintenance, vision-based quality control, digital twins |
| Logistics & Transportation | Route optimization, fleet intelligence, and real-time tracking |
| Telecommunications | Network optimization, churn prediction, and AI customer support |
| Energy & Utilities | Smart grids, consumption forecasting, and fault detection |
| Education | Adaptive learning, automated grading, virtual assistants |
| Real Estate | Property valuation, smart building management, and market prediction |
| Public Sector | Smart city operations, fraud prevention, and citizen automation |

Enterprise AI Use Cases Delivering the Highest ROI

While AI opportunities span every function, enterprise leaders must focus first on initiatives that balance measurable business impact with practical scalability. The table below highlights AI applications consistently delivering strong returns across industries.

| AI Application Area | Core Business Impact |
|---|---|
| Process automation | Faster operations, lower cost |
| AI customer service | Reduced response time |
| Predictive maintenance | Lower downtime |
| Fraud detection | Higher detection accuracy |
| Supply chain optimization | Reduced logistics cost |
| Personalization engines | Higher conversions |

By the end of 2026, over 80% of enterprises will run at least one production-grade AI automation system. Competitive advantage will belong to organizations that scale AI through governed platforms, not isolated experiments.

What Do Enterprise AI Technical Architectures Look Like in Practice?

Enterprise AI conversations often stay at a conceptual level. At some point, your architects and engineering leaders need something more concrete. This section translates the AI strategy for enterprise apps into reference architectures and operational patterns that teams can actually build against. Think of it as a bridge between strategy decks and engineering backlogs.

Real-Time Customer Personalization Architecture

Personalization systems live or die by latency. If a recommendation arrives after the customer has moved on, the model may be accurate but the business value is lost. Real-time personalization architectures are designed to keep data moving continuously from interaction to insight to action.

A typical component flow looks like this:

- Event streaming layer capturing user behavior from web, mobile, and product systems

- Stream processing engine for real-time feature extraction

- Feature store to maintain consistent model inputs across training and inference

- Low-latency model serving layer exposed through APIs

- Application layer consuming predictions in customer-facing experiences

Supporting services usually include:

- Identity resolution and session management

- Real-time monitoring for latency and prediction quality

- Fallback rules when models are unavailable

This pattern is often used for recommendation engines, next-best-action systems, and dynamic pricing. It is a practical example of an AI implementation strategy that prioritizes speed, scale, and customer experience at the same time.
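The "fallback rules when models are unavailable" item can be illustrated with a thin wrapper around the serving call. This is a sketch under assumptions: `recommend_with_fallback`, the callable interface, and the static fallback list are hypothetical and not tied to any particular serving framework.

```python
from typing import Callable, Sequence


def recommend_with_fallback(
    user_id: str,
    model_call: Callable[[str], Sequence[str]],
    fallback_items: Sequence[str],
) -> tuple:
    """Return (items, source), using rule-based defaults if the model fails."""
    try:
        items = list(model_call(user_id))
        if items:
            return items, "model"
    except Exception:
        # Broad catch on purpose: any serving failure should degrade
        # gracefully. In production, log the error before falling back.
        pass
    return list(fallback_items), "fallback"


def unavailable_model(user_id: str) -> Sequence[str]:
    """Stand-in for a serving endpoint that is currently down."""
    raise TimeoutError("model endpoint did not respond")


print(recommend_with_fallback("u-123", unavailable_model, ["bestseller-1", "bestseller-2"]))
```

Returning the source alongside the items lets monitoring track how often the fallback path is used, which is itself a useful health signal.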

Back-Office Decision Intelligence Architecture

Not every enterprise AI system needs real-time responses. Many high-value use cases sit in planning, finance, supply chain, and operations. These environments prioritize accuracy and auditability, often utilizing causal AI for strategic decision making.

A common architecture includes:

- Batch data ingestion from ERP, CRM, finance, and operations systems

- Batch feature pipelines for data preparation and aggregation

- Centralized model training environment

- Model registry for versioning and approval workflows

- Decision dashboards with embedded model explanations

- Human-in-the-loop interfaces for review and override

Supporting layers often include:

- Data quality validation before model training

- Explainability services to justify predictions

- Audit logs for regulatory and internal review

This reference pattern is widely used for demand forecasting, risk scoring, capacity planning, and compliance monitoring. It reflects an AI strategy for enterprise apps that values trust and traceability as much as predictive performance.

Sample MLOps Pipeline

Once architectures are defined, consistent delivery becomes the next priority. A mature MLOps pipeline keeps model development, deployment, and monitoring predictable across teams.

A typical CI/CD flow includes:

- Code commit and automated testing of data pipelines

- Training job triggered with versioned datasets

- Model validation against baseline performance thresholds

- Bias and explainability checks as automated gates

- Model registration with metadata and documentation

- Controlled deployment to staging and production

- Continuous monitoring for drift and degradation

Validation gates usually verify:

- Business metric thresholds

- Data integrity and schema stability

- Security and access compliance

This pipeline structure ensures the AI implementation strategy remains repeatable instead of dependent on individual teams.
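To make the idea of automated gates concrete, here is a minimal sketch of a gate check that a CI/CD job could run before registering a model. The field names, the fairness measure (largest gap in positive-prediction rate between groups), and the default thresholds are illustrative assumptions rather than a standard.

```python
from dataclasses import dataclass


@dataclass
class CandidateModel:
    accuracy: float
    baseline_accuracy: float
    schema_matches: bool   # training and serving schemas agree
    max_group_gap: float   # hypothetical fairness metric, 0.0 means no gap


def run_validation_gates(candidate: CandidateModel,
                         min_uplift: float = 0.0,
                         max_allowed_gap: float = 0.10) -> list:
    """Return the names of failed gates; an empty list means safe to register."""
    failures = []
    if candidate.accuracy - candidate.baseline_accuracy < min_uplift:
        failures.append("baseline_performance")
    if not candidate.schema_matches:
        failures.append("schema_stability")
    if candidate.max_group_gap > max_allowed_gap:
        failures.append("bias_check")
    return failures


candidate = CandidateModel(accuracy=0.91, baseline_accuracy=0.88,
                           schema_matches=True, max_group_gap=0.14)
print(run_validation_gates(candidate))
```

Returning every failed gate, rather than stopping at the first, gives the owning team a complete picture in a single pipeline run.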

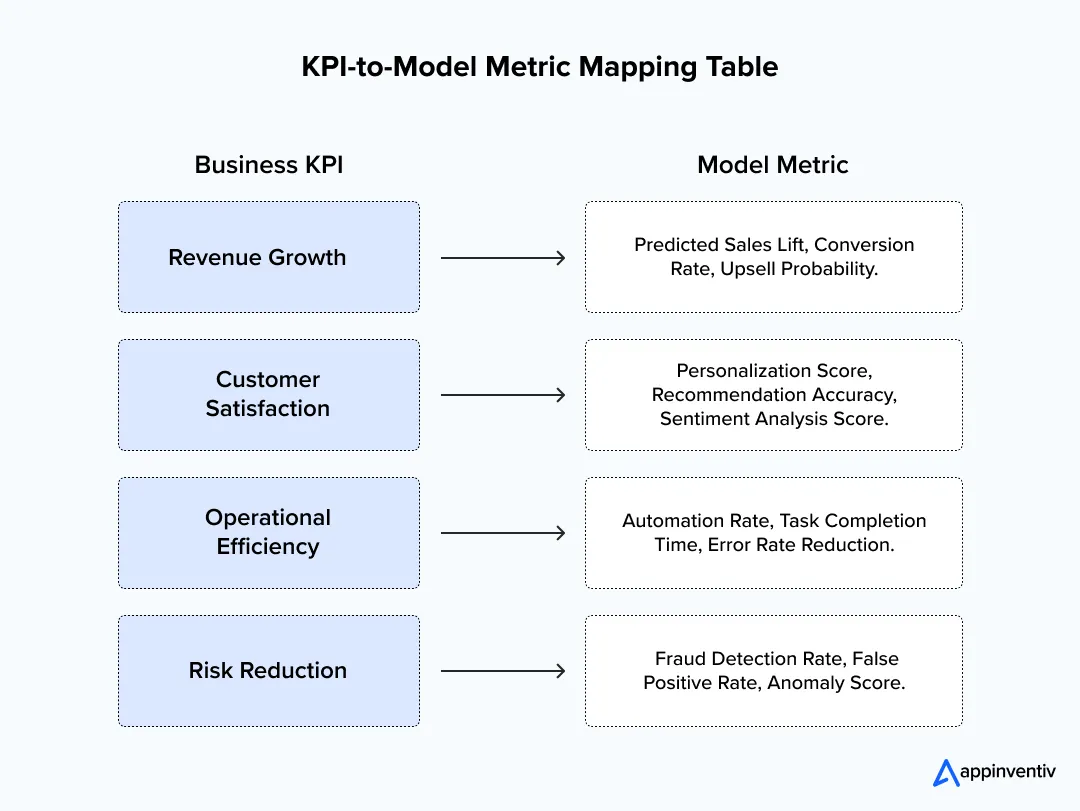

Example KPI And Model Metric Mapping

Enterprise leaders care about business outcomes. Engineering teams care about model performance. Connecting the two avoids misaligned reporting.

A practical mapping approach includes:

- Customer retention rate ↔ recommendation relevance score

- Fraud loss reduction ↔ anomaly detection precision and recall

- Processing cost per transaction ↔ automation accuracy rate

- Forecast accuracy ↔ mean absolute percentage error

This mapping becomes a shared language between business owners and technical teams. It keeps the AI strategy for enterprise apps anchored to measurable value rather than abstract performance metrics.
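Two of the model metrics named in the mapping, mean absolute percentage error and precision and recall, are simple enough to compute by hand. The plain-Python sketch below shows the standard definitions; the sample numbers are made up for illustration.

```python
def mape(actual, forecast) -> float:
    """Mean absolute percentage error, in percent. Actual values must be non-zero."""
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    errors = [abs(a - f) / abs(a) for a, f in zip(actual, forecast)]
    return sum(errors) / len(errors) * 100


def precision_recall(y_true, y_pred) -> tuple:
    """Precision and recall for binary labels, where 1 marks an anomaly."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Illustrative data only.
print(mape(actual=[100, 200], forecast=[110, 180]))
print(precision_recall(y_true=[1, 1, 0, 0, 1], y_pred=[1, 0, 1, 0, 1]))
```

For fraud use cases the two numbers pull in different directions: higher recall catches more fraud, while higher precision reduces false alarms for legitimate customers, so both belong on the dashboard.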

These reference patterns are not meant to lock your organization into a single design. They provide starting points that engineering teams can adapt based on existing infrastructure, regulatory requirements, and operating models.

What Is the Cost of Building an AI Strategy?

AI budgeting inside large organizations is rarely straightforward. One team estimates model costs. Another worries about data cleanup. Security adds new requirements halfway through. The result is often uncertainty. A clearer view of the cost of building an AI strategy helps leadership plan AI investment for enterprises with fewer surprises.

The ranges below are practical reference points. They are not quotes. They reflect typical enterprise engagements where data foundations and governance matter as much as model development.

Enterprise AI Solution Types And Investment Ranges

Enterprises usually start small, prove value, then deepen capability. Investment scales with complexity.

| Solution Type | Typical Timeline | Typical Cost Range |
|---|---|---|
| Basic AI Enablement (pre-built tools, minimal integration) | 3 to 6 weeks | $40K to $80K |
| Pre-Built Enterprise Solutions (SaaS AI, light customization) | 6 to 10 weeks | $80K to $150K |
| Mid-Level Enterprise AI (custom models, data pipelines, early MLOps) | 3 to 6 months | $150K to $250K |
| Advanced Enterprise AI Platforms (shared data layer, governance, multi-team use) | 6 to 12 months | $250K to $400K |

Program Phase Cost And Timeline

Most organizations fund AI in stages to reduce risk.

| Phase | Timeline | Typical Cost Range |
|---|---|---|
| Assessment And Roadmap | 4 to 6 weeks | $40K to $90K |
| Pilot (1–2 Use Cases) | 8 to 14 weeks | $90K to $180K |
| Platform Build And Scale | 4 to 12 months | $180K to $400K |

Key Cost Drivers

AI spend usually rises because of foundations, not models.

Common drivers:

- Data cleanup and system integration

- Security and compliance requirements

- Internal skill gaps

- Cloud usage and inference volume

- Ongoing monitoring and retraining

Enterprises control costs by fixing data access early, limiting pilot scope, and reusing shared infrastructure as they scale.

How Do Enterprises Measure Success in AI Strategy?

Once AI systems move into production, the next question is simple. Is the investment paying off? Enterprises that track only model accuracy miss the full picture. Strong programs measure business impact, model health, and operational stability together. This keeps AI strategy metrics tied to real outcomes and protects long-term AI ROI.

Business And Model Performance Signals

Start by connecting business goals to technical performance. This avoids situations where a model performs well in testing but fails to move core KPIs.

Typical dashboard metrics include:

- Revenue uplift or cost savings per use case

- Customer satisfaction or NPS movement

- Model accuracy, precision, or recall

- Drift detection and data stability scores

Most enterprises review these dashboards monthly for business impact and weekly for model health.
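The article does not say how drift scores are computed. One widely used option is the population stability index (PSI), which compares the share of records per bucket at training time against what production sees now. The sketch below assumes the bucket shares are already computed, and the sample distributions are invented.

```python
import math


def population_stability_index(expected, actual, eps: float = 1e-6) -> float:
    """PSI between two bucketed distributions given as lists of fractions."""
    if len(expected) != len(actual) or not expected:
        raise ValueError("distributions must be non-empty and the same length")
    total = 0.0
    for e, a in zip(expected, actual):
        # Clamp to a small epsilon so empty buckets do not cause log(0).
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


training_shares = [0.25, 0.25, 0.25, 0.25]
production_shares = [0.10, 0.20, 0.30, 0.40]
print(round(population_stability_index(training_shares, production_shares), 3))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but these cut-offs are conventions, not guarantees, and should be validated against each model's behavior.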

Operational And ROI Tracking

AI systems also behave like operational services. They need uptime tracking and cost monitoring to stay reliable.

Common operational metrics:

- Prediction latency and SLA adherence

- System uptime

- Inference and cloud usage costs

ROI attribution is usually handled through before-and-after comparisons. Teams measure baseline process performance, introduce AI, then track delta improvements over defined reporting cycles.

This measurement structure keeps AI ROI visible, defensible, and tied to leadership expectations.
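The before-and-after attribution described above reduces to a small calculation. The sketch below uses hypothetical figures and a deliberately simple ROI definition (net benefit divided by AI run cost); it does not control for other changes during the same period, which is a known limitation of before-and-after comparisons.

```python
def ai_roi_percent(baseline_cost: float, current_cost: float,
                   ai_run_cost: float) -> float:
    """ROI over one reporting cycle, from a before-and-after cost comparison."""
    if ai_run_cost <= 0:
        raise ValueError("ai_run_cost must be positive")
    gross_savings = baseline_cost - current_cost  # delta versus the baseline
    net_benefit = gross_savings - ai_run_cost     # subtract inference, cloud, support
    return net_benefit / ai_run_cost * 100


# Hypothetical annual figures for one automated workflow.
print(ai_roi_percent(baseline_cost=500_000, current_cost=380_000, ai_run_cost=60_000))
```

Where volumes change between periods, comparing cost per transaction instead of total cost gives a fairer baseline.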

What Are Real Enterprise Examples of Successful AI Strategy?

Below are three concise, verifiable vignettes that show how large organizations turned AI strategy into measurable outcomes. Each vignette is framed as Challenge → Solution → Outcome.

J.P. Morgan: Contract Automation (COIN)

Challenge: Loan-document review consumed massive lawyer hours and introduced human error into commercial agreements.

Solution: An internal Contract Intelligence system (COIN) parsed agreements and extracted key clauses automatically.

Outcome: The system reportedly replaced roughly 360,000 annual hours of manual review, cutting errors and speeding approvals.

UPS: Route Optimization (ORION)

Challenge: Last-mile delivery costs and miles driven were large and growing line items for operations.

Solution: ORION applied optimization algorithms at scale to generate daily route plans that cut unnecessary miles.

Outcome: UPS reported reductions equivalent to hundreds of millions of dollars in annual operating cost once ORION scaled across routes.

Walmart: Demand Forecasting And Inventory Optimization

Challenge: Seasonal spikes and local demand variability caused stockouts and excess inventory across stores.

Solution: Walmart centralized forecasting workflows and adopted ML-driven demand models to improve placement and replenishment.

Outcome: Internal engineering teams report measurable improvements in forecast accuracy and faster time-to-value for promotions and holiday planning.

These examples share a pattern. Start with a clearly scoped problem. Build a pilot that links model outputs to a business KPI. Measure uplift, then invest in platform and governance only once value is proven.

Appinventiv’s role is to shorten that loop while keeping enterprise controls and auditability front and center. In one of our recent engagements, we helped a leading automotive player transform its digital operations through AI consulting.

The initiative streamlined vehicle data flows, improved predictive maintenance accuracy, and accelerated feature rollout across connected services.

The result was measurable operational uplift and faster go-to-market. Learn how strategic AI planning can unlock similar outcomes for your enterprise.

How Does Appinventiv Help Enterprises Build AI Strategy?

Enterprise AI succeeds when strategy, engineering, and governance move in sync. Many organizations know where they want to go with AI, but struggle to convert ambition into execution. This is where Appinventiv steps in as an AI consulting company focused on enterprise-scale transformation. Our AI strategy consulting practice helps enterprises design clear AI strategy plans, modernize data foundations, and scale AI safely across applications and business units.

We support enterprises across the full lifecycle:

- AI readiness assessment and roadmap creation

- Data engineering and pipeline modernization

- MLOps setup for deployment, monitoring, and model lifecycle control

- Governance and compliance implementation

- Custom model development and enterprise integration

- Managed AI services for ongoing performance and cost control

Our consulting model is built for complex enterprise environments:

- 2000+ strategy and transformation projects delivered

- 24/7 advisory support for enterprise programs

- 8+ global consulting partnerships

- 1600+ tech evangelists across cloud, data, and AI

- 35+ industries mastered

- 15+ global recognitions and awards

Measured business impact from our consulting engagements:

- 30% average revenue growth

- 2x faster go-to-market

- 98% ROI reported by enterprise clients

We have delivered large-scale digital and data platforms for global brands such as Americana, IKEA, Adidas, and Domino’s. This experience enables us to design AI strategy plans that align with enterprise security, compliance, and operational realities.

If you are planning your next AI initiative, request an enterprise AI readiness assessment and start with a roadmap built for scale. Let’s connect.

FAQs

Q. How Do Enterprises Build An AI Strategy That Actually Scales?

A. Most enterprises start by getting clear on where AI should move the business, not where it sounds exciting. Teams review data access, system readiness, and risk constraints first. Then they choose a few high-value use cases and run controlled pilots with defined success criteria. Once pilots prove impact, shared data platforms, MLOps pipelines, and governance models are introduced to support scaling across applications.

Q. How Does Appinventiv Help Enterprises Design AI Strategies?

A. Appinventiv works with enterprise teams to connect business goals with technical execution. We assess readiness, map data and system constraints, define an AI strategy plan, and design the platform architecture needed for scale. From there, we help implement MLOps, governance controls, and integration workflows so AI programs move from early pilots into secure, production-ready enterprise environments.

Q. How Much Does It Cost To Build An AI Strategy For An Enterprise App?

A. There is no single price tag because cost depends on data maturity, legacy complexity, and compliance requirements. Most enterprises invest in phased engagements that begin with assessment and roadmap creation, followed by pilot execution and platform build-out. This staged approach keeps spending tied to validated progress, avoids large upfront risk, and provides leadership with predictable investment planning.

Q. What Are The Risks Of Not Having An AI Strategy?

A. Enterprises without a defined AI strategy often end up with disconnected pilots, duplicated data work, unclear model ownership, and weak ROI tracking. Over time, this creates data debt, security gaps, and compliance exposure. Innovation slows because teams lack shared platforms and governance. A structured strategy prevents fragmented investment and keeps AI development aligned with business priorities.

Q. What Should An Enterprise AI Roadmap Include?

A. A practical enterprise AI roadmap lays out readiness findings, prioritized use cases, data and platform architecture plans, governance structures, pilot timelines, and scaling phases. It also defines ownership models, KPI targets, and investment estimates. This roadmap becomes the shared reference that keeps leadership, engineering, and risk teams aligned as AI capability expands across the organization.
