- The Shortcomings of Traditional Data Engineering

- Traditional Data Engineering vs Agentic AI Data Engineering

- Why Are Enterprises Adopting Agentic AI for Data Engineering?

- 1. AI-Driven Automation

- 2. Continuous Optimization with Real-Time Insights

- 3. Scalable and Adaptive Systems

- 4. Predictive Intelligence

- 5. Data Contextualization for Smarter Decisions

- 6. Cost Reduction

- 7. Regulatory Compliance and Security

- How Are Enterprises Applying Agentic AI in Real-World Data Engineering?

- 1. Financial Services: Revolutionizing Fraud Detection and Compliance

- 2. Healthcare: From Reactive to Proactive Care

- 3. Retail: Personalizing Customer Experiences at Scale

- 4. Manufacturing: Improving Operations through Predictive Maintenance

- How Can Enterprises Start with Agentic AI Without Disrupting Existing Systems?

- Step 1: Understand Your Current Data Systems

- Step 2: Set Clear Objectives

- Step 3: Begin with an MVP

- Step 4: Scale Gradually

- Step 5: Constant Optimisation

- Step 6: Foster a Culture of Experimentation and Growth

- What Challenges Do Enterprises Face When Adopting Agentic AI in Data Engineering?

- What Is the Future of Agentic AI in Data Engineering?

- How Appinventiv Can Assist: Top AI Solutions for Businesses

- Frequently Asked Questions

Key takeaways:

- Agentic AI streamlines intricate data engineering processes, including data ingestion, transformation, and validation, allowing teams to focus on high-stakes decision-making.

- By automating manual tasks, Agentic AI significantly reduces operational costs, allowing companies to optimize resource allocation.

- Agentic AI scales with ease as the volume of data increases, without the need for additional infrastructure or expensive resources.

- With AI-driven data engineering automation, companies can cut hours of hands-on work, enabling data processes to operate in real-time without interruption, thereby enhancing decision-making speed.

- Agentic AI is constantly refining data workflows to enable companies to access the latest, precise information for faster, more insightful decisions.

Businesses today are struggling with a growing volume and complexity of data. As companies expand, managing this data and deriving value from it becomes urgent. Conventional data engineering processes often fall short, creating bottlenecks that slow decision-making and limit business agility.

Most enterprise data failures don’t happen because teams lack tools. They happen because pipelines rely on constant manual fixes, static rules, and delayed oversight. Agentic AI changes this model by introducing autonomous agents that continuously monitor, correct, and optimize data workflows in real time before failures cascade into reporting delays, compliance gaps, or poor decisions.

How can businesses use data to drive growth and innovation without being slowed down by legacy systems or compromising on scalability? The answer lies in Agentic AI data engineering.

Agentic AI data engineering presents a path to the future by optimizing and automating data processes. It eliminates human constraints that get in the way of speed and accuracy. It enables intelligent, real-time decision-making with effortless scalability.

This blog will discuss how enterprise AI is revolutionizing data engineering for businesses, unlocking new levels of accuracy, efficiency, and business value.

By adopting AI-powered automation, business organizations can streamline data management, improve data quality, and translate insights into effective strategies at a speed never seen before.

Appinventiv has delivered 100+ autonomous AI agents across complex enterprise data environments, helping teams modernize data workflows without disrupting existing systems.

Let’s explore how Agentic AI data engineering is transforming the future of enterprise data engineering.


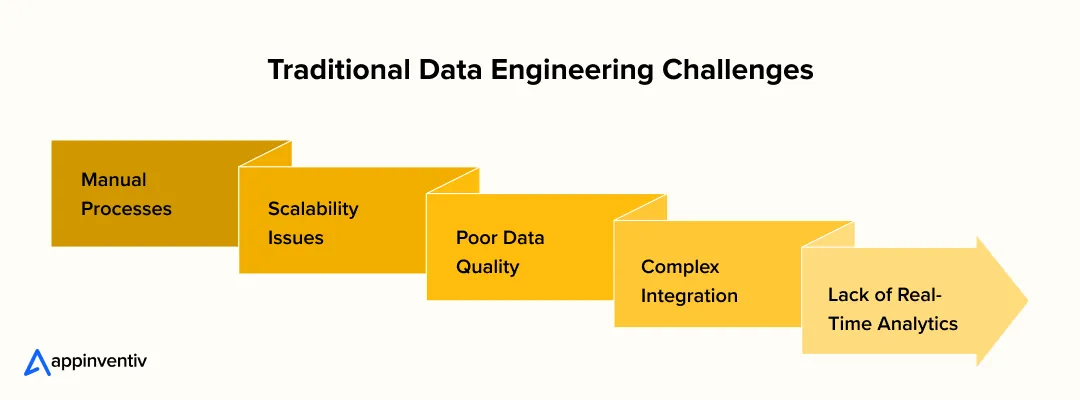

The Shortcomings of Traditional Data Engineering

Traditional data engineering struggles to keep pace with the rising volume and complexity of enterprise data. These limits are what drive the need for Agentic AI data engineering, which streamlines data processes and removes inefficiencies.

Gartner states that inadequate data quality costs organizations an average of $12.9 million per year—a direct reflection of the inefficiencies of legacy systems.

Here are some of the shortcomings of traditional data engineering:

1. Manual Processes

Even with substantial investment in technology, most data engineering teams remain overwhelmed by repetitive work such as coding, data transformation, and pipeline maintenance. These tasks are necessary, but they consume time and leave little room for innovation or strategic thinking.

2. Scalability Challenges

As data volume and complexity increase, legacy data workflows are increasingly unable to keep up with the required scale. Enterprises struggle, in many cases, to scale their data systems due to expensive infrastructure spending and excessive reliance on human labor to operate data pipelines.

3. Data Quality Concerns

Manual data handling tends to generate errors and inconsistencies that impair the overall data quality. This failure in quality assurance hinders sound decision-making, as poor-quality data leads to misleading insights and faulty strategies.

4. Lack of Real-Time Analytics

In classical data engineering systems, data processing tends to occur in batches, so insights lag and cannot be acted on in real time. With businesses needing to make decisions sooner, depending on legacy systems to process and analyze data introduces enormous latency.

5. Complexity of Integration

Legacy data engineering systems typically consist of many stand-alone tools and technologies that don’t integrate well. Their integration takes a lot of time and money, causing delays in data processing and analytics.

Traditional Data Engineering vs Agentic AI Data Engineering

To understand why enterprises are moving toward Agentic AI data engineering, the comparison below highlights how traditional approaches differ from AI-driven data operations.

| Dimension | Traditional Data Engineering | Agentic AI Data Engineering |
|---|---|---|
| Pipeline Management | Manual configuration and maintenance | Autonomous pipeline orchestration by AI agents |
| Data Ingestion | Batch-based, delayed processing | Continuous real-time ingestion |
| Data Transformation | Rule-based and manually coded | AI-driven adaptive transformation |
| Data Quality Checks | Periodic manual validation | Continuous automated validation and anomaly detection |
| Scalability | Requires infrastructure expansion and manual tuning | Dynamically scales workloads through multi-agent orchestration |
| Failure Handling | Reactive troubleshooting after breakdowns | Predictive issue detection and self-healing pipelines |
| Real-Time Analytics | Limited, high-latency reporting | Always-on real-time data processing |
| Integration | Complex toolchain integration | AI-managed interoperability across systems |
| Governance & Compliance | Manual monitoring and audits | Embedded policy enforcement and automated compliance checks |
| Operational Cost | High ongoing manual effort | Reduced effort through automation |
| Decision Readiness | Delayed insights | Context-aware, decision-ready data streams |

The shift isn’t about better tools; it’s about moving from reactive data engineering to autonomous, self-correcting systems that keep decisions moving without constant human intervention.

Unlike legacy systems, Agentic AI data engineering automates the most time-consuming tasks, ensuring faster processing and more accurate data workflows.

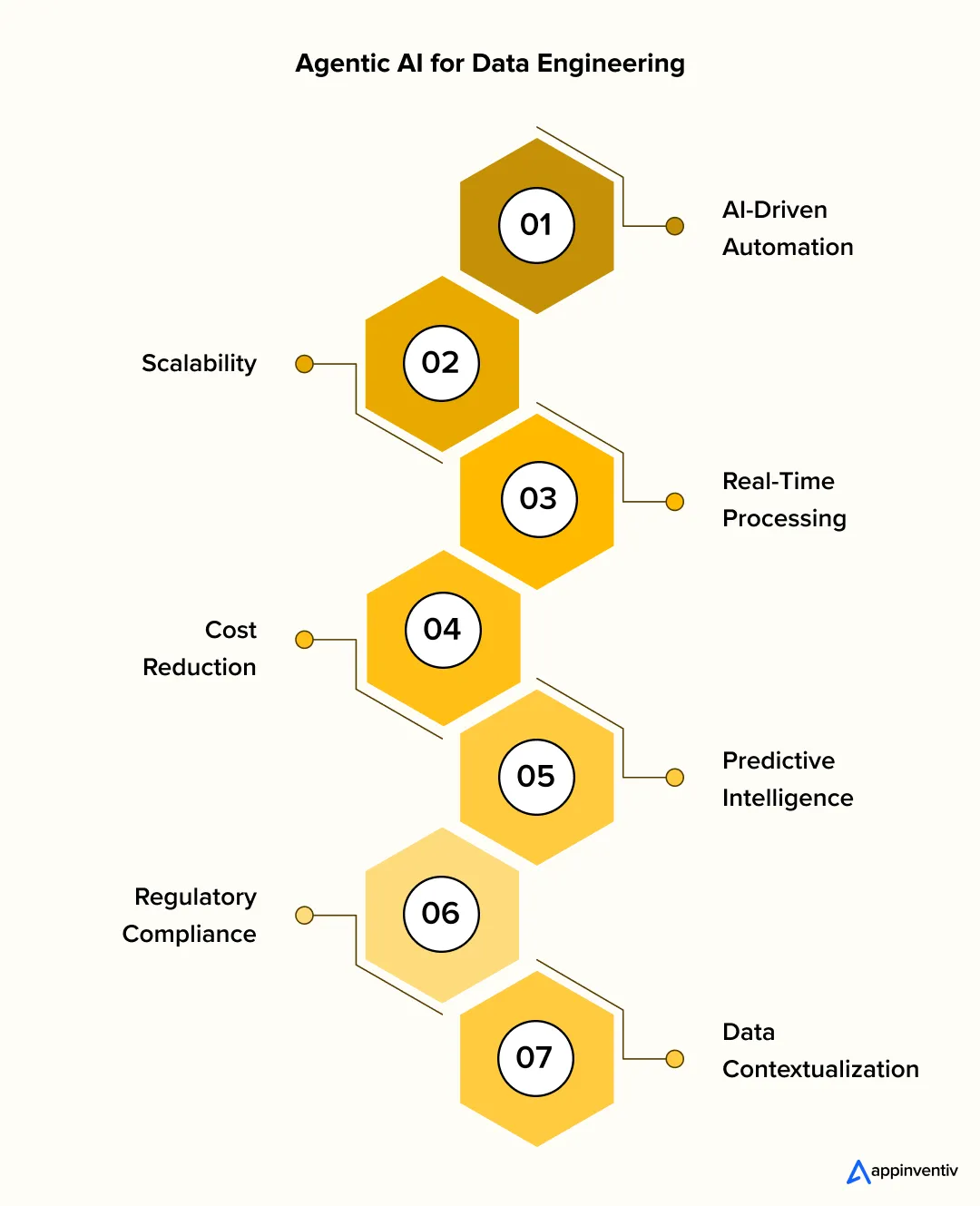

Why Are Enterprises Adopting Agentic AI for Data Engineering?

Most companies eventually feel the strain of managing data. Pipelines grow, sources change, and systems that once worked well start slowing teams down. If you have ever rushed to fix a broken workflow before a report is due, this will sound familiar.

Agentic AI in data engineering helps reduce that pressure. It coordinates pipelines, cuts manual fixes, and keeps processing fast as scale increases. McKinsey estimates this shift could unlock $450 billion to $650 billion in annual revenue by 2030, creating a 5% to 10% lift in high-value industries. That is why enterprise AI and Agentic AI applications in data engineering are gaining real traction.

1. AI-Driven Automation

In traditional stacks, orchestration DAGs break silently when schemas drift or upstream APIs change. Engineers spend valuable time fixing Airflow or dbt pipeline failures instead of advancing data strategy.

Modern agent-based orchestration introduces self-healing DAGs. Intelligent agents oversee ingestion, transformation, and validation stages, automatically rewriting failed transformations or rerouting data flows when anomalies occur.

Frameworks such as LangGraph-style multi-agent orchestration allow agents to reason over pipeline state, execution logs, and metadata before taking corrective action.

What changes:

- Pipelines recover automatically from schema or source changes

- Manual intervention drops significantly

- ETL and data processing run continuously

The result is stable, near-real-time data availability without constant firefighting.
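To make the self-healing idea concrete, here is a minimal sketch of one repair step: detecting schema drift in an incoming record and mapping renamed columns back to the expected schema. The schema, the alias table, and the function names (`detect_drift`, `heal`) are assumptions made for illustration; a production agent would reason over pipeline metadata and logs rather than a hard-coded table.

```python
from dataclasses import dataclass, field

# Hypothetical expected schema for an orders feed: column name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}

# Hypothetical alias table: renames the agent is allowed to apply on its own.
KNOWN_ALIASES = {"orderId": "order_id", "amt": "amount", "ccy": "currency"}


@dataclass
class DriftReport:
    renamed: dict = field(default_factory=dict)     # incoming name -> expected name
    missing: list = field(default_factory=list)     # expected columns with no match
    unexpected: list = field(default_factory=list)  # incoming columns outside the schema


def detect_drift(record):
    """Compare an incoming record against the expected schema."""
    report = DriftReport()
    for name in record:
        if name in EXPECTED_SCHEMA:
            continue
        if name in KNOWN_ALIASES:
            report.renamed[name] = KNOWN_ALIASES[name]
        else:
            report.unexpected.append(name)
    resolved = set(record) | set(report.renamed.values())
    report.missing = [col for col in EXPECTED_SCHEMA if col not in resolved]
    return report


def heal(record):
    """Rename known aliases, drop unexpected columns, and cast to expected types."""
    report = detect_drift(record)
    if report.missing:
        # Not repairable automatically: escalate instead of guessing values.
        raise ValueError(f"cannot repair record, missing columns: {report.missing}")
    healed = {}
    for name, value in record.items():
        target = report.renamed.get(name, name)
        if target in EXPECTED_SCHEMA:
            healed[target] = EXPECTED_SCHEMA[target](value)
    return healed
```

With this sketch, `heal({"orderId": "7", "amt": "19.5", "currency": "EUR", "debug": 1})` returns `{"order_id": 7, "amount": 19.5, "currency": "EUR"}`, while a record with no usable amount column raises an error so a human or a higher-level agent can step in.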

2. Continuous Optimization with Real-Time Insights

Legacy observability tools alert teams only after a pipeline stalls or misses an SLA. Root cause analysis remains manual and time-consuming.

Metadata-aware agents now monitor throughput, latency, and transformation efficiency across execution graphs. By analyzing pipeline telemetry in real time, agents rebalance workloads, tune query plans, and optimize data flows before performance degrades. This enables true event-driven ingestion rather than batch-dependent processing.

What improves:

- Real-time pipeline optimization

- Fewer SLA breaches

- Faster access to analytics-ready data
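As a rough illustration of acting on telemetry before an SLA is missed, the sketch below keeps a rolling window of pipeline latencies and returns a recommended action. The class name `LatencyMonitor`, the 80% warning ratio, and the action labels are all assumptions chosen for the example, not part of any specific observability product.

```python
from collections import deque
from statistics import mean


class LatencyMonitor:
    """Rolling-window latency check that warns before the SLA is breached."""

    def __init__(self, sla_seconds, window=5, warn_ratio=0.8):
        self.sla_seconds = sla_seconds
        self.warn_ratio = warn_ratio          # assumed early-warning threshold
        self.samples = deque(maxlen=window)   # oldest samples fall out automatically

    def observe(self, latency_seconds):
        """Record one run's latency and return 'ok', 'rebalance', or 'breach'."""
        self.samples.append(latency_seconds)
        average = mean(self.samples)
        if average >= self.sla_seconds:
            return "breach"
        if average >= self.warn_ratio * self.sla_seconds:
            return "rebalance"  # act early, while there is still headroom
        return "ok"
```

An agent could call `observe` after every pipeline run and trigger a workload rebalance on the first `"rebalance"` result, rather than waiting for an alert after the SLA has already slipped.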

3. Scalable and Adaptive Systems

Scaling traditional data stacks usually requires reconfiguring compute clusters and rewriting orchestration logic.

With multi-agent orchestration, specialized agents manage ingestion, transformation, governance, and delivery as cooperating services. Workloads distribute dynamically based on data volume, velocity, and business priority. New data sources integrate through plug-in connectors rather than pipeline redesign.

What this enables:

- Elastic scaling without infrastructure re-architecture

- Faster onboarding of enterprise data sources

- Reduced operational planning overhead

Data operations grow naturally with business demand.

4. Predictive Intelligence

Most teams detect pipeline risks only after downstream dashboards fail. Predictive monitoring agents analyze execution history, schema drift, query behavior, and resource utilization to forecast breakdowns before they occur. Pre-emptive rerouting, compute reallocation, or transformation rewrites keep pipelines reliable and analytics uninterrupted.

What improves:

- Early anomaly detection

- Higher pipeline uptime

- Fewer last-minute interventions
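One simple way to picture this kind of forecasting is a linear trend over recent resource utilization, extrapolated to the point where it crosses a limit. The sketch below assumes one utilization reading per pipeline run and a hypothetical helper name, `runs_until_threshold`; real predictive agents would likely combine several signals and a more robust model.

```python
from typing import Optional, Sequence


def fit_line(values: Sequence[float]):
    """Ordinary least-squares fit of values against their index (0, 1, 2, ...)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def runs_until_threshold(usage: Sequence[float], threshold: float) -> Optional[float]:
    """Estimate how many more runs remain before usage reaches the threshold.

    Returns None when the trend is flat or falling, so no breach is forecast.
    """
    if len(usage) < 2:
        raise ValueError("need at least two readings to fit a trend")
    slope, intercept = fit_line(usage)
    if slope <= 0:
        return None
    crossing_index = (threshold - intercept) / slope
    return max(0.0, crossing_index - (len(usage) - 1))
```

For readings of `[50, 55, 60, 65, 70]` percent and a 90 percent limit, the function estimates 4 more runs before the limit is reached, which gives an agent time to reallocate compute in advance.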

5. Data Contextualization for Smarter Decisions

Clean data does not always translate into business-ready intelligence. Context is frequently lost between ingestion and reporting layers.

Semantic transformation agents map raw datasets to enterprise data models, enrich metadata, and link related data products dynamically. The outcome is connected, meaning-rich datasets that feed dashboards, AI models, and decision systems directly.

What changes:

- Context-aware data products

- Reduced interpretation effort

- Faster decision cycles

6. Cost Reduction

Data platform costs often rise due to inefficient queries, idle clusters, and over-provisioned pipelines.

Resource-governance agents continuously monitor compute consumption, storage growth, and workload patterns. They automatically optimize warehouse sizing, schedule transformations during low-cost windows, and eliminate waste across the data stack.

What this leads to:

- Lower infrastructure spend

- Predictable performance at scale

- Reduced FinOps overhead
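Scheduling transformations during low-cost windows can be sketched as a small search problem: given an hourly cost profile, find the cheapest contiguous slot for a job of known length. The cost figures and the function name `cheapest_window` are hypothetical; actual pricing would come from your cloud or warehouse billing data.

```python
def cheapest_window(hourly_cost, duration_hours):
    """Return (start_hour, total_cost) of the cheapest contiguous window."""
    if duration_hours < 1 or duration_hours > len(hourly_cost):
        raise ValueError("duration must fit inside the cost profile")
    best_start, best_cost = 0, float("inf")
    for start in range(len(hourly_cost) - duration_hours + 1):
        cost = sum(hourly_cost[start:start + duration_hours])
        if cost < best_cost:  # strict comparison keeps the earliest cheapest slot
            best_start, best_cost = start, cost
    return best_start, best_cost
```

With an assumed profile of `[5, 5, 3, 2, 2, 4]` cost units per hour, a two-hour job is cheapest starting at hour 3, for a total of 4 units.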

7. Regulatory Compliance and Security

Manual compliance does not scale in distributed data environments.

Policy-as-code agents enforce access control, privacy rules, and regulatory requirements directly within pipeline execution. Automated lineage tracking and audit logs ensure governance remains continuous and inspection-ready.

What this supports:

- Continuous compliance enforcement

- Audit-ready data ecosystems

- Embedded security across data operations
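A minimal sketch of policy-as-code inside a pipeline step might look like the following: field-level access rules live in data, enforcement happens as each record passes through, and every decision is written to an audit log. The policy table, role names, and the `enforce` function are illustrative assumptions, not a reference to any particular governance tool.

```python
from datetime import datetime, timezone

# Hypothetical policy table: field name -> roles allowed to see the raw value.
# Fields not listed here are treated as unrestricted.
FIELD_POLICIES = {
    "card_number": {"fraud_analyst"},
    "email": {"fraud_analyst", "support"},
}


def enforce(record, role, audit_log):
    """Return a copy of the record with restricted fields masked for this role."""
    visible = {}
    for name, value in record.items():
        allowed_roles = FIELD_POLICIES.get(name)
        if allowed_roles is None or role in allowed_roles:
            visible[name] = value
            decision = "allow"
        else:
            visible[name] = "***"
            decision = "mask"
        # Every decision is logged so audits can replay who saw what.
        audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "field": name,
            "decision": decision,
        })
    return visible
```

Because the rules are plain data, they can be versioned and reviewed like any other code, and the audit log gives a continuous trail instead of a periodic manual check.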

With these Agentic AI applications in data engineering in mind, let’s see how leading enterprises are putting Agentic AI to work in real-world scenarios.

How Are Enterprises Applying Agentic AI in Real-World Data Engineering?

Enterprise data engineering with AI is no longer a future-facing concept; it’s already revolutionizing how businesses manage data. In many sectors, it’s emerging as an enabler for more optimized, accelerated, and intelligent data processes.

Let’s see how Agentic AI data engineering is addressing real-world problems across industries:

1. Financial Services: Revolutionizing Fraud Detection and Compliance

Compliance and data security aren’t optional in financial services—they’re a matter of survival. Traditional fraud detection methods lag behind threats that morph daily. Agentic AI in finance processes transaction events through real-time streaming pipelines built on platforms like Kafka or Pulsar.

The system also autonomously handles compliance reporting and risk assessments, with governance agents maintaining audit trails and enforcing AML and PCI-DSS data policies. This reduces manual updates, lowers risk, and significantly speeds up response times.

MyExec, an AI-powered business advisor developed by Appinventiv, is an example of Agentic AI for data engineering in action. It automates data analysis and delivers real-time insights that support better decision-making and operational efficiency for financial companies.

This pattern, agent-driven data ingestion paired with real-time insight delivery, is increasingly common among finance teams modernizing legacy reporting systems without disrupting core operations.


2. Healthcare: From Reactive to Proactive Care

Healthcare is becoming data-driven fast, but turning mountains of clinical data into actionable insights remains difficult.

Agentic AI in healthcare closes this gap by continuously monitoring patient data and delivering timely insights. Interoperability agents ingest clinical records through FHIR (Fast Healthcare Interoperability Resources) APIs, normalize schemas, and ensure FHIR-compliant data exchange across disparate EMR and EHR systems.
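As a small illustration of schema normalization, the sketch below flattens a FHIR `Patient` resource (already fetched and parsed from JSON) into a flat row suitable for an analytics table. The output column names and the `flatten_patient` function are assumptions for this example; only the input field names (`resourceType`, `id`, `name`, `birthDate`, `gender`) come from the FHIR Patient resource.

```python
def flatten_patient(resource):
    """Flatten a parsed FHIR Patient resource into a single analytics row."""
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    # FHIR allows several names per patient; this sketch keeps only the first.
    names = resource.get("name") or [{}]
    primary = names[0]
    return {
        "patient_id": resource.get("id"),
        "family_name": primary.get("family"),
        "given_names": " ".join(primary.get("given", [])),
        "birth_date": resource.get("birthDate"),
        "gender": resource.get("gender"),
    }
```

An interoperability agent would apply a mapping like this per resource type, so records from different EMR and EHR systems land in one consistent shape.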

Solutions like Babylon Health’s virtual health assistants guide patients through symptom checks and appointment reminders. Meanwhile, semantic enrichment agents map clinical data to standardized vocabularies such as SNOMED and ICD codes, improving care coordination and analytics accuracy.

Agentic AI also reduces administrative workload by streamlining scheduling and medical record management, freeing more time for patient care.

3. Retail: Personalizing Customer Experiences at Scale

Retailers own tons of customer data, yet most can’t convert this information into usable insights fast enough. Agentic AI in retail fixes this by powering real-time data processing and predictive analytics to dynamically tweak product recommendations, promotions, and inventory levels on the fly.

Streaming ingestion agents capture customer events from e-commerce platforms and POS systems, feeding real-time feature stores that power personalization models.

This allows retailers to scale individualized shopping experiences efficiently. Sephora uses AI in its app and in-store tablets to deliver personalized beauty advice based on customer specifics.

Inventory intelligence agents also connect demand signals to supply chain systems, optimizing replenishment and reducing stockouts.


4. Manufacturing: Improving Operations through Predictive Maintenance

Manufacturing inefficiencies cost real money. Traditional maintenance schedules either result in excessive servicing or unexpected breakdowns.

Agentic AI tackles this by leveraging real-time machine telemetry and applying machine learning in manufacturing to predict when maintenance is needed. In modern factories, ingestion agents parse Industrial IoT (IIoT) data streams transmitted via MQTT or OPC-UA protocols, filtering noise and enriching sensor data with equipment metadata.

Predictive maintenance agents detect vibration anomalies, temperature drift, or throughput degradation before failures occur. Orchestration agents then trigger maintenance workflows and update production schedules automatically.
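A vibration anomaly check of the kind described above can be sketched with a simple z-score against a recent baseline. The function name `is_anomalous`, the threshold of 3 standard deviations, and the sample readings are assumptions for illustration; production systems typically use richer models and per-machine tuning.

```python
from statistics import mean, stdev


def is_anomalous(baseline, reading, z_threshold=3.0):
    """Flag a sensor reading that sits far outside the recent baseline."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline readings")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is worth a look.
        return reading != mu
    return abs(reading - mu) / sigma > z_threshold
```

Given a baseline of `[1.0, 1.1, 0.9, 1.0, 1.05, 0.95]`, a reading of `1.02` passes while `1.6` is flagged, at which point an orchestration agent could open a maintenance workflow.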

BMW, for example, runs AI-driven robots that detect bottlenecks and optimize workflows without human intervention. AI-powered computer vision also detects defects in automobile parts, maintaining quality standards and reducing returns.


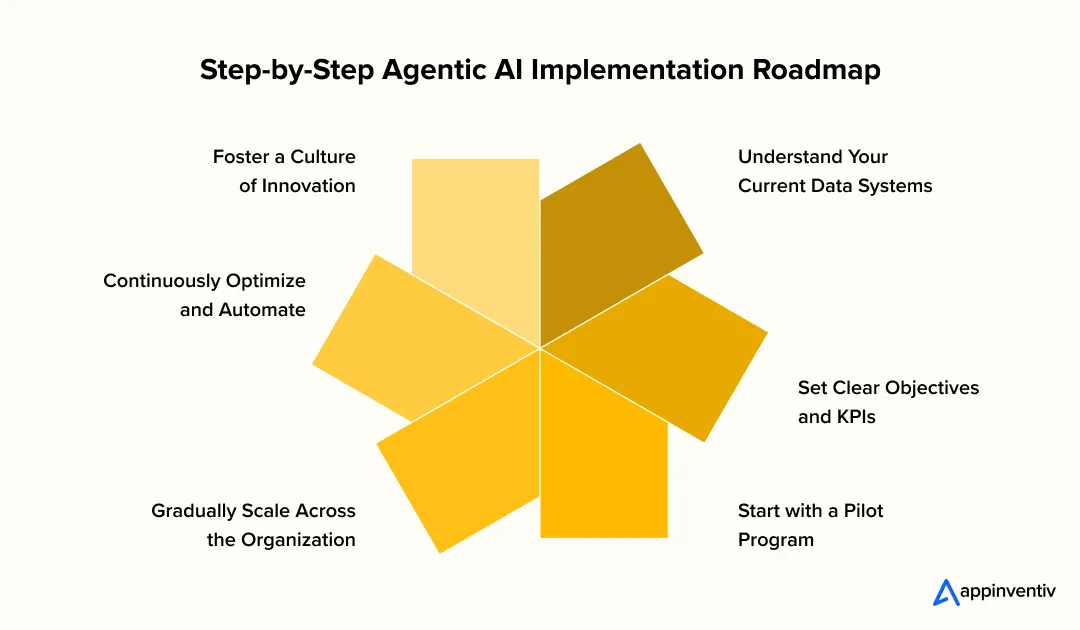

How Can Enterprises Start with Agentic AI Without Disrupting Existing Systems?

Implementing Agentic AI in your business is more than flipping a switch. It means transforming how data moves through your company, refining what you already do well, and being willing to adjust as you go.

You have to understand where you’re starting, determine where you’re going, then experiment a bit, grow in a considered way, and continue to make adjustments.

Here’s a real-world approach to getting going:

Step 1: Understand Your Current Data Systems

Before you start throwing money at new technology, spend real time mapping how data actually moves through your company and where it gets stuck. Hunt down those areas where employees still copy-paste between spreadsheets and where workflows grind to a halt. This tells you precisely where Agentic AI will deliver returns worth the investment.

Step 2: Set Clear Objectives

Define what winning looks like for AI-driven data engineering in your situation. Cutting processing time in half? Helping managers make smarter calls? Managing triple the data volume without adding headcount? Pick specific goals and metrics, or you’ll end up drifting without knowing if anything worked.

Step 3: Begin with an MVP

Launch small with a Minimum Viable Product (MVP): just one department, just one process. Try Agentic AI in real business conditions, gather brutally honest feedback from the people using it, and fix what breaks before rolling it out company-wide. Pilots catch the failures that polished demos hide.

Step 4: Scale Gradually

Once the pilot proves itself, expand Agentic AI piece by piece across operations. Apply the lessons from the pilot to each new rollout, building on what works rather than repeating mistakes or racing ahead too fast.

Step 5: Constant Optimisation

Improving Agentic AI does not stop at launch. Monitor performance continuously, track your metrics closely, and adjust settings when results slip so the system stays aligned with your needs as business conditions change.

Step 6: Foster a Culture of Experimentation and Growth

Let your teams play around and find new ways to use AI. Give them proper training and tools so they can view AI as something that augments their capabilities, not as another top-down initiative that nobody asked for.

What Challenges Do Enterprises Face When Adopting Agentic AI in Data Engineering?

Adopting Agentic AI usually does not fail because of the technology. Most initiatives stall because everyday realities such as data quality, system dependencies, and team readiness are not planned for upfront. Knowing where friction shows up makes the transition far easier to manage.

For most enterprises, adoption begins not by replacing platforms but by identifying where manual data failures repeatedly slow decisions, inflate costs, or erode confidence in insights, a process frequently guided by a digital transformation partner.

Below are the most common adoption challenges enterprises face, and how teams typically work through them.

1. Data Quality Issues

AI systems depend entirely on the data already in place. If that data is fragmented or unreliable, results quickly lose credibility across the business.

- Review what data is accurate and what is not

- Fix gaps, duplicates, and inconsistent formats

- Put regular quality checks in place
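The three checks above (duplicates, gaps, inconsistent formats) can be sketched as one small report function that runs on a schedule. The function name `quality_report`, its parameters, and the sample rows are hypothetical, and the default ISO date format is an assumption about what "consistent" means for a given dataset.

```python
from datetime import datetime


def quality_report(rows, key, required, date_field, date_format="%Y-%m-%d"):
    """Return the row indexes that fail duplicate, gap, and date-format checks."""
    seen = set()
    duplicates, gaps, bad_dates = [], [], []
    for index, row in enumerate(rows):
        # Duplicate: the same key value has already appeared in an earlier row.
        if row.get(key) in seen:
            duplicates.append(index)
        seen.add(row.get(key))
        # Gap: a required column is absent or empty.
        if any(row.get(column) in (None, "") for column in required):
            gaps.append(index)
        # Inconsistent format: the date does not parse with the agreed format.
        try:
            datetime.strptime(str(row.get(date_field)), date_format)
        except ValueError:
            bad_dates.append(index)
    return {"duplicates": duplicates, "gaps": gaps, "bad_dates": bad_dates}
```

Running a report like this before any agent touches the data gives teams a concrete baseline of what needs fixing and a repeatable check to keep in place afterwards.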

2. Integration with Current Systems

Most enterprises run on a mix of old and new systems. Adding Agentic AI into that environment needs care to avoid disrupting what already works.

- Start with one system or workflow

- Roll out changes in controlled phases

- Involve IT teams early to flag risks

3. Resistance to Change

New technology often triggers concern, especially when teams are unsure how their roles will change. That hesitation can quietly slow adoption.

- Communicate early and often

- Show how AI reduces repetitive work

- Offer training tied to real tasks

4. Cost Issues

Initial AI investment can feel heavy without a clear context. Uncertainty around long-term costs often creates hesitation at the decision stage.

- Look beyond upfront spend to long-term savings

- Test value through pilots before scaling

- Measure impact using internal data


5. Lack of Expertise

Agentic AI is still new for many teams. Without experience, implementation can become slower and more expensive than expected.

- Bring in experts for early setup

- Avoid learning through high-risk mistakes

- Build internal capability over time

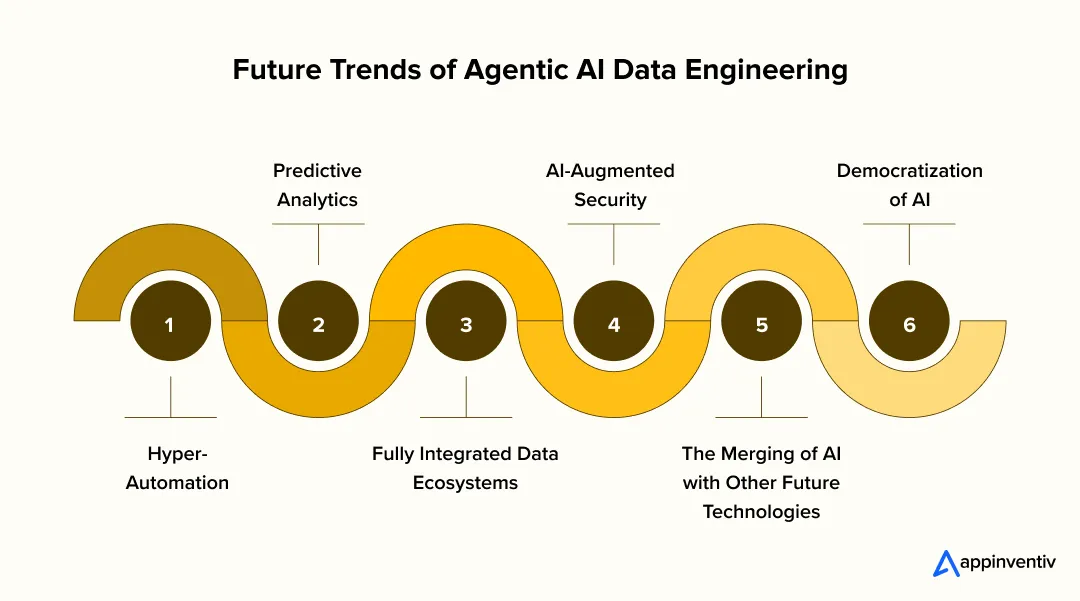

What Is the Future of Agentic AI in Data Engineering?

Data engineering is changing fast under our feet, and Agentic AI is at the very heart of this revolution. The future will probably have systems that act more autonomously, transition more seamlessly, and require fewer directions from humans than the systems we have today.

Let’s consider where Agentic AI for data engineering appears to be going and what that could do to businesses in the coming few years:

1. Hyper-Automation and Autonomous Workflows

Agent-powered data engineering is moving beyond simple automation toward fully autonomous workflows that handle tasks from data processing through decision-making with minimal human involvement. This frees teams to focus on creative and strategic work rather than babysitting systems.

2. Real-time Predictive Analytics Across Industries

Predictive analytics will let industries such as healthcare and retail shift from reactive to predictive operations. Systems can identify likely problems and suggest remedies in advance, helping the business move faster than competitors still looking at yesterday’s data.

3. Wholly Integrated Data Ecosystems

Agentic AI will link together data sources and systems that don’t talk to each other today, smashing silos and giving decision-makers a unified view of operations. This allows for quicker, sharper decisions to be made based on full information.

4. AI-Augmented Data Security and Governance

AI will automatically handle compliance audits and security monitoring, helping companies comply with regulations such as GDPR and HIPAA and addressing vulnerabilities before breaches happen, without constant human oversight.

5. Democratization of Data Engineering and AI

As AI tools become more intuitive, small businesses will gain access to the same great insights that previously were locked behind enterprise budgets, leveling the playing field dramatically and reshaping how entire industries compete.

6. The Merging of AI with Other Future Technologies

Agentic AI is going to interweave with blockchain, the IoT, and 5G networks, combining their powers to build smarter, more efficient systems that enable real-time data management and drive innovation nobody saw coming.


How Appinventiv Can Assist: Top AI Solutions for Businesses

Appinventiv is a trusted AI consulting company helping enterprises integrate Agentic AI into complex data environments. We support organizations in identifying automation opportunities, aligning data strategies with business goals, and architecting scalable, governance-ready data engineering frameworks.

Our AI agent development services focus on designing and deploying autonomous agents that orchestrate data pipelines, enforce data quality controls, and enable real-time data processing across enterprise systems.

To date, we have developed over 100 autonomous AI agents supported by 200+ data scientists and AI engineers. With more than 10 years of enterprise delivery experience, we build Agentic AI data engineering solutions that improve operational efficiency, scale data capabilities, and strengthen decision-making.

Partner with Appinventiv to implement smarter, scalable, and future-ready Agentic AI data engineering solutions.

Frequently Asked Questions

Q. How can enterprises leverage Agentic AI for smarter data engineering?

A. Organizations can harness Agentic AI by allowing it to automate the mundane parts of data workflows. This liberates teams from time-consuming manual processes while making operations smoother.

When you deploy AI agents strategically, your data operations can scale organically, you gain insights as events happen rather than after the fact, and decisions across departments become faster and more precise.

Q. What are the benefits of using Agentic AI in enterprise data pipelines?

A. The largest benefits appear in three places: more of the data transformation work is automated, systems are easier to scale, and data processing occurs in real time. Agentic AI sharply reduces manual steps, building pipelines that run smoothly and adapt as your data needs change. You’ll see quicker processing, fewer errors slipping through, and operations that work better overall.

Q. How does Agentic AI improve data management and processing?

A. Agentic AI does the heavy lifting of processing data—bringing it in, converting it into a usable format, and verifying that everything is right. It processes enormous volumes of information as it comes in, so your data remains accurate and available. The bottom line? You’ve always got accurate, high-quality data on hand when you need to make decisions.

Q. What is the cost of implementing Agentic AI in enterprise data engineering?

A. Implementation expense varies based on a number of factors: how widely you’re implementing it, how intricate your current data infrastructure is, and what your business uniquely needs. That being said, most organizations see the investment pay for itself within a relatively short period of time.

The savings come from automating manual labor, running operations more efficiently, and making decisions sooner. The reduction in manual labor alone typically justifies the cost, not to mention the improvements in data quality.

Q. How can Appinventiv help integrate Agentic AI into enterprise data workflows?

A. We accompany you every step of the way, from the initial discussion of your requirements through auditing your existing systems, deploying the full solution, and optimizing performance over the long term.

Our team ensures the integration is smooth and that you realize real value from AI in your data processes, rather than merely ticking a box for adopting new technology.
