- Why AI Agents Are Becoming Digital Operators Inside Australian Enterprises

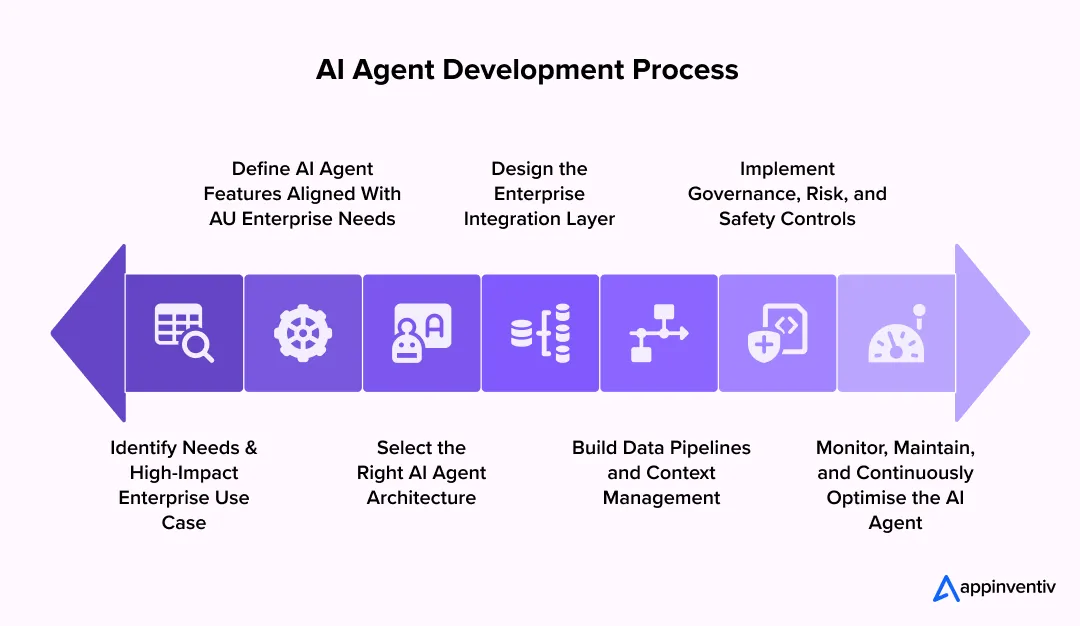

- How to Build an AI Agent in Australia: A Step-by-Step Process

- Step 1: Identify Needs and High-Impact Enterprise Use Case

- Step 2: Define AI Agent Features Aligned With AU Enterprise Needs

- Step 3: Select the Right AI Agent Architecture

- Step 4: Design the Enterprise Integration Layer

- Step 5: Build Data Pipelines and Context Management

- Step 6: Implement Governance, Risk, and Safety Controls

- Step 7: Monitor, Maintain, and Continuously Optimise the AI Agent

- Technology Stack Required to Build AI Agents in 2026

- The Need for Compliance, Data Residency, and Responsible AI in Australia

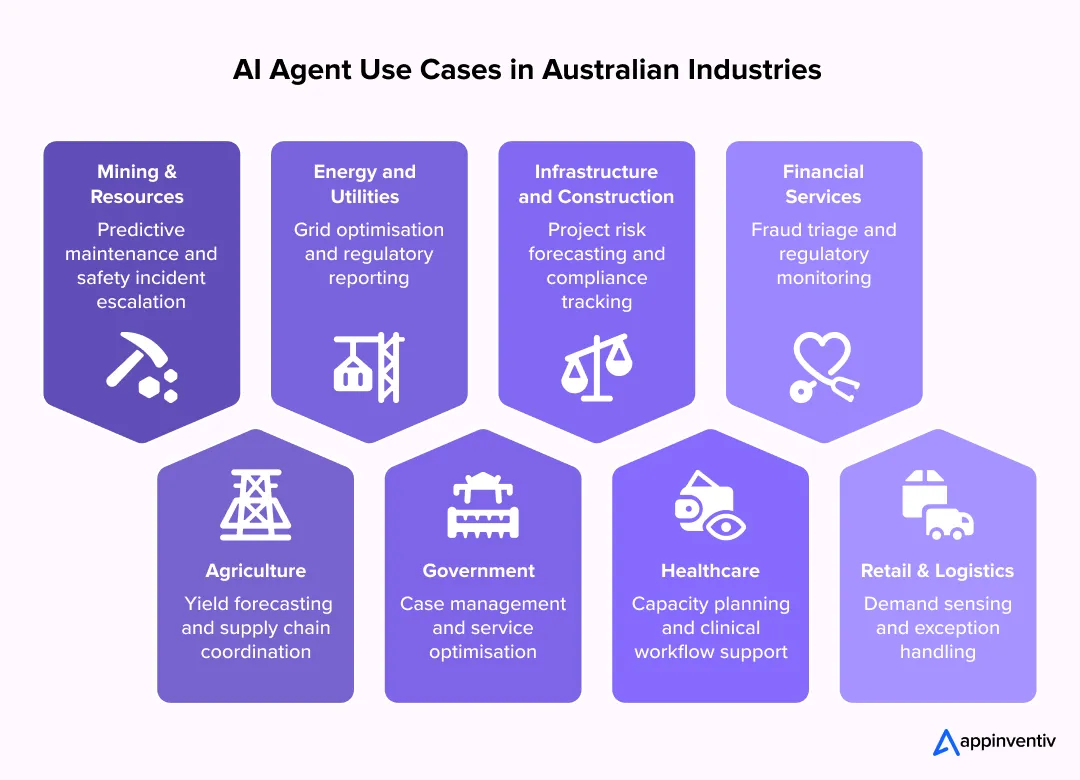

- Industry-Specific AI Agent Use Cases in Australia

- Mining and Resources

- Energy, Utilities, and Renewables

- Infrastructure and Construction

- Agriculture and Agribusiness

- Government and Public Sector

- Financial Services and Banking

- Healthcare and Aged Care

- Retail, Logistics, and Supply Chain

- What is the Cost and Timeline to Build an AI Agent in Australia

- Measuring ROI and Long-Term Business Impact

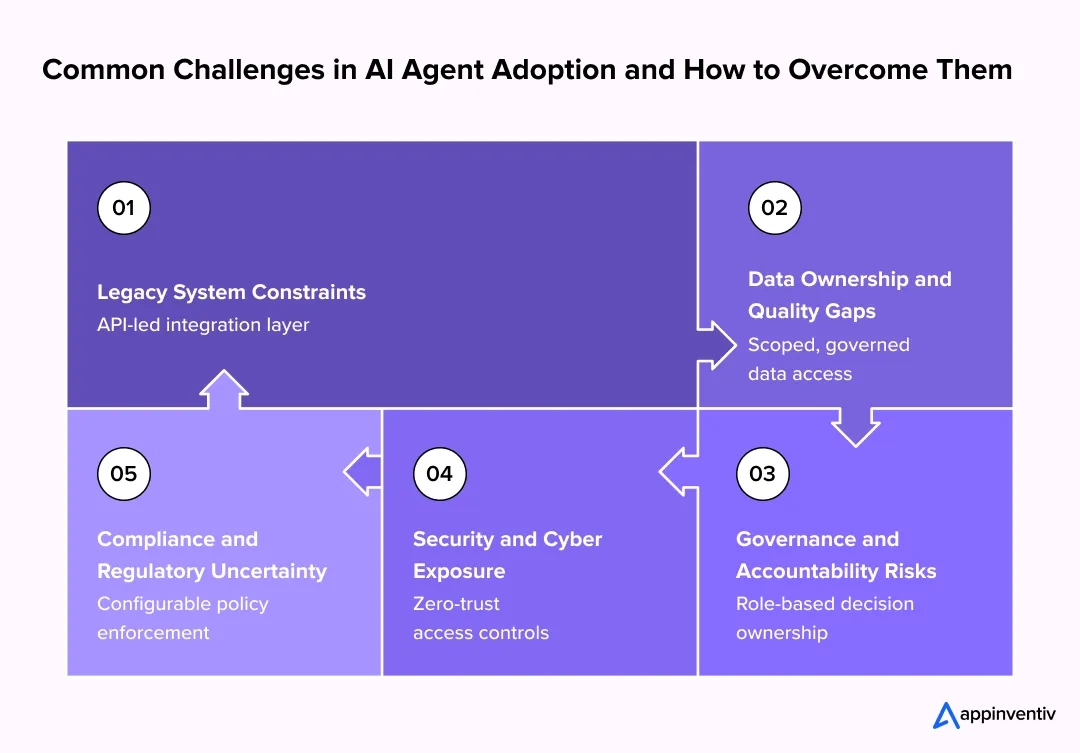

- Common Enterprise Challenges in AI Agent Adoption and How to Address Them

- Legacy System Constraints

- Data Ownership and Quality Gaps

- Governance and Accountability Risks

- Security and Cyber Exposure

- Compliance and Regulatory Uncertainty

- Future of AI Agents: Emerging AI Agent Trends

- How Appinventiv Helps Australian Enterprises Build AI Agents

- FAQs

Key takeaways:

- AI agents in Australia function as governed digital operators that observe systems, reason over enterprise data, and execute actions within defined authority limits.

- A disciplined AI agent development approach begins with governance and integration planning and relies on ongoing monitoring and refinement to remain effective.

- Mining, energy, finance, healthcare, and government deployments prioritise reliability, traceability, and risk control over full autonomy.

- The cost to build an AI agent in Australia reflects governance complexity, integration depth, and lifecycle support, typically ranging from AUD 40,000 to AUD 600,000.

- Multi-agent systems, governance-first architectures, and explainability will shape the future of AI agents in Australia.

Across Australian enterprises, the conversation around AI has shifted decisively from experimentation to execution. The question is no longer whether AI agents will be introduced into operational environments. It is whether organisations can afford to deploy them without structural control.

In 2026, AI agents are being positioned as digital operators. They reconcile data, trigger actions across systems, manage exceptions, and increasingly influence commercial and safety-critical decisions. This is not incremental automation. It is delegated autonomy.

For Australian enterprises, this shift carries unique consequences. Data sovereignty expectations are rising. Board accountability for technology risk is explicit. Cyber exposure is no longer abstract. When an AI agent acts, the organisation remains accountable for the outcome.

This is why building an AI agent in Australia has become an executive-level decision rather than a purely technical one. The organisations that succeed are not the ones that deploy the most capable models. They are the ones that design AI agents around governance, traceability, and long-term ownership from day one.

As articulated by Minister Dib,

“Artificial intelligence offers the opportunity to create a safer and more productive world, and we must do so responsibly, safely, and ethically.”

That statement is not aspirational. It reflects the operating reality Australian enterprises are now expected to meet.

This blog sets out how enterprises should approach AI agent development in Australia with security, accountability, and durable ROI in mind, rather than short-term demos.


Why AI Agents Are Becoming Digital Operators Inside Australian Enterprises

Many businesses still confuse AI agents with copilots, RPA bots, or conversational interfaces. In practice, the difference matters. AI agents are no longer limited to answering questions or drafting content.

How AI Agents Differ From Other Enterprise Automation Models

| Capability Dimension | RPA Bots | AI Copilots | Conversational AI | Enterprise AI Agents |

|---|---|---|---|---|

| Primary Role | Task automation | Human assistance | User interaction | System-level execution |

| Decision Ownership | Predefined rules | Human-led | Human-led | Delegated within limits |

| Context Awareness | Low | Medium | Medium | High and persistent |

| Ability to Act | Scripted actions only | Suggests actions | Responds to prompts | Executes approved actions |

| Governance & Auditability | Limited | Minimal | Minimal | Built-in by design |

| Suitability for Regulated AU Enterprises | Narrow use cases | Support-only | Front-end only | Core operations |

Enterprise AI agents operate with delegated authority. They observe systems, reason over context, take actions, and escalate when thresholds are crossed. Without controls, that autonomy becomes a liability.

An AI agent deployed inside a mining operation, energy network, financial platform, or government workflow is not a support tool. It becomes part of the operating model.

In Australian enterprise environments, mature autonomous AI agents share a few defining traits:

- Predictable behaviour in regulated workflows

- Transparent decision logs suitable for audits

- Clear identity and role-based permissions

- Human override paths for high-risk actions

Consumer-grade agents fail here. They optimise for fluency, not accountability. That gap is why AI agents for businesses in Australia in 2026 must be designed differently from day one.

With enterprise-grade expectations defined, the next challenge is execution. The following section covers how to build an AI agent for Australian enterprises.


How to Build an AI Agent in Australia: A Step-by-Step Process

Building AI agents is no longer a technical experiment for Australian organisations. It is an execution discipline shaped by governance expectations, the National AI Capability Plan, and board-level accountability for automated decision-making. Each step below reflects how AI agent development in Australia is being approached in practice.

The steps to build an AI agent in Australia are as follows:

Step 1: Identify Needs and High-Impact Enterprise Use Case

When considering how to make an AI agent in Australia, the first step is narrowing the scope.

Australian enterprises that succeed start with a single, high-friction workflow where decision latency, human error, or scale constraints are already well understood. These are usually areas where humans are overloaded by exceptions rather than routine tasks.

Typical starting points include compliance triage, asset maintenance prioritisation, procurement exceptions, or operational risk monitoring. The goal is not full automation. It is controlled delegation.

At this stage, three questions frame the decision:

- What decisions can the agent make independently?

- What decisions must always be escalated?

- What measurable outcome defines success?

This framing anchors the rest of the enterprise AI agent development process.
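The three questions above can be written down as data before any model is chosen. The following is a minimal sketch, assuming a hypothetical procurement-exceptions agent; the `AuthorityScope` class, the action names, and the success metric are illustrative and do not come from any particular framework.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AGENT_DECIDES = "agent_decides"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class AuthorityScope:
    """Hypothetical scope definition for one agent and one workflow."""
    independent_actions: frozenset[str]  # decisions the agent may make alone
    always_escalate: frozenset[str]      # decisions that always go to a person
    success_metric: str                  # the measurable outcome that defines success

    def route(self, action: str) -> Route:
        # Anything not explicitly delegated is escalated (deny by default).
        if action in self.always_escalate:
            return Route.ESCALATE
        if action in self.independent_actions:
            return Route.AGENT_DECIDES
        return Route.ESCALATE


procurement_scope = AuthorityScope(
    independent_actions=frozenset({"match_invoice_to_po", "request_missing_document"}),
    always_escalate=frozenset({"approve_payment", "change_supplier_terms"}),
    success_metric="median exception resolution time (hours)",
)
```

The deny-by-default rule is the design choice that matters here: an action nobody thought about during scoping ends up with a person, not with the agent.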

Step 2: Define AI Agent Features Aligned With AU Enterprise Needs

Once the use case is fixed, the next core step is to define the feature list. In Australia, feature decisions are inseparable from governance and compliance expectations. Most failed initiatives attempted to retrofit controls later. Mature organisations define them upfront as part of custom AI agent development.

Below is the feature framework Australian enterprises are converging on.

| Feature Domain | Basic Features (Minimum Viable Control) | Advanced Features |

|---|---|---|

| Decision Authority | Rule-based task execution | Bounded autonomous decisions with risk thresholds |

| Human Oversight | Mandatory manual approvals | Human-in-the-loop and human-on-the-loop models |

| Explainability | Action and event logs | Full decision lineage and replayable reasoning |

| Accountability | Named operational owner | Executive and board-level accountability mapping |

| Governance Controls | Static approval rules | Dynamic controls aligned to risk appetite |

| Data Handling | Structured internal data | Context-aware reasoning across systems and sources |

| Data Residency | Local data storage | Jurisdiction-aware data routing and tagging |

| Policy Enforcement | Hard-coded policies | Embedded organisational and regulatory policies |

| Security Access | Role-based permissions | Per-agent identity with zero-trust enforcement |

| Integration | API-triggered actions | Event-driven orchestration across enterprise platforms |

| Risk & Safety | Manual intervention | Automated kill-switches and anomaly detection |

| Monitoring & Observability | Performance metrics | Continuous drift, bias, and compliance monitoring |

| Audit Readiness | Log retention | Exportable evidence for regulatory and internal audits |

| Change Management | Manual updates | Versioned, auditable releases with rollback |

| Scalability | Limited scope deployment | Horizontal scaling with controlled privilege expansion |

These capabilities are not optional when you develop an AI agent for Australian enterprises operating in regulated or asset-heavy environments.

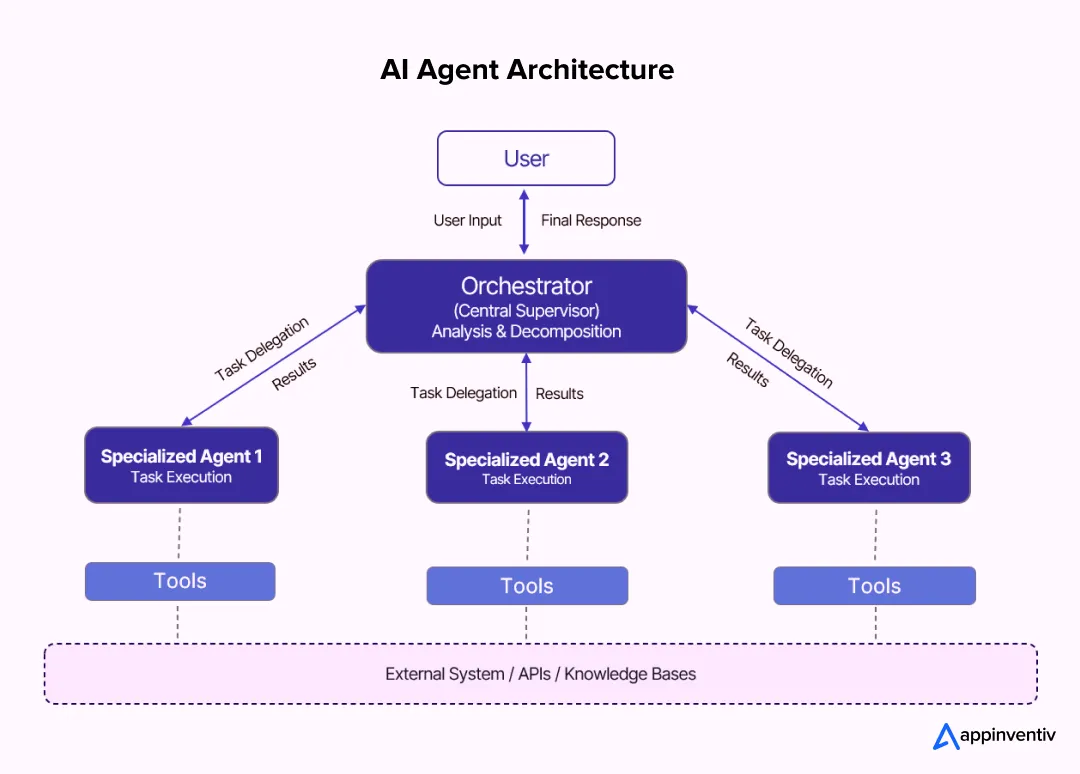

Step 3: Select the Right AI Agent Architecture

Architecture choices determine whether AI agents scale safely or fail quietly.

Australian enterprises are moving away from single-agent designs toward supervised or multi-agent systems. Task-specific agents operate under a coordinating agent that enforces authority limits and escalation rules.

This approach aligns well with leading AI agent frameworks that support orchestration, state management, and observability. Selecting from the top AI agent development frameworks requires evaluating:

- Support for deterministic execution paths

- Integration with identity and access management

- Native observability and logging
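The supervised pattern described above can be sketched in plain Python. This is an illustration of the idea only, not the API of LangGraph, AutoGen, or any other framework; `TaskAgent`, `Supervisor`, and the action names are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TaskAgent:
    """A task-specific agent: a name, its permitted actions, and a handler."""
    name: str
    allowed_actions: set[str]
    handler: Callable[[str, dict], str]


@dataclass
class Supervisor:
    """Coordinating agent: dispatches work and enforces authority limits."""
    agents: dict[str, TaskAgent] = field(default_factory=dict)
    log: list[tuple[str, str, str]] = field(default_factory=list)  # (agent, action, outcome)

    def register(self, agent: TaskAgent) -> None:
        self.agents[agent.name] = agent

    def dispatch(self, agent_name: str, action: str, payload: dict) -> str:
        agent = self.agents[agent_name]
        if action not in agent.allowed_actions:
            # Outside the agent's authority: do not execute, escalate instead.
            outcome = "escalated"
        else:
            outcome = agent.handler(action, payload)
        self.log.append((agent_name, action, outcome))  # every request is recorded
        return outcome


supervisor = Supervisor()
supervisor.register(TaskAgent(
    name="maintenance",
    allowed_actions={"create_work_order"},
    handler=lambda action, payload: f"done:{action}:{payload['asset_id']}",
))
```

Because the authority check sits in the supervisor rather than in each task agent, the execution path stays deterministic even when the handlers themselves call a model.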

Step 4: Design the Enterprise Integration Layer

AI agents only create value when they can act across systems.

Australian enterprises typically operate hybrid environments, so integration layers must be API-first and event-driven. Direct database access is avoided to reduce risk and improve auditability.

This step is especially critical when organisations build agents inside copilot environments, where surface-level assistance still requires enterprise-grade system integration underneath.
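One way to picture an API-first, event-driven integration layer is a gateway that sits between agents and enterprise systems. The sketch below is an in-process stand-in for a real message broker, written under that assumption; `IntegrationGateway`, `ActionEvent`, the event name, and the ERP adapter are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ActionEvent:
    agent_id: str
    event_type: str  # e.g. "work_order.requested"
    payload: dict


class IntegrationGateway:
    """Routes agent events to registered adapters; agents never touch databases."""

    def __init__(self) -> None:
        self._adapters: dict[str, list[Callable[[ActionEvent], str]]] = defaultdict(list)
        self.audit_trail: list[tuple[ActionEvent, str]] = []

    def subscribe(self, event_type: str, adapter: Callable[[ActionEvent], str]) -> None:
        self._adapters[event_type].append(adapter)

    def publish(self, event: ActionEvent) -> list[str]:
        results = []
        for adapter in self._adapters.get(event.event_type, []):
            result = adapter(event)  # in practice: a call to the target system's API
            self.audit_trail.append((event, result))
            results.append(result)
        return results


def erp_work_order_adapter(event: ActionEvent) -> str:
    # Stand-in for an HTTP call to an ERP endpoint; the endpoint is hypothetical.
    return f"ERP accepted work order for {event.payload['asset_id']}"


gateway = IntegrationGateway()
gateway.subscribe("work_order.requested", erp_work_order_adapter)
```

An event with no subscribed adapter simply produces no action, which keeps the set of things an agent can do equal to the set of adapters someone has deliberately registered.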

Step 5: Build Data Pipelines and Context Management

AI agents fail less often because of poor models than because of poor context.

Enterprises separate raw data, decision context, and policy constraints to ensure agents act on verified, current, and compliant inputs. This reflects national guidance on trustworthy AI and data stewardship.

Strong context management is now a baseline requirement in AI agent tech stack decisions.
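The separation of raw data, decision context, and policy constraints can be made concrete with three small types and one filter. This is a sketch under stated assumptions: the class names, the source names (`scada`, `erp`, `spreadsheet`), and the one-hour freshness limit are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class RawRecord:
    source: str
    value: float
    observed_at: datetime
    verified: bool


@dataclass(frozen=True)
class PolicyConstraints:
    max_age: timedelta               # how stale an input may be
    approved_sources: frozenset[str]


@dataclass(frozen=True)
class DecisionContext:
    inputs: tuple[RawRecord, ...]           # only records that passed every check
    rejected: tuple[tuple[str, str], ...]   # (source, reason), kept for traceability


def build_context(records, policy: PolicyConstraints, now: datetime) -> DecisionContext:
    accepted, rejected = [], []
    for r in records:
        if r.source not in policy.approved_sources:
            rejected.append((r.source, "source not approved"))
        elif not r.verified:
            rejected.append((r.source, "unverified"))
        elif now - r.observed_at > policy.max_age:
            rejected.append((r.source, "stale"))
        else:
            accepted.append(r)
    return DecisionContext(tuple(accepted), tuple(rejected))


now = datetime(2026, 1, 1, 12, 0, tzinfo=timezone.utc)
policy = PolicyConstraints(max_age=timedelta(hours=1),
                           approved_sources=frozenset({"scada", "erp"}))
records = [
    RawRecord("scada", 1.0, now - timedelta(minutes=5), True),
    RawRecord("scada", 2.0, now - timedelta(hours=3), True),
    RawRecord("spreadsheet", 3.0, now, True),
    RawRecord("erp", 4.0, now, False),
]
context = build_context(records, policy, now)
```

Keeping the rejected records alongside the accepted ones means a later audit can show not only what the agent reasoned over, but also what it was prevented from using and why.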

Step 6: Implement Governance, Risk, and Safety Controls

Governance is enforced through systems, not statements. This step embeds:

- Decision approval thresholds

- Automated escalation triggers

- Kill-switch mechanisms

- Continuous monitoring for drift and bias

This approach aligns with expectations outlined by the Australian government under its National AI initiatives and responsible AI principles. It is also consistent with NSW public sector guidance on lifecycle accountability, traceability, human oversight, and risk classification for AI agents.
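Three of the controls listed in this step (approval thresholds, escalation, and a kill switch) can be sketched as a single gate that every proposed action passes through. The `GovernanceGate` class and its parameters are hypothetical, and drift and bias monitoring are deliberately left out of this example.

```python
from dataclasses import dataclass, field


@dataclass
class GovernanceGate:
    """Hypothetical control layer that every proposed action must pass through."""
    approval_threshold_aud: float    # above this value, a human must approve
    max_consecutive_anomalies: int   # trips the kill switch when reached
    killed: bool = False
    _anomalies: int = field(default=0, init=False, repr=False)

    def report_anomaly(self) -> None:
        self._anomalies += 1
        if self._anomalies >= self.max_consecutive_anomalies:
            self.killed = True       # kill switch: stays on until a person resets it

    def report_normal(self) -> None:
        self._anomalies = 0

    def reset(self, approved_by: str) -> None:
        if not approved_by:
            raise ValueError("a named approver is required to reset the kill switch")
        self.killed = False
        self._anomalies = 0

    def evaluate(self, action_value_aud: float) -> str:
        if self.killed:
            return "blocked"
        if action_value_aud > self.approval_threshold_aud:
            return "escalate_for_approval"
        return "execute"
```

A short usage example, with made-up values:

```python
gate = GovernanceGate(approval_threshold_aud=10_000, max_consecutive_anomalies=2)
gate.evaluate(500)      # "execute"
gate.evaluate(25_000)   # "escalate_for_approval"
```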

Step 7: Monitor, Maintain, and Continuously Optimise the AI Agent

AI agents require ongoing operational ownership.

Enterprises track decision accuracy, override frequency, compliance exceptions, and drift over time. These signals inform retraining, authority adjustments, and risk reassessment.

This continuous loop completes the AI agent development roadmap and ensures long-term viability. Without this ongoing step, even well-designed agents degrade under real-world conditions.
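The signals named in this step reduce to a few ratios over reviewed decisions. The sketch below assumes each decision is later labelled by a reviewer; the `DecisionOutcome` fields and the 5-point tolerance are illustrative choices, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionOutcome:
    correct: bool               # judged correct on later review
    overridden: bool            # a human reversed or changed the decision
    compliance_exception: bool


def summarise(outcomes: list[DecisionOutcome]) -> dict[str, float]:
    n = len(outcomes)
    if n == 0:
        return {"accuracy": 0.0, "override_rate": 0.0, "exception_rate": 0.0}
    return {
        "accuracy": sum(o.correct for o in outcomes) / n,
        "override_rate": sum(o.overridden for o in outcomes) / n,
        "exception_rate": sum(o.compliance_exception for o in outcomes) / n,
    }


def needs_review(baseline: dict[str, float], current: dict[str, float],
                 tolerance: float = 0.05) -> bool:
    """Flag drift when accuracy falls or overrides rise by more than `tolerance`."""
    return (baseline["accuracy"] - current["accuracy"] > tolerance
            or current["override_rate"] - baseline["override_rate"] > tolerance)
```

Comparing a current window against a baseline window is the simplest possible drift check; a production system would likely add statistical tests and per-segment breakdowns.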


Technology Stack Required to Build AI Agents in 2026

Businesses prefer AI agent stacks that are modular, auditable, and vendor-agnostic. The stack you choose for AI agent development must support controlled autonomy, audit readiness, and data sovereignty, in line with the National AI Capability Plan and national and state-level public-sector AI guidance.

A typical AI agent tech stack includes:

| Stack Layer | Relevant Technologies Used by AU Enterprises |

|---|---|

| Foundation Models | Private LLM deployments, Azure OpenAI (AU regions), Amazon Bedrock, fine-tuned open-source models |

| AI Agent Frameworks | LangGraph, AutoGen, CrewAI, Semantic Kernel |

| Orchestration & Workflow | Temporal, Apache Airflow, Azure Logic Apps |

| Integration Layer | REST APIs, GraphQL, Kafka, MuleSoft |

| Data & Context Layer | Vector databases (Pinecone, Weaviate), enterprise data lakes |

| Memory & State Management | Redis, PostgreSQL, event stores |

| Security & Identity | Microsoft Entra ID (formerly Azure AD), IAM, OAuth 2.0, Zero Trust frameworks |

| Monitoring & Observability | OpenTelemetry, Prometheus, enterprise SIEM tools |

| Governance & Compliance | Policy engines, audit logging systems, data residency controls |

The Need for Compliance, Data Residency, and Responsible AI in Australia

Across enterprise environments in Sydney, Melbourne, Brisbane, Perth, and Adelaide, AI agents are judged on control, traceability, and accountability before capability. Decision makers expect AI-driven decisions to stand up to internal review, audit scrutiny, and external questioning without ambiguity. Key regulatory expectations shaping AI agent design include:

- Clear accountability for outcomes: Every agent action must map to a named business owner. Responsibility does not shift to the system when automation is involved.

- Responsible AI practices built into execution: Fairness, transparency, and explainability need to be embedded in agent logic and workflows, not managed through standalone policy documents.

- Local data residency by design: Sensitive operational and customer data is expected to remain within local jurisdictions, with visibility over any cross-border data movement when global platforms are used.

- End-to-end traceability: AI agents must retain decision histories, inputs, and actions in a form that supports audits, investigations, and regulator engagement.

- Security controls equivalent to human access: Agents are treated as digital staff, governed by identity management, role-based access, and continuous monitoring.

- Ongoing governance across the lifecycle: AI agents require continuous oversight, retraining approvals, and policy updates as business conditions and regulatory expectations evolve.
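Several of these expectations (a named owner, data residency, and traceability) can meet in the shape of a single decision record. The following is a minimal sketch, assuming a hash-chained log as the tamper-evidence mechanism; the `DecisionRecord` fields are hypothetical, and the two region names are examples of Sydney-based cloud regions, not a recommended allow-list.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class DecisionRecord:
    agent_id: str
    business_owner: str  # named person accountable for this agent's actions
    action: str
    inputs: dict
    data_region: str     # where the inputs were stored and processed
    prev_hash: str       # digest of the previous record, forming a chain

    def digest(self) -> str:
        body = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(body).hexdigest()


ALLOWED_REGIONS = {"australiaeast", "ap-southeast-2"}  # example region names


def append_record(chain: list[DecisionRecord], **fields) -> DecisionRecord:
    if fields["data_region"] not in ALLOWED_REGIONS:
        raise ValueError(f"data region {fields['data_region']!r} is outside policy")
    prev = chain[-1].digest() if chain else "genesis"
    record = DecisionRecord(prev_hash=prev, **fields)
    chain.append(record)
    return record


def verify(chain: list[DecisionRecord]) -> bool:
    """True when every record still points at the digest of its predecessor."""
    expected = "genesis"
    for record in chain:
        if record.prev_hash != expected:
            return False
        expected = record.digest()
    return True
```

Because each record embeds the digest of the one before it, altering an earlier record breaks verification for every later record, which is the property an auditor needs.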

For enterprises working through how to build an AI agent in Australia, compliance and data governance are not barriers to progress. They are what allow AI agents to operate with confidence, scale responsibly, and remain defensible at the board and regulator level.

Industry-Specific AI Agent Use Cases in Australia

For Australian enterprises, AI agents deliver value only when embedded in real operational decision loops. The most successful deployments are not customer-facing experiments. They sit behind the scenes, orchestrating systems, enforcing policy, and reducing latency in high-stakes workflows.

What follows are AI agent use cases for Australia that reflect how large organisations are deploying agents, with governance, legacy constraints, and accountability built in.

Mining and Resources

Mining operations are asset-intensive, geographically dispersed, and safety-critical. This makes them well-suited for controlled autonomous agents that operate within strict boundaries.

Agentic AI in Australian mining industries is increasingly used to:

- Monitor equipment telemetry across multiple sites and identify early failure patterns

- Trigger maintenance workflows based on risk thresholds, not fixed schedules

- Escalate safety anomalies when environmental or operational signals deviate from norms

In this context, an AI agent does not “predict failure” in isolation. It coordinates between IoT platforms, maintenance systems, and workforce scheduling tools. The agent’s authority is limited, auditable, and aligned with safety governance.
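Threshold-based triggering, as opposed to fixed schedules, can be sketched in a few lines. The signal names, the limits, and the 0.8 trigger point below are assumptions made for illustration; real limits come from each asset's engineering data and the site's safety rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Telemetry:
    asset_id: str
    vibration_mm_s: float
    bearing_temp_c: float


# Hypothetical limits; real values come from the asset's engineering data.
VIBRATION_LIMIT = 7.1
TEMP_LIMIT = 95.0


def risk_score(t: Telemetry) -> float:
    """Fraction of the limit consumed by the worst signal (1.0 = at the limit)."""
    return max(t.vibration_mm_s / VIBRATION_LIMIT, t.bearing_temp_c / TEMP_LIMIT)


def next_step(t: Telemetry) -> str:
    score = risk_score(t)
    if score >= 1.0:
        return "escalate_to_safety_officer"    # beyond the agent's authority
    if score >= 0.8:
        return "raise_maintenance_work_order"  # within the agent's authority
    return "continue_monitoring"
```

The agent's authority ends at raising a work order; anything at or past a limit is handed to a person, which matches the bounded role described above.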

This is a clear example of autonomous AI agents operating as digital supervisors rather than decision-makers of last resort.

Energy, Utilities, and Renewables

Australian energy providers operate under continuous regulatory oversight, with increasing pressure to balance reliability, sustainability, and cost.

Enterprise AI agents are being deployed to:

- Optimise load balancing across distributed energy resources

- Detect grid instability signals earlier than human monitoring allows

- Coordinate response actions across operational systems during incidents

Here, AI agents function as orchestration layers. They do not replace control rooms. They reduce reaction time and surface decision-ready insights while maintaining strict escalation paths.

Governance matters deeply in this sector. Agents must explain why actions were taken, which data sources were used, and how thresholds were applied. This makes custom AI agent development essential.

Infrastructure and Construction

Large infrastructure projects suffer from predictable issues: delays, cost overruns, and fragmented accountability across contractors and systems.

AI agents are increasingly used to:

- Track project health across scheduling, procurement, and workforce systems

- Flag early signals of timeline or budget deviation

- Coordinate compliance reporting across multiple stakeholders

Rather than acting independently, the agent operates as a continuous risk assessor. It escalates issues early, allowing human leaders to intervene before costs compound.

This model aligns well with enterprise expectations around explainability and audit readiness.

Agriculture and Agribusiness

Australian agribusiness operates under climate volatility, thin margins, and complex supply chains. AI agents here focus less on autonomy and more on coordination.

Common use cases include:

- Yield forecasting using weather, soil, and historical production data

- Supply chain coordination between growers, distributors, and exporters

- Early detection of logistics disruptions or quality risks

These agents operate across data silos that traditionally do not communicate well. Their value lies in synthesising signals and recommending actions, while leaving execution authority with human operators.

This approach reflects a pragmatic enterprise AI agent development process, not speculative automation.

Government and Public Sector

Government agencies face unique constraints around transparency, fairness, and accountability. As a result, AI agent adoption in Australia’s public sector is conservative but deliberate.

AI agents are being used to:

- Triage cases and prioritise workloads across departments

- Monitor service delivery metrics and flag systemic issues

- Assist with compliance monitoring and reporting

Agents in this environment are tightly governed. Every action is logged. Human override is mandatory. Decision criteria are explicit.

This aligns closely with the NSW Government’s guidance on responsible AI agent use and reinforces why governance must be designed in from the outset.

Financial Services and Banking

Banks and financial institutions are among the most advanced users of AI agents in Australia, driven by scale, regulatory pressure, and cost efficiency.

AI agents are deployed to:

- Monitor transactions for fraud patterns across channels

- Coordinate regulatory reporting and compliance checks

- Assist risk teams by prioritising investigations

These agents do not make final credit or compliance decisions. They narrow the problem space, reduce noise, and ensure consistency across systems.
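Narrowing the problem space for a risk team can be as simple as ranking alerts so investigators see the most consequential ones first. This sketch assumes a model score between 0 and 1 and ranks by score multiplied by transaction amount; both the ranking rule and the alert fields are illustrative, and the final decision stays with the investigator.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Alert:
    priority: float                      # the only field used for ordering
    alert_id: str = field(compare=False)
    reason: str = field(compare=False)


def prioritise(alerts: list[tuple[str, float, float, str]], top_n: int) -> list[Alert]:
    """alerts: (alert_id, model_score 0-1, amount_aud, reason). Returns the top_n."""
    scored = [
        Alert(priority=score * amount, alert_id=alert_id, reason=reason)
        for alert_id, score, amount, reason in alerts
    ]
    return heapq.nlargest(top_n, scored)


queue = prioritise(
    [
        ("A1", 0.9, 100.0, "velocity"),
        ("A2", 0.2, 50_000.0, "new payee"),
        ("A3", 0.5, 400.0, "geo mismatch"),
    ],
    top_n=2,
)
```

Each alert keeps its `reason`, so the investigator sees why it was surfaced and not just where it ranks.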

This is where AI agent frameworks that support auditability and role-based control become critical.


Healthcare and Aged Care

Healthcare systems are under strain from workforce shortages and rising demand. AI agents are emerging as coordination tools rather than diagnostic engines.

Key use cases include:

- Capacity planning across hospitals or aged care facilities

- Predictive scheduling for staff and resources

- Monitoring patient flow and escalation triggers

Given the sensitivity of medical data, agentic AI in the Australian healthcare sector operates under strict data residency and access controls. This reinforces why off-the-shelf agents are rarely suitable.

Retail, Logistics, and Supply Chain

In large retail and logistics networks, the value of AI agents comes from speed and consistency across high-volume operations.

Agents are used to:

- Sense demand changes and adjust inventory allocation

- Identify supply chain bottlenecks before they impact service levels

- Coordinate responses across warehousing, transport, and procurement systems

These agents act continuously, something human teams cannot do at scale. However, their authority remains bounded, especially where financial or contractual implications exist.

Across all sectors, the same rule applies. AI agents work best when they are given narrow authority, clear escalation paths, and defined ownership. Where those conditions exist, they become reliable operational support. Where they do not, adoption stalls quickly.

What is the Cost and Timeline to Build an AI Agent in Australia

The cost to build an AI agent in Australia typically ranges from AUD 40,000 to AUD 600,000 or more. This is not a fixed price range: costs rise or fall with project scope, integration depth, and governance requirements.

Timelines and budgets are driven less by model selection and more by how the agent is expected to operate within existing systems and controls.

The key cost drivers are:

- Depth of integration with legacy, ERP, and operational platforms

- Level of autonomy versus mandatory human approvals

- Security, audit, and data residency requirements

- Monitoring, retraining, and long-term support expectations

Here is an estimated breakdown of AI agent development cost in Australia by project complexity:

| Project Complexity | Typical Scope | Estimated Cost Range | Indicative Timeline |

|---|---|---|---|

| Basic | Single use case, limited integrations, full human approval | AUD 40,000 – 80,000 | 4–6 months |

| Moderate | Multiple workflows, core system integration, audit logging | AUD 80,000 – 180,000 | 6–9 months |

| Advanced | Cross-system orchestration, governance controls, monitoring | AUD 180,000 – 350,000 | 9–12 months |

| Enterprise-Grade | Multi-business deployment, supervisory agents, compliance-by-design | AUD 350,000 – 600,000+ | 12–18+ months |

This phased cost structure reflects how enterprises typically scale AI agents while maintaining control over risk, compliance, and long-term ownership.


Measuring ROI and Long-Term Business Impact

Even when the upfront investment appears high, enterprises that deploy AI agents with the right controls often see returns that outweigh it. Value is realised not through short-term savings alone, but through sustained improvements in efficiency, risk management, and decision reliability across core operations.

Key indicators include:

- Enterprises see measurable gains when AI agents reduce manual intervention in high-volume workflows and shorten response times.

- Cost benefits typically emerge through lower rework, fewer operational errors, and more predictable execution.

- Risk exposure decreases as agents surface compliance and operational issues earlier in the cycle.

- Decision-making improves as prioritisation becomes more consistent under time and data constraints.

- Long-term value is evident when teams rely on agent-supported outcomes with fewer overrides.

These outcomes justify continued investment in the future of AI agents.

Common Enterprise Challenges in AI Agent Adoption and How to Address Them

For most Australian enterprises, the barriers to AI agent adoption are not model capability or tooling maturity. They sit in systems, governance, and organisational trust. The organisations that succeed are those that treat these challenges as architectural and leadership problems, not technical bugs.

Legacy System Constraints

Challenge:

Most Australian enterprises run on legacy ERP and operational platforms that were not designed for autonomous decision-making. Direct agent integration often increases fragility and system risk.

Solution:

Enterprises introduce controlled integration layers that mediate agent actions through APIs and policy engines, preserving system stability while enabling autonomy.


Data Ownership and Quality Gaps

Challenge:

AI agents depend on context, but enterprise data is fragmented, inconsistently governed, and often subject to sovereignty constraints.

Solution:

Leading organisations use scoped data access and versioned pipelines, ensuring agents reason only on approved, traceable datasets.

Governance and Accountability Risks

Challenge:

When agents act autonomously, accountability for decisions becomes unclear, creating audit and regulatory exposure.

Solution:

Agents are assigned explicit roles, authority limits, and decision logs, with mandatory human escalation for high-risk actions.

Security and Cyber Exposure

Challenge:

AI agents often require system-level access, making them attractive targets for misuse or exploitation. Poorly secured agents can amplify cyber risk rather than reduce it.

Solution:

Enterprises embed security controls at every layer, treating role-based permissions, encrypted communication, continuous monitoring, and incident response integration as baseline requirements.

Compliance and Regulatory Uncertainty

Challenge:

AI regulations continue to evolve, creating long-term compliance uncertainty for autonomous systems.

Solution:

Forward-looking development teams design agents with configurable policies and controls, allowing compliance adaptation without re-engineering.
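Configurable policy usually means rules stored as data that the agent's control layer reads at runtime. A minimal sketch follows, assuming a hypothetical JSON rule format with two operators; the field names, limits, and version label are invented for the example.

```python
import json

# Hypothetical policy document; in practice this would be versioned and stored
# outside the codebase so it can change without a redeployment.
POLICY_V1 = json.loads("""
{
  "version": "2026-01",
  "rules": [
    {"field": "amount_aud", "op": "lte", "value": 5000},
    {"field": "data_region", "op": "in", "value": ["AU"]}
  ]
}
""")

OPERATORS = {
    "lte": lambda actual, expected: actual <= expected,
    "in": lambda actual, expected: actual in expected,
}


def violations(action: dict, policy: dict) -> list[str]:
    """Return the rules an action breaks; an empty list means it is compliant."""
    broken = []
    for rule in policy["rules"]:
        check = OPERATORS[rule["op"]]
        if rule["field"] not in action or not check(action[rule["field"]], rule["value"]):
            broken.append(f"{rule['field']} {rule['op']} {rule['value']}")
    return broken
```

When a regulatory expectation changes, a new policy document with a tighter rule can be loaded in place of `POLICY_V1` while `violations` itself stays untouched.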


Future of AI Agents: Emerging AI Agent Trends

The next phase of AI agent adoption is being shaped less by technical capability and more by how autonomy is controlled inside large organisations. Enterprises are moving away from isolated agents toward coordinated systems where responsibility, escalation, and authority are clearly defined. This mirrors existing operating models and reduces the risk that comes with delegating decisions at scale.

Another visible shift is how autonomy is granted. Rather than fixed permissions, agents are being designed to adjust their scope based on risk and context. Low-impact actions proceed independently, while higher-impact decisions trigger review. At the same time, explainability is becoming a baseline expectation, with agents expected to justify decisions in business terms that stand up to executive and audit scrutiny.
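Autonomy that adjusts with risk and context can be sketched as a ceiling that moves. The example assumes two context signals (an open incident and the recent human override rate) and a 10% override threshold; all three are illustrative choices, as are the names `Impact`, `autonomy_ceiling`, and `decide`.

```python
from enum import IntEnum


class Impact(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def autonomy_ceiling(incident_active: bool, recent_override_rate: float) -> Impact:
    """Highest impact level the agent may act on without review, given context."""
    if incident_active:
        return Impact.LOW      # tighten scope while an incident is open
    if recent_override_rate > 0.10:
        return Impact.LOW      # humans are correcting the agent often
    return Impact.MEDIUM       # HIGH-impact actions are always reviewed


def decide(impact: Impact, incident_active: bool, recent_override_rate: float) -> str:
    ceiling = autonomy_ceiling(incident_active, recent_override_rate)
    return "proceed" if impact <= ceiling else "review"
```

The ceiling never reaches `Impact.HIGH`, so high-impact decisions trigger review regardless of how well the agent has been performing.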

In the long term, organisations are prioritising flexibility and accountability. AI agents are increasingly treated as digital operators with defined identities and roles, while architectures are kept vendor-agnostic to avoid lock-in. The focus is on durability and trust, ensuring agents can evolve alongside regulation and organisational change without repeated reinvention.

How Appinventiv Helps Australian Enterprises Build AI Agents

Building AI agents for Australian enterprises is an architectural commitment that shapes how systems operate, how risk is managed, and how accountability is enforced over time. Many AI initiatives stall because autonomy is introduced before governance, or intelligence is prioritised over operational fit.

At Appinventiv Australia, we design and deliver AI agents as enterprise systems, not experimental components. Our artificial intelligence development services in Australia focus on enabling controlled autonomy that fits within Australia’s regulatory, security, and data governance expectations.

We support Sydney, Melbourne, Brisbane, Perth, and Adelaide-based enterprises across the full AI agent lifecycle, from defining authority boundaries and governance models to integrating agents safely into legacy-heavy environments.

Our teams of 200+ data scientists and AI engineers build AI agents that can reason, act, and escalate without compromising auditability or system stability. In our 10+ years of APAC delivery, we have deployed over 100 autonomous AI agents and trained 150+ custom AI models for 35+ industries, including mining, energy, healthcare, financial services, and government.

This delivery maturity is reflected in external recognition. Appinventiv has been featured in the Deloitte Fast 50 for two consecutive years in 2023 and 2024, recognised as a leader in AI product development by ET and ranked among APAC’s High-Growth Companies by Statista and the Financial Times.

From a business impact perspective, enterprises working with us typically achieve measurable operational outcomes:

- 50% reduction in manual process dependency through governed agent workflows

- 90%+ task accuracy across agent-driven decision and execution paths

- 2x scalability improvements, particularly in high-volume, cross-system operations

Case in Action: MyExec AI Business Consultant

To illustrate these principles in practice, here is a real case study.

One of our flagship AI agent projects was MyExec, an AI-powered business consultant designed to help SMBs make smarter, data-driven decisions. Rather than acting as a simple chatbot, MyExec analyses business documents, extracts strategic insights, and provides tailored, actionable recommendations, effectively democratising high-level advisory services previously only accessible through human consultants.

Key aspects of the MyExec build included:

- A multi-agent RAG (Retrieval-Augmented Generation) architecture where specialised agents collaboratively interpret documents and reason over context.

- A personalisation engine that adapts recommendations to the unique objectives and data of each business.

- Seamless integration with diverse structured and unstructured data sources for real-time insight delivery.

While MyExec was not developed for the Australian market specifically, its architecture reflects the same principles required for enterprise AI agent development: bounded autonomy, clear system roles, and controlled decision-making.


FAQs

Q. Why should enterprises invest in AI agents in 2026?

A. Enterprises should invest in AI agents in 2026 because operational complexity is rising faster than human teams can scale. Governed AI agents help reduce decision latency, handle exception-heavy workflows, and improve consistency across systems, while maintaining accountability, auditability, and regulatory control.

Q. What does an AI agent do?

A. In practice, an AI agent does not replace judgment. It structures it. Agents continuously observe operational data, apply defined logic and context, surface options, and either act within approved boundaries or escalate decisions to people.

Their value is most visible in environments where decisions are frequent, time-sensitive, and dependent on fragmented information. Over time, they reduce noise and allow teams to focus on the decisions that genuinely require human judgment.
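
The act-or-escalate behaviour described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Proposal` structure, the action names, and the threshold values are all hypothetical and would in practice come from an organisation's own governance policy.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """An action the agent would like to take (hypothetical structure)."""
    action: str
    amount_aud: float
    confidence: float


# Hypothetical authority limits; real values would be set by governance policy.
APPROVED_ACTIONS = {"reschedule_maintenance", "release_payment"}
MAX_AMOUNT_AUD = 5_000.0
MIN_CONFIDENCE = 0.9


def decide(proposal: Proposal) -> str:
    """Return 'execute' only when every authority limit is satisfied."""
    if proposal.action not in APPROVED_ACTIONS:
        return "escalate"  # action type is outside the agent's mandate
    if proposal.amount_aud > MAX_AMOUNT_AUD:
        return "escalate"  # financial impact exceeds the approved ceiling
    if proposal.confidence < MIN_CONFIDENCE:
        return "escalate"  # agent is not confident enough to act alone
    return "execute"


if __name__ == "__main__":
    print(decide(Proposal("release_payment", 1_200.0, 0.95)))
    print(decide(Proposal("release_payment", 12_000.0, 0.99)))
```

The design choice worth noting is that escalation is the default for anything outside the limits, so a new or unexpected action type is routed to a person rather than executed.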

Q. How much does it really cost to build an AI agent?

A. The cost to build an AI agent in Australia typically ranges from AUD 40,000 to AUD 600,000. Typical cost drivers include:

- Integration with ERP, asset, finance, or operational platforms

- Level of autonomy versus mandatory human approval

- Security, auditability, and data residency controls

- Ongoing monitoring, optimisation, and support

Enterprises that invest upfront in these areas generally avoid higher remediation costs later.

Q. How long does it take to create an AI agent?

A. Timelines for AI agent development in Australia depend less on development speed and more on organisational readiness. A tightly scoped agent can be delivered in 4-8 months, but enterprise-grade deployments typically take 12-18 months or more because they require integration, testing, governance sign-off, and adoption planning.

Many organisations underestimate the time needed for trust-building and operational alignment. Agents that are rushed into production often stall, while those introduced in stages tend to scale more smoothly.

Q. What are the most common enterprise use cases for AI agents in Australia?

A. AI agents are most effective where decisions are frequent, time-sensitive, and exception-heavy. Common use cases include:

- Compliance and reporting triage

- Operational risk and incident prioritisation

- Asset maintenance and scheduling decisions

- Financial reconciliations and exception handling

- Supply chain coordination during disruption

These areas benefit from consistency and speed while still requiring human oversight.

Q. How do AI agents comply with Australian data privacy regulations?

A. AI agents comply with Australian data privacy regulations through architecture-led controls, not policy statements alone. Enterprises enforce compliance by limiting agents to approved data sources, keeping sensitive data within Australian jurisdictions, applying role-based access, and logging every decision and action for auditability. Human escalation and override mechanisms ensure accountability remains with the organisation, not the system.
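
As a rough sketch of what architecture-led controls can look like in code, the example below gates every data request on a role-based allow-list and a residency check, and records each decision in an audit log. The role names, data source names, and region labels are hypothetical placeholders, and an in-memory list stands in for a real audit store.

```python
import json
from datetime import datetime, timezone

# Hypothetical allow-list: agent role -> data sources that role may read.
ROLE_SOURCES = {
    "claims_agent": {"claims_db", "policy_docs"},
    "finance_agent": {"ledger"},
}

# Hypothetical labels for regions treated as within Australian jurisdiction.
ALLOWED_REGIONS = {"au-sydney", "au-melbourne"}

# In-memory stand-in for an append-only audit store.
audit_log: list[str] = []


def request_access(role: str, source: str, region: str) -> bool:
    """Allow access only for approved sources in approved regions, and log it."""
    allowed = (
        source in ROLE_SOURCES.get(role, set())
        and region in ALLOWED_REGIONS
    )
    # Every decision is logged, whether access was granted or denied.
    audit_log.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "source": source,
        "region": region,
        "allowed": allowed,
    }))
    return allowed


if __name__ == "__main__":
    print(request_access("claims_agent", "claims_db", "au-sydney"))
    print(request_access("claims_agent", "ledger", "au-sydney"))
    print(len(audit_log), "audit entries recorded")
```

Denied requests are logged alongside granted ones, which is what makes the trail useful when an auditor asks what an agent attempted, not just what it did.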

Q. Can AI agents work with legacy systems that were never designed for automation?

A. Yes, but not through direct integration. Most successful deployments place AI agents behind middleware and orchestration layers that translate modern decision logic into safe, controlled system interactions.

This allows organisations to extend the life of legacy platforms while still modernising how decisions are made around them. Attempts to bypass this layer usually increase risk and slow adoption.
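
A simplified way to picture this middleware layer is an adapter that validates what the agent asks for and translates it into the only format the legacy platform accepts. In the sketch below, `LegacyWorkOrderSystem`, its 20-character fixed-width record format, and the priority codes are invented for illustration and do not describe any real product.

```python
class LegacyWorkOrderSystem:
    """Stand-in for a legacy platform that only accepts fixed-width records."""

    def __init__(self) -> None:
        self.records: list[str] = []

    def submit(self, record: str) -> None:
        if len(record) != 20:
            raise ValueError("record must be exactly 20 characters")
        self.records.append(record)


class WorkOrderMiddleware:
    """Validates agent requests and translates them for the legacy system."""

    ALLOWED_PRIORITIES = {"LOW", "MED", "HIGH"}

    def __init__(self, legacy: LegacyWorkOrderSystem) -> None:
        self.legacy = legacy

    def create_work_order(self, asset_id: str, priority: str) -> bool:
        # Reject anything the legacy system cannot safely represent.
        if priority not in self.ALLOWED_PRIORITIES:
            return False
        if not asset_id.isalnum() or len(asset_id) > 10:
            return False
        # Translate to the fixed-width layout: 10 chars asset, 10 chars priority.
        record = f"{asset_id:<10}{priority:<10}"
        self.legacy.submit(record)
        return True


if __name__ == "__main__":
    legacy = LegacyWorkOrderSystem()
    middleware = WorkOrderMiddleware(legacy)
    print(middleware.create_work_order("PUMP42", "HIGH"))
    print(middleware.create_work_order("PUMP42", "URGENT"))
    print(legacy.records)
```

The agent never calls `submit` directly; it only sees `create_work_order`, so malformed or out-of-policy requests are stopped before they reach the legacy platform.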

Q. What is the biggest mistake enterprises make when building AI agents?

A. The most common mistake is assuming the hardest part is the technology. In reality, unclear ownership and poorly defined authority limits cause far more problems than model performance. When teams do not know who is responsible for an agent’s decisions, trust erodes quickly. Enterprises that address accountability early tend to scale confidently. Those that do not often pause or abandon initiatives altogether.

Q. How do you create an AI agent?

A. Here is a step-by-step process to create an AI agent:

- Identify Needs and High-Impact Enterprise Use Case

- Define AI Agent Features Aligned With AU Enterprise Needs

- Select the Right AI Agent Architecture

- Design the Enterprise Integration Layer

- Build Data Pipelines and Context Management

- Implement Governance, Risk, and Safety Controls

- Monitor, Maintain, and Continuously Optimise the AI Agent

For a detailed walkthrough of each step, refer to the step-by-step process described earlier in this article.

Q. What are some popular AI agent examples?

A. Popular AI agent examples include Microsoft Copilot agents, Salesforce Einstein agents, ServiceNow AI agents, IBM Watson Orchestrate, AWS Bedrock agents, and Darktrace Autonomous Response.

These agents operate with defined authority, integrate with enterprise systems, and execute tasks or decisions within governed boundaries rather than simply responding to prompts.
