- What Is AI API Integration? (And Why It Matters Now)

- How AI API Integration Accelerates Digital Transformation

- Adds intelligence without rebuilding

- Reduces time-to-market

- Enables real-time decisions

- Improves CX and agent productivity

- Continuously improves

- Flexible scaling with governance built-in

- Turns capabilities into reusable building blocks

- Accelerates experimentation and innovation

- Improves cross-channel consistency

- The compounding effect

- What high performers do differently

- Types of AI APIs in Digital Transformation

- 1. NLP & Large Language Model (LLM) APIs

- 2. Speech-to-Text (Including Ambient Clinical Listening)

- 3. Computer Vision APIs

- 4. Recommendation & Personalization APIs

- 5. Predictive Analytics & Forecasting APIs

- 6. Sentiment & Social Listening APIs

- 7. Anomaly & Threat Detection APIs

- 8. Agentic & Orchestrated Workflow APIs (Emerging)

- Step-by-Step Process for AI API Integration

- Use Cases Across Key Industries (US-Led Examples)

- 1. YouCOMM (Healthcare – US in-hospital communication)

- 2. JobGet (Workforce/Marketplace – US job platform)

- 3. MyExec (SMB AI-Business Consultant)

- 4. Ralph Lauren (Luxury Retail – US)

- 5. Walmart (Mass Retail – US)

- Technical Considerations & Integration Requirements

- Business Frictions AI API Integration Eliminates

- AI API Integration vs. Custom AI Development

- Cost of AI API Integration (Ranges & Levers)

- Cost Drivers

- Implementation Mistakes That Slow Results

- Treating APIs as “plug-and-play” without proper evaluation

- No data-quality plan - “garbage in, confident nonsense out”

- Weak governance - unclear ownership, shadow usage, audit gaps

- Ambiguous terms & claims - regulatory risk if messaging over-promises

- Vendor lock-in - no abstraction layer, no migration path

- How to Choose the Right AI API Provider

- Accuracy & Latency SLAs (by use case)

- Security & Data Use Terms

- Compliance Readiness (HIPAA, GLBA, sector controls)

- Operational Maturity

- Observability (usage, quality, and cost telemetry)

- Commercial Structure & Pricing Transparency

- Map Your Evaluation to NIST AI RMF

- How This Looks in Practice

- Future Trends to Watch

- How Appinventiv Accelerates AI API Integration Success

- FAQs

Key takeaways:

- AI API integration upgrades existing systems with language, vision, and decision-making capabilities without re-platforming or rewriting legacy code.

- Because training, hosting, and updating are offloaded to providers, enterprises reach production-grade outcomes faster with lower engineering overhead.

- When intelligence sits inside the flow of work, decisions accelerate, errors shrink, and customer experience becomes consistent across channels.

- Gateways, versioning, audit trails, scope control, and human-in-the-loop thresholds prevent drift, reputational risk, and compliance surprises.

- Reusable summarization, classification, and recommendation endpoints create shared intelligence blocks, reducing duplication and compounding efficiency as more teams adopt them.

Every enterprise wants the same outcomes from digital transformation: faster decisions, leaner operations, and experiences customers actually feel. Yet most teams are stuck between legacy systems, data silos, and scarce AI talent. The fastest way through that gridlock isn’t to rebuild everything from scratch; it’s to add intelligence through APIs that plug into the stack you already run. That is where AI API integration moves fastest: no replatforming required.

US enterprises, in particular, are feeling the productivity squeeze and moving from proof-of-concept to production AI that ships value in weeks, not quarters. AI-based API integration makes that possible by turning discrete capabilities like language, vision, recommendations, and predictions into composable services your products and workflows can call on demand.

Surveys show adoption is broad and growing, but value concentrates where companies pair integration discipline with strong governance—treating AI API integration for digital transformation as an engineering practice with clear guardrails.

What Is AI API Integration? (And Why It Matters Now)

AI API integration means consuming external or managed AI capabilities (LLMs, speech, vision, recommendations, anomaly detection) through secure APIs, then orchestrating them inside your applications and workflows.

You keep control of business logic and data contracts while offloading model training, hosting, and updates to a provider. This shrinks time-to-value and lowers total cost of ownership compared with building bespoke models everywhere, while still letting you standardize access, security, logging, and billing across teams. That is why AI in API integration is now the practical default.

McKinsey data backs the urgency: enterprise use of gen-AI jumped sharply through 2024–2025, with leadership pushing for measurable productivity gains and managed risk—turning AI API integration for digital transformation from pilot to platform.

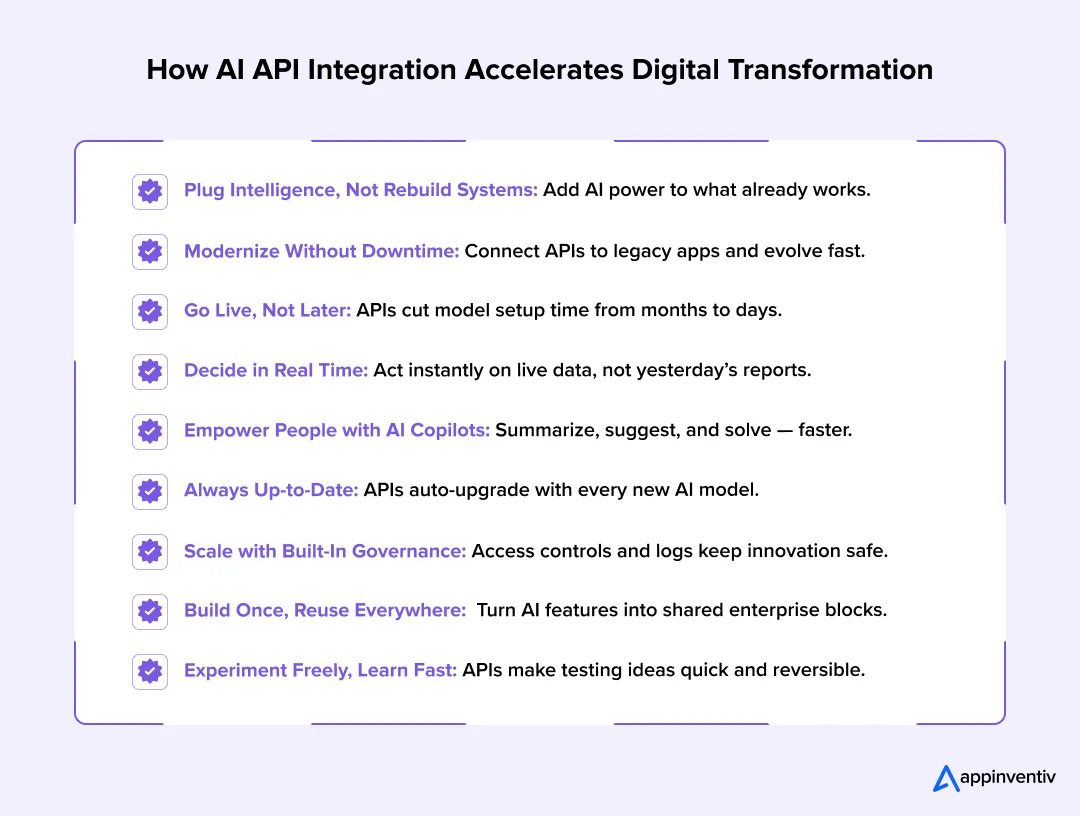

How AI API Integration Accelerates Digital Transformation

Digital transformation isn’t just a technology upgrade; it’s a race against rising customer expectations, shrinking attention spans, and operational complexity. The enterprises pulling ahead aren’t rebuilding everything from scratch; they’re plugging intelligence into the systems they already rely on. This is AI in API integration at work: AI-powered API integration turns ordinary workflows into adaptive, data-driven experiences without slowing teams down.

Adds intelligence without rebuilding

Legacy modernization often stalls because enterprises assume every system must be upgraded before intelligence can be layered on top. This “rip and replace” mindset adds risk, introduces downtime, and drains budgets before value appears.

AI APIs change the sequence entirely.

They connect to the interfaces your teams already use — EMRs in healthcare, core banking systems in BFSI, ERPs in manufacturing, or e-commerce engines in retail. With minimal re-architecture, these systems can suddenly classify data, summarize documents, detect fraud, and recommend next actions.

Even more important: AI APIs respect the boundaries of legacy systems. They don’t demand schema overhauls, data migrations, or complex database rewrites. Enterprises keep their institutional memory intact while gradually modernizing workflows at the edges. A similar evolutionary approach is outlined in our guide on legacy application modernization and cloud-native migration.

This evolutionary approach reflects an AI-driven API integration strategy, modernizing at the edges while core systems remain stable. This reduces friction, accelerates adoption, and avoids technical shock to the business.

Reduces time-to-market

Digital transformation fails when it moves slower than the competition. Traditional AI development cycles — provisioning GPUs, training models, building MLOps tooling, hiring specialists — often take months before the first result appears.

AI APIs collapse the timeline dramatically.

Once a use case is selected, developers can plug capabilities directly into workflow surfaces: search bars, ticketing dashboards, contact-center consoles, underwriting portals, logistics systems, etc. Instead of worrying about infrastructure, teams focus on experience delivery — where transformation is actually felt.

Gateways and policy engines manage the rest—core to an AI-driven API integration strategy:

- request throttling

- authentication scope

- cost ceilings

- sandbox environments

- fallback logic

This means innovation happens continuously and is controlled by configuration rather than infrastructure. IT organizations shift from bottlenecks to enablement engines, empowering business units to experiment safely. These are tangible benefits of AI API integration for enterprises: faster releases, safer experimentation, and fewer infra roadblocks.
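To make “controlled by configuration” concrete, here is a minimal Python sketch of three of the gateway policies listed above: request throttling (a token bucket), a cost ceiling, and fallback logic. The class names, the fallback message, and the `call_model` callable are hypothetical stand-ins; real gateways and policy engines usually express these rules as configuration rather than application code.

```python
import time
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical message returned whenever a request is throttled, over budget, or fails.
FALLBACK = "We're handling a high volume of requests; a team member will follow up."


@dataclass
class TokenBucket:
    """Token-bucket throttle: refills `rate` tokens per second, holds at most `capacity`."""
    rate: float
    capacity: float
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


@dataclass
class GatewayPolicy:
    bucket: TokenBucket
    monthly_budget_usd: float
    spent_usd: float = 0.0

    def handle(self, prompt: str, call_model: Callable[[str], str], est_cost_usd: float) -> str:
        # 1. Request throttling: reject bursts beyond the configured rate.
        if not self.bucket.allow():
            return FALLBACK
        # 2. Cost ceiling: refuse calls that would push spend past the budget.
        if self.spent_usd + est_cost_usd > self.monthly_budget_usd:
            return FALLBACK
        try:
            reply = call_model(prompt)
        except Exception:
            # 3. Fallback logic: degrade gracefully instead of failing the workflow.
            return FALLBACK
        self.spent_usd += est_cost_usd
        return reply
```

Because every rule lives in one policy object, tightening a limit or raising a budget is a settings change, not a redeploy of the calling application.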

Enables real-time decisions

Historically, enterprise systems were designed to record data — not react to it. Decisions were batch-processed overnight, requiring analysis the next day. By then, opportunities were gone.

AI APIs let intelligence sit in the flow of work. By pairing machine learning API integration with live signals, decisions happen as events occur rather than in overnight batches.

- A transaction that looks suspicious is flagged instantly.

- A patient’s risk score updates during the exam.

- A ticket is prioritized the moment it’s submitted.

- A warehouse system suggests better pick routes before the next order arrives.

This shift moves the organization from reactive to proactive. Routine cases no longer wait for human reconciliation; the system can handle most of them and escalate only the edge cases. That is digital transformation’s ultimate promise: fewer delays, fewer bottlenecks, and fewer avoidable errors.
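A minimal sketch of that escalation pattern, assuming the AI API returns a confidence score with each decision (many do not natively, and raw scores usually need calibration before they can drive thresholds). The 0.9 threshold and the `Decision` and `route` names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str         # e.g. "approve" or "flag"
    confidence: float  # score in [0, 1], assumed to be returned by the AI API


def route(decision: Decision, auto_threshold: float = 0.9) -> str:
    """Auto-apply confident decisions; send everything else to a human queue."""
    if not 0.0 <= decision.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if decision.confidence >= auto_threshold:
        return f"auto:{decision.label}"
    return "human_review"


batch = [Decision("approve", 0.97), Decision("flag", 0.55), Decision("approve", 0.91)]
routed = [route(d) for d in batch]
print(routed)  # ['auto:approve', 'human_review', 'auto:approve']
```

Raising or lowering `auto_threshold` trades automation rate against review workload, which is why the threshold belongs in governed configuration.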

Improves CX and agent productivity

Customer experience is often lost in operational complexity. Agents spend too much time searching knowledge bases, rewriting documentation, and wrestling with legacy interfaces.

AI APIs create copilot assistance across channels:

- Summarize customer history before the call

- Suggest empathetic responses

- Detect sentiment in real-time

- Surface relevant policies instantly

- Draft follow-up emails

This lifts emotional labor off the agent and reduces cognitive load. This is where the benefits of AI assistant API integration show up first: shorter handle times and more consistent resolutions.

For customers, these improvements are invisible, but they feel the change through:

- shorter waits,

- more accurate answers,

- higher consistency,

- fewer escalations.

Internally, the effect compounds:

- Knowledge gaps shrink

- Onboarding accelerates

- Tribal knowledge becomes accessible

The organization becomes more resilient because expertise is augmented, not isolated in a few senior employees. The same improvements are visible in enterprise contact centers adopting AI-powered customer service automation.

Continuously improves

Traditional systems degrade over time. APIs improve.

When AI providers ship new model versions with safer guardrails, lower latency, and fresher knowledge, enterprises can adopt the upgrade without rebuilding anything. No GPU procurement. No training cycles. No container orchestration shifts. It’s the equivalent of replacing the engine while the plane is flying.

This unlocks a new operating model:

- version pinning to test improvements safely

- feature flags to target specific user cohorts

- fallback routing if quality drops
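Version pinning, cohort-based feature flags, and fallback routing can be combined in a few lines. This sketch assumes hypothetical version identifiers (`model-v1`, `model-v2`) and a `candidate_healthy` signal fed by your own quality checks; it hashes user IDs so each user consistently sees the same variant during a staged rollout.

```python
import hashlib

PINNED = "model-v1"     # hypothetical version currently trusted in production
CANDIDATE = "model-v2"  # hypothetical new version under staged rollout


def in_cohort(user_id: str, rollout_percent: int) -> bool:
    """Deterministically bucket users into 0-99 so each user sees a stable variant."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return bucket < rollout_percent


def pick_version(user_id: str, rollout_percent: int, candidate_healthy: bool) -> str:
    # Fallback routing: if quality checks fail, everyone returns to the pinned version.
    if not candidate_healthy:
        return PINNED
    # Feature flag: only the configured percentage of users gets the candidate.
    return CANDIDATE if in_cohort(user_id, rollout_percent) else PINNED
```

Rollback then means flipping `candidate_healthy` or setting `rollout_percent` to 0, with no code change on the consuming side.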

It turns AI into an evergreen capability: always current, continuously optimized.

And because improvements arrive quietly, enterprises stay competitive without unpredictable budget spikes.

Flexible scaling with governance built-in

Scaling AI isn’t just a performance problem; it’s a risk and governance problem:

- Who can access which capabilities?

- Which data is allowed?

- What contexts are sensitive?

- What outputs require human approval?

API gateways solve these concerns early:

- rate limits contain runaway usage and cost

- logs ensure traceability

- scopes segment privileges

- audit trails simplify compliance

This means transformation can grow horizontally:

from one pilot → to multiple teams → to enterprise adoption

…without security debt accumulating behind the scenes.
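The scope and audit-trail controls above can be sketched as an allow-list check that records every decision. The service names, capability names, and in-memory `AUDIT_LOG` are hypothetical; in production, the gateway enforces scopes and ships audit records to durable, tamper-evident storage.

```python
import json
import time

# Hypothetical scope map: which calling service may invoke which AI capability.
SCOPES = {
    "support-console": {"summarize", "classify_intent"},
    "claims-service": {"summarize", "score_risk"},
}

# Append-only audit trail (in memory here purely for illustration).
AUDIT_LOG: list[str] = []


def authorize(service: str, capability: str) -> bool:
    """Check the caller's scope and log the decision, whether allowed or denied."""
    allowed = capability in SCOPES.get(service, set())
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(),
        "service": service,
        "capability": capability,
        "allowed": allowed,
    }))
    return allowed
```

Logging denials as well as approvals is what turns the trail into evidence during an audit: it shows the boundary was enforced, not just configured.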

This is exactly where non-API approaches collapse: they scale functionality before they scale responsibility. For highly regulated environments, we’ve published a detailed breakdown of compliance-centric AI architecture for BFSI.

Turns capabilities into reusable building blocks

Without APIs, every new AI use case becomes a custom project. Budgets balloon, timelines drift, and operational load compounds.

With API integration, teams publish intelligence internally:

- summarizeText

- scoreRisk

- classifyIntent

- recommendNextAction

- extractEntities

Suddenly, product squads share capabilities instead of duplicating effort. This is platform thinking: reusable components, standardized contracts, and governed access.

A single summarization endpoint might power:

- contact center transcripts

- claims notes

- compliance reports

- user research sessions

That’s digital transformation with economies of scale, and a prime AI API integration pattern.
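One way to publish shared capabilities is a small registry behind a uniform contract. In this sketch the decorator, the registry, and the naive first-sentence “summarizer” are hypothetical placeholders; a real `summarizeText` would wrap a call to an LLM API, but every consuming team would invoke it the same way.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class CapabilityResult:
    capability: str
    output: str
    version: str


_REGISTRY: Dict[str, Callable[[str], str]] = {}


def capability(name: str):
    """Decorator that publishes a function as a shared, named capability."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        _REGISTRY[name] = fn
        return fn
    return register


@capability("summarizeText")
def summarize_text(text: str) -> str:
    # Placeholder logic: a real implementation would call an LLM API here.
    return text.split(".")[0].strip() + "."


def invoke(name: str, payload: str, version: str = "v1") -> CapabilityResult:
    """Single entry point every product squad uses, whatever model sits behind it."""
    if name not in _REGISTRY:
        raise KeyError(f"unknown capability: {name}")
    return CapabilityResult(name, _REGISTRY[name](payload), version)
```

Contact center transcripts and claims notes would both call `invoke("summarizeText", ...)`; swapping the underlying model changes one function, not every consumer.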

Accelerates experimentation and innovation

Transformation dies when experimentation becomes expensive.

AI APIs are reversible:

- If a prompt fails, fix the prompt.

- If a pattern fails, switch the routing.

- If a model fails, change providers.

The organization becomes anti-fragile, learning from small failures instead of hiding them.
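Switching providers stays cheap only if provider-specific code sits behind a thin abstraction. This sketch assumes a hypothetical `complete(prompt)` interface and a `FakeProvider` used purely for illustration; real SDKs differ in method names and error types, so each provider would get its own small adapter.

```python
from typing import Protocol, Sequence


class ProviderUnavailable(Exception):
    """Raised by an adapter when its provider times out or errors."""


class CompletionProvider(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


def complete_with_failover(providers: Sequence[CompletionProvider], prompt: str) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, text) from the first success."""
    errors = []
    for provider in providers:
        try:
            return provider.name, provider.complete(prompt)
        except ProviderUnavailable as exc:
            errors.append(f"{provider.name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


class FakeProvider:
    """Stand-in adapter for illustration; a real one would wrap a vendor SDK."""

    def __init__(self, name: str, healthy: bool) -> None:
        self.name = name
        self.healthy = healthy

    def complete(self, prompt: str) -> str:
        if not self.healthy:
            raise ProviderUnavailable("timeout")
        return f"[{self.name}] {prompt}"
```

Changing providers then means reordering or replacing entries in the list, which is exactly the reversibility the section describes.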

Product teams can:

- try new user flows

- test automated approvals

- tune recommendations

- refine onboarding questions

…all without new infrastructure investment.

This encourages decentralization: innovation moves to the edges, where teams know the business best.

Improves cross-channel consistency

Before AI APIs, different channels evolved organically:

- The help center taught one tone.

- The chat agent used another script.

- The email follow-up was built years ago.

- The chatbot never matched the support team’s tone.

API integration centralizes reasoning and language models:

- one source of truth

- one summarization logic

- one sentiment detection system

- one recommendation strategy

When a customer moves between channels, the intelligence moves with them. Consistency by design, not by coincidence, is a clear benefit of using AI APIs in digital transformation.

The compounding effect

Each benefit on its own is incremental. Combined, they change how an enterprise behaves:

- Workflows shorten.

- Decisions accelerate.

- Errors shrink.

- Customer loyalty grows.

- Operational costs flatten.

Digital transformation stops being an IT mandate and becomes an organizational reflex.

Enterprises that embrace this architecture (governed access, measurable KPIs, reusable patterns, and runtime observability) tend to see higher ROI and faster competitive differentiation.

That is why the smartest organizations are not replacing their stack. They’re evolving it intelligently, one API at a time, which is what AI APIs for enterprise transformation look like in practice.

What high performers do differently

Enterprises that integrate AI APIs as a platform capability, with governed access, clear KPIs, reusable patterns, and runtime observability, report disproportionate gains in productivity and customer satisfaction, according to McKinsey’s 2025 enterprise adoption findings. This is the business impact of AI-powered API integration when done right.

Read our enterprise AI development benefits & challenges guide to see how integration strategies fit into broader AI adoption use cases and platform decisions.

Types of AI APIs in Digital Transformation

AI APIs act like plug-in upgrades for the systems you already run. Instead of ripping out legacy tools, they quietly add intelligence where it’s needed most. When teams combine different types of APIs, they can improve decisions, shorten workflows, and help people focus on work that actually matters. These are the building blocks of practical enterprise transformation.

1. NLP & Large Language Model (LLM) APIs

These APIs allow software to read, write, and understand language in a way that feels natural.

They’re helpful wherever people get bogged down by long documents or scattered information, which is a classic AI API integration use case.

- Build conversational assistants that answer questions employees would normally escalate.

- Turn dense policies, contracts, or case notes into clear summaries.

- Help teams search internal knowledge with simple, everyday language.

- Assist developers with code suggestions and clean-up tasks.

2. Speech-to-Text (Including Ambient Clinical Listening)

Not everything worth capturing is typed. Speech APIs convert conversations into structured text, making it easier to document and reference later. This is especially valuable in fast-paced environments.

- Produce real-time transcripts during calls or bedside conversations.

- Create clear, searchable meeting notes without manual writing.

- Suggest compliant language or follow-up steps as conversations unfold.

Ambient workflows like these are a common application of AI APIs for enterprise transformation.

3. Computer Vision APIs

These APIs “see” what’s happening through cameras and images. They cut down on manual checks and give teams a clearer picture of what’s going on across physical environments.

- Spot product defects as they move along a manufacturing line.

- Track shelf conditions in retail to prevent out-of-stock moments.

- Raise alerts when safety rules are broken on shop floors.

4. Recommendation & Personalization APIs

Many customers feel overwhelmed by choices. Recommendation APIs narrow options based on behavior and preference, often improving satisfaction and revenue at the same time. It’s a practical form of AI API integration that personalizes experiences without heavy rebuilds.

- Offer relevant products or content suggestions in real time.

- Trigger small add-ons that increase cart value.

- Guide banking customers with prompts tuned to their financial habits.

5. Predictive Analytics & Forecasting APIs

These APIs look ahead rather than reacting after something goes wrong. With the right signals, organizations can reduce waste and spot issues early.

- Forecast demand so inventory and staffing stay balanced.

- Predict which customers may churn and start outreach sooner.

- Flag unusual spending patterns before fraud escalates.

- Detect early signs of equipment failure, avoiding surprise downtime.

6. Sentiment & Social Listening APIs

Customers share how they feel across dozens of channels. Sentiment APIs gather that signal, detect tone, and help brands respond thoughtfully.

- Read tone in chat, email, and review text.

- Highlight the topics that keep surfacing across tickets.

- Notify marketing or support when negative chatter spikes online.

7. Anomaly & Threat Detection APIs

Not every risk is obvious. These APIs look for unusual patterns buried in data streams and raise flags before issues turn into incidents.

- Notice strange login attempts or spending habits.

- Catch network activity that doesn’t match normal traffic.

- Watch IoT devices for sensor readings that fall outside expected ranges.

8. Agentic & Orchestrated Workflow APIs (Emerging)

These APIs take automation further by chaining tasks together instead of performing just one. They can plan, execute, check their own work, and ask for help when needed, a clear case of intelligent automation through AI APIs.

- Search internal data, update records, and draft summaries in a single request.

- Trigger approvals or escalate exceptions without extra workflow tools.

- Operate with cost guardrails, permission checks, and audit trails.

Step-by-Step Process for AI API Integration

Implementing AI through APIs takes more than wiring endpoints into applications. Mature teams follow a structured approach that balances experimentation with governance, ensures data quality, and scales safely across the enterprise.

1. Discovery & Prioritization
   - Pick 2–3 high-leverage use cases with measurable KPIs (AHT, CSAT, conversion, error reduction).
   - Score opportunities by business impact, data readiness, risk profile, and feasibility.
   - Avoid “pilot sprawl” by delivering early wins that build leadership momentum.

2. Data Readiness
   - Standardize schemas and remove inconsistencies before prompts hit the model.
   - Redact or tokenize PII/PHI for compliance (especially in healthcare and finance).
   - Define retention policies and identify upstream data-quality gaps early.

3. Provider Evaluation
   - Compare accuracy, latency SLAs, region availability, and fine-tuning options.
   - Review data-use terms and default training policies to avoid privacy surprises.
   - Validate compliance support (HIPAA, GLBA), logging, auditability, and explainability.

4. Sandbox & Proof-of-Concept (POC)
   - Test edge cases, ambiguous prompts, slang, multilingual queries, and formatting differences.
   - Measure latency variance, hallucination rate, and cost per inference.
   - Use dashboards to refine prompts and safety filters before customer exposure.

5. Governance Baseline
   - Align runtime controls with the NIST AI RMF: traceability, incident handling, human oversight.
   - Create access policies, cost alerts, and anomaly monitoring.
   - Define human-in-the-loop thresholds for sensitive decisions.
   - Address the key challenges in modern API integration with runtime controls, not just policy slides.

6. Production Integration
   - Enforce auth, quotas, version routing, and fallback logic at the API gateway.
   - Track structured logs, token costs, latency trends, and exception patterns.
   - Use golden prompts and blue-green deployments to prevent UX disruption.

7. Continuous Improvement
   - Monitor drift, update prompts, and refresh retrieval context regularly.
   - Route low-risk tasks to cheaper models to manage spending.
   - Roll out performance upgrades behind feature flags to reduce operational risk.
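The redaction step in Data Readiness can be prototyped with typed placeholders, as in this minimal Python sketch. The three regular expressions are illustrative assumptions that miss many real PII/PHI forms (names, addresses, medical record numbers); regulated workloads typically rely on a vetted detection service rather than hand-written patterns.

```python
import re

# Illustrative patterns only; not a complete or compliant PII/PHI detector.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with typed placeholders and count what was removed per type."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


clean, counts = redact("Reach jane.doe@example.com or 555-123-4567; SSN 123-45-6789.")
print(clean)  # Reach [EMAIL] or [PHONE]; SSN [SSN].
```

Typed placeholders keep the prompt readable for the model, and the per-type counts can feed the data-quality dashboards mentioned in the POC step.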

Use Cases Across Key Industries (US-Led Examples)

AI API integration delivers meaningful business value when paired with domain context, regulatory awareness, and tight feedback loops. In the United States, adoption has accelerated in sectors where digital transformation is being pushed by labor shortages, rising compliance scrutiny, cost pressures, and consumer expectations for personalization. Value compounds when AI APIs for enterprise transformation are layered on domain logic.

1. YouCOMM (Healthcare – US in-hospital communication)

An in-hospital patient-care app built by Appinventiv that lets patients use voice commands, head gestures, or tablet interfaces to alert nurses, bypassing outdated call-bell systems.

Relevance to AI API integration:

- Real-time communication amplifies urgency; intelligent routing APIs can classify request types, escalate emergencies, and estimate wait times.

- The existing hospital ecosystem remains intact (HMS, nurse dashboards); AI APIs layer additional intelligence rather than replacing systems.

- Strong compliance context: PHI, audit logs, fallback logic (three-nurse assignment chain) show how integration and governance matter.

Key outcome: a 60% improvement in nurse response time, with multiple US hospitals using the solution. It is an exemplar AI API integration use case in healthcare.

2. JobGet (Workforce/Marketplace – US job platform)

A platform built by Appinventiv for hourly job seekers and employers. The app uses AI matching technology (location-specific, simplified profiles) to reduce job-search time from months to days.

Relevance to AI API integration:

- Matching algorithms appear as black-box features but can be offered via AI APIs (profile-scoring, job-recommendation) that slot into the workflow.

- The employer/employee messaging and video-interview flows remain unchanged — intelligence is layered in, not re-architected.

Key outcomes: over 2 million downloads, 50,000+ active companies, and $52M in Series B funding.

3. MyExec (SMB AI-Business Consultant)

Another Appinventiv case: a virtual AI consultant built for SMBs to analyze business documents, extract strategic insights, and guide decisions.

Relevance to AI API integration:

- The intelligent core (document analysis, summarization, recommendation) can be exposed via APIs so multiple SMB apps or products consume the same intelligence layer.

- It integrates into existing SMB platforms rather than requiring brand-new systems.

- This shows a cross-industry pattern: intelligence as a service, not vertical rebuild.

4. Ralph Lauren (Luxury Retail – US)

The luxury brand deployed “Ask Ralph”, an AI conversational tool in its U.S. mobile app, built in partnership with Microsoft and OpenAI, providing style recommendations aligned to inventory and brand voice.

Relevance to AI API integration:

- The recommendation/styling intelligence plugs into the mobile app (existing product surface)—an AI API backend handling prompts and inventory logic.

- Maintains control of brand style and inventory tie-in, demonstrating that AI chatbot API integration must obey business logic and brand guardrails.

- It shows how even luxury retail uses API-based intelligence rather than “build from scratch”.

For more on intelligent retail experiences, explore our deep dive into 10 use cases and benefits of AI in the retail industry.

5. Walmart (Mass Retail – US)

The retail giant partnered with OpenAI to allow customers to shop via ChatGPT’s “Instant Checkout” feature, accessing Walmart’s catalog via conversational interface.

Relevance to AI API integration:

- The catalog/checkout intelligence is exposed through an AI-driven conversational interface rather than traditional e-commerce flow.

- Existing logistics/inventory/payment systems remain — the intelligence layer is overlaid (AI API + conversational front-end).

This also previews conversational shopping in the style of Perplexity AI API integrations, where the catalog is the context.

Technical Considerations & Integration Requirements

Building AI API integrations isn’t simply about sending prompts and waiting for responses. The most successful enterprises treat integration as a production-grade discipline, where reliability, security, and governance are engineered into every call. These technical considerations establish the foundation for safe scaling, cost efficiency, and predictable user experience — especially as AI workloads expand across product teams and business units.

- Authentication & IAM: Enterprises use scoped API keys, OAuth tokens, and least-privilege permissions to prevent unauthorized access. Token rotation and identity logging offer traceability during audits, ensuring only approved services can invoke sensitive AI capabilities—table stakes in AI API development and integration.

- Rate Limits & Quotas: Traffic shaping protects downstream systems from overload and prevents runaway usage costs. When limits are reached, graceful fallback responses maintain user experience instead of crashing workflows.

- Latency Optimization: To feel real-time, AI calls must return fast. Routing requests to regional endpoints, caching common outputs, batching multiple queries, and streaming partial responses all help maintain low latency in production. These considerations become even more critical when planning cloud computing with AI.

- Schema & Prompt Contracts: Consistent input and output formats prevent prompt fragility. Schema contracts define how data should be structured, while defensive parsing prevents malformed responses from breaking downstream logic.

- LLM Contexting (RAG) & Tool Use: Retrieval-Augmented Generation pulls relevant enterprise knowledge into the prompt, reducing hallucinations and aligning responses with policy. Tool use lets models call calculators, search systems, or business logic instead of guessing.

- Versioning: Models evolve, and behavior can shift unexpectedly. Version pinning, staged rollouts, and instant rollback options keep production stable when upgrading to new releases. This directly reduces the AI API integration cost for businesses by avoiding regressions.
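Defensive parsing is easiest to see with a concrete schema contract. This sketch assumes a hypothetical intent-classification prompt that asks the model to reply with JSON such as `{"intent": "billing", "confidence": 0.82}`; anything malformed or outside the allowed values falls back to a safe default instead of breaking downstream logic.

```python
import json
from dataclasses import dataclass

# Hypothetical contract: the model must return one of these intents plus a confidence score.
ALLOWED_INTENTS = {"billing", "technical", "cancellation", "other"}


@dataclass(frozen=True)
class IntentResult:
    intent: str
    confidence: float


SAFE_DEFAULT = IntentResult("other", 0.0)


def parse_intent(raw: str) -> IntentResult:
    """Validate a model's JSON reply against the contract; never raise on bad output."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return SAFE_DEFAULT  # prose instead of JSON, truncated output, etc.
    if not isinstance(data, dict):
        return SAFE_DEFAULT
    intent = data.get("intent")
    confidence = data.get("confidence")
    if not isinstance(intent, str) or intent not in ALLOWED_INTENTS:
        return SAFE_DEFAULT
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        return SAFE_DEFAULT
    if not 0.0 <= confidence <= 1.0:
        return SAFE_DEFAULT
    return IntentResult(intent, float(confidence))
```

Routing the safe default to a human queue, rather than guessing, keeps malformed replies visible in logs without disrupting the user-facing workflow.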

Business Frictions AI API Integration Eliminates

Digital transformation often stalls not because organizations lack data, but because intelligence is trapped in outdated workflows and siloed systems. AI APIs remove these friction points by adding smarter decision-making directly into existing tools. The result is faster execution, fewer manual steps, and more consistent customer experiences—the measurable business impact of AI-powered API integration.

- Data silos & slow decisions: When key insights live in different tools, teams waste time gathering and reconciling information. AI API integration centralizes intelligence without rebuilding pipelines, enabling fast judgment calls inside the apps people already use. According to McKinsey’s 2025 report, organizations see stronger performance when intelligence is embedded at the workflow level.

- Manual workflows: Tasks like classification, summarization, routing, or compliance review still consume hours of human effort. AI APIs automate these steps consistently and at scale, reducing error rates and freeing employees to focus on cases that require empathy, reasoning, or negotiation.

- Fragmented customer journeys: Customers switch between web, app, chat, and email—and judge brands on how smooth that experience feels. AI APIs bring real-time context and personalization across channels, reducing friction and lowering the likelihood of churn triggered by inconsistent support.

- Legacy system constraints: Replacing core systems is costly, risky, and often slows transformation. AI APIs offer a lighter, safer path by wrapping intelligence around existing platforms instead of rebuilding them. This is the role of AI APIs in digital transformation: modernize gradually without disrupting operations. Gartner predicts 40% of enterprise applications will feature task-specific AI agents by 2026, reflecting a shift toward augmentation rather than full re-platforming.

- High AI build costs & talent scarcity: Training AI models from scratch requires specialized talent, expensive GPU infrastructure, and long experimentation cycles. AI APIs let organizations access advanced capabilities instantly and redirect internal teams toward prompt design, governance, and measurable business outcomes. McKinsey’s 2025 findings show companies that scale responsibly—beyond pilots—unlock higher ROI.

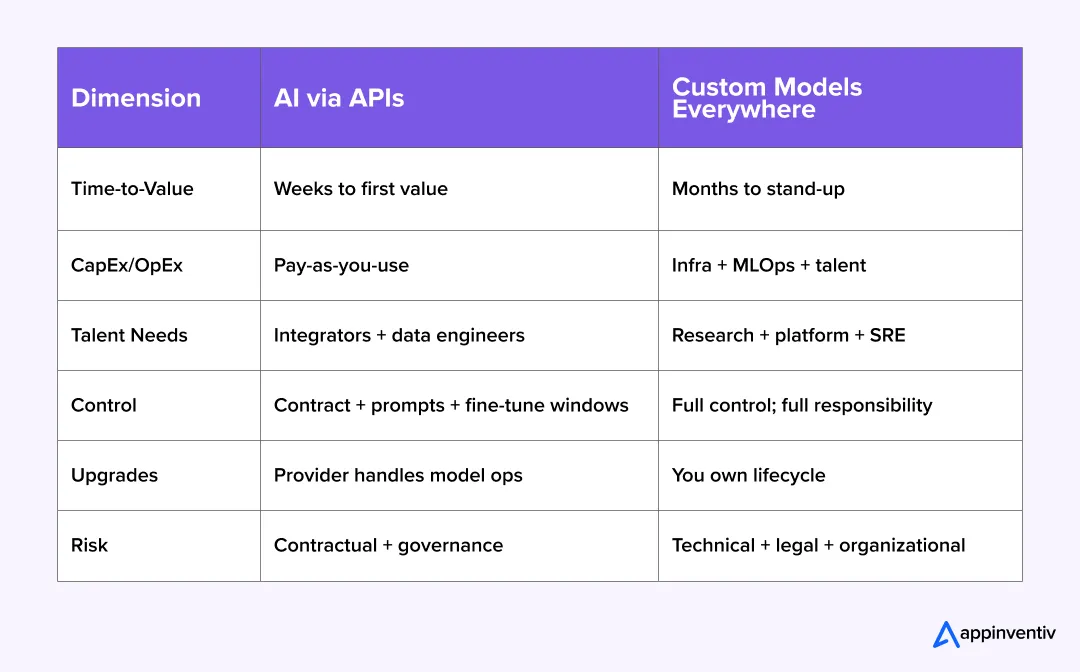

AI API Integration vs. Custom AI Development

For most enterprises, the decision comes down to speed versus control. AI API integration offers ready-to-use intelligence that can be deployed in weeks, while custom AI development delivers full ownership at the cost of time, infrastructure, and specialized talent. The gap between the two models has widened as API-first approaches prove faster to operationalize and easier to govern.

For enterprises focused on agility and predictable ROI, AI APIs deliver the quickest path to transformation—embedding language, vision, and reasoning into workflows with minimal friction. On the other hand, organizations building highly specialized or regulated products may still opt for custom AI models to retain full control of training data, intellectual property, and system behavior.

Gartner’s outlook on surging API demand driven by AI supports an “APIs-first” approach for many use cases; reserve custom builds for clear competitive moats—maximizing the benefits of using AI API in digital transformation.

Cost of AI API Integration (Ranges & Levers)

AI API integration costs for businesses vary based on scope, traffic volume, regulatory needs, and how deeply the capabilities are embedded into workflows. Most organizations see two investment phases: upfront engineering to integrate, followed by ongoing operational spend tied to API consumption, evaluation, and optimization.

Cost Drivers

Engineering (Discovery-to-POC-to-Production Hardening): Early work includes use-case selection, schema contracts, prompt design, and prototyping. Once value is proven, additional effort goes into latency tuning, fallback logic, caching, and integration with authentication systems. This is typically where teams stabilize performance and remove edge-case failure modes.

API Usage (Per-token / Per-minute / Per-call Pricing): Ongoing cost is usually tied directly to consumption: more workflows and users mean more model calls. Enterprises often budget by workflow volume (e.g., support tickets per month) and set quotas inside the API gateway. Streaming or batch requests can reduce repeated calls for the same context.
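Budgeting by workflow volume reduces to simple arithmetic once you know average token counts per call. The prices and token counts below are hypothetical placeholders, not any provider’s actual rates; substitute your vendor’s published pricing.

```python
def monthly_cost_usd(
    calls_per_month: int,
    input_tokens_per_call: int,
    output_tokens_per_call: int,
    usd_per_million_input: float,
    usd_per_million_output: float,
) -> float:
    """Estimate monthly spend for one workflow under per-token pricing."""
    input_cost = calls_per_month * input_tokens_per_call * usd_per_million_input / 1_000_000
    output_cost = calls_per_month * output_tokens_per_call * usd_per_million_output / 1_000_000
    return input_cost + output_cost


def max_calls_for_budget(budget_usd: float, cost_per_call_usd: float) -> int:
    """Translate a budget into a call quota the API gateway can enforce."""
    if cost_per_call_usd <= 0:
        raise ValueError("cost per call must be positive")
    return int(budget_usd // cost_per_call_usd)


# Hypothetical workload: 50,000 support tickets per month, ~1,500 input and
# ~300 output tokens per summary, at placeholder prices of $1.00 / $4.00 per million tokens.
tickets = 50_000
estimate = monthly_cost_usd(tickets, 1_500, 300, 1.00, 4.00)
print(f"${estimate:,.2f}")  # $135.00
quota = max_calls_for_budget(100.00, estimate / tickets)
```

Note that output tokens often cost more per token than input tokens, so verbose responses can dominate spend even when prompts are long.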

Data Pipelines (Redaction, Vector Storage, Retrieval Ops): RAG (retrieval-augmented generation) patterns require storing embeddings, maintaining vector databases, and redacting sensitive fields before passing text into a model. These introduce cloud storage costs, indexing overhead, and retrieval queries at runtime—but dramatically improve quality and reduce hallucinations.
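A toy version of the retrieval step shows the mechanics of RAG. Production systems use an embedding model and a vector database; this self-contained sketch swaps in simple term-frequency vectors and cosine similarity so it runs without external services, and the documents and prompt template are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Term-frequency vector (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Ground the model in retrieved context before asking the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are issued within 14 days of a return.",
    "Our headquarters are in Boston.",
    "Return shipping labels are free for members.",
]
print(build_prompt("How many days until refunds are issued?", docs))
```

In production, the vectors would be precomputed and stored in the vector database, which is where the storage, indexing, and retrieval-query costs described above come from.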

Observability & Evaluation (Logs, Traces, Quality Checks): Prompt/response logs, token dashboards, latency traces, and drift detection help teams understand where accuracy drops or costs spike. Quality evaluation pipelines ensure that model version changes don’t introduce regressions. These tools save money downstream by catching issues before they affect customers, and they keep the business impact of AI-powered API integration measurable.
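One simple form of regression check is a promotion gate: score a fixed evaluation set against both the currently pinned model and a candidate version, and block the upgrade if the candidate scores meaningfully lower. The exact-match scorer and the two-percentage-point tolerance below are assumptions chosen for illustration; many teams use rubric-based or model-graded scoring instead.

```python
from typing import Callable, List, Tuple

EvalCase = Tuple[str, str]  # (input prompt, expected output)


def accuracy(model: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Share of cases where the model's normalized output matches the expectation."""
    if not cases:
        raise ValueError("evaluation set is empty")
    hits = sum(1 for prompt, expected in cases
               if model(prompt).strip().lower() == expected.strip().lower())
    return hits / len(cases)


def promote_candidate(baseline: Callable[[str], str], candidate: Callable[[str], str],
                      cases: List[EvalCase], max_drop: float = 0.02) -> bool:
    """Allow promotion only if the candidate is at most `max_drop` below the baseline."""
    return accuracy(candidate, cases) >= accuracy(baseline, cases) - max_drop
```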

Governance (Policy Engines, Reviews, Incident Playbooks): Runtime guardrails such as content filters, approval gates, access audits, and human escalation steps help align deployments with frameworks like the NIST AI Risk Management Framework. They add modest overhead but avoid far more expensive risks tied to privacy, safety, and explainability.
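To show what a runtime guardrail can look like in code rather than in a policy document, here is a sketch that blocks drafts containing prohibited phrases and routes low-confidence drafts to a human reviewer. The blocked-terms list, the 0.7 threshold, and the assumption that your pipeline produces a confidence score are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class Action(Enum):
    RELEASE = "release"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


@dataclass
class Draft:
    text: str
    confidence: float  # assumed to come from your own scoring step


def apply_guardrails(draft: Draft, blocked_terms: Iterable[str],
                     min_confidence: float = 0.7) -> Action:
    """Decide whether a model draft is released, escalated to a person, or blocked."""
    lowered = draft.text.lower()
    if any(term.lower() in lowered for term in blocked_terms):
        return Action.BLOCK  # hard policy violation: never shown to the user
    if draft.confidence < min_confidence:
        return Action.HUMAN_REVIEW  # uncertain output: escalate
    return Action.RELEASE
```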

Implementation Mistakes That Slow Results

Even with the right strategy, AI API initiatives can stall if foundational details are overlooked. Most failures aren’t caused by the technology itself, but by rushed assumptions, weak data discipline, and unclear ownership. Recognizing these early can prevent costly rework and help teams scale AI responsibly.

| Mistake | Why It Happens | Prevention |
|---|---|---|
| Treating APIs as plug-and-play | Rush to deliver, shallow testing | Sandbox evaluations, edge-case prompts, quality gates |
| Poor data hygiene | Legacy data debt, inconsistent schemas | Data contracts, ETL pipelines, redaction workflows |
| Weak governance | Policy on paper, not runtime logic | Audit logs, permission scopes, incident runbooks |
| Over-promising capability | Marketing pressure, unclear legal guardrails | Transparent claims, disclosure policies, legal review |
| Vendor lock-in | Shortcut integration, proprietary formats | API abstraction, fallback providers, version pinning |

Treating APIs as “plug-and-play” without proper evaluation

AI APIs look simple to integrate, but skipping early evaluation leads to output drift, hallucinations, or unintended responses in production. When organizations don’t test edge cases or simulate traffic patterns, quality issues surface only after users are impacted. This damages trust and slows adoption across other teams.

- Why it happens: excitement to launch quickly, pressure to show value

- Prevention: sandbox testing, evaluation rubrics, and latency/quality benchmarks, which surface key challenges in modern API integration early

No data-quality plan – “garbage in, confident nonsense out”

Even the most sophisticated model can only reason from the context it’s given. Poorly formatted or inconsistent inputs result in confidently wrong answers that waste time, misroute requests, and frustrate users. Data cleanup should happen before any prompt reaches an LLM.

- Why it happens: legacy data debt, incomplete schemas, rushed integrations

- Prevention: data contracts, redaction pipelines, lineage tracking
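A data contract can be as simple as a typed check that runs before any record is turned into a prompt. The sketch below assumes a hypothetical support-ticket schema with three fields and a fixed set of customer tiers; records that fail validation are rejected with explicit reasons instead of being passed to the model.

```python
from typing import Any, Dict, List

# Hypothetical contract for a support-ticket record: field name -> required type.
TICKET_CONTRACT: Dict[str, type] = {
    "ticket_id": str,
    "customer_tier": str,
    "body": str,
}
ALLOWED_TIERS = {"free", "pro", "enterprise"}


def validate_ticket(record: Dict[str, Any]) -> List[str]:
    """Return contract violations; an empty list means the record is safe to use."""
    errors = []
    for field, expected_type in TICKET_CONTRACT.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(f"{field}: expected {expected_type.__name__}")
    # Value-level rules, checked only when the field has the right type.
    if isinstance(record.get("body"), str) and not record["body"].strip():
        errors.append("body: empty text")
    if isinstance(record.get("customer_tier"), str) and record["customer_tier"] not in ALLOWED_TIERS:
        errors.append("customer_tier: unknown value")
    return errors
```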

Weak governance – unclear ownership, shadow usage, audit gaps

Without centralized guardrails, teams end up deploying models independently, creating inconsistent behaviors and compliance blind spots. As usage scales, organizations lose visibility into what models were queried, when, why, and by whom. The National Institute of Standards and Technology (NIST) warns that this creates safety and accountability risks if not addressed early.

- Why it happens: governance treated as documentation, not runtime

- Prevention: permission scopes, audit logs, access reviews, incident playbooks
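Governance becomes runtime logic when every model call passes through a gate that checks the caller's scope and records who queried which model, and when. This is a minimal sketch: the `AuditedGateway` class, the scope names, and the in-memory log are hypothetical, and a real deployment would write audit entries to durable, tamper-evident storage.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class AuditEntry:
    timestamp: float
    user: str
    scope: str
    model: str
    allowed: bool


@dataclass
class AuditedGateway:
    permissions: Dict[str, Set[str]]  # user -> granted scopes
    log: List[AuditEntry] = field(default_factory=list)

    def call(self, user: str, scope: str, model: str, invoke: Callable[[], str]) -> str:
        """Check the caller's scope, log the attempt (allowed or not), then invoke."""
        allowed = scope in self.permissions.get(user, set())
        self.log.append(AuditEntry(time.time(), user, scope, model, allowed))
        if not allowed:
            raise PermissionError(f"{user} lacks scope {scope!r}")
        return invoke()
```

Denied attempts are logged too, since shadow usage often shows up first as a pattern of rejected calls.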

Ambiguous terms & claims – regulatory risk if messaging over-promises

Regulators now scrutinize claims around “autonomy,” “guaranteed accuracy,” or “bias-free” outputs. The Federal Trade Commission (FTC) has issued enforcement warnings against overstated AI claims and misleading marketing language. Overstated positioning can spark investigations, fines, and brand damage.

- Why it happens: competitive pressure, unclear legal review

- Prevention: truthful claims, transparent disclaimers, compliant messaging

Vendor lock-in – no abstraction layer, no migration path

Tightly coupling your workflows to a single provider’s endpoints, response formats, or prompt style creates dependency risk. When pricing changes, performance degrades, or new compliance features are required, switching becomes painful and expensive.

- Why it happens: speed-to-market shortcuts, proprietary integrations

- Prevention: internal API abstractions, provider-agnostic orchestration, and feature flags, which preserve flexibility for later Claude AI API integration or Perplexity AI API integration pilots.
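An internal abstraction layer can be small. The sketch below defines a provider-agnostic interface, records an explicitly pinned model version on each adapter, and uses a percentage feature flag to route a stable slice of users to a pilot provider. The adapter classes are stubs standing in for real SDK calls; their names, version strings, and the rollout mechanism are assumptions.

```python
import hashlib
from typing import Dict, Protocol


class TextModel(Protocol):
    model_version: str

    def generate(self, prompt: str) -> str: ...


class PrimaryAdapter:
    model_version = "primary-2024-06-01"  # hypothetical pinned version, kept for audit logs

    def generate(self, prompt: str) -> str:
        return f"[primary] {prompt}"  # stub: call your current provider's SDK here


class PilotAdapter:
    model_version = "pilot-2025-01-15"  # hypothetical pinned version

    def generate(self, prompt: str) -> str:
        return f"[pilot] {prompt}"  # stub: call the provider under evaluation here


class ModelRouter:
    """Routes each request to a provider based on a percentage feature flag."""

    def __init__(self, providers: Dict[str, TextModel], pilot_percent: int = 0) -> None:
        self.providers = providers
        self.pilot_percent = pilot_percent

    def _bucket(self, user_id: str) -> int:
        # Stable hash so each user consistently lands in the same bucket (0-99).
        return int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100

    def generate(self, user_id: str, prompt: str) -> str:
        name = "pilot" if self._bucket(user_id) < self.pilot_percent else "primary"
        return self.providers[name].generate(prompt)
```

Because application code only sees `ModelRouter.generate`, swapping or adding a provider means writing one new adapter rather than touching every workflow.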

How to Choose the Right AI API Provider

Selecting an AI API provider isn’t just about picking the “smartest” model. You’re choosing a partner whose platform, policies, and roadmap will shape how reliably you can ship features over the next few years. A poor choice locks you into rising costs, unpredictable behavior, and compliance headaches. A good one becomes a stable foundation you can build on repeatedly.

Accuracy & Latency SLAs (by use case)

Different workflows have different tolerance levels. A support summary can tolerate 700 ms of latency; an in-app recommendation often cannot. Ask providers for accuracy benchmarks and latency SLAs tied to your region and traffic patterns. When accuracy drops or latency spikes, the downstream impact compounds fast, especially in customer-facing journeys.
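To check measured latency against a per-use-case budget, a nearest-rank p95 over recent samples is often enough for a first pass. The 700 ms support-summary budget mirrors the example in the text; the 150 ms recommendation budget is an illustrative assumption, not a provider SLA.

```python
import math
from typing import Dict, List

# Illustrative p95 latency budgets in milliseconds, by use case.
P95_BUDGET_MS: Dict[str, float] = {
    "support_summary": 700.0,
    "in_app_recommendation": 150.0,
}


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_sla(use_case: str, samples_ms: List[float]) -> bool:
    return percentile(samples_ms, 95) <= P95_BUDGET_MS[use_case]
```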

Security & Data Use Terms

Look closely at how your data will be stored, logged, and used. Ideally, providers should offer:

- No training on your inputs by default

- Short retention windows

- Encrypted transport and storage

This prevents sensitive information from becoming someone else’s training asset later. For regulated industries, these terms can make or break legal eligibility.

Compliance Readiness (HIPAA, GLBA, sector controls)

If you’re working in healthcare, banking, insurance, or government, ensure the provider supports:

- HIPAA-aligned handling for PHI, including willingness to sign a Business Associate Agreement (BAA)

- GLBA protections for financial data

- Role-based access and audit records

Compliance add-ons after integration are painful; build with them from the beginning.

Operational Maturity

Ask how often models update, how version changes are communicated, and whether rollback is supported. A model upgrade that changes tone, structure, or accuracy can quietly break production workflows. Mature providers publish changelogs, offer version pinning, and provide migration guides—keeping surprises out of your critical paths.

Observability (usage, quality, and cost telemetry)

Without visibility, costs creep quietly. Strong providers offer:

- Token and cost dashboards

- Latency and error tracing

- Quality variance reporting

- Anomaly alerts

Transparent telemetry also keeps experiments, such as Perplexity AI API integration pilots, safely within spend limits.
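As an example of a basic anomaly alert, the sketch below flags a day whose token spend sits more than three standard deviations above the trailing seven-day mean. The window, threshold, and flat-history rule are assumptions; simple rules like this are a common starting point before adopting a managed alerting tool.

```python
import statistics
from typing import List


def spend_is_anomalous(history: List[float], today: float,
                       window: int = 7, z_threshold: float = 3.0) -> bool:
    """Flag today's spend if it exceeds the trailing mean by z_threshold std devs."""
    recent = history[-window:]
    if len(recent) < 2:
        return False  # not enough data to estimate variance
    mean = statistics.mean(recent)
    stdev = statistics.stdev(recent)
    if stdev == 0:
        return today > mean  # perfectly flat history: any increase is flagged
    return (today - mean) / stdev > z_threshold
```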

Commercial Structure & Pricing Transparency

Costs should be predictable. Look for:

- Clear token/call pricing

- Committed-use discounts

- Burst handling during peak traffic

- Rate limits that fail gracefully

The right structure amplifies the benefits of AI API integration for enterprises without budget shocks.
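On the client side, handling rate limits gracefully usually means retrying with exponential backoff and jitter rather than failing on the first rejection. The sketch assumes a hypothetical `RateLimitError` that your own client wrapper raises when the provider returns HTTP 429; the retry count and base delay are illustrative.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error your client wrapper raises on an HTTP 429 response."""


def with_backoff(fn: Callable[[], T], max_retries: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry fn on rate-limit errors, doubling the delay each attempt and adding jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay))  # jitter spreads out retry bursts
    raise RuntimeError("unreachable")
```

The injectable `sleep` parameter is a design choice for testability; in production it defaults to `time.sleep`.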

Map Your Evaluation to NIST AI RMF

For structure, anchor your criteria to the NIST AI Risk Management Framework (RMF) functions:

- Govern: Who owns access? What’s the escalation plan?

- Map: What data and workflows does the model touch?

- Measure: How well does it perform over time?

- Manage: What happens when outputs degrade?

NIST’s 2025 updates emphasize runtime guardrails—not just policy slides.

How This Looks in Practice

Teams that choose providers based purely on performance benchmarks often discover hidden friction months later: rising inference costs, weaker audit trails, or unclear retention rules. Teams that evaluate holistically, an approach often guided by a digital engineering services firm, usually scale faster, avoid surprises, and build trust across legal, security, and compliance stakeholders.

Future Trends to Watch

AI API integration is evolving quickly, and emerging trends in AI-driven APIs and digital transformation point to more control, reliability, and specialization. These trends will influence how enterprise leaders plan roadmaps, allocate budgets, and structure teams over the next 2–3 years.

- Multi-agent orchestration: AI will shift from single prompts to coordinated agents that plan, execute, and validate multi-step tasks across apps—managed by strict permission scopes and policy controls. We recently explored this pattern in our analysis of digital transformation with AI in various industries.

- Enterprise knowledge grounding: Retrieval and tool-based context will become standard to cut hallucinations and keep outputs tied to internal truths, policies, and historical data.

- Domain-specific managed models: Industry-tuned models (for claims, compliance, clinical review, financial risk, etc.) will be offered as managed services, reducing the need for heavy internal training.

- Safety, eval, and governance APIs: Guardrails will become callable APIs—content filters, bias checks, accuracy scoring—layered into the workflow rather than tacked onto documentation.

- Blended strategies: Enterprises will rent general capabilities via APIs and selectively fine-tune models where they need differentiation. This approach will shape the role of AI APIs in digital transformation over the next cycle.

How Appinventiv Accelerates AI API Integration Success

Launching AI in the enterprise requires more than plugging a model into an interface. It demands secure data pipelines, responsible governance, and production-grade engineering. Appinventiv’s AI Integration Services help organizations connect intelligence to real workflows while aligning to the National Institute of Standards and Technology’s AI Risk Management Framework (NIST AI RMF). Our integration accelerators, reference architectures, and prompt-engineering playbooks reduce hallucinations, strengthen accuracy, and ensure deployments behave predictably.

As organizations move from pilot to production, consistency and observability become critical. Appinventiv’s AI API integration approach introduces model versioning, secure retrieval, token cost dashboards, and fallback paths so that quality doesn’t erode as usage scales. We wrap intelligence around existing systems—avoiding risky re-platforming—and build context grounding pipelines that reflect enterprise knowledge rather than generic web assumptions.

Finally, we design architectures that avoid long-term vendor lock-in. By abstracting models behind internal APIs and supporting multi-provider orchestration, we preserve flexibility as the ecosystem evolves, keeping options open for Claude AI API integration, Perplexity AI API integration, and other providers as needs change. Our broader AI development services ensure measurable outcomes, safe scaling, and future-ready foundations, helping enterprises adopt AI with confidence, not complexity.

Ready to embed intelligence without the rip-and-replace risk? Schedule your AI Integration strategy session with our experts.

FAQs

Q. How is AI transforming API integration platforms?

A. AI is turning traditionally rule-based integration platforms into dynamic, context-aware systems. Instead of relying on rigid mapping logic, AI automatically classifies data, resolves formatting inconsistencies, detects anomalies, and recommends routing rules based on historical patterns. By combining generative AI with retrieval and tool invocation, integration workflows can now self-optimize, reduce manual troubleshooting, and accelerate time-to-value. Enterprises gain higher accuracy, fewer integration failures, and more resilient pipelines across applications.

Q. How do AI APIs enhance business automation and efficiency?

A. AI APIs introduce intelligent decision-making directly into existing workflows without rebuilding core systems. They automate high-effort tasks such as document summarization, risk scoring, entity extraction, classification, and customer sentiment analysis. With capabilities like real-time prioritization, recommended actions, and contextual responses, organizations reduce cycle times, lower manual effort, and improve quality. As usage scales, this augments institutional expertise and frees teams to focus on cases that require emotional intelligence and deep reasoning.

Q. What’s the cost and ROI of AI API integration for digital transformation?

A. Costs depend on model usage volume, data pipelines, security requirements, and observability tooling. Most organizations invest initially in discovery, sandbox testing, and production hardening—then move to pay-as-you-consume pricing for inference calls. ROI is typically seen in reduced handle times, higher agent productivity, improved conversion rates, fewer errors, and faster decision cycles. Leaders that pair integration with governance and continuous evaluation unlock compounding returns rather than isolated savings.

Q. How can Appinventiv help with AI API integration for digital enterprises?

A. Appinventiv delivers AI API integration through reference architectures, secure data pipelines, and prompt-engineering playbooks aligned to the NIST AI RMF. We help enterprises move from pilot to production by building runtime guardrails, fallback logic, cost-telemetry dashboards, version control, and multi-provider orchestration layers. Our team wraps intelligence around existing systems—avoiding risky re-platforming—and designs migration paths that prevent vendor lock-in. The result: measurable outcomes, safe scaling, and future-ready digital experiences.

Q. Why are API integration platforms turning to AI?

A. API integration platforms are adopting AI to handle the scale and complexity of modern enterprise ecosystems. Traditional middleware struggles with unstructured data, exception handling, and rapidly evolving application stacks. AI introduces semantic understanding, pattern recognition, and predictive routing—reducing manual troubleshooting and improving operational resilience. As organizations adopt more SaaS applications and microservices, AI enhances accuracy, reduces integration debt, and ensures workflows remain consistent and context-aware across surfaces.
