- Flashback: Retrieval-Augmented Generation (RAG) in AI

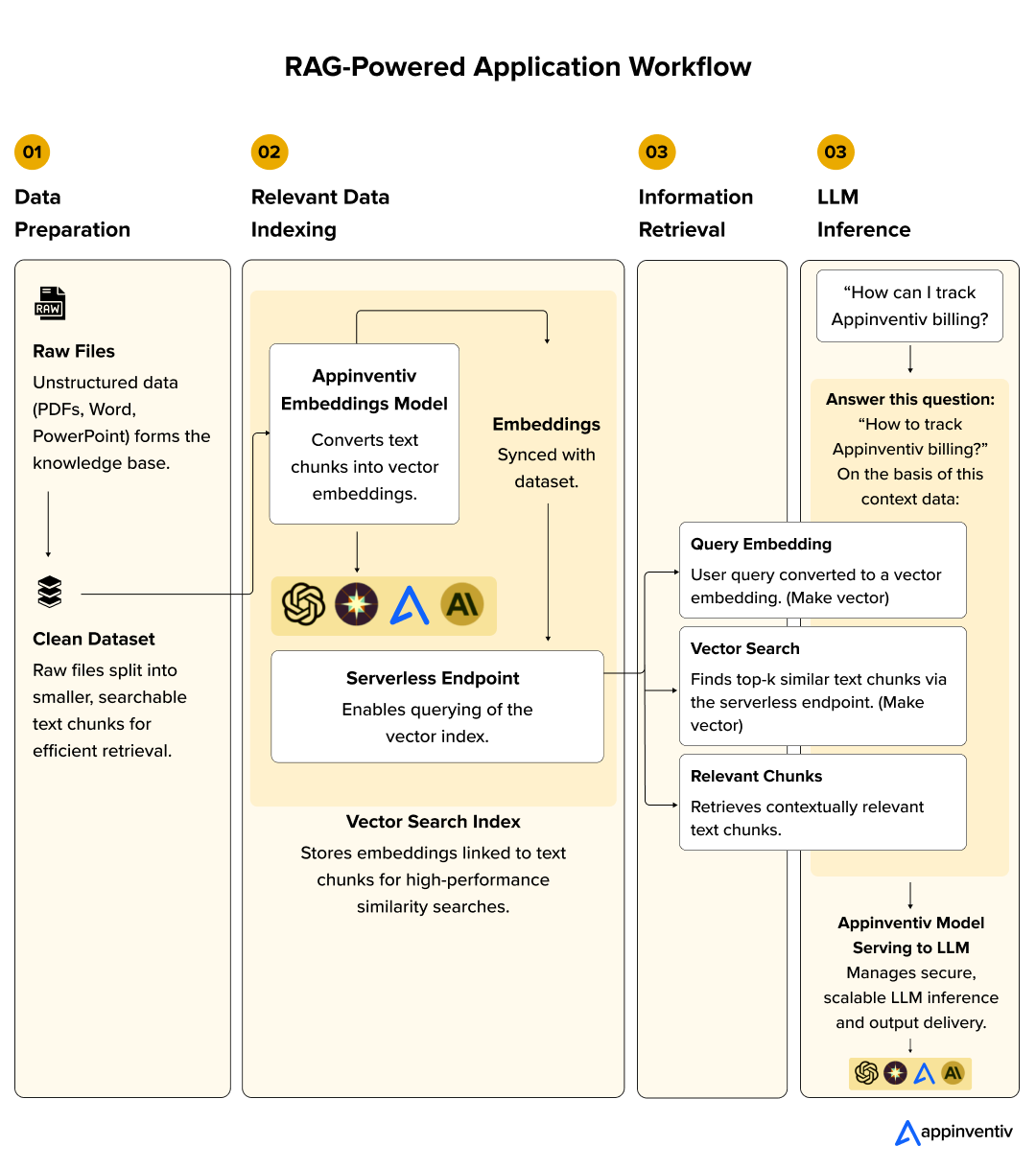

- Workflow to Understand the Process of RAG-Powered Applications

- Data Preparation

- Relevant Data to the Index

- Information Retrieval

- LLM Inference

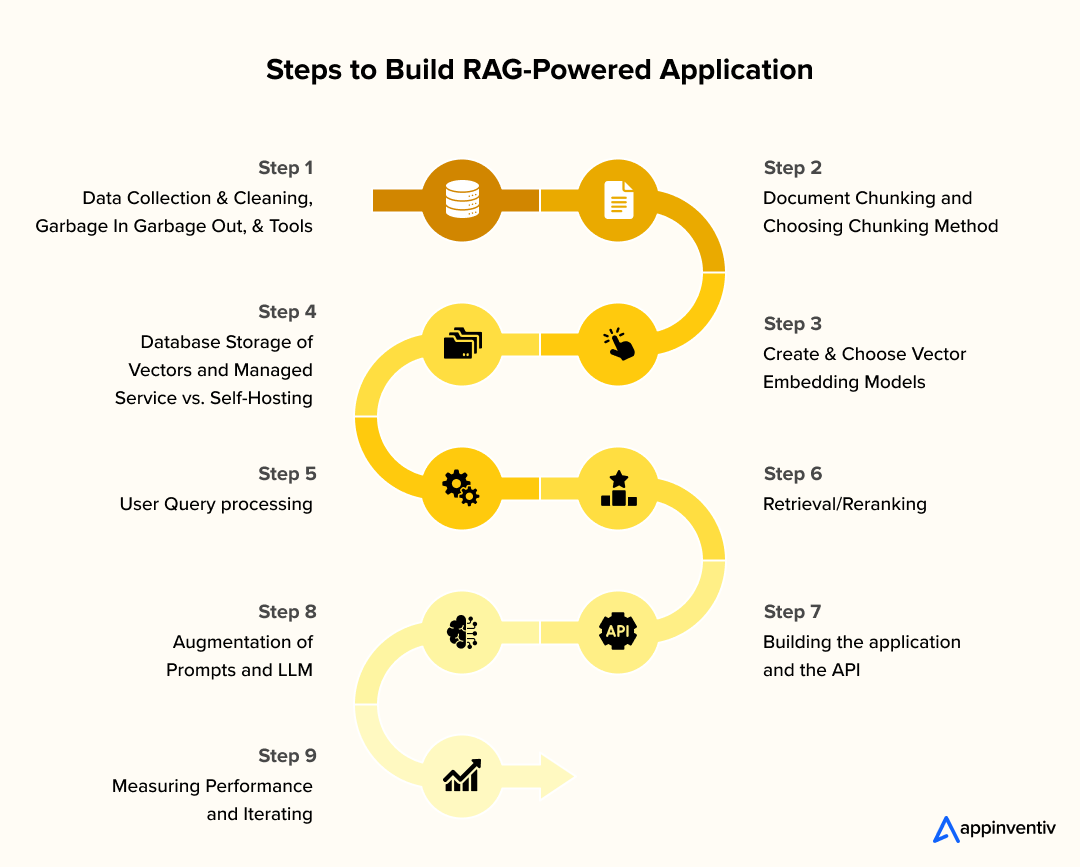

- How to Develop a RAG-Powered Application?

- Phase 1: Ingestion and preparation of data (The Foundation)

- Phase 2: Retrieval and Generation (The Core Logic)

- Phase 3: Deploy and Probe

- Cost to Develop a RAG-Powered Application

- One-Time Expenses (Set up & Development)

- Recurring Operational Costs (Per Query & Month)

- LLM API Costs

- Costs of Open-Source vs. Proprietary models

- Build vs. Buy and the Path Forward

- When to Build

- When to Buy/Partner:

- Why Choose Appinventiv As Your Development Partner?

- How Appinventiv Takes Your RAG to the Next Level:

- FAQs

- Data Preparation: Clean and chunk raw data into searchable text segments for efficient retrieval in RAG application development.

- Vector Embeddings: Convert text chunks into numerical vectors using models such as OpenAI's text-embedding-3 family or BGE-M3 for fast similarity searches.

- Retrieval & Generation: Query embedding, vector search, and LLM inference deliver accurate, context-rich responses.

- Development Costs: Basic RAG apps cost $40K-$200K; advanced systems range from $600K-$1M+ based on complexity.

- Operational Costs: Recurring expenses include vector database fees (~$25-$70/month) and LLM API costs ($0.0003-$0.0046/query).

Retrieval-Augmented Generation (RAG) is intoxicating. It promises to spare you the nightmare of retraining models by letting large language models (LLMs) draw directly on your proprietary data.

The demos are out, the buzz is spreading, and your rivals are already discussing how their internal knowledge bots automate customer service with AI. You realize that RAG chatbot development could change how your business operates.

Between that vision and a production-ready application lies a painful gap. You have questions the marketing buzz does not answer:

- How does custom RAG-powered app development for enterprises actually work?

- What is the cost of development?

- How will API fees you have not yet accounted for affect your budget?

- How do you get from a proof of concept to a scalable, secure, and performant system that delivers real business value?

This is not another high-level overview but the roadmap you need. As an AI development company, our AI professionals have real-world experience taking ideas like yours from concept all the way to production.

The cost to develop a RAG-powered application ranges from $45,000 to $500,000+, depending on factors such as features, the tech stack you choose, and more.

This deep dive peels back the jargon and cuts to the core of what building a RAG-powered application involves. We will walk through the key phases of development, break down the cost structure, and give you the specifics you need to make well-considered decisions that set your project up for success.

Tap into the future with a custom RAG-powered app. Connect with us now to lead the charge!

Flashback: Retrieval-Augmented Generation (RAG) in AI

Retrieval-Augmented Generation (RAG) is a technique that addresses key limitations of standalone large language models (LLMs).

- It enables LLMs to produce more precise, relevant, and higher-quality responses without retraining them.

- RAG overcomes a core shortcoming of traditional models: their inability to use up-to-date or domain-specific data, which leads them to produce wrong, obsolete, or generic information.

- This makes RAG widely useful in open-domain question answering, legal research, and call centers.

- RAG is expected to evolve from passive information gathering into an active contributor to actual decision-making.

Explore more about future trends of Retrieval-Augmented Generation

Workflow to Understand the Process of RAG-Powered Applications

Building an application that understands complex queries and answers them with accurate knowledge takes more than a powerful language model. Retrieval-Augmented Generation (RAG) is the framework that combines the broad language understanding of large language models (LLMs) with an ever-changing external knowledge base.

This workflow has four main stages: data preparation, indexing, information retrieval, and LLM inference. Together, these stages make the application robust and reliable, ensuring that valid, current, and relevant information is available whenever a request needs an answer.

Data Preparation

In this first stage, raw, unstructured data is cleaned and loaded into the system.

- Raw Files: Unstructured information in PDFs, Word documents, and PowerPoint files is the starting point of the process and forms the application's knowledge base.

- Clean Dataset (Doc chunks): The raw files are split into smaller, manageable chunks of text. This step is essential for efficient retrieval later: dividing a large document into small pieces lets the system retrieve the most relevant information without processing the whole document.

Relevant Data to the Index

In this phase, the cleaned chunks are converted into a form that can be searched and retrieved quickly.

- Model Serving to Embeddings Model: Each text chunk is sent to an embedding model, served behind a model-serving endpoint, which converts it into a numerical vector (an embedding) that captures its meaning.

- Embeddings Linked with Dataset: The resulting embeddings are stored in a database, usually a vector database, and linked to their original text chunks. This creates a searchable index in which semantically similar chunks have similar embedding vectors.

- Vector Search Index: The embeddings and text chunks are indexed in a vector search index optimized for high-performance similarity search. Keeping the source data synced with this index ensures that the most recent data can always be retrieved.

- Serverless Endpoint: The vector index is queried through a serverless endpoint. In the next stage, this endpoint is used to fetch data related to the user's query.
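Keeping the index synced with the source data usually means re-embedding only what changed. The sketch below is a minimal illustration under stated assumptions: chunks arrive as a hypothetical `chunk_id -> text` mapping, a plain dict stands in for the vector database, and `sync_index` and `content_hash` are illustrative names, not part of any product's API.

```python
import hashlib
from typing import Callable


def content_hash(text: str) -> str:
    """Stable fingerprint of a chunk's text; changes whenever the text changes."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def sync_index(
    index: dict[str, dict],
    chunks: dict[str, str],
    embed: Callable[[str], list[float]],
) -> dict[str, int]:
    """Bring `index` in line with the current `chunks` (chunk_id -> text).

    New or changed chunks are (re-)embedded; unchanged chunks are skipped to
    save embedding calls; chunks that disappeared from the source are deleted
    so stale text can no longer be retrieved.
    """
    stats = {"added": 0, "updated": 0, "deleted": 0, "unchanged": 0}
    for chunk_id, text in chunks.items():
        new_hash = content_hash(text)
        existing = index.get(chunk_id)
        if existing is None:
            stats["added"] += 1
        elif existing["hash"] != new_hash:
            stats["updated"] += 1
        else:
            stats["unchanged"] += 1
            continue
        # Each entry links the vector back to its original chunk text.
        index[chunk_id] = {"hash": new_hash, "text": text, "vector": embed(text)}
    for chunk_id in list(index):
        if chunk_id not in chunks:
            del index[chunk_id]
            stats["deleted"] += 1
    return stats
```

In a real deployment, the writes and deletes would go through the vector database's own client instead of a dict, but the change-detection logic stays the same.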

Bonus Read: An Enterprise Guide to Build an Intelligent AI Model

Information Retrieval

This phase begins when a user submits a query: the system retrieves relevant information from the indexed knowledge base.

- Query Embedding: The user's question is converted into an embedding using the same embedding model that was used during indexing.

- Vector Search: Using the serverless endpoint, the application runs a similarity search against the vector search index, comparing the embedding of the user's query with the embeddings of all document chunks in the index.

- Relevant Chunk of Text Data: The system retrieves the top-k document chunks whose vectors are most similar to the query. These chunks serve as the context for answering the user's question.

LLM Inference

In this final stage, the large language model (LLM) generates a readable reply.

- Chatbot Model Endpoint: The chatbot model endpoint receives the user's original question together with the retrieved chunks of relevant text as input.

- Prompt Engineering: The prompt sent to the LLM combines the user's question with the retrieved context.

- LLM Generation: The LLM processes the prompt and generates a coherent, accurate answer from the retrieved context. It is instructed to rely solely on the given context when formulating a response, which reduces hallucinations and keeps the answer grounded in the available knowledge base.

- Model Serving to LLM: This part of the pipeline handles routing, credentials, throughput, and logging for LLM inference, keeping the whole pipeline secure, highly scalable, and controllable. Finally, the generated output is displayed to the user.

How to Develop a RAG-Powered Application?

Building a Retrieval-Augmented Generation (RAG) app combines the strengths of information retrieval and generative AI to deliver high-quality, context-appropriate responses. To move into practical implementation, we will walk you through the RAG pipeline, covering every component and the basic steps to build a RAG application.

Phase 1: Ingestion and preparation of data (The Foundation)

The first stage of the RAG app development process is to ingest and prepare the knowledge base that will inform the LLM's responses. The effectiveness of the whole system depends directly on the quality of this phase.

Step 1: Data Collection & Cleaning, Garbage In Garbage Out, and Tools

- Data Collection and Cleaning: The process entails collecting raw data from various sources, including unstructured formats like PDFs, web pages, and internal documents, as well as structured sources such as databases and APIs.

- Garbage In, Garbage Out Principle: The cleanliness, correctness, and organization of this raw material are paramount, because they determine how effective the RAG system will be. Poor data quality at this stage inevitably leads to irrelevant retrievals and weak LLM outputs, undermining the very purpose of RAG.

- Tools and libraries: In practice, several tools and libraries help with this step.

Tools:

BeautifulSoup is one of the most popular Python libraries for web scraping, parsing HTML and XML files to extract structured data from websites.

PyPDF2 is a pure-Python library for working with PDF documents and can read, manipulate, and extract text and metadata.

Libraries:

pdfplumber is well suited to extracting text and tables.

PyMuPDF offers much faster plain-text extraction from PDFs.

For complex documents that contain tables or have inconsistent layouts, services such as Google Cloud's Document AI or Amazon Web Services' Textract provide more advanced parsing.
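As a dependency-free illustration of the cleaning step, the sketch below uses Python's standard-library `html.parser` in place of BeautifulSoup to pull visible text from a web page, then normalizes it. The cleaning rules (Unicode NFKC normalization, re-joining words hyphenated across line breaks, collapsing whitespace) are assumptions that suit many PDF and HTML sources; `html_to_text` and `clean_text` are hypothetical helper names.

```python
import re
import unicodedata
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True turns "&amp;" into "&"
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def clean_text(text: str) -> str:
    # Normalize Unicode: ligatures such as "\ufb01" become "fi", NBSP becomes a space.
    text = unicodedata.normalize("NFKC", text)
    # Re-join words hyphenated across line breaks: "retrie-\nval" -> "retrieval".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)
    # Collapse all runs of whitespace into single spaces.
    return re.sub(r"\s+", " ", text).strip()
```

The hyphen rule will also merge genuine compounds that happen to break at a line end (e.g., "state-\nof-the-art"), so rules like this are worth checking against a sample of your own documents.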

Bonus Read: AWS Storage Gateway: Enhance Data Management with Hybrid Storage Integration

Step 2: Document Chunking and Choosing Chunking Method

- Document Chunking: Following data cleaning, large documents must be broken down into smaller, meaningful segments known as “chunks.” This process, called chunking, is vital because LLMs have inherent token limits, and smaller, relevant chunks reduce computational overhead during retrieval while simultaneously improving the precision of the context provided to the LLM.

- Fixed-Size vs. Semantic Chunking: These two core strategies trade speed and simplicity against contextual integrity; the table below compares them with two other common approaches.

| Chunking Strategy | Description | Advantages |
|---|---|---|
| Fixed-Size Chunking | Splits text into pieces of a predetermined number of tokens. | Fast and simple to implement. |
| Semantic Chunking | Groups sentences or paragraphs by semantic similarity. | Produces context-sensitive chunks with better contextual integrity. |
| Recursive Chunking | Recursively divides text using a hierarchy of separators (e.g., paragraphs, then sentences, then words). | Preserves document structure. |
| Agentic Chunking | An experimental method in which an LLM identifies good split points based on the semantics and structure of the content. | Adapts to both context and content structure. |

- Best Practices: Overlapping consecutive chunks preserves context across chunk boundaries. The optimal chunk_size and chunk_overlap usually require experimentation to find the right balance for a specific use case and data type.
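A minimal fixed-size chunker with overlap might look like the sketch below. It assumes whitespace-separated words as a rough proxy for tokens (production code would count tokens with the embedding model's own tokenizer); the `chunk_size` and `chunk_overlap` parameters play the roles described above, and `fixed_size_chunks` is a hypothetical name.

```python
def fixed_size_chunks(text: str, chunk_size: int = 200, chunk_overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing
    `chunk_overlap` words with the previous window."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


# Ten "words" with chunk_size=4 and chunk_overlap=1:
# ["0 1 2 3", "3 4 5 6", "6 7 8 9"]; each boundary word appears in two chunks.
example = fixed_size_chunks(" ".join(str(i) for i in range(10)), chunk_size=4, chunk_overlap=1)
```

Larger overlaps protect more context at boundaries but increase the number of chunks to embed and store, which is one reason these values are tuned per dataset.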

Also Read: AI in Intelligent Document Processing and Management

Step 3: Create & Choose Vector Embedding Models

- Development of Vector Embeddings: Once documents have been chunked, each chunk of text is encoded as a numerical vector, also known as an embedding, using an embedding model. The main choice is between a commercial API (e.g., OpenAI's text-embedding-3-small) and an open-source model (e.g., BGE or E5-base), weighed on price, performance, and domain requirements.

- Choice of Embedding Models: The choice of embedding model is an important decision with significant trade-offs in cost, performance, and control.

| Aspect | Commercial API Models | Self-Hosted Open-Source Models |
|---|---|---|
| Hosting | Offered as a service; no hosting required | Hosted on your own infrastructure (compute, GPU) |
| Performance | High performance; MTEB scores of 62.3% (small) and 64.6% (large) | Equal or better quality on specific tasks and multilingual benchmarks (e.g., BGE-M3) |
| Customization | Tightly controlled and provider-dependent | Fully customizable, including inference optimization |
| Data Privacy | Data is sent to the provider, creating potential privacy risks | Data stays in-house, improving privacy |
| Features | Flexible embedding size (via the `dimensions` API parameter), improved multilingual support | Varies by model, e.g., excellent multilingual support in BGE-M3 |
| Maintenance | Managed end-to-end by the provider; no maintenance required | Requires ongoing maintenance and in-house expertise |
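To show what an embedding model does mechanically, the sketch below uses a toy feature-hashing embedding as a stand-in for a real model such as text-embedding-3-small or BGE-M3. It captures only word overlap, not meaning, but the interface is the same one a real model exposes: text in, fixed-length vector out, compared with cosine similarity. `toy_embed` and `cosine` are hypothetical helpers.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 256) -> list[float]:
    """Feature-hashing bag-of-words embedding, L2-normalized (toy stand-in only)."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # A stable hash; Python's built-in hash() is salted per process.
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 for identical direction, 0.0 for no overlap."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


query = toy_embed("vector database pricing")
related = toy_embed("pricing of a vector database")
unrelated = toy_embed("chunk overlap strategies")
```

With a real model, "cost of a vector store" would also score close to the query despite sharing few words; that semantic matching is what the commercial and open-source models in the table above provide.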

Step 4: Database Storage of Vectors and Managed Service vs. Self-Hosting

- Vectors in a Database: Once vector embeddings are generated, they must be stored and indexed for fast retrieval. A vector database is a dedicated system optimized for fast, accurate similarity search over high-dimensional data. Another key decision is whether to use a managed service or self-host, since this affects scalability, operational overhead, and cost.

- Managed Services or Self-Hosting:

Managed Services (SaaS): These offload the complexity of infrastructure management and offer easy deployment that scales without friction.

Self-Hosted Options (Open-Source): These offer more control, stronger data privacy, and potentially lower cost at very large scale, but require significant in-house expertise.

| Database | Type | Key Features | Best Uses |
|---|---|---|---|
| Pinecone | Managed (SaaS) | Hybrid search, ML, scalable | Production-ready, no infra management |
| Weaviate | Managed/Self-hosted | Metadata filtering, multi-modal, REST API | Multi-modal, RAG systems, complex schemas |
| Milvus | Self-hosted (OSS) | High scalability and throughput | Large-scale RAG, very large datasets |
| Qdrant | Self-hosted (OSS) | Easy to use, API-first, metadata-rich search | Complex retrieval, rapid prototyping |
| ChromaDB | Self-hosted (OSS) | Lightweight, simple Python integration | Prototyping small Python-based AI tools |
| FAISS | Self-hosted (Library) | GPU acceleration, high performance | Research, custom search, performance-critical workloads |
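For prototyping before choosing one of these databases, a brute-force in-memory store is often enough. The sketch below is a hypothetical `InMemoryVectorStore` (not any product's API) that does exact cosine search with an optional metadata filter; the databases above add approximate indexes (e.g., HNSW), persistence, and horizontal scaling on top of the same idea.

```python
import heapq
import math
from dataclasses import dataclass, field
from typing import Optional


def _normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


@dataclass
class InMemoryVectorStore:
    """Exact (brute-force) cosine search; a prototyping stand-in for a vector DB."""

    vectors: dict[str, list[float]] = field(default_factory=dict)
    metadata: dict[str, dict] = field(default_factory=dict)

    def upsert(self, item_id: str, vector: list[float], meta: Optional[dict] = None) -> None:
        # Store unit vectors so a plain dot product equals cosine similarity.
        self.vectors[item_id] = _normalize(vector)
        self.metadata[item_id] = meta or {}

    def query(self, vector: list[float], top_k: int = 3,
              where: Optional[dict] = None) -> list[tuple[str, float]]:
        q = _normalize(vector)
        scored = (
            (sum(a * b for a, b in zip(q, vec)), item_id)
            for item_id, vec in self.vectors.items()
            # Optional metadata filter, e.g. where={"lang": "en"}.
            if where is None
            or all(self.metadata[item_id].get(k) == v for k, v in where.items())
        )
        return [(item_id, score) for score, item_id in heapq.nlargest(top_k, scored)]
```

Exact search scans every vector on each query, which is fine for thousands of chunks but becomes the bottleneck at millions; that is the point where a dedicated vector database pays off.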

Bonus Read: Why should you hire managed IT services for your business?

Phase 2: Retrieval and Generation (The Core Logic)

This phase constitutes the core of the RAG pipeline, where the user’s query is processed, relevant information is retrieved, and the LLM generates a response.

Step 5: User Query Processing

The first step in the inference path is converting the natural-language query a user submits to the RAG application into a numerical vector embedding. This transformation uses the same embedding model that generated the embeddings of the document chunks in the knowledge base.
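Because query vectors and chunk vectors are only comparable when they come from the same model, it can help to record which model built the index and check it at query time. The sketch assumes a hypothetical `EmbeddingModel` wrapper and model name; any real embedding client could sit behind `fn`.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EmbeddingModel:
    name: str  # hypothetical identifier, recorded when the index was built
    dim: int
    fn: Callable[[str], list[float]]

    def embed(self, text: str) -> list[float]:
        vec = self.fn(text)
        if len(vec) != self.dim:
            raise ValueError(f"{self.name} returned {len(vec)} dims, expected {self.dim}")
        return vec


def embed_query(query: str, model: EmbeddingModel, index_model_name: str) -> list[float]:
    """Embed a user query, refusing to use a different model than the index."""
    if model.name != index_model_name:
        raise ValueError(
            f"query model {model.name!r} does not match index model {index_model_name!r}; "
            "vectors from different models are not comparable"
        )
    # Apply the same light normalization used on chunks (here: whitespace only).
    return model.embed(" ".join(query.split()))
```

Switching embedding models later therefore means re-embedding the whole knowledge base, not just new queries.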

Step 6: Retrieval/Reranking

- Retrieval and Reranking: Vector similarity search finds the most relevant document chunks in the vector database.

- The Reranking Benefit: Although the initial vector similarity search usually returns good results, adding a reranker model can significantly improve the relevance and accuracy of the retrieved chunks.

– Rerankers apply a different, often more context-aware model than the first retrieval pass to reassess the initial set of documents for their relevance to the query.

– This brings the most pertinent chunks to the top of the results, even if their original vector similarity score was not the highest.
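The two-stage pattern can be sketched as below. The first pass is assumed to return a generous candidate list with vector scores; the reranker then rescores each (query, chunk) pair. `keyword_overlap_score` is a deliberately simple, hypothetical stand-in for a real reranker such as a cross-encoder model, used only to keep the example self-contained.

```python
from typing import Callable

Scorer = Callable[[str, str], float]


def retrieve_then_rerank(
    query: str,
    candidates: list[tuple[str, float]],
    rerank_score: Scorer,
    top_n: int = 3,
) -> list[tuple[str, float]]:
    """Rescore first-pass (chunk_text, vector_similarity) pairs and keep the best.

    The vector score is ignored here: the reranker's judgment replaces it,
    so a chunk can move up even if its vector score was not the highest.
    """
    rescored = [(text, rerank_score(query, text)) for text, _ in candidates]
    rescored.sort(key=lambda pair: pair[1], reverse=True)
    return rescored[:top_n]


def keyword_overlap_score(query: str, text: str) -> float:
    """Toy reranker: fraction of query terms that appear in the chunk."""
    q_terms = set(query.lower().split())
    t_terms = set(text.lower().split())
    return len(q_terms & t_terms) / len(q_terms) if q_terms else 0.0


candidates = [
    ("pricing overview for enterprise plans", 0.91),
    ("pinecone serverless pricing starts monthly", 0.88),
    ("how chunking works", 0.80),
]
reranked = retrieve_then_rerank("pinecone serverless pricing", candidates, keyword_overlap_score, top_n=2)
```

Here the second candidate overtakes the first despite its lower vector score, which is exactly the effect described above.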

Step 7: Augmentation of Prompts and LLM

This is where the "Augmented Generation" part of RAG comes in. The original user query is combined with the highest-scoring chunks from the retrieval set to form a new, contextually enhanced prompt, which is then passed to the Large Language Model. This augmented prompt gives the LLM the factual background it needs to produce an informed, accurate, and grounded response.

- Careful prompt engineering is essential at this stage. A deliberately designed prompt instructs the LLM how to use the given context, stick to the facts, and explicitly avoid making things up or hallucinating.

- Techniques include clearly delimiting the provided context, defining the LLM's persona, and instructing it to answer only from the provided information. Before retrieval, query augmentation methods such as synonym expansion, rephrasing, or contextual augmentation can also improve the initial search results.

- Orchestration frameworks such as LangChain and LlamaIndex make it easier to build and operate such a RAG pipeline. They provide modules for loading documents, splitting text, embedding, connecting to a vector store, retrieval, and chaining LLM calls, making RAG applications much easier to create, maintain, and iterate on.
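A minimal prompt-assembly function might look like the sketch below. The instruction wording, the persona, and the character budget (a rough proxy for the model's token limit) are all assumptions to adapt; `build_augmented_prompt` is a hypothetical name, not a LangChain or LlamaIndex API.

```python
def build_augmented_prompt(question: str, chunks: list[str], max_context_chars: int = 4000) -> str:
    """Assemble a grounded prompt from the user question and retrieved chunks.

    Chunks are assumed to be ordered best-first (e.g., after reranking);
    lower-ranked chunks are dropped once the character budget is used up.
    """
    context_parts, used = [], 0
    for i, chunk in enumerate(chunks, start=1):
        block = f"[{i}] {chunk.strip()}"
        if used + len(block) > max_context_chars:
            break
        context_parts.append(block)
        used += len(block)
    context = "\n".join(context_parts) or "(no relevant context found)"
    return (
        # Persona, grounding rule, and an explicit fallback to discourage guessing.
        "You are a helpful assistant for internal company documentation.\n"
        "Answer ONLY using the context below. If the context does not contain the "
        "answer, say \"I don't know\" instead of guessing.\n"
        "Cite the numbers of the context passages you used, e.g. [1].\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


prompt = build_augmented_prompt(
    "What does Pinecone cost?",
    ["Paid serverless plans start around $70/month.", "Weaviate starts at $25/month."],
)
```

Numbering the passages lets the model cite its sources, which also makes answers easier to verify during evaluation.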

Phase 3: Deploy and Probe

The last stage of RAG development for business automation involves deploying the application to users and setting up a system to track performance and drive improvements.

Step 8: Building the application and the API

This step covers building the user-facing application. It usually involves designing a user-friendly interface (UI), such as a chatbot or a search interface, through which end users access the RAG system and which simplifies interaction with the AI.

- A backend API powers this interface and is crucial to running the whole RAG pipeline. The API is the central coordinator, managing communication between the user's query and the LLM's response.

- It processes user queries, calls the embedding model, queries the vector database for retrieval, optionally calls a reranker, and appends the retrieved context to the prompt before sending it to the LLM for generation.

- The API then returns the LLM's response to the user interface.

- Orchestration tools such as LangChain or LlamaIndex, mentioned above, play a significant role in building this backend layer and connecting the RAG components seamlessly.
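To make the request flow concrete, here is a framework-agnostic sketch of the backend logic. `RagPipeline` is a hypothetical class: each stage is injected as a plain function, so a real embedding API, vector database client, reranker, and LLM client (or LangChain/LlamaIndex components) could be plugged in. Exposing `answer()` as an HTTP endpoint through a web framework is left out to keep the example self-contained.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RagPipeline:
    embed: Callable[[str], list[float]]             # embedding model client
    search: Callable[[list[float], int], list[str]]  # vector DB query -> chunk texts
    rerank: Callable[[str, list[str]], list[str]]    # reorders chunks, best first
    generate: Callable[[str], str]                   # LLM client
    first_pass_k: int = 20
    context_k: int = 4

    def answer(self, question: str) -> dict:
        query_vec = self.embed(question)                                 # Step 5
        candidates = self.search(query_vec, self.first_pass_k)           # Step 6: retrieve
        context = self.rerank(question, candidates)[: self.context_k]    # Step 6: rerank
        prompt = (                                                       # Step 7: augment
            "Answer only from the context.\n\nContext:\n"
            + "\n".join(f"- {c}" for c in context)
            + f"\n\nQuestion: {question}\nAnswer:"
        )
        # Returning sources alongside the answer lets the UI show citations.
        return {"answer": self.generate(prompt), "sources": context}
```

Keeping each dependency behind a small function boundary also makes it easy to swap models or databases later and to test the flow with stubs.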

Bonus Read: A Complete Guide to API Development

Step 9: Measuring Performance and Iterating

Deploying a RAG application is not the end of the cycle; it is only the beginning of an ongoing process of measuring performance and iterating. Assessing a RAG application on simple accuracy alone is inadequate. It needs a deliberately designed, multi-faceted evaluation of the quality of both the retrieval and generation components, as well as end-to-end effectiveness.

Quantitative Metrics: Evaluation frameworks such as RAGAS provide a full suite of quantitative measures suited to RAG pipelines. These metrics may be LLM-based (which correlate better with human judgment) or use more conventional techniques such as string similarity. Key RAGAS metrics include:

- Context Precision: Measures the signal-to-noise ratio of the retrieved context, i.e., how relevant the retrieved chunks are to the question.

- Context Recall: Measures whether all the information required to answer the question was retrieved from the knowledge base.

- Faithfulness: Measures how factually consistent the generated answer is with the retrieved context, by checking the claims made by the LLM against that context.

- Answer Relevancy: Measures how relevant and complete the generated answer is with respect to the original user question.

These measures make it possible to test individual components (for example, the quality of the retriever alone) as well as overall performance.

Qualitative User Feedback: Beyond quantitative measures, user feedback should be collected, especially from qualified professionals. It is also key to gather user interaction signals, such as clicks, follow-up questions, or explicit refinements, and correlate them with system outputs to identify patterns in low-performing situations and prioritize them accordingly.

Continuous Iteration: RAG implementation is not a destination but an ongoing lifecycle that begins at deployment. Unlike conventional software, AI systems, particularly those that depend on external data and LLMs, are vulnerable to problems such as data drift, model degradation, and evolving user needs.

- This proactive monitoring is essential for mitigating risk and keeping content reliable in production.

- Without strong, ongoing assessment based on both quantitative measures and qualitative user feedback, a RAG system is likely to regress, producing hallucinated or irrelevant replies and losing user confidence.

- This calls for MLOps (Machine Learning Operations) practices in enterprise RAG application development: automated feedback mechanisms, continuous performance tracking, and a dedicated team that keeps improving the system.
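RAGAS computes its metrics with LLM judgments; as a simpler, deterministic proxy, the sketch below scores retrieval against human-labeled relevant chunk IDs. `context_precision` follows a similar rank-aware idea (the average of precision@k at each position holding a relevant chunk), while `context_recall` is the share of required chunks that were retrieved. The labeled IDs are an assumption: you need a small hand-built evaluation set, and these functions are not the RAGAS API.

```python
def context_precision(retrieved: list[str], relevant: set[str]) -> float:
    """Rank-aware precision: rewards placing relevant chunks near the top."""
    hits, total = 0, 0.0
    for k, chunk_id in enumerate(retrieved, start=1):
        if chunk_id in relevant:
            hits += 1
            total += hits / k  # precision@k at this relevant position
    return total / hits if hits else 0.0


def context_recall(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of the chunks needed for the answer that were retrieved."""
    if not relevant:
        return 1.0
    return len(relevant & set(retrieved)) / len(relevant)


# One labeled example: chunks c1-c3 are needed; the retriever returned c1, x, c2.
precision = context_precision(["c1", "x", "c2"], {"c1", "c2", "c3"})
recall = context_recall(["c1", "x", "c2"], {"c1", "c2", "c3"})
```

Averaging these scores over a fixed evaluation set after every change to chunking, embeddings, or reranking turns the iteration loop described above into a measurable regression test.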

Gather clean data, chunk it wisely, select a top embedding model, and launch with a smooth API.

Cost to Develop a RAG-Powered Application

Building a RAG application involves financial commitments, both upfront and ongoing. Understanding these costs is crucial for accurate budgeting, strategic decision-making, and cost-effective RAG-powered app development.

One-Time Expenses (Set up & Development)

The ranges below are reasonable estimates for developing and initially deploying a RAG-driven application. They reflect the broader reality that building bespoke, advanced AI systems is an expensive undertaking. Here is why these ranges apply and how the tiers differ:

Basic ($40,000 to $200,000):

The basic cost to develop a RAG-powered application covers:

- Features: A simple RAG pipeline. It might use an easy-to-adopt embedding model and an off-the-shelf commercial vector database (such as Pinecone's entry-level plans).

- Data: The knowledge base would probably be relatively small (e.g., thousands of documents) with a fairly simple structure (e.g., PDFs that share a consistent layout).

- Usefulness: It would handle simple, fact-based questions with minimal prompt engineering and little post-retrieval processing.

- Cost Drivers: The lower end of this range covers a small team (1-2 developers) working for several months. The higher end reflects a more complex dataset or a somewhat more powerful system with better monitoring and a more polished interface.

Medium ($300,000 to $500,000):

This tier covers a robust, production-ready RAG application.

- Features: The system would use advanced RAG techniques such as query rewriting (to improve search results), hybrid search (combining keyword and vector search), and a reranking model to refine the retrieved documents.

- Data: It would process more data from a more sophisticated knowledge base with mixed document types, requiring more careful chunking and richer metadata.

- Functionality: The application could respond to more open-ended questions, provide more detailed and accurate responses, and integrate with other enterprise systems (e.g., via APIs).

- Cost Drivers: This cost reflects a larger team of AI/ML engineers, data engineers, and backend developers working over several months. This tier has a more elaborate RAG architecture and evaluation framework, with a strong focus on reliability and scalability.

Advanced ($600,000 to $1 million+):

This tier covers a highly sophisticated, enterprise-grade RAG-powered solution.

- Features: This system goes beyond a typical RAG pipeline. It could feature multi-hop reasoning (the system asks itself follow-up questions), agentic workflows (the system chooses among a set of tools to complete a task), or a custom-trained model. It would run on highly streamlined, scalable infrastructure.

- Data: The application would handle a huge, continuously growing knowledge base of highly unstructured data, likely requiring bespoke data extraction and preprocessing pipelines.

- Cost Drivers: This cost reflects a team of senior AI/ML experts, a longer development cycle, and expensive high-performance infrastructure (e.g., dedicated GPU clusters for training or fine-tuning models). It also includes the extensive testing and maintenance such a critical system requires.

Bonus Read: Hire AI Consultants for Your Business and How to Find the Perfect Fit

Recurring Operational Costs (Per Query & Month)

These are the costs that accrue as your RAG application is used, along with the costs of maintaining and scaling it over time.

Vector Database Costs:

Vector database fees are a major ongoing cost, usually billed monthly. The two main cost drivers are the amount of data stored (the number of vectors and their dimensionality) and the compute resources consumed by the query load (reads and writes).

Managed Service Price:

- Pinecone: Once you exceed the limits of the free Starter tier (which includes 100,000 vectors), paid serverless plans start at around $70 per month. Some pricing models combine vector storage and query units that scale with usage.

- Weaviate: Serverless Cloud pricing starts at $25 per month, with a major cost component of $0.095 per 1 million stored vector dimensions per month.
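Dimension-based pricing is easy to estimate up front. The sketch below uses the figures quoted above and assumes, for illustration, that the $25 starting price acts as a monthly floor; the provider's actual billing rules may differ, so treat `dimension_based_monthly_cost` as a hypothetical budgeting helper.

```python
def dimension_based_monthly_cost(
    num_vectors: int,
    dims: int,
    price_per_million_dims: float = 0.095,
    monthly_minimum: float = 25.0,
) -> float:
    """Estimate monthly storage cost when billing is per stored vector dimension."""
    stored_dimensions = num_vectors * dims
    usage_cost = stored_dimensions / 1_000_000 * price_per_million_dims
    # Assumption: the plan's starting price acts as a floor on the bill.
    return round(max(monthly_minimum, usage_cost), 2)


# 1 million chunks embedded at 1,536 dimensions (e.g., text-embedding-3-small's default size):
# 1,536,000,000 stored dimensions -> 1,536 x $0.095 = $145.92 per month.
estimate = dimension_based_monthly_cost(1_000_000, 1536)
```

Because cost scales with dimensionality as well as vector count, the embedding model choice in Step 3 directly affects this line item; a 3,072-dimension model doubles the storage component.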

LLM API Costs

LLM inference costs are usually the largest and least predictable recurring expense in a RAG application, and they grow with usage. They are priced per token (typically quoted per 1,000 or per 1 million tokens) and cover the user's input query, the retrieved context passed to the LLM, and the response the LLM generates.

Example cost per query: Imagine a single user query that includes:

- User query tokens: 50 tokens

- Retrieved context tokens (from the vector database): 1,000 tokens

- LLM output tokens (the generated response): 200 tokens

- Total tokens per query = 50 (query) + 1,000 (context) + 200 (output) = 1,250 tokens.

Now, let’s apply example pricing from major providers:

Using OpenAI’s gpt-4o-mini: Input price is $0.15 per 1 million tokens, and output price is $0.60 per 1 million tokens.

- Cost = (1,050 input tokens / 1,000,000) * $0.15 + (200 output tokens / 1,000,000) * $0.60 = $0.0001575 + $0.00012 = $0.0002775 per query.

Using OpenAI’s gpt-4o: Input price is $2.50 per 1 million tokens, and output price is $10.00 per 1 million tokens.

- Cost = (1,050 input tokens / 1,000,000) * $2.50 + (200 output tokens / 1,000,000) * $10.00 = $0.002625 + $0.002 = $0.004625 per query.
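The arithmetic above is worth wrapping in a small calculator so it can be rerun as prompts, context sizes, or prices change. The sketch below hard-codes the prices quoted in this article as assumptions (providers change them, so re-check before budgeting); `ModelPrice`, `cost_per_query`, and `monthly_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


# Prices as quoted above; verify against the provider's current price list.
PRICES = {
    "gpt-4o-mini": ModelPrice(0.15, 0.60),
    "gpt-4o": ModelPrice(2.50, 10.00),
}


def cost_per_query(model: str, query_tokens: int, context_tokens: int, output_tokens: int) -> float:
    price = PRICES[model]
    # The retrieved context is billed as input, just like the user's question.
    input_tokens = query_tokens + context_tokens
    return (input_tokens * price.input_per_million
            + output_tokens * price.output_per_million) / 1_000_000


def monthly_cost(model: str, queries_per_month: int, **tokens: int) -> float:
    return cost_per_query(model, **tokens) * queries_per_month


mini = cost_per_query("gpt-4o-mini", query_tokens=50, context_tokens=1000, output_tokens=200)
full = cost_per_query("gpt-4o", query_tokens=50, context_tokens=1000, output_tokens=200)
```

At 100,000 queries a month with the same token profile, the gpt-4o figure works out to about $462.50; note that the 1,000 context tokens dominate the input bill, so retrieving fewer, better chunks (e.g., after reranking) is also a cost lever.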

Costs of Open-Source vs. Proprietary models

Using an open-source model follows a fundamentally different cost model: the variable per-token API cost is replaced by the operational cost of hosting, maintaining, and scaling the model yourself. These costs include:

- GPU Fees: Running LLMs, particularly larger open-source models, requires high-end GPUs. Cloud GPU prices vary dramatically, from NVIDIA T4s at $0.35/hour and V100s at $2.48/hour on Google Cloud to H100s at $2.49/hour on providers such as Lambda.

- ML Engineering Salaries: Companies need skilled ML engineers to deploy, optimize, monitor, and improve these models. With average LLM engineer salaries of about $111,000, a small dedicated team can cost more than $750,000 per year. This human investment is a major fixed overhead.
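A rough break-even estimate helps frame the choice. The sketch below is a simplification under loud assumptions: one always-on GPU at the quoted H100 rate, 730 hours per month, an optional annual staff cost spread evenly, and the gpt-4o per-query figure from the previous section as the API alternative. It ignores throughput limits, redundancy, and idle capacity; the function names are hypothetical.

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12


def self_hosted_monthly_cost(gpu_hourly_rate: float, num_gpus: int = 1,
                             staff_annual_cost: float = 0.0) -> float:
    """Always-on GPU rental plus (optionally) the monthly share of staff costs."""
    return gpu_hourly_rate * num_gpus * HOURS_PER_MONTH + staff_annual_cost / 12


def break_even_queries(self_hosted_monthly: float, api_cost_per_query: float) -> float:
    """Monthly query volume above which self-hosting is cheaper than a per-token API."""
    if api_cost_per_query <= 0:
        raise ValueError("api_cost_per_query must be positive")
    return self_hosted_monthly / api_cost_per_query


# Example: one H100 at $2.49/hour vs. the gpt-4o cost of $0.004625 per query.
gpu_only = self_hosted_monthly_cost(2.49)
with_team = self_hosted_monthly_cost(2.49, staff_annual_cost=750_000)
print(f"GPU only: ${gpu_only:,.2f}/month, break-even ~{break_even_queries(gpu_only, 0.004625):,.0f} queries")
print(f"With team: ${with_team:,.2f}/month, break-even ~{break_even_queries(with_team, 0.004625):,.0f} queries")
```

Under these assumptions, a single H100 breaks even at roughly 393,000 gpt-4o-priced queries per month, but adding the $750,000 team figure pushes the break-even to nearly 14 million, and it assumes one GPU can actually serve that volume. This is why the staffing cost, not the GPU bill, usually decides the question.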

Build vs. Buy and the Path Forward

The build vs. buy decision is an essential strategic fork on the RAG path. It depends less on economic factors or product features than on the organization's in-house expertise across a wide spectrum of technical skills.

When to Build

Building a custom RAG solution in-house makes sense when an organization has expertise in every component: data management, application development, DevOps, machine learning, and generative AI.

- This approach offers the highest level of customization and control over every component, and it can be tailored to very specific needs or unique external data sources.

- Large, tech-intensive organizations with significant resources and a strategic need to build a proprietary AI capability that gives them a unique competitive edge are likely to favor it.

- This strategy provides full control over the entire technology stack and data, which may be essential for privacy-sensitive applications.

When to Buy/Partner:

At the software engineering budgets of most businesses, especially those without expansive AI/ML internal engineering teams, what is often more realistic and cost-efficient is to either use a managed, full-stack RAG platform or collaborate with an AI development service.

- This alternative eliminates or greatly minimises the requirement of specialized skills, shortens time to market, minimizes operational risk, and solutions are standardised and scalable.

- Managed services also offload most of the management of the infrastructure as well as management of the components or orchestration, and enable the business to deal with what they are good at without incurring the heavy computing costs of AI infrastructure and the associated workforce.

- It also sidesteps the high cost of hiring and retaining diverse in-house talent, since organizations do not need to recruit people specifically trained in AI.

Bonus Read: Build vs Buy Software in 2025: Cost, ROI, and Decision Guide


Why Choose Appinventiv As Your Development Partner?

Custom RAG-powered app development for enterprises requires careful planning, sound technical expertise, and a clear view of both the process and its costs. From initial data preparation and intelligent chunking to retrieval, reranking, and LLM integration, each stage presents its own challenges.

The build vs. buy question weighs heavily here: in most situations, the significant investment in skilled staff, complex infrastructure, and constant upkeep makes an in-house build impractical.

This is exactly where Appinventiv comes in as the ideal development partner for RAG application development. With 1600+ tech evangelists and more than a decade of experience, we create intelligent, AI-native solutions that help our clients lead their industries. We know that successful AI adoption is about strategic innovation, not technology alone, which is why we have crafted bespoke AI development services designed to deliver tangible returns on investment.

The way Appinventiv takes your RAG to the Next Level:

- End-to-End AI Product Development: We develop powerful AI chatbots and AI assistants, a direct application of RAG, that drive innovation and efficiency. Our knowledge of LLM fine-tuning and predictive analytics platforms helps us build RAG solutions that are not only functional but optimized for maximum performance and accuracy.

- Future-Proof AI Agent Development: As an R&D-driven software development and services company, we are well positioned for the next wave of cost-effective AI agent development, including agentic RAG, which relies on autonomous decision-making, goal-oriented task execution, and smart memory management.

- Generative AI Expertise: Our generative AI services include prompt engineering and optimization, so the LLM makes proper use of the retrieved context and returns accurate, relevant responses. This expertise extends to model deployment and scaling RAG applications to handle growing demand and user loads.

- Smooth AI and Data Integration: We balance innovation with execution by integrating AI into existing legacy systems through solid API development. Strong competency in data collection, cleaning, integration, and management directly counters the "garbage in, garbage out" problem and gives RAG a solid foundation. Experience with a range of vector databases and big data technologies strengthens this foundation further.

- MLOps and Continuous Optimization: We make sure RAG solutions evolve and maintain optimal performance over time. Our full-stack AIOps and MLOps services automate, monitor, and tune every stage of the AI lifecycle.

- Strategic AI Consulting: Our top-notch AI consulting services help organizations create customized AI integration roadmaps, identify high-impact strategic use cases, and develop AI governance and compliance strategies.

- Robust & Secure Cloud Computing: We leverage leading cloud platforms such as AWS, Microsoft Azure, and Google Cloud to build strong, scalable, and secure AI solutions.

- Follow Agile Roadmap: From discovery and insight gathering to continuous monitoring and iteration, our agile approach ensures that RAG applications are not only launched successfully but continue to deliver value and adapt to new technology trends.

The difficulties and potential expenses of RAG should not deter your organization from harnessing this transformation. Partner with Appinventiv to turn your vision into an ROI-driven digital asset.

Book your consultation and let Appinventiv be your guide in navigating the RAG revolution, delivering intelligent solutions that drive efficiency, enhance decision-making, and unlock new opportunities for innovation.

FAQs

Q. Which is the best framework for RAG?

A. The best framework for Retrieval-Augmented Generation (RAG) depends on the use case, though the most common choices are LangChain, LlamaIndex, and Haystack. LangChain excels at modularity and integration across data sources, making it well suited to complex applications. LlamaIndex is fast at indexing and retrieval, especially over large document repositories. Haystack is flexible and well suited to semantic search. Base your choice on the scale of your project, the type of data, and your integration requirements.

Q. What are the impacts of Retrieval-Augmented Generation on Business?

A. RAG supports business operations by grounding responses in large datasets, which improves the quality of business decisions. It reduces costs by automating customer service, content production, and data analysis. RAG-powered apps raise efficiency, personalize customer experiences, and provide real-time insights, giving businesses a market advantage. However, RAG is not trivial to implement: it requires investment in infrastructure and in skills for data quality and integration.

Q. What are the Real-World Applications of RAG in AI?

A. Here are 11 real-world applications of RAG in AI:

- Open-Domain QA Systems

- Multi-Hop Reasoning

- Search Engines and Information Retrieval

- Legal Research and Compliance

- Comprehensive and Concise Content Creation

- Customer Support and Chatbots

- Educational Tools and Personalized Learning

- Context-Aware Language Translation

- Medical Diagnosis and Decision Support

- Financial Analysis and Market Intelligence

- Semantic Comprehension in Document Analysis

Q. How do RAG models differ from traditional generative AI models?

A. Unlike traditional generative models that rely solely on pre-trained knowledge, RAG models combine retrieval of external data with generation, enabling them to access up-to-date or domain-specific information without retraining.
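To illustrate the difference, here is a minimal, framework-free sketch of the retrieve-then-generate flow. It makes several simplifying assumptions: the three documents are made up, bag-of-words cosine similarity stands in for a real embedding model, and the LLM call is left out, so the output is the augmented prompt that would be sent to the model.

```python
import math
import re
from collections import Counter

# Toy knowledge base (hypothetical); a real app would store chunks of its own documents.
DOCS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Premium support is available 24/7 via chat and email.",
    "The mobile app supports offline mode on Android and iOS.",
]

def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user question with retrieved context before generation."""
    ctx = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{ctx}\n\nQuestion: {query}"
    )

question = "How many days do I have to return a purchase?"
prompt = build_prompt(question, retrieve(question, DOCS, k=1))
print(prompt)
# A generative model would now receive `prompt`; the model call itself is omitted.
```

Because the knowledge lives in `DOCS` rather than in the model weights, updating an answer means editing or re-indexing a document, not retraining the model.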

Q. Why should enterprises use RAG-powered apps?

A. Custom RAG-powered apps help enterprises extract the best contextual insights from large volumes of internal and external data. They let you serve customers quickly and accurately, make operations more efficient, automate data-intensive processes, and base decisions on up-to-date, relevant information. Compared with purely generative models, RAG is also easier to scale, extends to wider domains, and reduces errors, which promotes productivity and innovation.

Q. How can the performance of a RAG model be improved?

A. There are several ways to improve a RAG model's performance:

- Better Retriever: Use advanced embedding models or fine-tune the retriever.

- Quality Knowledge Base: Ensure the dataset is comprehensive and up-to-date.

- Fine-Tuning: Adjust the generative model for specific domains.

- Hybrid Approaches: Combine RAG with other techniques like caching or pre-filtering.
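The caching and pre-filtering ideas in the last bullet can be sketched in a few lines. The example below pre-filters chunks by a metadata field before scoring them and memoizes results with Python's `functools.lru_cache`. The corpus, the `dept` field, and the word-overlap `score` function are hypothetical placeholders for a real vector store and similarity search.

```python
from functools import lru_cache

# Hypothetical corpus: each chunk carries metadata used for pre-filtering.
CHUNKS = [
    {"text": "VPN setup guide for remote employees", "dept": "it"},
    {"text": "Expense report deadlines and reimbursement rules", "dept": "finance"},
    {"text": "Password reset steps for the employee portal", "dept": "it"},
]

def score(query: str, text: str) -> int:
    """Count shared lowercase words; a placeholder for vector similarity."""
    return len(set(query.lower().split()) & set(text.lower().split()))

@lru_cache(maxsize=1024)
def cached_search(query: str, dept: str, k: int = 2) -> tuple[str, ...]:
    # Pre-filter: only chunks whose metadata matches are scored at all.
    candidates = [c["text"] for c in CHUNKS if c["dept"] == dept]
    ranked = sorted(candidates, key=lambda t: score(query, t), reverse=True)
    # Return a tuple so the cached value cannot be mutated by callers.
    return tuple(ranked[:k])

print(cached_search("reset password", "it", 1))
print(cached_search("reset password", "it", 1))  # repeated query is served from the cache
print(cached_search.cache_info())
```

One design caveat: the cache does not know when `CHUNKS` changes, so a real system would call `cached_search.cache_clear()` (or use a cache with expiry) whenever the knowledge base is re-indexed.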

Q. What are the best practices for developing RAG-powered applications?

A. When planning the RAG app development process, keep the following best practices for developing RAG-powered applications in mind:

- Curate High-Quality Data: Keep the knowledge base relevant, up to date, and well structured so that retrieval returns accurate information with minimal noise.

- Choose a Robust Retriever: Pick a powerful retrieval technique such as dense vector search (e.g., BERT embeddings) or hybrid search, and tune it to your domain.

- Fine-Tune the Generative Model: Adapt the generative model to your application with domain-specific data so it produces sensible, context-aware responses.

- Balance Retrieval and Generation: Use prompt engineering to integrate retrieved information seamlessly with generated output, producing fluent and correct responses.

- Scale Indexing: When indexing large corpora, or wherever fast, low-latency search is required, use efficient indexing tools (e.g., FAISS, Elasticsearch).

- Monitor and Evaluate: Track retrieval quality (precision/recall) and generation quality (e.g., BLEU/ROUGE), and test frequently to catch hallucinations.

- Security and Compliance: Encrypt data, control access to it, and ensure compliance with relevant regulations (e.g., GDPR or HIPAA).

- Adopt Modular RAG Frameworks: LangChain, LlamaIndex, and Haystack offer flexible integration and simpler maintenance.

- Test with Real-World Queries: Validate the system with a diverse set of queries to confirm that it generalizes across use cases and dialects.

- Enable Continuous Improvement: Use feedback loops to keep the knowledge base current and to refine the models based on user activity.
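For the retrieval side of monitoring, precision@k and recall@k are straightforward to compute once you have a small labelled test set. The sketch below uses hypothetical chunk IDs and relevance labels and divides hits by k for precision. It does not cover generation metrics such as BLEU or ROUGE, which need reference answers and are usually computed with dedicated libraries.

```python
def precision_recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> tuple[float, float]:
    """Precision@k and recall@k for one query's ranked retrieval results."""
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = retrieved[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    precision = hits / k
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

# Hypothetical test set: query -> (ranked chunk IDs from the retriever, relevant chunk IDs).
EVAL_SET = {
    "refund window": (["d1", "d7", "d3"], {"d1", "d3"}),
    "reset password": (["d9", "d2", "d4"], {"d2"}),
}

def evaluate(eval_set: dict[str, tuple[list[str], set[str]]], k: int = 3) -> tuple[float, float]:
    """Average precision@k and recall@k over every query in the test set."""
    pairs = [precision_recall_at_k(ranked, rel, k) for ranked, rel in eval_set.values()]
    if not pairs:
        return 0.0, 0.0
    n = len(pairs)
    return sum(p for p, _ in pairs) / n, sum(r for _, r in pairs) / n

mean_p, mean_r = evaluate(EVAL_SET, k=3)
print(f"precision@3={mean_p:.2f} recall@3={mean_r:.2f}")  # precision@3=0.50 recall@3=1.00
```

Tracking these averages over time on a fixed test set makes it easier to see whether a new embedding model or chunking strategy actually improved retrieval.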

Q. What’s the best way to customize an LLM—prompt engineering, RAG, fine-tuning, or pretraining?

A. The best way to customize an LLM depends on your needs:

- Prompt Engineering: Fast, cheap, low customization. Best for general tasks or prototyping.

- RAG: Medium cost, adds external knowledge. Ideal for domain-specific, factual responses.

- Fine-Tuning: High cost, high customization. Suits specialized tasks with labeled data.

- Pretraining: Very costly, maximum control. For unique needs with vast resources.

Recommendation: Start with prompt engineering, use RAG architecture for domain-specific tasks, fine-tune for precision, and pretrain only if no existing model works.
