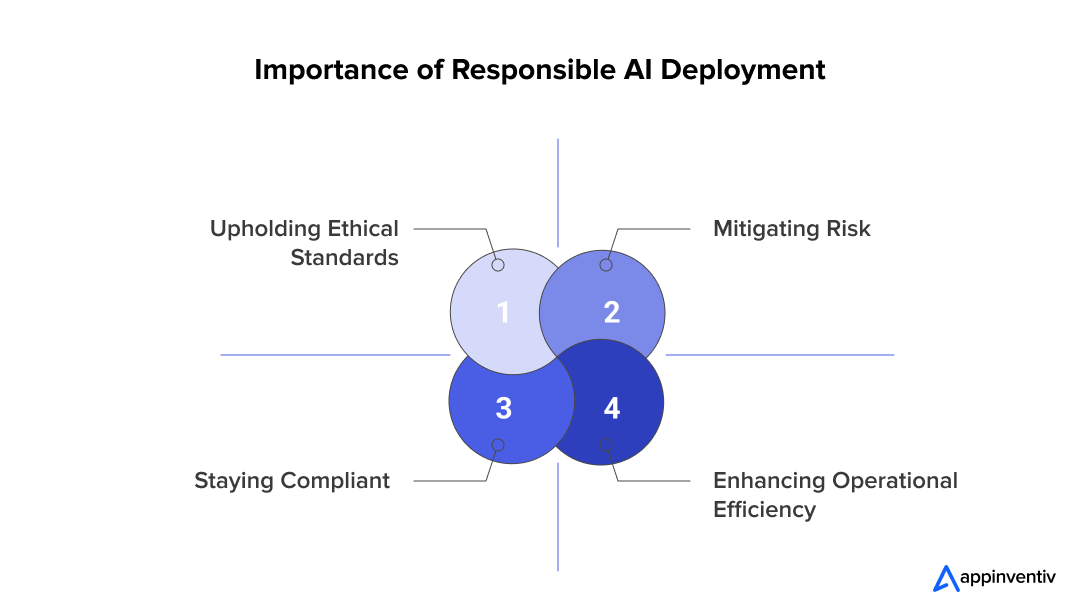

- Why Businesses Across Industries Prioritize Responsible AI Deployment

- Mitigating Risk

- Enhancing Operational Efficiency

- Staying Compliant

- Upholding Ethical Standards

- The Responsible AI Checklist: 10 Generative AI Governance Questions Leaders Must Ask

- 1. Data Integrity and Transparency

- 2. Ethical Alignment

- 3. Fairness and Bias Mitigation

- 4. Security and Privacy

- 5. Explainability and Transparency

- 6. Human Oversight

- 7. Compliance and Regulation

- 8. Accountability Framework

- 9. Environmental and Resource Impact

- 10. Continuous Monitoring and Risk Management

- Common Generative AI Compliance Pitfalls and How to Avoid Them

- Pitfall 1: Ignoring Data Provenance and Bias

- Pitfall 2: Lack of a Clear Accountability Framework

- Pitfall 3: Failing to Keep Up with Evolving Regulations

- Pitfall 4: Neglecting Human Oversight and Transparency

- How to Embed Responsible AI Throughout Generative AI Development

- The Lucrative ROI of Responsible AI Adoption

- The Appinventiv Approach to Responsible AI: How We Turn Strategy Into Reality

- FAQs

- Generative AI is a double-edged sword. It offers immense opportunities for growth and innovation. However, it also carries risks related to bias, security, and compliance.

- Proactive governance is not optional. A responsible Generative AI deployment checklist helps leaders minimize risks and maximize trust.

- The checklist for Generative AI compliance assessment covers everything, including data integrity, ethical alignment, human oversight, continuous monitoring, and more.

- The ROI of responsible AI goes beyond compliance. It leads to increased consumer trust, reduced long-term costs, and a strong competitive advantage.

- Partner with AI experts like Appinventiv to turn Gen AI principles into a practical, actionable framework for your business.

The AI revolution is here, with generative models like GPT and DALL·E unlocking immense potential. In the blink of an eye, generative AI has moved from the realm of science fiction to a staple of the modern workplace. It’s no longer a question of if you’ll use it, but how and, more importantly, how well.

The potential is astounding: Statista’s latest research reveals that the generative AI market is projected to grow from $66.89 billion in 2025 to $442.07 billion by 2031. The race is on, and companies are deploying these powerful models across nearly every operational process. Businesses use Gen AI to create content, automate workflows, write code, analyze complex data, and redefine customer experiences.

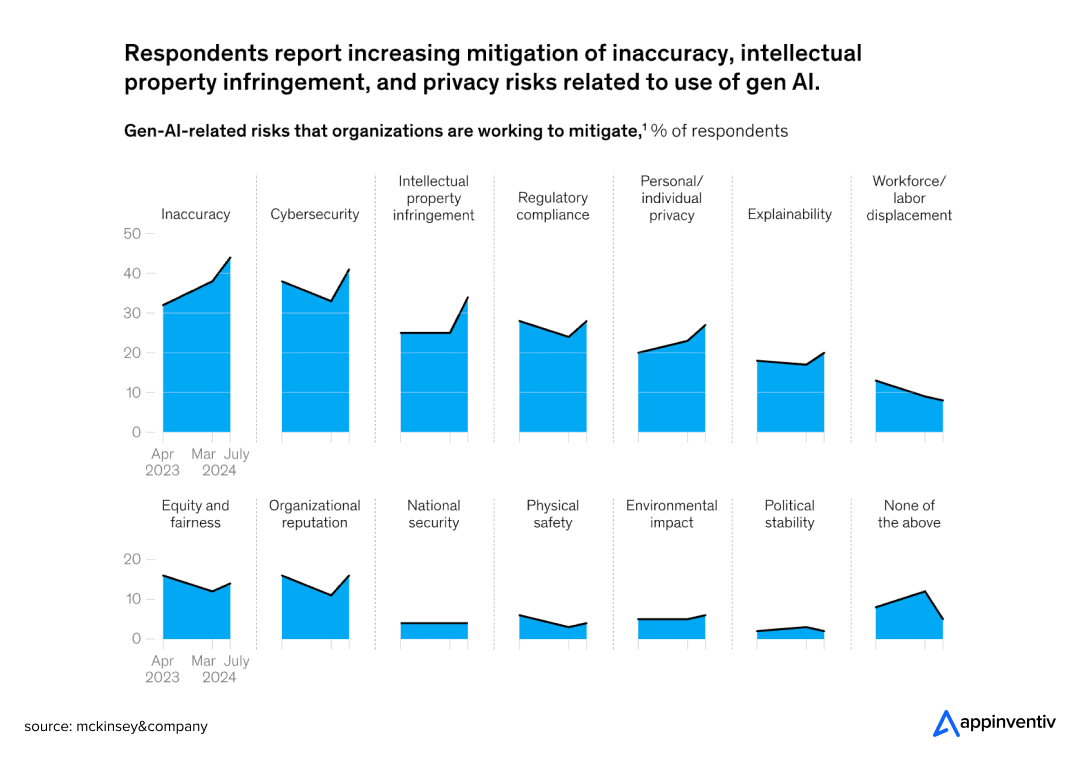

But as with any revolution, there’s a flip side. The same technology that can boost creativity can also introduce a host of unforeseen risks, from perpetuating biases to leaking sensitive data and producing factually incorrect “hallucinations.” The stakes are truly high. According to McKinsey’s latest research, 44% of organizations have already experienced negative consequences from not evaluating generative AI risks.

As AI systems become integral to decision-making, the question arises: Are we ready to manage these gen AI risks effectively?

This is where the responsible AI checklist comes in. This blog covers the 10 critical AI governance questions every business leader must ask before deploying generative AI. These questions will help mitigate risks, build trust, and ensure that your AI deployment efforts align with legal, ethical, and operational standards.

Contact us for a comprehensive AI risk assessment to maximize AI benefits and minimize risks.

Why Businesses Across Industries Prioritize Responsible AI Deployment

Before we dive into the questions, let’s be clear: responsible AI isn’t an afterthought; it’s a core component of your business strategy. Without a responsible governance framework, you risk technical failures and put your brand image, your finances, and your very relationship with your customers on the line. Here is more on why it’s essential for leaders to prioritize responsible AI practices:

Mitigating Risk

Responsible AI is not about slowing down innovation. It’s about accelerating it safely. Without following Generative AI governance best practices, AI projects can lead to serious legal, financial, and reputational consequences.

Companies that rush into AI deployment often spend months cleaning up preventable messes. On the other hand, companies that invest time upfront in responsible AI practices find that their AI systems actually work better and cause fewer operational headaches down the road.

Enhancing Operational Efficiency

By implementing responsible AI frameworks, businesses can make sure their AI systems are secure and aligned with business goals. This approach helps them avoid costly mistakes and ensures the reliability of AI-driven processes.

The operational benefits are real and measurable. Teams stop worrying about whether AI systems will create embarrassing incidents, customers develop trust in automated decisions, and leadership can focus on innovation instead of crisis management.

Staying Compliant

The EU AI Act is now in force, with obligations phasing in over several years and fines reaching up to 7% of global annual turnover for the most serious violations. In the US, agencies like the EEOC are actively investigating AI bias cases. One major financial institution recently paid $2.5 million for discriminatory lending algorithms. A healthcare company had to pull its diagnostic AI after privacy violations.

These are not isolated incidents. They are predictable outcomes when companies build and deploy AI models without a proper Generative AI impact assessment.

Upholding Ethical Standards

Beyond legal compliance, it is essential that AI systems operate in accordance with principles of fairness, transparency, and accountability. These ethical considerations are necessary to protect both businesses and their customers’ sensitive data.

Companies demonstrating responsible AI practices report higher customer trust scores and significantly better customer lifetime value. B2B procurement teams now evaluate Generative AI governance as standard vendor selection criteria.

Major technology companies such as Microsoft and Google, along with standards bodies like NIST, are already weighing in on Generative AI risk management and responsible AI practices. Now it’s your turn. With that said, here is a Generative AI deployment checklist every CTO should know:

The Responsible AI Checklist: 10 Generative AI Governance Questions Leaders Must Ask

So, are you ready to deploy Generative AI? That’s great. But before you do, work through a set of pre-deployment AI validation questions to make sure you’re building on a solid foundation, not quicksand. Here is a series of Generative AI risk assessment questions to ask your teams before, during, and after deploying a generative AI solution.

1. Data Integrity and Transparency

The Question: Do we know what data trained this AI, and is it free from bias, copyright issues, or inaccuracies?

Why It Matters: An AI model is only as good as its data. Most foundational models learn from a massive, and often messy, collection of data scraped from the internet. This can lead to the model making up facts, which we call “hallucinations,” or accidentally copying copyrighted material. It’s a huge legal and brand risk.

Best Practice: Don’t just take the vendor’s word for it. Demand thorough documentation of the training data. For any internal data you use, set clear sourcing policies and perform a detailed audit to check for quality and compliance.

2. Ethical Alignment

The Question: Does this AI system truly align with our company’s core values?

Why It Matters: This goes way beyond checking legal boxes. This question gets to the heart of your brand identity. Your AI doesn’t just process data; it becomes the digital face of your values. Every decision it makes, every recommendation it offers, represents your company.

Let’s say your company values fairness and equality. If your new AI hiring tool, through no fault of its own, starts to favor male candidates because of an undetected bias, that’s a direct contradiction of your values. It’s a fast track to a PR crisis and a loss of employee morale.

Best Practice: Create an internal AI ethics charter. Form a cross-functional ethics board with representatives from legal, marketing, and product teams to review and approve AI projects.

At Appinventiv, we have seen this principle in action. When developing JobGet, an AI-driven job search platform, we ensured that the AI matching algorithms promote equal opportunity for everyone, in line with our client’s diversity commitments. The platform is designed so that no candidate is unfairly disadvantaged by bias, creating a transparent and trustworthy experience for job seekers and employers alike.

3. Fairness and Bias Mitigation

The Question: How have we tested for and actively mitigated bias in the AI’s outputs?

Why It Matters: Bias in AI is not some abstract academic concern; it’s a business-killing reality that has already destroyed careers and companies. You might be aware of the facial recognition systems that couldn’t recognize darker skin tones and loan algorithms that treated identical applications differently based on zip codes that correlated with race.

Here’s what keeps business leaders awake at night: your AI might be discriminating right now, and you’d never know unless you specifically looked for it. These systems are brilliant at finding patterns, including patterns you never intended them to learn.

Best Practice: Test everything. Use datasets that actually represent the real world, not just convenient samples. Regularly audit your AI models’ performance across different groups, and be prepared to discover uncomfortable truths about your systems.
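To make "audit performance across different groups" concrete, here is a minimal Python sketch. It computes the share of favorable outcomes per group and the ratio between the lowest and highest share. The function names, the sample records, and the 0.8 cut-off (borrowed from the "four-fifths rule" often used as a screening heuristic in US employment contexts) are illustrative assumptions, not a prescribed method.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so no gap to report
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, favorable outcome?)
sample = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold; set it with your own policy owners
```

A low ratio does not prove discrimination on its own. It is a signal that the gap deserves a closer look by people who understand the use case.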

4. Security and Privacy

The Question: What safeguards do we have against data breaches, prompt injection attacks, and sensitive data leaks?

Why It Matters: Generative AI creates new security weak points. An employee might paste confidential customer information into a public model to get a quick summary. That data is then out in the wild, where it can be misused by cyber fraudsters. As IBM’s 2024 report showed, the average cost of a data breach rose to $4.88 million in 2024 from $4.45 million in 2023, an increase of roughly 10%. These are real vulnerabilities that require a proactive defense.

Best Practice: Implement strict data minimization policies and use encryption. Train employees on the risks and set up secure, private environments for any sensitive work.
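One way to put data minimization into practice is to strip obvious identifiers from a prompt before it ever leaves your environment. The sketch below is a simplified illustration using Python's standard `re` module. The three patterns and the `redact` helper are assumptions made for this example, and they will not catch every form of sensitive data.

```python
import re

# Illustrative patterns only; real deployments usually need a broader, tested set.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text):
    """Replace matches of each pattern with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Contact jane.doe@example.com or 555-123-4567 about card 4111 1111 1111 1111."
safe_prompt = redact(prompt)
```

Pattern matching like this is a first line of defense rather than a guarantee, so it works best alongside employee training and a private deployment environment.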

5. Explainability and Transparency

The Question: Can we explain why our AI made that decision without sounding like we are making it up?

Why It Matters: Many advanced AI models operate as “black boxes”; they give you answers but refuse to show their work. That might sound impressive in a science fiction movie, but it’s a nightmare for real business applications.

Imagine explaining to an angry customer why your “black box” algorithm rejected their loan application. Good luck with that conversation. In high-stakes situations like medical diagnosis or financial decisions, “because the AI said so” isn’t going to cut it with regulators, customers, or your own conscience.

Best Practice: Use Explainable AI (XAI) tools to visualize and interpret the model’s logic. Ensure a clear audit trail for every output and decision the AI makes.
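An audit trail can start small. The following Python sketch records one entry per model output in an append-only JSON Lines file. The field names, the `build_audit_record` and `append_audit_record` helpers, and the choice to store hashes instead of raw text are all assumptions for illustration; your own logging and retention requirements may differ.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(model_version, prompt, output, reviewer=None):
    """Build one audit-trail entry for a single model decision.

    The prompt and output are stored as SHA-256 digests so the log can show
    which content was involved without keeping sensitive text in the log.
    """
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
        "reviewer": reviewer,  # None means no human has reviewed this output yet
    }


def append_audit_record(path, record):
    """Append the record as one JSON line, so earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record, sort_keys=True) + "\n")
```

Recording the model version alongside each decision is what lets you answer "which model said this, and when?" months later.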

6. Human Oversight

The Question: Where does the human-in-the-loop fit into our workflow, and what are the escalation points?

Why It Matters: While AI can automate tasks, human judgment remains essential for ensuring accuracy and ethical behavior. Automation bias can lead people to blindly trust an AI’s output without critical review. The risk is especially high in creative or analytical fields where the AI is seen as a “co-pilot.”

Best Practice: Define specific moments when human expertise matters most. Train your staff to question AI outputs, not just approve them. And create clear escalation paths for when things get complicated or controversial. Alongside governance, you’ll need to consider how to hire generative AI developers who can maintain responsible practices throughout the model lifecycle.
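Escalation points are easier to enforce when they are written down as explicit rules. The Python sketch below shows one possible shape for such a rule. The topic list, the confidence threshold, and the three routing labels are hypothetical values chosen for the example, and it assumes your system can attach a confidence score and a topic to each output.

```python
from dataclasses import dataclass


@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed to be a score between 0.0 and 1.0
    topic: str


# Hypothetical policy values; set these with your own risk owners.
HIGH_STAKES_TOPICS = {"medical", "legal", "lending"}
CONFIDENCE_THRESHOLD = 0.85


def route(output):
    """Decide whether an output ships automatically or goes to a person."""
    if output.topic in HIGH_STAKES_TOPICS:
        return "escalate_to_expert"  # high-stakes topics always get expert review
    if output.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # low confidence goes to a regular reviewer
    return "auto_approve"
```

Checking the topic before the confidence score is a deliberate choice here: a confident answer on a high-stakes topic still reaches a person.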

7. Compliance and Regulation

The Question: Are we prepared to comply with current and emerging AI regulations, and is our governance framework adaptable?

Why It Matters: The legal landscape for AI is a moving target. What’s perfectly legal today might trigger massive fines tomorrow. A rigid governance framework will quickly become outdated. Leaders need to build a system that can evolve with new laws, whether they are focused on data privacy, intellectual property, or algorithmic transparency.

For example, the EU AI Act, which was passed by the European Parliament in March 2024, introduces strict requirements for high-risk AI systems and sets different timelines for various obligations, highlighting the need for a flexible governance model.

Best Practice: Assign someone smart to watch the regulatory horizon full-time. Conduct regular compliance audits and design your framework with flexibility in mind.

8. Accountability Framework

The Question: Who is ultimately accountable if the AI system causes harm?

Why It Matters: This is a question of legal and ethical ownership. If an AI system makes a critical error that harms a customer or leads to a business loss, who is responsible? Is it the developer, the product manager, the C-suite, or the model provider? Without a clear answer, you create a dangerous vacuum of responsibility.

Best Practice: Define and assign clear ownership roles for every AI system. Create a clear accountability framework that outlines who is responsible for the AI’s performance, maintenance, and impact.

9. Environmental and Resource Impact

The Question: How energy-intensive is our AI model, and are we considering sustainability?

Why It Matters: Training and running large AI models require massive amounts of power. For any company committed to ESG (Environmental, Social, and Governance) goals, ignoring this isn’t an option. It’s a reputational and financial risk that’s only going to grow.

Best Practice: Prioritize model optimization and use energy-efficient hardware. Consider green AI solutions and choose cloud models that run with sustainability in mind.

10. Continuous Monitoring and Risk Management

The Question: Do we have systems for ongoing monitoring, auditing, and retraining to manage evolving risks?

Why It Matters: The risks associated with AI don’t end at deployment. A model’s performance can “drift” over time as real-world data changes, and new vulnerabilities can emerge. Without a system for Generative AI risk management and continuous monitoring, your AI system can become a liability without you even realizing it.

Best Practice: Implement AI lifecycle governance. Use automated tools to monitor for model drift, detect anomalies, and trigger alerts. Establish a schedule for regular retraining and auditing to ensure the model remains accurate and fair.
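As one example of automated drift monitoring, the sketch below computes the Population Stability Index (PSI) between a baseline sample and a recent production sample of a single numeric feature. PSI is just one of several drift measures you could use. The function name, the bin count, and the small floor applied to empty bins are assumptions for this illustration.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of a numeric feature using PSI.

    `expected` is the baseline sample (for example, training-time data) and
    `actual` is a recent production sample. Bin edges come from the baseline.
    """
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # avoid zero width when all values match

    def bin_shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            index = min(max(index, 0), bins - 1)  # clamp out-of-range values
            counts[index] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    expected_shares = bin_shares(expected)
    actual_shares = bin_shares(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_shares, actual_shares)
    )


baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]  # hypothetical shifted sample
psi = population_stability_index(baseline, recent)
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a sign of significant shift, but the right alert threshold depends on your data and should be validated before you rely on it.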

Have any other questions in mind? Worry not. We are here to address that and help you ensure responsible AI deployment. Contact us for a detailed AI assessment and roadmap creation.

Common Generative AI Compliance Pitfalls and How to Avoid Them

Now that you know the critical questions to ask before deploying AI, you are already ahead of most organizations. But even armed with the right questions, it’s still surprisingly easy to overlook the risks hiding behind the benefits. Many organizations dive in without a clear understanding of the regulatory minefield they are entering. Here are some of the most common generative AI compliance pitfalls you may encounter and practical advice on how to steer clear of them.

Pitfall 1: Ignoring Data Provenance and Bias

A major mistake is assuming your AI model is a neutral tool. In reality, it’s a reflection of the data it was trained on, and that data is often biased and full of copyright issues. This can lead to your AI system making unfair decisions or producing content that infringes on someone’s intellectual property. This is a critical area of Generative AI legal risks.

- How to avoid it: Before you even think about deployment, perform a thorough Generative AI risk assessment. Use a Generative AI governance framework to vet the training data.

Pitfall 2: Lack of a Clear Accountability Framework

When an AI system makes a costly mistake, who takes the blame? In many companies, the answer is not clear. Without a defined responsible AI deployment framework, you end up with a vacuum of responsibility, which can lead to finger-pointing and chaos during a crisis.

- How to avoid it: Clearly define who is responsible for the AI’s performance, maintenance, and impact from the start. An executive guide to AI governance should include a section on assigning clear ownership for every stage of the AI lifecycle.

Pitfall 3: Failing to Keep Up with Evolving Regulations

The regulatory landscape for AI is changing incredibly fast. What was permissible last year might land you in legal trouble today. Companies that operate with a static compliance mindset are setting themselves up for fines and legal action.

- How to avoid it: Treat your compliance efforts as an ongoing process, not a one-time project. Implement robust Generative AI compliance protocols and conduct a regular AI governance audit to ensure you are staying ahead of new laws. An AI ethics checklist for leaders should always include a review of the latest regulations.

Pitfall 4: Neglecting Human Oversight and Transparency

It’s tempting to let an AI handle everything, but this can lead to what’s known as “automation bias,” where employees blindly trust the AI’s output without question. The lack of a human-in-the-loop can be a major violation of Generative AI ethics for leaders and create a huge liability.

- How to avoid it: Ensure your Generative AI impact assessment includes a plan for human oversight. You need to understand how to explain the AI’s decisions, especially for high-stakes applications. This is all part of mitigating LLM risks in enterprises.

Let our AI experts conduct a comprehensive AI governance audit to ensure your generative AI model is secure and compliant.

How to Embed Responsible AI Throughout Generative AI Development

You can’t just check the “responsible AI” box once and call it done. Smart companies weave these practices into every stage of their AI projects, from the first brainstorming session to daily operations. Here is how they do it right from start to end:

- Planning Stage: Before anyone touches code, figure out what could go wrong. Identify potential risks, understand the needs of different stakeholders, and detect ethical landmines early. It’s much easier to change direction when you’re still on paper than when you have a half-built system.

- Building Stage: Your developers should be asking “Is this fair?” just as often as “Does this work?” Build fairness testing into your regular coding routine. Test for bias like you’d test for bugs. Make security and privacy part of your standard development checklist, not something you bolt on later.

- Launch Stage: Deployment day isn’t when you stop paying attention; it’s when you start paying closer attention. Set up monitoring systems that actually work. Create audit schedules you’ll stick to. Document your decisions so you can explain them later when someone inevitably asks, “Why did you do it that way?”

- Daily Operations: AI systems change over time, whether you want them to or not. Data shifts, regulations change, and business needs evolve. Schedule regular check-ups for your AI just like you would for any critical business system. Update policies when laws change. Retrain models when performance drifts.

The Lucrative ROI of Responsible AI Adoption

Adhering to the Generative AI compliance checklist is not an extra step. The return on investment for a proactive, responsible AI strategy is immense. You’ll reduce long-term costs from lawsuits and fines, and by building trust, you’ll increase customer adoption and loyalty. Over time, the benefits of adopting responsible AI practices far outweigh the initial costs. Here’s how:

- Risk Reduction: Prevent costly legal battles and fines from compliance failures or misuse of data.

- Brand Loyalty: Companies that build trust by adhering to ethical AI standards enjoy higher customer retention.

- Operational Efficiency: AI systems aligned with business goals and ethical standards tend to be more reliable, secure, and cost-efficient.

- Competitive Advantage: Data-driven leaders leveraging responsible AI are better positioned to attract customers, investors, and talent in an increasingly ethical marketplace.

The Appinventiv Approach to Responsible AI: How We Turn Strategy Into Reality

At Appinventiv, we believe that innovation and responsibility go hand in hand. We stand by you not just to elevate your AI digital transformation efforts, but to do it right at every step.

We help our clients turn this responsible AI checklist from a list of daunting questions into an actionable, seamless process. Our skilled team of 1600+ tech experts answers all the AI governance questions and follows the best practices to embed them in your AI projects. Our Gen AI services are designed to support you at every stage:

- AI Strategy & Consulting: Our Generative AI consulting services help you plan the right roadmap. We work with you to define your AI objectives, identify potential risks, and build a roadmap for responsible deployment.

- Secure AI Development: Our Generative AI development services are designed on a “privacy-by-design” principle, ensuring your models are secure and your data is protected from day one.

- Generative AI Governance Frameworks: We help you design and implement custom AI data governance frameworks tailored to your specific business needs. This approach helps ensure compliance and accountability.

- Lifecycle Support: AI systems aren’t like traditional software that you can deploy and forget about. They need regular check-ups, performance reviews, and updates to stay trustworthy and effective over time. At Appinventiv, we monitor, audit, and update your AI systems for lasting performance and trust.

How We Helped a Global Retailer Implement Responsible AI

We worked with a leading retailer to develop an AI-powered recommendation engine. By embedding responsible AI principles from the beginning, we ensured the model was bias-free, secure, and explainable, thus enhancing customer trust and compliance with privacy regulations.

Ready to build your responsible AI framework? Contact our AI experts today and lead with confidence.

FAQs

Q. How is governing generative AI different from traditional software?

A. Traditional software follows predictable, deterministic rules, but generative AI is different. Its outputs can be unpredictable, creating unique challenges around hallucinations, bias, and copyright. The “black box” nature of these large models means you have to focus more on auditing their outputs than just their code.

Q. What are the most common risks overlooked when deploying generative AI?

A. Many leaders overlook intellectual property infringement, since models can accidentally replicate copyrighted material. Prompt injection attacks are also often missed, as they can manipulate the model’s behavior. Lastly, the significant environmental impact and long-term costs of training and running these large models are frequently ignored.

Q. How do we measure the effectiveness of our responsible AI practices?

A. You can measure effectiveness both quantitatively and qualitatively. Track the number of bias incidents you have detected and fixed. Monitor the time it takes to resolve security vulnerabilities. You can also get qualitative feedback by conducting surveys to gauge stakeholder trust in your AI systems.

Q. What is a responsible AI checklist, and why do we need one?

A. A responsible AI checklist is a structured set of questions that helps companies assess AI risks and governance gaps before, during, and after deployment. It is essential for deploying AI systems safely, ethically, and legally.

Q. What are the six core principles of Generative AI impact assessment?

A. The Generative AI deployment checklist aligns with these six core principles:

- Fairness

- Reliability & Safety

- Privacy & Security

- Inclusiveness

- Transparency

- Accountability
