- The Market Momentum Behind RAG Integration in Enterprise AI

- Sectors Adopting First and Why

- RAG Integration in Action: How Enterprises Are Turning Data Into Measurable Outcomes

- Driving Impact Across Core Functions

- Proving Value Before Scaling

- Key Benefits of RAG Integration for Business Applications

- 1. Better Decisions, Backed by Facts

- 2. Built-In Control and Compliance

- 3. Less Manual Work, More Flow

- 4. Real Cost Savings

- 5. Works With What You Already Have

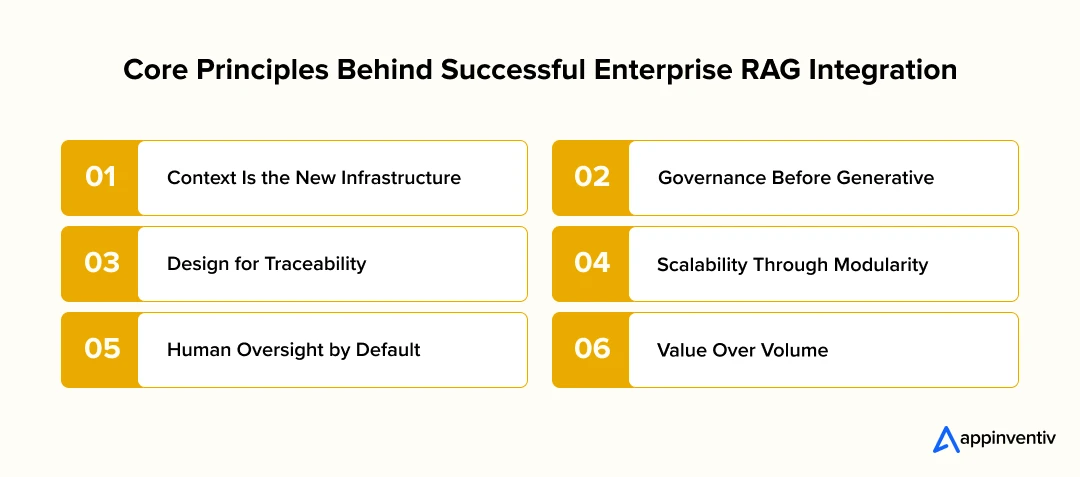

- Enterprise RAG Integration Principles Businesses Must Know Of

- 1. Context Is the New Infrastructure

- 2. Governance Before Generative

- 3. Design for Traceability

- 4. Scalability Through Modularity

- 5. Human Oversight by Default

- 6. Value Over Volume

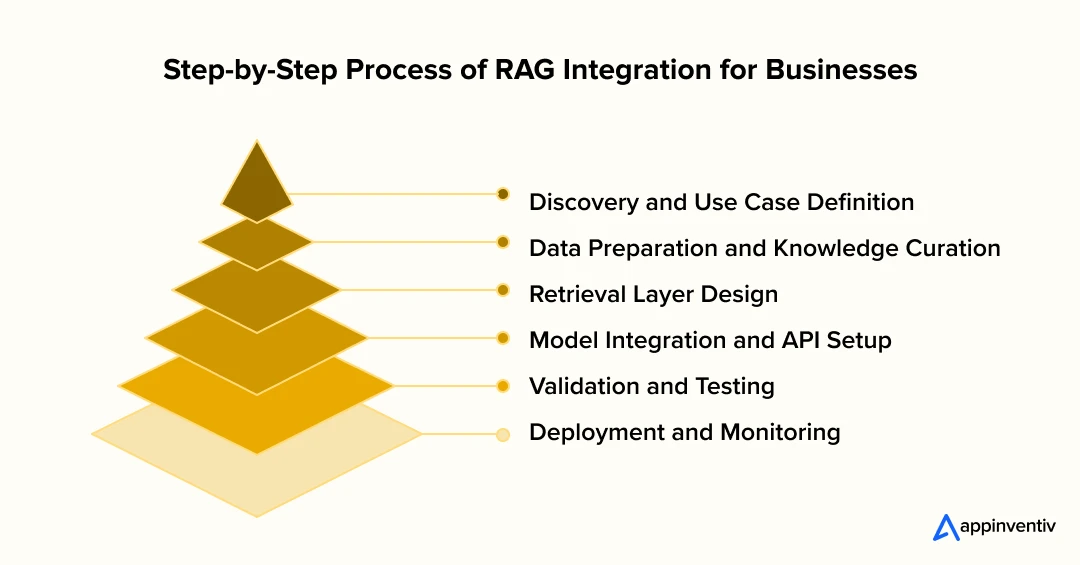

- Step by Step RAG Integration Process for Businesses

- 1. Discovery and Use Case Definition

- 2. Data Preparation and Knowledge Curation

- 3. Retrieval Layer Design

- 4. Model Integration and API Setup

- 5. Validation and Testing

- 6. Deployment and Monitoring

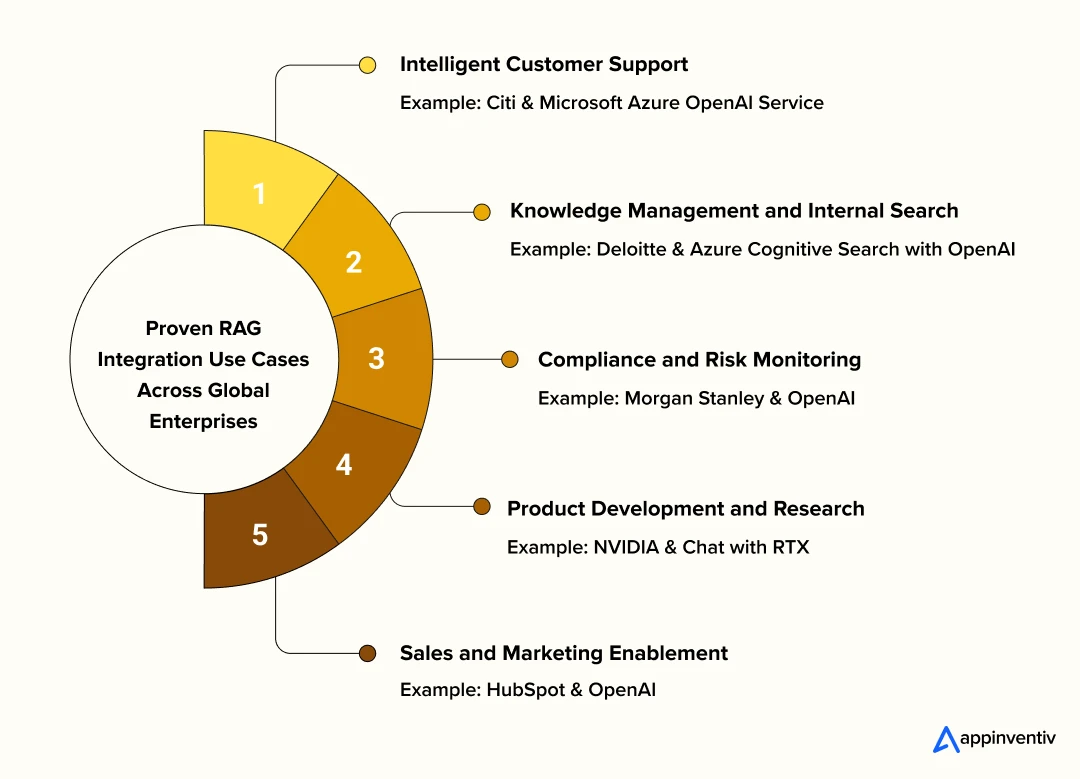

- Real-World RAG Integration Use Cases: How Leading Enterprises Are Getting It Right

- 1. Intelligent Customer Support

- 2. Knowledge Management and Internal Search

- 3. Compliance and Risk Monitoring

- 4. Product Development and Research

- 5. Sales and Marketing Enablement

- Cost of Integrating RAG into Applications

- Average Cost Range

- Factors That Influence the Cost

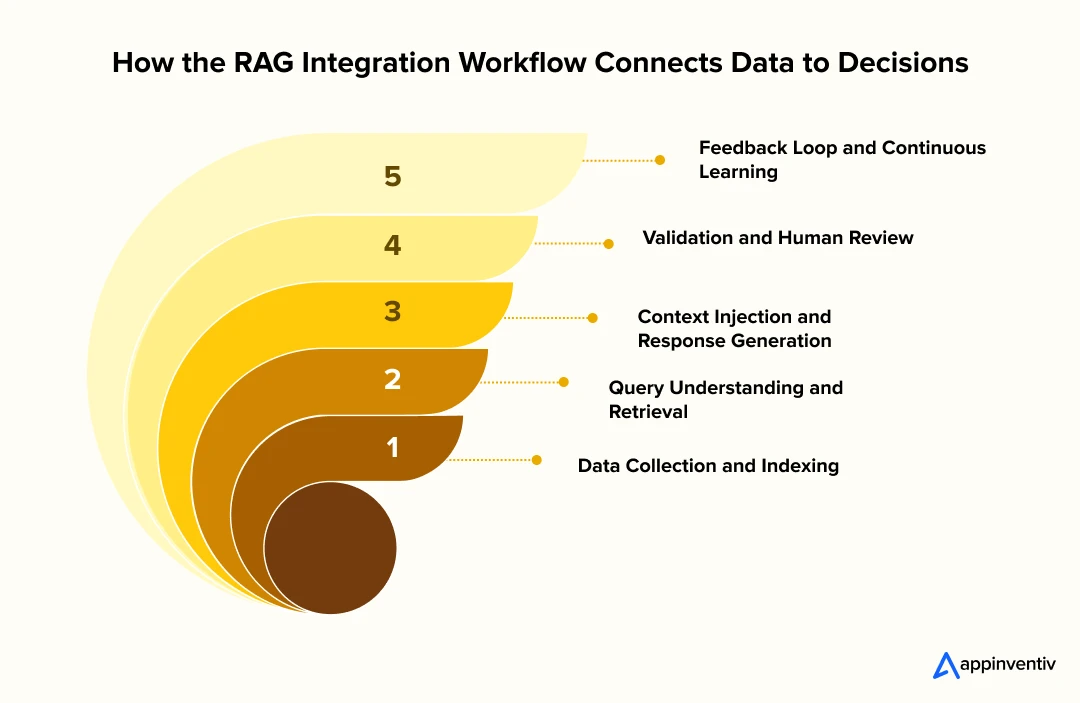

- Inside the RAG Integration Workflow: From Data to Decision

- 1. Data Collection and Indexing

- 2. Query Understanding and Retrieval

- 3. Context Injection and Response Generation

- 4. Validation and Human Review

- 5. Feedback Loop and Continuous Learning

- RAG Integration Challenges and How to Address Them

- Start Your RAG Integration Journey with Appinventiv

- FAQs

- RAG integration for business applications connects AI with trusted enterprise data for higher accuracy.

- Enterprises use RAG to improve customer support, compliance, and decision-making.

- The RAG integration process covers discovery, data curation, retrieval setup, and continuous learning.

- Cloud-based and on-prem RAG setups both support secure enterprise AI deployments.

- Integration costs vary from $35,000 to $400,000 depending on scope and data size.

- Global leaders like Citi, Deloitte, and Morgan Stanley are already using RAG to power enterprise intelligence.

Every enterprise today sits on an ocean of data: documents, emails, customer chats, internal wikis. Yet when someone asks a simple question like “What did we decide in the Q1 policy review?”, the system still goes blank. Teams waste hours digging through folders, while leadership wonders why their “AI investments” haven’t actually made work easier. The real problem isn’t lack of AI; it’s the disconnect between information retrieval and meaningful response generation. That’s where RAG integration for business applications steps in.

Across boardrooms, the conversation has shifted from “Should we use AI?” to “How do we make it smarter with our own data?” Pure large language models sound impressive, but they hallucinate when context runs thin. Retrieval-Augmented Generation integration, on the other hand, brings precision by grounding model responses in verified enterprise knowledge. Think of it as giving your AI the company’s memory: instantly searchable, always accurate, and secure within your existing tech stack.

For CIOs and CTOs, this is less about chasing another trend and more about protecting ROI. Enterprises have already seen value in RAG integration with enterprise software, whether it’s for customer support automation, compliance audits, or knowledge management. But success doesn’t come from slapping a RAG API on top of an LLM; it requires thoughtful RAG system integration, aligning your data architecture, vector databases, and model workflows so everything moves in sync.

The challenge? Integrating RAG properly is not a plug-and-play task. From choosing between cloud-based and on-prem setups to ensuring multi-LLM RAG integration for reliability, every decision shapes your speed, cost, and scalability. Add concerns around latency, security, and governance, and it becomes clear why most pilots stall before production. The enterprises that win are the ones who treat RAG as a system, not a side experiment.

That’s exactly what this blog will help you master. We’ll break down the step-by-step RAG integration process, show you how the architecture actually works, and explain the cost of integrating RAG into applications from pilot to full scale. You’ll see what makes enterprise RAG integration successful, where most teams go wrong, and how to estimate real business impact before you even write the first line of code.

So, if your goal is to make AI work with your data and not against it, this guide will show you how to build a retrieval-grounded, compliant, and scalable foundation. By the end, you’ll know exactly how to integrate RAG in your app, how much it costs, and what to expect when you move from proof-of-concept to production.

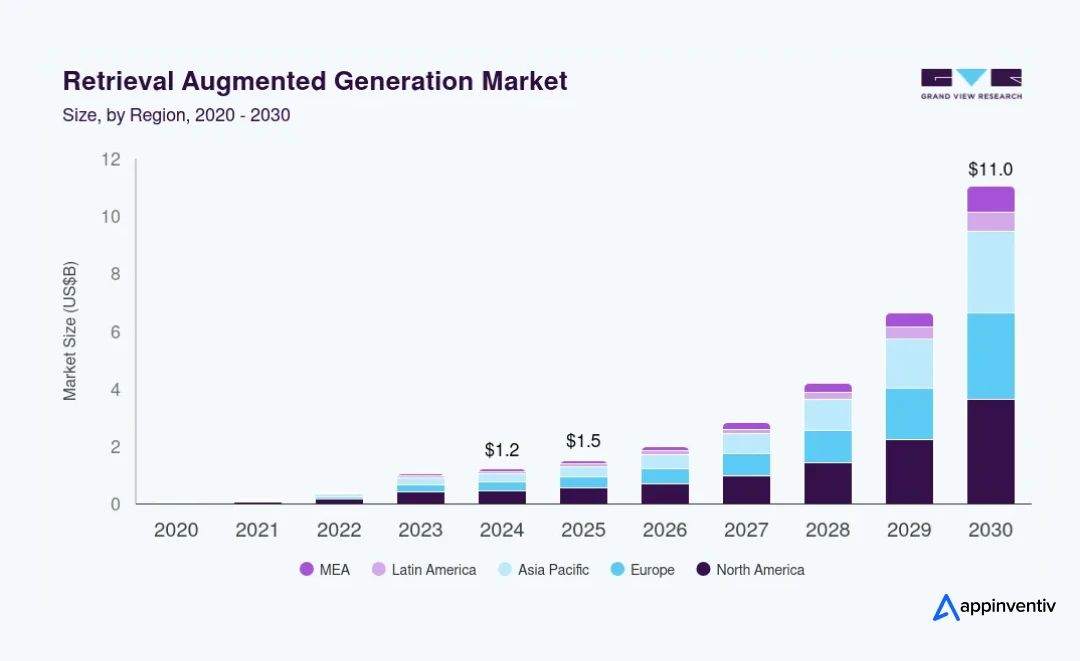

RAG adoption is exploding at 49.1% CAGR and rising. The enterprises moving now aren’t experimenting; they’re redefining how AI thinks with their data.

If you’re ready to turn retrieval into real competitive advantage, we’ll help you build the roadmap that gets you there first.

The Market Momentum Behind RAG Integration in Enterprise AI

In just a year, Retrieval-Augmented Generation has shifted from a technical curiosity to one of the most actively adopted GenAI frameworks in the enterprise world. According to Grand View Research, the global RAG market size was valued at $1.2 billion in 2024 and is projected to reach $11.0 billion by 2030, growing at a CAGR of 49.1% from 2025 to 2030. That’s one of the fastest growth rates across the entire AI ecosystem, faster than the standalone natural language processing (NLP) or machine learning segments.

The reason is simple: enterprises have realized that generic large language models alone can’t deliver context, accuracy, or compliance. As IDC points out, “Retrieval-augmented generation is making enterprise adoption of generative AI more feasible and practical by augmenting LLMs with enterprise data using search techniques.” Thus, forward-looking organizations are now using RAG integration for business applications to bridge their proprietary data and large language models, enabling smarter, grounded outputs that reflect real organizational knowledge.

In other words, RAG is the technology that finally makes AI enterprise-ready. It’s not about building another chatbot but about reengineering how knowledge flows inside the company. And that’s what’s driving budgets, pilots, and production deployments across industries.

Sectors Adopting First and Why

The early adopters tell an interesting story about where RAG integration for enterprise software is creating the most measurable value. McKinsey identifies four domains leading the charge: customer service, marketing, finance, and knowledge management. In each, RAG’s grounding mechanism directly reduces hallucinations and boosts trust.

- Customer Service: Companies are embedding RAG integration for apps in support workflows to retrieve real-time policy or product data, providing instant, accurate responses to customer queries. This alone can lift customer satisfaction scores by 15–25% while reducing call center load.

- Knowledge Management: Enterprises are building enterprise knowledge management copilots that let employees query internal wikis, documents, and CRMs with natural language, cutting research time in half.

- Finance & Legal: In highly regulated industries, RAG integration with LLMs ensures that responses stay compliant by retrieving only verified data sources, minimizing the risk of misinformation or non-compliant communication.

- Marketing & Sales: Drafting assistants powered by Retrieval-Augmented Generation integration help teams generate proposals, campaigns, and reports using approved content, speeding up turnaround while maintaining consistency.

According to Gartner, RAG is “a practical way to overcome the limitations of general large language models by making enterprise data and information available for LLM processing.” The firm notes that while RAG is conceptually simple, its implementation requires integrating multiple new technologies like vector databases, embedding models, and search pipelines. For now, that complexity serves as a competitive differentiator, but within the next 12–18 months, Gartner predicts it will become a baseline competency for any enterprise leveraging generative AI.

The momentum is undeniable. What began as an experiment in AI labs is now a strategic pillar in boardroom roadmaps. Enterprises aren’t just exploring RAG; they are investing in full-scale RAG system integration as a core part of digital transformation.

RAG Integration in Action: How Enterprises Are Turning Data Into Measurable Outcomes

The shift to Retrieval-Augmented Generation integration is no longer experimental; it is operational. Enterprises are now embedding RAG across business systems to convert scattered data into actionable intelligence. What started as pilot projects in customer service and knowledge management has evolved into enterprise-wide deployments that drive faster decisions and measurable ROI.

Driving Impact Across Core Functions

Customer Experience: RAG copilots retrieve verified answers from internal systems, improving response accuracy and cutting handling times.

Operations & Governance: Teams use enterprise RAG integration to access SOPs, vendor data, and audit logs in real time, improving compliance efficiency.

Finance & Strategy: Analysts combine structured and unstructured data through RAG-enabled tools to generate insights faster and reduce reporting delays.

Product & Engineering: Product teams access historical specs and feedback instantly, accelerating iteration and documentation cycles.

Proving Value Before Scaling

Most leaders begin with focused pilots, such as customer support, policy retrieval, or internal knowledge bots, to demonstrate early ROI. Once validated, these use cases scale across departments with minimal disruption, turning RAG into a shared enterprise capability rather than a standalone experiment.

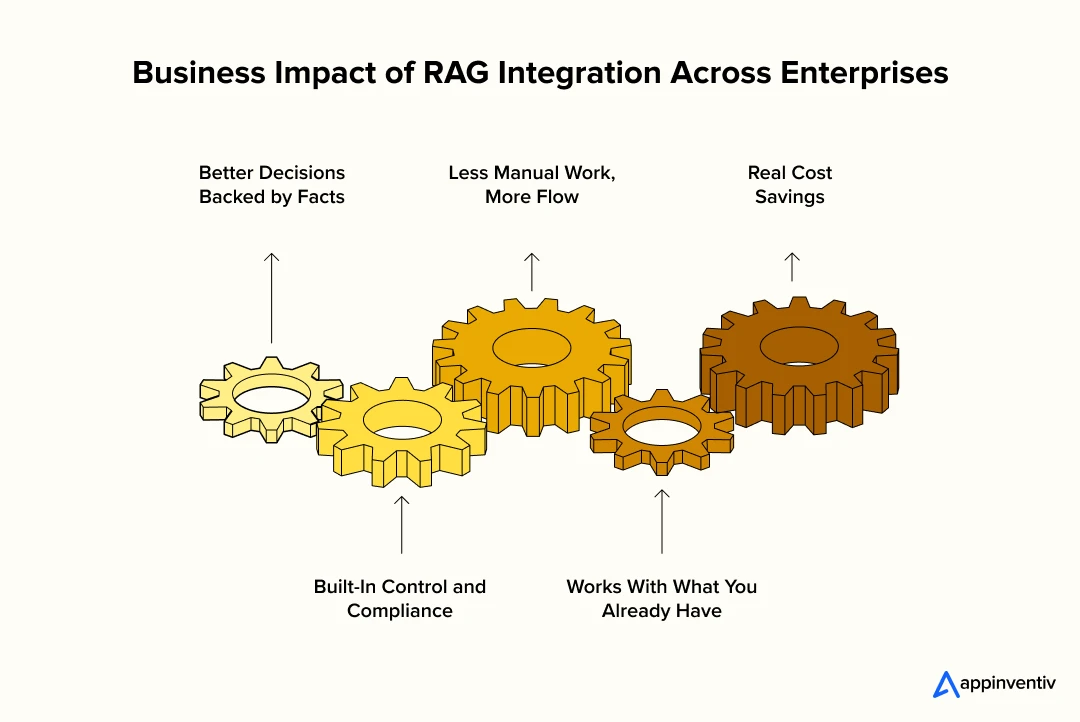

Key Benefits of RAG Integration for Business Applications

When companies bring Retrieval-Augmented Generation into their ecosystem, the difference shows up quickly. It’s not about adding another AI tool; it’s about getting real value from the data a business already owns. The right RAG setup helps teams find answers faster, make cleaner decisions, and work with confidence in the accuracy of every output.

1. Better Decisions, Backed by Facts

RAG connects every response to real company data. Instead of relying on generic information, it pulls from policies, reports, and historical records. Leaders can trust that the insights they see are grounded in their own systems, not assumptions. The result is quicker, fact-based decisions that hold up under scrutiny.

2. Built-In Control and Compliance

With RAG, enterprises decide exactly which data sources the model can use. Every answer is traceable, which means compliance teams can audit how and why an output was generated. It’s an easy way to stay aligned with privacy and regulatory standards while still moving fast.

3. Less Manual Work, More Flow

Across departments, people spend less time digging for information and more time acting on it. Customer agents get instant access to product or policy details, operations teams find SOPs in seconds, and analysts can pull insights from multiple systems without switching screens. The impact is simple: less friction, smoother workflows, and faster outcomes.

4. Real Cost Savings

Because RAG narrows what the model looks at before generating an answer, it reduces compute costs and token usage. Companies avoid the high bills tied to larger, unoptimized AI models. The gains are visible within weeks of deployment, making it one of the few AI investments that pays for itself early.

5. Works With What You Already Have

RAG fits into existing setups. It connects with CRMs, ERPs, knowledge bases, and analytics tools without forcing teams to rebuild their tech stack. IT leaders like it because it strengthens what’s already there instead of replacing it, keeping rollout timelines short and disruption minimal.

Enterprise RAG Integration Principles Businesses Must Know Of

Enterprises that succeed with Retrieval-Augmented Generation don’t treat it as another model integration. They treat it as an evolution of how knowledge is managed, governed, and accessed across the organization. These principles guide that transformation, ensuring every RAG initiative scales responsibly, stays compliant, and delivers long-term business value.

1. Context Is the New Infrastructure

In RAG systems, context is everything. The real performance lift doesn’t come from a bigger model but from better data structuring. Businesses that build clear, searchable, and well-labeled knowledge stores get faster, more accurate retrievals. Without context discipline, even the best models guess.

2. Governance Before Generative

Before an answer can be generated, access must be governed. Enterprises should define which data is retrievable, who can retrieve it, and under what conditions. Embedding these rules into the RAG architecture ensures every output is compliant by design, not by chance.
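
One way to picture "governed before generated" is a filter that runs ahead of any search. The sketch below is illustrative only: the `Document` class, the `allowed_roles` field, and the sample document IDs are hypothetical names, not part of any specific product.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    # Hypothetical access list: the roles cleared to retrieve this document.
    allowed_roles: frozenset = field(default_factory=frozenset)


def retrievable_for(role: str, documents: list[Document]) -> list[Document]:
    """Return only the documents this role is cleared to retrieve."""
    return [doc for doc in documents if role in doc.allowed_roles]


corpus = [
    Document("hr-001", "Leave policy for all staff.", frozenset({"employee", "hr"})),
    Document("hr-014", "Salary bands by grade.", frozenset({"hr"})),
]

# Filtering happens before search, so restricted text never reaches the prompt.
visible = retrievable_for("employee", corpus)
print([doc.doc_id for doc in visible])
```

Applying the rule at retrieval time, rather than after generation, means a restricted document can never leak into an answer through the model's context.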

3. Design for Traceability

Trust comes from transparency. Every RAG output should link back to its original source – document, record, or database entry. This traceability gives leaders confidence that decisions made on AI insights are defensible, auditable, and policy-aligned.
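
As a rough sketch of what traceability can look like in code, an answer can travel together with the IDs of the passages that were placed in the prompt. The class names and source IDs here are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source_id: str  # hypothetical identifier, e.g. a document or record key
    text: str


@dataclass
class TracedAnswer:
    text: str
    sources: list[str]


def attach_sources(answer_text: str, passages: list[Passage]) -> TracedAnswer:
    """Bundle a generated answer with the de-duplicated IDs of the passages used."""
    seen: list[str] = []
    for passage in passages:
        if passage.source_id not in seen:
            seen.append(passage.source_id)
    return TracedAnswer(text=answer_text, sources=seen)


used = [
    Passage("policy-2024-03", "Refunds are processed within 14 days."),
    Passage("policy-2024-03", "Refunds require a receipt."),
    Passage("faq-17", "Contact support to start a refund."),
]
answer = attach_sources("Refunds take up to 14 days and need a receipt.", used)
print(answer.sources)
```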

4. Scalability Through Modularity

RAG isn’t a single system; it’s an ecosystem. Modular design backed by APIs, vector databases, and microservices allows teams to plug in new models, data sources, or business applications without rebuilding from scratch. This keeps integration flexible and cost-effective as the enterprise evolves.
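
A minimal sketch of that modularity, assuming the pipeline depends only on two small interfaces. `KeywordRetriever` and `EchoGenerator` are toy stand-ins invented for this example; in practice they would wrap a vector database and an LLM client.

```python
from typing import Protocol


class Retriever(Protocol):
    def retrieve(self, query: str) -> list[str]: ...


class Generator(Protocol):
    def generate(self, query: str, context: list[str]) -> str: ...


class KeywordRetriever:
    """Toy retriever: returns documents sharing at least one word with the query."""

    def __init__(self, documents: list[str]) -> None:
        self.documents = documents

    def retrieve(self, query: str) -> list[str]:
        terms = set(query.lower().split())
        return [d for d in self.documents if terms & set(d.lower().split())]


class EchoGenerator:
    """Stand-in for an LLM client: it only reports how much context it received."""

    def generate(self, query: str, context: list[str]) -> str:
        return f"{query} -> answered from {len(context)} passage(s)"


class RagPipeline:
    def __init__(self, retriever: Retriever, generator: Generator) -> None:
        self.retriever = retriever
        self.generator = generator

    def ask(self, query: str) -> str:
        return self.generator.generate(query, self.retriever.retrieve(query))


pipeline = RagPipeline(KeywordRetriever(["refund policy", "shipping times"]), EchoGenerator())
print(pipeline.ask("refund"))
```

Because `RagPipeline` only knows the two interfaces, either side can be swapped without touching the other.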

5. Human Oversight by Default

RAG is meant to support decision-making, not replace it. Keeping humans in the loop ensures outputs are validated before action is taken. Continuous feedback from domain experts helps fine-tune retrieval logic and model behavior, creating a system that learns responsibly over time.

6. Value Over Volume

Enterprises should measure RAG’s success by outcomes, not deployment size. The focus should stay on tangible metrics – faster response times, improved accuracy, lower compliance risk, and measurable ROI. Scaling without clear business value only adds cost without impact.

Step by Step RAG Integration Process for Businesses

Bringing Retrieval-Augmented Generation into an enterprise environment is a planned journey, not a one-time setup. It moves through several stages where data, technology, and business goals align to deliver reliable, compliant, and measurable outcomes.

1. Discovery and Use Case Definition

Every successful RAG integration for business applications starts with a defined purpose. The first task is to identify where retrieval and generation together can add value. This could be customer service, policy review, knowledge search, or compliance documentation. Teams outline clear business goals, assess the current data landscape, and agree on what success will look like once RAG is in place.

2. Data Preparation and Knowledge Curation

The foundation of any RAG system lies in its data. Businesses clean and organize information stored across CRMs, wikis, document libraries, and archives. Creating consistent tags and embeddings ensures that the AI retrieves only relevant and updated content. Decisions are also made about what data should be indexed and what should remain private, keeping security and governance intact.
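
The curation step might look roughly like the sketch below: normalize text, skip private and duplicate documents, and attach a consistent tag. The `RawDoc` and `CuratedDoc` shapes and the `source:` tag format are assumptions made for illustration.

```python
import hashlib
import re
from dataclasses import dataclass, field


@dataclass
class RawDoc:
    source: str
    text: str
    private: bool = False


@dataclass
class CuratedDoc:
    source: str
    text: str
    tags: list[str] = field(default_factory=list)


def normalize(text: str) -> str:
    """Collapse runs of whitespace so near-identical copies hash the same."""
    return re.sub(r"\s+", " ", text).strip()


def curate(raw_docs: list[RawDoc]) -> list[CuratedDoc]:
    seen_hashes: set[str] = set()
    curated: list[CuratedDoc] = []
    for doc in raw_docs:
        if doc.private:
            continue  # governance: private content is never indexed
        text = normalize(doc.text)
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if not text or digest in seen_hashes:
            continue  # skip empty documents and case-insensitive duplicates
        seen_hashes.add(digest)
        curated.append(CuratedDoc(doc.source, text, tags=[f"source:{doc.source}"]))
    return curated


docs = [
    RawDoc("wiki", "Refund  policy:\n14 days."),
    RawDoc("crm", "refund policy: 14 days."),
    RawDoc("hr", "Salary bands.", private=True),
]
print(curate(docs))
```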

3. Retrieval Layer Design

This stage brings the RAG pipeline integration to life. A vector database is created or connected to, forming the memory layer that allows the AI to pull context at the right time. The way data is chunked, embedded, and indexed defines how fast and how accurately the system can respond. Some enterprises choose hybrid search that mixes semantic and keyword-based retrieval to improve precision.
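
To make the hybrid idea concrete, here is a small sketch that blends a semantic score with a keyword score. The weighted sum and the `alpha` parameter are one common choice rather than a prescribed method, and the semantic scores are hypothetical values standing in for what an embedding model would return.

```python
def keyword_score(query: str, text: str) -> float:
    """Fraction of distinct query terms that appear in the text."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def hybrid_rank(query, chunks, semantic_scores, alpha=0.5):
    """Blend semantic and keyword scores; alpha weights the semantic side."""
    scored = []
    for chunk, semantic in zip(chunks, semantic_scores):
        blended = alpha * semantic + (1 - alpha) * keyword_score(query, chunk)
        scored.append((blended, chunk))
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in ranked]


chunks = ["travel expense policy", "expense report form ER-7", "office travel tips"]
# Hypothetical similarity scores, as an embedding model might return them.
semantic = [0.80, 0.60, 0.70]
print(hybrid_rank("form ER-7", chunks, semantic))
```

Exact identifiers such as form codes are where keyword matching tends to help: the chunk with the lowest semantic score rises to the top once the literal match is counted.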

4. Model Integration and API Setup

The next step involves RAG API integration, where the retrieval layer connects with the chosen large language model. Depending on needs, enterprises can use a single LLM or adopt multi-LLM RAG integration for better control and reliability. Many organizations begin with a cloud-based RAG integration setup to simplify scaling and manage infrastructure through API gateways that keep internal data secure.
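
One simple reading of multi-LLM reliability is ordered fallback: try a primary model and move to a backup if the call fails. The sketch below assumes each provider is wrapped as a plain function; `primary_model` and `backup_model` are placeholders, not real client calls.

```python
from typing import Callable

LlmCall = Callable[[str], str]


def generate_with_fallback(prompt: str, providers: list[tuple[str, LlmCall]]) -> tuple[str, str]:
    """Try each provider in order; return (provider_name, answer) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real client would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def primary_model(prompt: str) -> str:
    raise TimeoutError("simulated outage")  # stand-in for a failing API call


def backup_model(prompt: str) -> str:
    return f"[backup] answer to: {prompt}"


name, answer = generate_with_fallback(
    "Summarize the Q1 policy review.",
    [("primary", primary_model), ("backup", backup_model)],
)
print(name, answer)
```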

5. Validation and Testing

Before the system reaches end users, it goes through validation. In this phase of RAG integration for apps, teams test retrieval accuracy, latency, and response quality to make sure the model stays grounded in the right data. Selected departments such as customer support or risk management often pilot the system to confirm usefulness and trust. Their feedback helps refine prompts, filters, and retrieval logic.
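
Retrieval accuracy and latency can be measured with a small labelled test set. The sketch below computes hit rate at k and mean latency; the toy index, the questions, and the document IDs are invented for illustration.

```python
import time


def evaluate(retrieve, test_cases, k=3):
    """Return (hit_rate, mean_latency_seconds) over labelled test questions."""
    hits = 0
    total_seconds = 0.0
    for question, expected_id in test_cases:
        started = time.perf_counter()
        results = retrieve(question)[:k]
        total_seconds += time.perf_counter() - started
        hits += expected_id in results  # True counts as 1
    return hits / len(test_cases), total_seconds / len(test_cases)


# Toy retriever for illustration: maps a keyword to ranked document IDs.
INDEX = {"refund": ["policy-12", "faq-3"], "leave": ["hr-7"]}


def toy_retrieve(question: str) -> list[str]:
    for keyword, doc_ids in INDEX.items():
        if keyword in question.lower():
            return doc_ids
    return []


cases = [
    ("How do refunds work?", "policy-12"),
    ("What is the leave policy?", "hr-7"),
    ("Who approves travel?", "travel-1"),
]
hit_rate, latency = evaluate(toy_retrieve, cases)
print(f"hit rate@3 = {hit_rate:.2f}, mean latency = {latency * 1000:.3f} ms")
```

Running the same test set after every change to prompts, filters, or chunking gives pilot teams a like-for-like comparison.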

6. Deployment and Monitoring

After testing, the RAG setup is deployed within existing business applications. Continuous monitoring is essential. Teams observe retrieval precision, user satisfaction, and performance trends. As the organization grows and new data sources come online, the RAG framework is updated to stay relevant. A mature enterprise RAG integration evolves with the business, learning from usage and feedback to deliver consistent long-term value.

Real-World RAG Integration Use Cases: How Leading Enterprises Are Getting It Right

Retrieval-Augmented Generation has moved beyond pilots and into full production across industries. Leading enterprises are using it to connect internal knowledge with intelligent automation, improving accuracy, compliance, and decision speed. These are some of the most common RAG integration use cases, along with examples of businesses already leveraging them.

1. Intelligent Customer Support

RAG has become the backbone of next-generation customer service. By connecting large language models with verified internal data, support systems can resolve customer issues faster and more accurately. Instead of relying on static FAQs, RAG-driven assistants pull live answers from policy databases and ticket histories.

Example:

Citi adopted a RAG system integration approach in partnership with Microsoft Azure OpenAI Service to assist contact center agents with real-time information retrieval. The system provides contextual responses drawn from internal knowledge bases, helping agents improve accuracy and cut average handle time.

2. Knowledge Management and Internal Search

Enterprises with massive document repositories are using enterprise RAG integration to simplify internal search. Employees can ask natural questions and get sourced answers from verified company documents, reports, and intranet pages, without needing to know where that information is stored.

Example:

Deloitte uses Retrieval-Augmented Generation integration within its internal knowledge management platform, combining Azure Cognitive Search with OpenAI models. Consultants can query proposals, project data, and research materials through conversational AI, saving significant time on client preparation and documentation.

3. Compliance and Risk Monitoring

Regulated industries like banking and healthcare use RAG to ensure that AI-generated outputs stay compliant. By retrieving policy clauses and legal definitions before generating responses, the model avoids hallucinations and provides traceable references for every answer.

Example:

Morgan Stanley partnered with OpenAI to deploy a RAG-based wealth management assistant for financial advisors. The system retrieves insights from the firm’s 100,000+ internal research documents, ensuring all client responses are accurate and compliant with regulatory guidelines. This implementation is one of the first enterprise-grade RAG systems in global finance.

4. Product Development and Research

RAG supports faster innovation by helping product and R&D teams access technical papers, design history, and customer insights instantly. It turns research and feedback loops into live reference systems that reduce development time.

Example:

NVIDIA has built RAG pipelines into its internal documentation and developer support platforms. The company’s “Chat with RTX” tool allows users to connect custom data sources locally, simulating enterprise-grade RAG in a secure environment. This approach improves how engineers find code references and model documentation, cutting hours of search time.

5. Sales and Marketing Enablement

Marketing and sales teams are using RAG to build personalized, data-backed content quickly. By retrieving the latest campaign results, pricing data, and product details, RAG ensures every piece of outbound content is both accurate and consistent with the company’s messaging.

Example:

HubSpot implemented RAG integration for business applications using its proprietary AI engine and OpenAI models. The system retrieves live data from CRM and marketing analytics sources to draft personalized emails, proposals, and recommendations for users. This blend of retrieval and generation has boosted campaign response rates and reduced manual editing.

Cost of Integrating RAG into Applications

The cost of building a Retrieval-Augmented Generation setup depends on how deeply it is integrated into existing systems and how much data it needs to handle. There is no single price tag that fits every enterprise, but understanding the moving parts helps plan budgets with confidence.

Average Cost Range

For small to mid-scale pilots, the cost of integrating RAG into applications generally ranges from $35,000 to $80,000.

On the other hand, full-scale enterprise RAG integration for apps that involve large data sets, multi-model pipelines, and cloud infrastructure typically ranges between $100,000 and $400,000 or more, depending on complexity and ongoing maintenance needs.

Factors That Influence the Cost

Scope of Integration

The number of use cases and applications directly affects cost. A single retrieval bot in a customer support system is far simpler than connecting RAG to multiple enterprise platforms such as CRM, ERP, and analytics tools.

Data Volume and Quality

Cleaning, tagging, and embedding large or unstructured data sets adds time and cost. Better-quality data reduces post-deployment tuning and speeds up time to value.

Model Selection

Choosing between open-source, custom, or commercial LLMs changes both initial and recurring expenses. Multi-LLM setups offer reliability but increase infrastructure and monitoring costs.

Infrastructure and Deployment Type

Cloud-based RAG integration is usually faster and less capital-intensive at the start. On-premise or hybrid deployments come with higher infrastructure and security costs but offer greater data control.

Testing and Governance

Validation, compliance review, and monitoring frameworks can add to upfront costs but are essential for regulated sectors. These investments prevent rework later and ensure data integrity.

Maintenance and Scaling

After launch, enterprises need to budget for updates, retraining, and infrastructure scaling. Continuous optimization keeps the system accurate and aligned with new data sources.

Enterprises often begin with a three-month pilot in one workflow to measure ROI before expanding. Most see payback within six to nine months once retrieval accuracy improves and manual work decreases. Clear tracking of cost savings, efficiency gains, and customer satisfaction helps justify broader rollout.
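
Payback can be estimated with simple arithmetic before a pilot starts. In the sketch below, $80,000 is the top of the pilot range quoted earlier in this section; the monthly savings and run-cost figures are hypothetical inputs chosen for illustration, not benchmarks.

```python
def payback_months(upfront_cost: float, monthly_savings: float, monthly_run_cost: float = 0.0) -> float:
    """Months until cumulative net savings cover the upfront cost."""
    net = monthly_savings - monthly_run_cost
    if net <= 0:
        raise ValueError("net monthly savings must be positive for payback to occur")
    return upfront_cost / net


# Upfront cost from the pilot range above; the other two values are made up.
months = payback_months(upfront_cost=80_000, monthly_savings=14_000, monthly_run_cost=4_000)
print(f"payback in {months:.1f} months")
```

Swapping in measured savings from the pilot turns this from a guess into a tracked number.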

Whether you’re testing a small pilot or planning full-scale enterprise deployment, understanding the real cost is the first step.

Our experts can help you estimate investment and timeline before you even start building.

Inside the RAG Integration Workflow: From Data to Decision

Once the planning and setup are complete, the Retrieval-Augmented Generation system begins to work quietly behind the scenes. The goal is simple: connect business data to intelligent reasoning so that every answer the model generates is grounded in truth. A well-designed RAG integration workflow makes this connection seamless and reliable.

1. Data Collection and Indexing

The process starts with collecting information from approved internal sources such as CRMs, policy repositories, knowledge bases, or ticketing tools. The content is broken into smaller segments, converted into embeddings, and stored in a vector database. This indexing layer becomes the searchable memory of the organization.
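
The indexing step can be sketched end to end in plain Python. Note the assumptions: `toy_embed` is a hashed bag-of-words stand-in for a real embedding model, and a list of dictionaries stands in for a vector database. The chunk size and overlap are arbitrary illustrative values.

```python
import hashlib
import math


def chunk_words(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into word windows of `size` that share `overlap` words with the previous one."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, last_start, step)]


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Hashed bag-of-words vector, L2-normalized. A stand-in for a real embedding model."""
    vector = [0.0] * dims
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dims
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


def build_index(documents: dict[str, str]) -> list[dict]:
    """Return a list of records, the in-memory stand-in for a vector database."""
    index = []
    for doc_id, text in documents.items():
        for position, chunk in enumerate(chunk_words(text)):
            index.append({"doc_id": doc_id, "chunk": position, "text": chunk, "vector": toy_embed(chunk)})
    return index


sample = " ".join(f"w{i}" for i in range(20))  # a 20-word placeholder document
index = build_index({"policy-12": sample})
print(len(index), index[1]["text"])
```

Overlapping windows keep a sentence that straddles a chunk boundary retrievable from at least one chunk.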

2. Query Understanding and Retrieval

When a user asks a question, the system converts the query into an embedding and compares it to the stored vectors. The retrieval engine then identifies the most relevant documents or data chunks. This step ensures that the model only works with verified, enterprise-specific knowledge rather than relying on general internet content.
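
The comparison itself is usually a similarity measure such as cosine similarity. This sketch uses tiny hand-written vectors, which are hypothetical, so the ranking is easy to follow; a production system would delegate this search to the vector database.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vector, index, k=2):
    """Return the k (score, chunk_id) pairs most similar to the query vector."""
    scored = [(cosine(query_vector, vector), chunk_id) for chunk_id, vector in index.items()]
    return sorted(scored, reverse=True)[:k]


# Hypothetical 3-dimensional embeddings; real models emit hundreds of dimensions.
index = {
    "refund-policy#0": [0.9, 0.1, 0.0],
    "shipping-faq#2": [0.1, 0.9, 0.1],
    "hr-handbook#5": [0.0, 0.2, 0.9],
}
query_vector = [1.0, 0.2, 0.0]  # stands in for the embedded user question
for score, chunk_id in top_k(query_vector, index):
    print(f"{chunk_id}: {score:.3f}")
```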

3. Context Injection and Response Generation

The retrieved context is passed to the large language model through a RAG system integration layer. The model uses this context to generate a response that is both accurate and traceable. Since the output is grounded in company data, it carries higher confidence and is easier to audit.
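
Context injection often comes down to assembling a prompt from the retrieved passages. The sketch below is one possible layout; the instruction wording, the bracketed source IDs, and the character budget are assumptions, and a real system would typically budget in tokens.

```python
def build_prompt(question: str, passages: list[tuple[str, str]], max_chars: int = 1200) -> str:
    """Assemble a grounded prompt from (source_id, text) pairs within a character budget."""
    lines = []
    used = 0
    for source_id, text in passages:
        entry = f"[{source_id}] {text}"
        if used + len(entry) > max_chars:
            break  # passages are assumed to arrive best-first, so drop the tail
        lines.append(entry)
        used += len(entry)
    context = "\n".join(lines)
    return (
        "Answer using only the context below and cite source IDs in brackets. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


passages = [
    ("policy-12", "Refunds are processed within 14 days."),
    ("faq-3", "A receipt is required for every refund."),
]
prompt = build_prompt("How long do refunds take?", passages)
print(prompt)
```

Keeping the source ID next to each passage is what lets the final answer cite where a claim came from.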

4. Validation and Human Review

Before final delivery, enterprises often add a validation layer. This may involve automated filters that check for compliance terms or human reviewers who approve sensitive responses. In regulated sectors, this human-in-the-loop approach adds trust and accountability.
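
A validation layer can start as a small routing function. In this sketch the watch list and the three outcomes are hypothetical; a regulated team would replace them with its own compliance rules.

```python
SENSITIVE_TERMS = {"guarantee", "legal advice", "diagnosis"}  # hypothetical watch list


def route_response(answer: str, sources: list[str]) -> str:
    """Return 'deliver', 'human_review', or 'block' for a drafted answer."""
    if not sources:
        return "block"  # nothing was retrieved, so the answer cannot be grounded
    lowered = answer.lower()
    if any(term in lowered for term in SENSITIVE_TERMS):
        return "human_review"  # a person approves before the answer is sent
    return "deliver"


print(route_response("Refunds take 14 days.", ["policy-12"]))
print(route_response("We guarantee approval.", ["policy-12"]))
print(route_response("Probably fine.", []))
```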

5. Feedback Loop and Continuous Learning

The final stage in the enterprise RAG integration workflow is feedback. User ratings, corrections, and new data updates feed back into the vector store. Over time, the retrieval model learns which responses perform best, improving accuracy and speed without retraining the full LLM.
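
One lightweight way to use feedback without retraining the LLM is to nudge retrieval scores with user ratings. The sketch below is an assumption about how that could work, not a description of any particular product: the `FeedbackStore` class, the `weight` value, and the chunk IDs are all illustrative.

```python
from collections import defaultdict


class FeedbackStore:
    """Tracks helpful/unhelpful votes per chunk and turns them into a score adjustment."""

    def __init__(self, weight: float = 0.1) -> None:
        self.weight = weight
        self.votes = defaultdict(lambda: [0, 0])  # chunk_id -> [helpful, unhelpful]

    def record(self, chunk_id: str, helpful: bool) -> None:
        self.votes[chunk_id][0 if helpful else 1] += 1

    def adjust(self, chunk_id: str, base_score: float) -> float:
        helpful, unhelpful = self.votes.get(chunk_id, (0, 0))
        total = helpful + unhelpful
        if total == 0:
            return base_score  # no feedback yet, leave the score untouched
        return base_score + self.weight * (helpful - unhelpful) / total


store = FeedbackStore()
store.record("faq-3", helpful=True)
store.record("faq-3", helpful=True)
store.record("faq-9", helpful=False)

# faq-9 starts with the higher similarity score but has been rated unhelpful.
print(store.adjust("faq-3", 0.70), store.adjust("faq-9", 0.72))
```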

Appinventiv’s Insight

What we see across enterprises adopting RAG integration and AI-based knowledge systems is that success rarely depends on the model itself; it depends on how well the workflow is designed. The companies that win with RAG don’t just connect data; they curate it. Clean indexing, governance checkpoints, and continuous feedback loops make the difference between a model that sounds smart and one that actually supports real business decisions.

RAG Integration Challenges and How to Address Them

Even with a clear workflow, enterprises face practical challenges while scaling RAG. These issues often arise from data quality, infrastructure limits, or governance gaps. Addressing them early ensures long-term reliability and trust in the system.

| Challenge | What It Means | Impact on Enterprise | How to Address It |
|---|---|---|---|
| Data Drift | Source data changes or becomes outdated, causing retrieval inaccuracy. | Leads to outdated or inconsistent AI outputs. | Schedule periodic data refreshes and automated re-indexing. |
| Latency and Performance | Retrieval slows as data volume grows or infrastructure lags. | Reduces user adoption and disrupts workflow speed. | Optimize vector database design and use caching for frequent queries. |
| Compliance Gaps | Missing audit trails or unrestricted data access. | Creates regulatory and privacy risks. | Enforce access controls and maintain detailed retrieval logs. |
| Hallucination Risks | Model produces content not backed by retrieved data. | Damages trust and output reliability. | Implement grounding checks and confidence scoring for every response. |
| Scaling Limitations | Architecture struggles to handle new data sources or departments. | Restricts enterprise wide expansion. | Build modular components and scalable APIs. |
| Feedback Neglect | User feedback and error corrections not utilized. | Prevents improvement and causes stagnation. | Establish structured feedback pipelines to refine performance continuously. |
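
As an illustration of the grounding check listed for hallucination risks, the sketch below scores an answer by how many of its sentences are mostly covered by words from the retrieved passages. Word overlap is a deliberately crude proxy and the 0.6 threshold is an arbitrary assumption; production systems often use stronger checks such as entailment models.

```python
import re


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounding_score(answer: str, passages: list[str], threshold: float = 0.6) -> float:
    """Share of answer sentences whose words mostly appear in the retrieved passages."""
    context_words = set().union(*(_words(p) for p in passages)) if passages else set()
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = _words(sentence)
        overlap = len(words & context_words) / len(words) if words else 0.0
        supported += overlap >= threshold  # True counts as 1
    return supported / len(sentences)


passages = ["Refunds are processed within 14 days of the return."]
answer = "Refunds are processed within 14 days. Shipping is always free worldwide."
print(grounding_score(answer, passages))
```

A low score can be used as the confidence signal that sends a response to human review instead of straight to the user.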

Data drift, latency, compliance, or architecture – RAG challenges differ for every business.

Our team helps enterprises overcome roadblocks, streamline workflows, and launch secure, production-ready RAG systems.

Start Your RAG Integration Journey with Appinventiv

Retrieval-Augmented Generation is no longer an emerging trend; it is the next foundation of enterprise AI. As organizations look beyond proof of concept, the real challenge lies in integrating RAG in a way that balances speed, accuracy, and compliance. That’s where Appinventiv comes in.

As a RAG development services provider, we help enterprises design and implement RAG integration for business applications that go beyond pilots to deliver measurable business value. Our AI consulting team combines deep expertise in language models, data engineering, and enterprise security to build retrieval-first architectures that align perfectly with your business goals.

Our approach to enterprise RAG integration and RAG development focuses on making AI systems contextual and traceable. We start by assessing your data readiness, identifying key use cases, and architecting retrieval layers that integrate seamlessly with your existing stack. Whether you need a cloud-based RAG integration or a private on-premise deployment, we ensure that every response your AI generates is grounded in verified enterprise data and backed by governance.

We’ve delivered advanced AI ecosystems for some of the world’s most trusted brands, including KFC, IKEA, JobGet, Mudra, YouComm, and Americana ALMP. Our experience in building retrieval-augmented and multimodal AI systems has helped businesses improve customer experience, streamline operations, and achieve faster decision-making with complete compliance assurance.

By partnering with an AI integration services provider like us, you gain access to more than 1500 technology experts, including data scientists, machine learning engineers, and AI strategists who bring end-to-end execution capability, from use case design and model integration to long-term monitoring and optimization.

We don’t just deliver RAG systems; we deliver AI ecosystems that learn, adapt, and scale with your organization. Every implementation follows a compliance-by-design framework to ensure your data remains private, auditable, and enterprise-ready from day one.

For instance, we partnered with MyExec, a fast-growing AI startup, to build an intelligent, chat-based business consultant that helps small and medium businesses make data-driven decisions. Designed to act as a virtual advisor, MyExec analyzes uploaded documents, extracts insights, and provides strategic recommendations in real time, transforming how SMBs access and act on business intelligence.

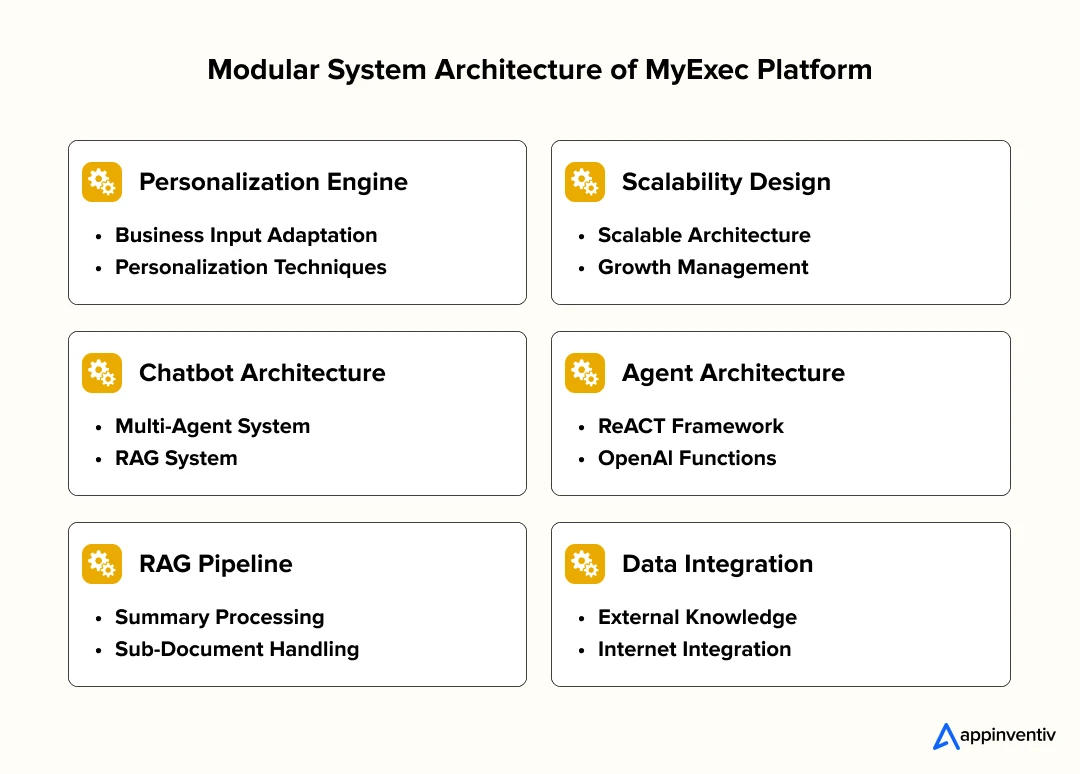

At the heart of the system lies a multi-agent RAG integration built using GPT-4o, LangChain, and LlamaIndex. This Retrieval-Augmented Generation setup connects MyExec’s conversational AI to verified document data, ensuring accuracy, context, and traceability in every response. The platform’s modular architecture and RAG pipeline have enabled scalable, compliant, and deeply personalized decision support, setting a new benchmark for enterprise-grade AI assistants.

If your organization is ready to move from experimentation to enterprise-wide impact, get in touch with our experts to make that transition smoothly.

FAQs

Q. How to integrate RAG into existing enterprise applications?

A. It’s easier than it sounds once you know where to start. You don’t replace what you already have; you build around it. The goal is to connect your internal data and let your current systems use it smartly.

In practice, most enterprises follow a simple flow:

- Pick one use case that’s slowing people down – maybe customer support or knowledge search.

- Collect and clean your internal files, documents, or FAQs.

- Create a retrieval setup (often a vector database) so the system can “remember” that data.

- Plug this retrieval layer into your CRM or workflow through APIs.

- Test it small, see what works, then expand.
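
The flow above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `embed` function uses plain word counts as a stand-in for a real embedding model, `TinyIndex` stands in for a vector database, and all names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class TinyIndex:
    """In-memory stand-in for a vector database (hypothetical)."""

    def __init__(self):
        self._docs = []  # list of (doc_id, text, vector)

    def add(self, doc_id: str, text: str) -> None:
        self._docs.append((doc_id, text, embed(text)))

    def search(self, query: str, k: int = 2) -> list:
        q = embed(query)
        scored = [(cosine(q, vec), doc_id, text) for doc_id, text, vec in self._docs]
        scored.sort(key=lambda s: s[0], reverse=True)
        # Drop passages with no overlap at all rather than padding the result.
        return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]


def build_prompt(question: str, passages: list) -> str:
    """Assemble the text that would be sent to the language model."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their ids.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


index = TinyIndex()
index.add("faq-1", "Refunds are processed within 5 business days of approval.")
index.add("faq-2", "Password resets are handled through the self-service portal.")
index.add("faq-3", "Enterprise plans include a dedicated support manager.")

question = "How long do refunds take?"
hits = index.search(question, k=1)
prompt = build_prompt(question, hits)
```

In a real integration, the `prompt` string would be passed to the model through the API your CRM or workflow tool already calls, and the source ids in the prompt are what make the answer traceable.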

Q. What are the costs associated with implementing RAG in enterprise systems?

A. Costs depend on how deep you want to go. For a small pilot, companies usually spend somewhere around $35,000 to $80,000. For a full enterprise RAG integration, that number can reach $400,000 or more once it runs across teams and platforms.

A few things that affect cost:

- How much data needs to be cleaned and indexed.

- Whether you choose cloud-based RAG integration or build on-premise.

- Model licensing or fine-tuning fees.

- Ongoing monitoring and updates once it’s live.

Q. What are the security considerations when integrating RAG into applications?

A. Security sits at the center of RAG design. Because the system works with company data, you can’t let it retrieve from unapproved places.

Enterprises usually cover this with:

- Role-based access so only certain people or systems can retrieve sensitive info.

- Encryption of data both at rest and in transit.

- Regular audits to see what the model accessed and when.

- Keeping the retrieval layer private or hosted under their own security framework.
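
Two of these controls, role-based access and audit logging, can be illustrated with a short Python sketch. It assumes each document carries a set of allowed roles and that retrieval is a simple keyword match; the class names, roles, and sample documents are hypothetical, and encryption and hosting are outside what it shows.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # roles permitted to retrieve this document


@dataclass
class AuditedRetriever:
    documents: list
    audit_log: list = field(default_factory=list)

    def retrieve(self, user: str, role: str, query: str) -> list:
        terms = set(query.lower().split())
        # Apply the access rule before matching, so restricted text never
        # reaches the ranking step or the model prompt.
        visible = [d for d in self.documents if role in d.allowed_roles]
        hits = [d for d in visible if terms & set(d.text.lower().split())]
        # Record who asked what, when, and which documents came back.
        self.audit_log.append({
            "when": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "query": query,
            "returned": [d.doc_id for d in hits],
        })
        return hits


retriever = AuditedRetriever(documents=[
    Document("hr-001", "Salary bands are confidential", frozenset({"hr"})),
    Document("gen-001", "Office holiday calendar for all staff", frozenset({"hr", "staff"})),
])

staff_hits = retriever.retrieve("dana", "staff", "salary bands")  # filtered out
hr_hits = retriever.retrieve("lee", "hr", "salary bands")         # allowed
```

Filtering before retrieval, rather than redacting the model's answer afterward, keeps sensitive passages out of the prompt entirely, and the log gives auditors a record of every request, including the ones that returned nothing.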

Q. How long does it take to deploy RAG in a business application?

A. Timelines vary. A simple use case can go live in about six to eight weeks. Bigger rollouts where RAG connects across departments may take five to six months.

The usual rhythm looks like this:

- Define the problem and get your data ready.

- Build the retrieval pipeline and test the model connection.

- Run a short pilot and collect feedback.

- Scale gradually once it works.

Q. What are the biggest challenges when integrating RAG into enterprise workflows?

A. RAG can do a lot, but it’s not magic. The main struggles happen early, mostly with messy data or unclear ownership.

Here’s what usually trips teams up:

- Data that’s outdated or scattered in too many formats.

- Latency when the system handles too many retrieval calls.

- Missing compliance checks that make security teams nervous.

- Not collecting feedback from users, which slows improvement.

Good prep (clean data, modular design, and real user testing) usually fixes most of it.
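
On the latency point, one common mitigation is caching repeated retrieval calls. The sketch below is one possible approach, assuming that identical queries can safely reuse the same passages; `slow_retrieve` is a hypothetical placeholder for the real vector search.

```python
from functools import lru_cache

CALLS = {"backend": 0}  # counts how often the slow backend is actually hit


def slow_retrieve(query: str) -> tuple:
    """Hypothetical placeholder for a vector-database lookup."""
    CALLS["backend"] += 1
    return (f"passage for: {query}",)


def normalize(query: str) -> str:
    """Collapse case and whitespace so near-identical queries share a cache entry."""
    return " ".join(query.lower().split())


@lru_cache(maxsize=1024)
def _cached_retrieve(normalized_query: str) -> tuple:
    return slow_retrieve(normalized_query)


def retrieve(query: str) -> tuple:
    return _cached_retrieve(normalize(query))


first = retrieve("Refund policy")
second = retrieve("  refund   POLICY ")  # served from the cache
```

Cached passages go stale when the underlying documents change, so a setup like this would need to call `_cached_retrieve.cache_clear()` (or use a time-limited cache) whenever the index is refreshed.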

Q. What kind of ROI can enterprises expect from RAG integration?

A. Returns show up faster than most expect. The first thing leaders notice after RAG integration for business applications is time saved: people stop digging for answers and start using them.

The benefits usually fall into three buckets:

- Teams work faster because knowledge is one search away.

- Decisions improve since responses come from verified data.

- Costs drop as model usage and cloud calls become more efficient.

Most companies start small, measure these gains, and scale once they see the numbers add up.
