- Reframing PoC as an Enterprise Decision Instrument

- A Strategic Framework for Proof of Concept Software Development

- Phase 1: Opportunity Framing and Hypothesis Definition

- Phase 2: Scope, Constraints, and Validation Criteria

- Phase 3: Architecture and Environment Planning

- Phase 4: Build, Instrument, and Validate

- Phase 5: Evaluation, Risk Review, and Decision Gate

- Key Advantages Of POC In Software Development

- Cost and Timeline Benchmarks for Enterprise PoC Software Development

- Enterprise-Grade PoC for New Software Product Use Cases That Drove Real Impact

- Example 1: ING’s Data Mesh PoC

- Example 2: Financial Data Platform Modernization

- Example 3: AI PoC Avoids Unnecessary ML Complexity

- Common Pitfalls & Challenges of PoC Development

- Trying to Prove Too Much at Once

- Unclear or Shifting Success Criteria

- Treating Data as a Later Problem

- Ignoring Security and Risk Until the End

- Ownership Sitting With One Team

- The Demo Trap: When PoCs Look Convincing but Prove Nothing

- PoC vs. Prototype vs. MVP: How Enterprises Should Choose the Right Path

- Checklist Before Creating a Proof of Concept in Software Development

- Enterprise-Grade PoC Software Engineering Architecture, Capabilities, and Technology Foundations

- Core Capabilities an Enterprise PoC Must Demonstrate

- Reference Architecture Patterns

- Technology Selection Principles

- Architectural Decisions That Help or Hurt Future Scale

- Governance, Compliance, and Risk Controls in Proof of Concept in Software Development

- Enterprise Security Expectations and Control Readiness

- Global Data Protection and Privacy Considerations

- Industry and Regional Compliance Requirements

- Third-Party and Platform Risk Exposure

- Audit Trails and Documentation Discipline

- Measuring PoC Outcomes: Metrics, KPIs, and Evaluation Models

- Business Metrics vs Technical Metrics

- Core Indicators Teams Usually Track

- Handling Mixed or Inconclusive Results

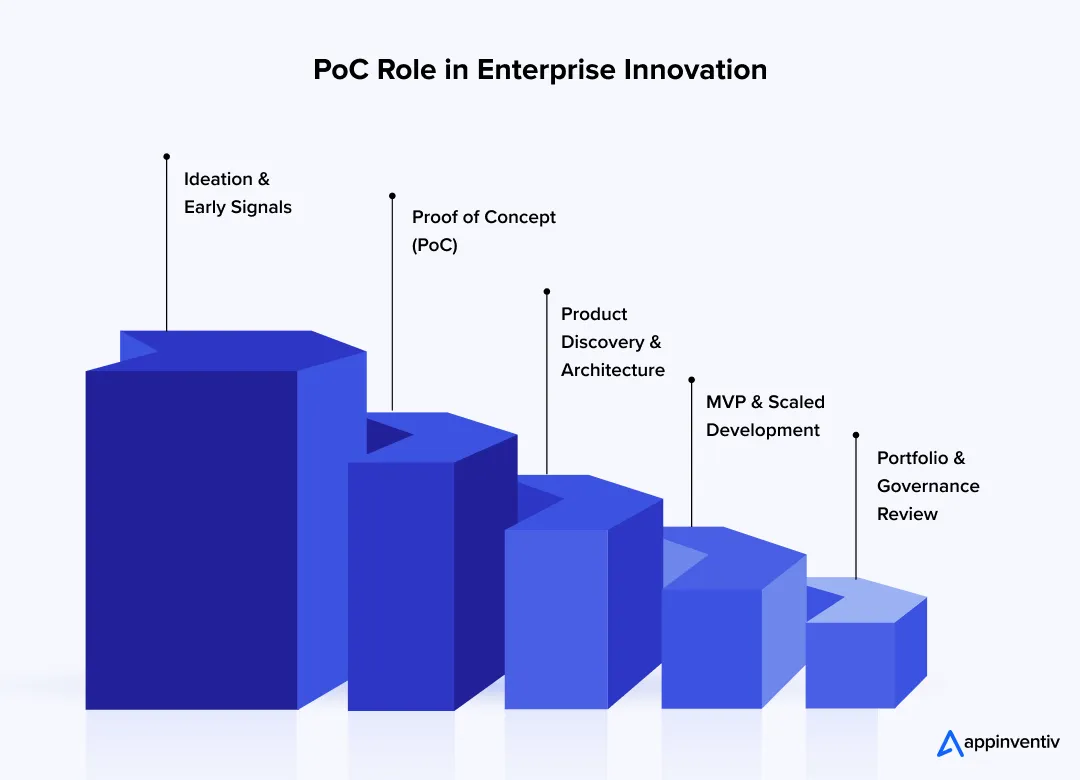

- How PoC in Software Development Fits Into Broader Product & Innovation Lifecycles

- Future Directions in Proof of Concept Software Development

- AI-First and Model-Driven PoCs

- Compliance-Led Validation Approaches

- Shorter PoC Cycles With Higher Rigor

- PoCs as Vendor and Procurement Filters

- Greater Focus on Cost-of-Scale Modeling

- Why Appinventiv Is a Trusted Partner for Enterprise PoC Software Engineering Development

- Frequently Asked Questions

Key takeaways:

- Most enterprise PoCs fail due to a lack of decision clarity, not technical feasibility or innovation potential.

- A disciplined PoC framework reduces delivery risk before budgets, teams, and timelines are committed.

- Enterprise-grade PoCs validate feasibility, compliance, and scale assumptions under realistic operating constraints.

- Clear success metrics and governance turn PoCs into reliable inputs for executive decisions.

- Well-designed PoCs connect early experimentation directly to product roadmaps and investment planning.

Most enterprise initiatives do not fail because the idea was bad. They fail because the organization moved forward without really knowing what it was committing to.

You’ve probably seen this play out. A small team puts together a proof of concept to show progress, and it works well enough in a demo. Slides get approved, and funding follows. A few months later, though, the questions start coming in. Can this handle real data volumes? What happens when security reviews begin? Why does the architecture look nothing like what production needs?

That gap is where many PoCs quietly lose their value.

When PoCs in software development are treated as informal experiments, they stop being useful decision tools. They answer surface-level questions but avoid the ones that slow teams down later. Leadership senses this. Over time, PoCs start to feel like placeholders instead of proof.

A more disciplined approach to proof of concept software development changes how PoCs are used inside the enterprise. The goal is no longer just to show that something can work. The goal is to test the right assumptions early, under realistic constraints, before making larger investments.

For enterprise leaders, this shift matters. A structured PoC creates alignment across engineering, security, and business teams. It replaces opinion with evidence. And it makes the next decision, whether to move forward or not, far easier to defend.


Reframing PoC as an Enterprise Decision Instrument

In many enterprises, the meaning of a PoC in software development sits in an odd place. It is neither quite an experiment nor treated as a serious decision checkpoint. That confusion shows up later, usually when leadership asks questions the PoC was never designed to answer.

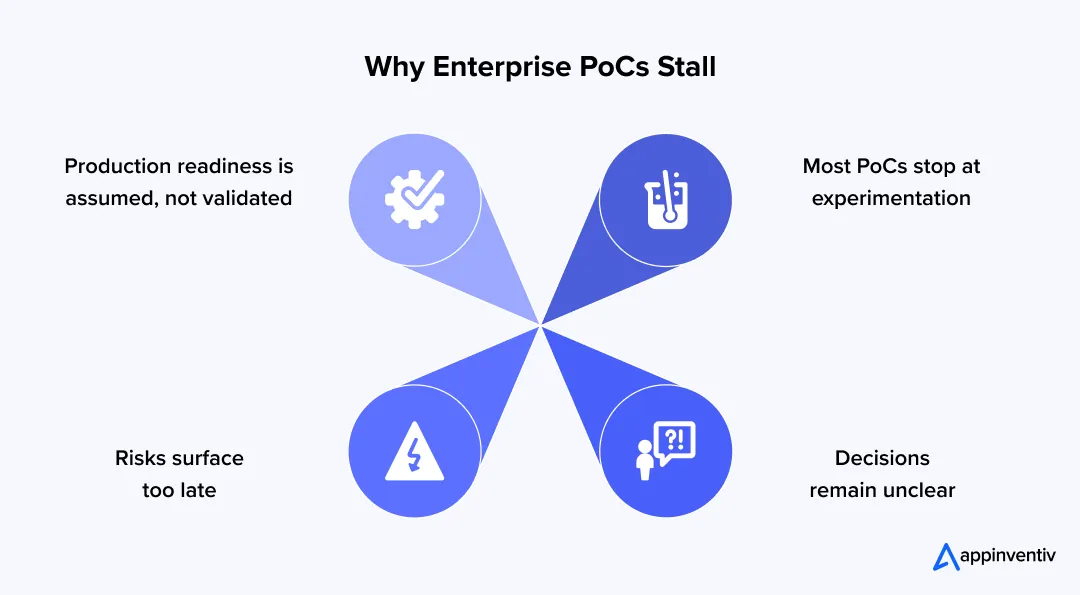

This gap has real consequences. 87% of proof-of-concept initiatives never move beyond experimentation to reach production, not because the idea lacked merit, but because the PoC never delivered the clarity leadership needed. At an enterprise level, a PoC only earns its keep when it helps people decide what to do next. Not someday, but now.

Here is how that mindset shift plays out in practice:

- PoC is not early exploration: Exploration is open-ended by design. A PoC is not. It exists because a decision is blocked, and someone needs evidence to move forward.

- Some situations leave no room for guesswork: If the system needs to operate at scale, touch regulated data, or sit on top of aging platforms, a PoC becomes a requirement, not a nice-to-have.

- Executives expect a clear outcome: At the end of a PoC, leadership wants one thing. Proceed, rethink the approach, or stop before costs increase. Anything vague slows momentum.

- Ownership cannot live with one team: Product frames intent. Engineering tests feasibility. Security flags exposure. Procurement looks ahead to cost and vendor risk. When those voices align early, decisions become easier.

This is when a PoC stops being a technical activity and becomes a real decision point.

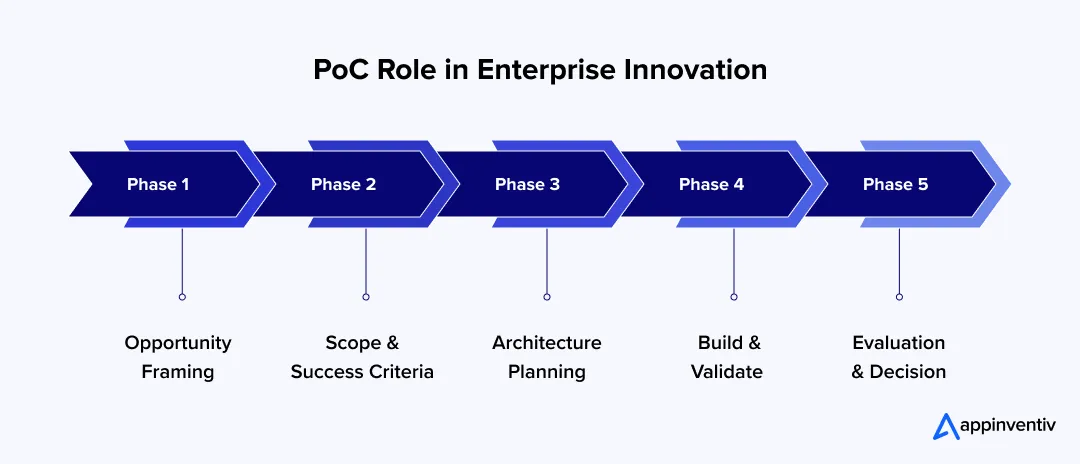

A Strategic Framework for Proof of Concept Software Development

When PoC software development fails inside large organizations, it is rarely because the technology was impossible. More often, teams jumped into building before they were clear on what decision the work was supposed to support.

A good framework fixes that, not by adding ceremony, but by forcing the right conversations to happen early, in the right order.

Here are the key steps in developing a proof of concept:

Phase 1: Opportunity Framing and Hypothesis Definition

This is where the work actually begins, even though no code is written yet.

The team steps back and agrees on what is uncertain today. Maybe it is whether an approach can work with existing systems. Maybe it is whether the value justifies the effort.

This uncertainty is common in proof of concept work for emerging technologies, where 42% of enterprise IT teams have already moved from experimentation to active deployment, while 40% are still exploring next steps. The PoC exists to resolve that uncertainty, not to impress anyone with a demo.

At this stage, teams usually focus on:

- What problem is being solved, and why it matters now

- What could go wrong if assumptions are incorrect

- Whether the idea is technically possible within known limits

- Whether success would justify further investment

If this alignment is skipped, everything that follows becomes harder.

Phase 2: Scope, Constraints, and Validation Criteria

Once the problem is clear, discipline becomes the priority.

This is where teams decide how small the PoC can be while still being meaningful. Many PoCs fail here by trying to do too much. Others fail by being so narrow that results cannot be trusted.

The goal is balance.

Teams typically lock down:

- The smallest set of behaviors that must be tested

- Which requirements matter now, and which can wait

- Constraints around data access, security, or integrations

- How success will be measured in concrete terms

Without this clarity, results stay subjective.
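One way to keep success criteria concrete is to write them down as data before any build work starts. The sketch below is illustrative only: the `Criterion` class, the metric names, and the thresholds are all hypothetical, chosen to show the shape of the idea rather than values any particular PoC should use.

```python
from dataclasses import dataclass
import operator

# Comparison operators a criterion may use (illustrative subset).
_OPS = {"<=": operator.le, ">=": operator.ge}


@dataclass(frozen=True)
class Criterion:
    """One measurable pass/fail condition agreed before the build starts."""
    metric: str        # hypothetical metric name, e.g. "p95_latency_ms"
    op: str            # "<=" or ">="
    threshold: float

    def is_met(self, observed: float) -> bool:
        return _OPS[self.op](observed, self.threshold)


def evaluate(criteria, observations):
    """Return {metric: True/False/None}; None means the metric was never measured."""
    results = {}
    for c in criteria:
        value = observations.get(c.metric)
        results[c.metric] = None if value is None else c.is_met(value)
    return results


# Hypothetical thresholds, fixed up front so they cannot drift mid-PoC.
CRITERIA = [
    Criterion("p95_latency_ms", "<=", 500),
    Criterion("error_rate_pct", "<=", 1.0),
    Criterion("records_matched_pct", ">=", 98.0),
]

if __name__ == "__main__":
    observed = {"p95_latency_ms": 420, "error_rate_pct": 2.3}
    print(evaluate(CRITERIA, observed))
```

Returning `None` for an unmeasured metric keeps "not tested" visibly separate from "failed", which matters when results are reviewed later.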

Phase 3: Architecture and Environment Planning

Before anything is built, boundaries are agreed upon.

Not production architecture. Not future state design. Just enough structure to make the PoC realistic and safe.

This phase often includes:

- Deciding what must stay isolated from production

- Setting up sandbox environments with controlled access

- Being honest about data quality and availability

- Calling out dependencies that could affect results

These decisions prevent surprises later.

Phase 4: Build, Instrument, and Validate

This is where momentum picks up.

The team builds quickly, but with intention. Instrumentation is added early so performance, behavior, and limitations are visible as the PoC evolves.

Typical focus areas include:

- Lightweight governance to avoid risky shortcuts

- Logging and basic monitoring from day one

- Validation tied back to agreed success criteria

- Clear documentation of trade-offs and gaps

If results cannot be repeated, they cannot be trusted.
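Instrumentation from day one does not have to be heavy. As a minimal sketch, assuming a Python-based PoC and using only the standard library, a decorator can record duration and outcome for each call. The `instrumented` and `summary` helpers and the `parse_record` operation are hypothetical names used for illustration.

```python
import functools
import logging
import statistics
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("poc")

# In-memory samples per operation; a real PoC might ship these to a metrics backend.
_samples = {}


def instrumented(name):
    """Decorator that records duration and success/failure for each call."""
    def wrap(fn):
        @functools.wraps(fn)
        def inner(*args, **kwargs):
            start = time.perf_counter()
            ok = True
            try:
                return fn(*args, **kwargs)
            except Exception:
                ok = False
                raise
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000
                _samples.setdefault(name, []).append((elapsed_ms, ok))
                log.info("op=%s ms=%.1f ok=%s", name, elapsed_ms, ok)
        return inner
    return wrap


def summary(name):
    """Summarize call count, error rate, and median latency for one operation."""
    rows = _samples.get(name, [])
    if not rows:
        return {"calls": 0}
    durations = [ms for ms, _ in rows]
    failures = sum(1 for _, ok in rows if not ok)
    return {
        "calls": len(rows),
        "error_rate": failures / len(rows),
        "median_ms": statistics.median(durations),
    }


@instrumented("parse_record")  # hypothetical operation under test
def parse_record(raw):
    return int(raw)
```

Because failures are recorded as well as successes, the same samples can feed both the latency and the reliability criteria agreed earlier.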

Phase 5: Evaluation, Risk Review, and Decision Gate

The PoC does not end with a demo. It ends with clarity. Results are pulled together in a way that leadership can understand. What worked? What did not? What risks remain if the organization moves forward?

This final step usually covers:

- Evidence that supports or challenges the original hypotheses

- Dependencies and risks that surfaced during validation

- An honest view of the effort required to continue

- A clear recommendation on what should happen next

That decision is the PoC’s real output.


Key Advantages Of POC In Software Development

Most enterprise teams do not need more ideas. They need fewer surprises. That is what a structured PoC really helps with. When PoCs are loose, they feel productive early and expensive later. When they are structured, they act like a pause button at the right moment.

Here is where the benefits of creating a PoC in software development actually show up.

- Lower risk, early: A good PoC forces uncomfortable questions sooner than most teams prefer. Will this break under real load? Does the data behave as we expect? Is the effort justified if this moves forward? Finding out early is usually cheaper than finding out later.

- Cleaner executive conversations: Leadership discussions change when PoC results are grounded in data. Instead of debating opinions, teams go through what was tested and what was not. Decisions become simpler. Not always easier, but clearer.

- Fewer surprises around scale and compliance: Security reviews, data access issues, and performance limits rarely appear in demos. They appear when PoCs are properly scoped. Seeing them early gives teams room to adjust.

- Better alignment between business and IT: A structured PoC gives both sides something concrete to react to. Business teams stop guessing. Engineering teams stop defending assumptions. This alignment becomes even more critical during comprehensive software development efforts that follow successful PoCs.

- More confidence moving forward: When a team decides to continue after a PoC, it feels earned. That confidence matters once real budgets and accountability are involved.

Cost and Timeline Benchmarks for Enterprise PoC Software Development

Cost and timelines are often where PoC conversations become uncomfortable. Leaders want estimates early, but teams hesitate because requirements, dependencies, and risk factors are not fully defined. In practice, most enterprise PoCs fall into predictable ranges once scope and risk are understood.

The key is to frame proof of concept development numbers as validation spend rather than delivery cost.

| PoC Complexity | Typical Timeline | What Is Being Validated |
|---|---|---|
| Low | 3 to 4 weeks | Core feasibility, basic workflows |
| Medium | 6 to 8 weeks | Integrations, performance, data flow |
| High | 8 to 12 weeks | Scale assumptions, security, AI or legacy systems |

Timelines longer than these usually signal unclear scope rather than true complexity. Most enterprise-grade PoC software development in the US costs between $40,000 and $400,000, depending on depth. Costs rise when multiple systems, sensitive data, or advanced models are involved.

A few factors influence cost more than others:

- Data access, preparation, and masking

- Security controls and reviews

- AI or ML components, especially inference costs

- Number and complexity of integrations

A PoC starts losing value when its cost approaches that of building the first real version. At that point, assumptions should already be validated.



Enterprise-Grade PoC for New Software Product Use Cases That Drove Real Impact

When enterprises treat PoCs as disciplined validation experiments, they uncover insights that change how major initiatives unfold. The examples of POC in software development below show real PoC work that shaped decisive outcomes in large organizations.

Example 1: ING’s Data Mesh PoC

ING partnered with Thoughtworks to run a PoC on Google Cloud for a data mesh architecture. This wasn’t just a demo. It tested decentralizing data ownership across business domains, moving away from a monolithic data stack. Within about eight weeks, the PoC showed measurable improvements in agility and faster team-led data product creation, which influenced a broader enterprise-wide data transformation plan.

Key outcomes

- Validated data mesh for autonomous data domains

- Reduced manual bottlenecks

- Accelerated strategic transformation roadmap, with rigorous POC testing approaches applied to scale the solution.

Example 2: Financial Data Platform Modernization

A leading financial services client engaged in a PoC to modernize its legacy data platform with cloud-native data warehousing and analytics. The PoC validated ingestion from diverse systems and governance models before full build-out. This early evidence directly influenced technology choice and phased rollout plans.

Key outcomes

- Unified complex pricing data into a single repository

- Improved analytic readiness before scale

- Reduced risk of costly post-launch rewrites

Example 3: AI PoC Avoids Unnecessary ML Complexity

At a logistics firm, a PoC involving ML for document processing showed that pure ML did not handle variable document formats effectively. Building an AI POC requires validating these technical constraints early to avoid expensive rework. The PoC revealed the need for hybrid algorithms rather than simply throwing more training data at the problem.

Key outcomes

- Prevented 7+ months of unnecessary development

- Led to a hybrid ML/algorithmic solution

- Reduced manual processing by ~25% in the initial MVP phase

Across all these use cases of POC in software development, disciplined work shifted risk into insight, saved time and cost, and accelerated confident decision-making at scale.

Common Pitfalls & Challenges of PoC Development

Enterprise PoC software development tends to struggle for predictable reasons. The problems are rarely technical. They usually come from how the PoC is framed, owned, and evaluated once multiple teams get involved.

Trying to Prove Too Much at Once

PoCs often become overloaded because teams want to demonstrate momentum. Features are added to keep stakeholders interested, even when those features do not help validate the core assumption. As the scope grows, focus weakens, and the original question remains unanswered.

What helps

- Reduce the scope to one or two critical assumptions.

- Remove features that do not influence the decision.

- Treat anything else as explicitly out of scope.

Unclear or Shifting Success Criteria

When success is not clearly defined upfront, PoC outcomes become subjective. Engineering teams may see progress, while leadership remains unconvinced. Over time, the criteria shift in response to feedback, making results harder to trust.

What helps

- Define success and failure before development begins.

- Use measurable signals instead of qualitative judgment.

- Keep standards stable across the PoC.

Treating Data as a Later Problem

Many PoCs start with ideal or partial data to move quickly. Real data introduces access constraints, quality issues, and privacy concerns that surface late and disrupt progress. This often invalidates earlier results.

What helps

- Validate data access early in the process.

- Test with realistic samples whenever possible.

- Document known data gaps and limitations clearly.

Ignoring Security and Risk Until the End

Security is often delayed to avoid slowing early work. In enterprise environments, this creates friction later. Late security involvement can force redesigns or stall approvals, even when the PoC technically works.

What helps

- Involve security early, even at a high level.

- Set clear access, isolation, and data boundaries.

- Avoid shortcuts that cannot be justified later.

Ownership Sitting With One Team

PoCs owned by a single team struggle to influence broader decisions. Without early input from product, risk, or procurement, results lack context and buy-in across the organization.

What helps

- Share ownership across product and engineering.

- Keep risk and procurement informed from the start.

- Align teams around the decision being supported.

The Demo Trap: When PoCs Look Convincing but Prove Nothing

The demo trap occurs when a PoC is designed to impress rather than validate. The walkthrough looks smooth, stakeholders feel confident, and approvals follow. But critical constraints are quietly bypassed to keep the demo simple.

Key questions remain unanswered. How does the system behave with real data volumes? What happens once security controls are enforced? Which assumptions were skipped to make the demo work?

This creates false confidence. When delivery begins, gaps surface during integration, compliance review, or scale testing. Timelines stretch, rework increases, and trust erodes.

How teams avoid the demo trap

- Validate under realistic data, security, and integration constraints

- Instrument performance, cost, and failure behavior early

- Document shortcuts and untested assumptions clearly

- Treat demos as evidence, not conclusions

Well-run PoCs are valuable because they reveal friction early, not because they look polished.


PoC vs. Prototype vs. MVP: How Enterprises Should Choose the Right Path

Enterprises often use these terms interchangeably, which is where confusion starts. Each stage exists for a different reason and answers a different question. Choosing the wrong one, or skipping a step entirely, usually leads to misaligned expectations and wasted effort.

The easiest way to decide is to look at what you are trying to validate right now, not what you eventually want to build.

Comparison Across PoC, Prototype, and MVP

| Aspect | Proof of Concept (PoC) | Prototype | Minimum Viable Product (MVP) |
|---|---|---|---|
| Primary objective | Validate feasibility and risk | Validate usability and flow | Validate market demand |
| Typical scope | Narrow, assumption-driven | Visual or functional slice | End-to-end core experience |
| Time investment | Short, weeks | Short to medium | Medium to long |
| Cost range | Low to medium | Medium | Medium to high |
| Validation focus | Can this work under constraints | Does this make sense to users | Will users adopt and pay |
| Key stakeholders | Engineering, architecture, risk | Product, design, business | Product, business, customers |
| Common output | Evidence and findings | Clickable or testable model | Working product in the market |

The most common mistake is jumping straight to an MVP before feasibility or risk is understood. Another is using a prototype to justify technical decisions it was never meant to validate.

Organizations should understand MVP fundamentals thoroughly before committing to full-scale development. In regulated or complex environments, skipping a proper PoC often leads to delays later, when changes are far more expensive.

Choosing the right stage upfront keeps expectations aligned and decisions grounded.


Checklist Before Creating a Proof of Concept in Software Development

Before starting a software PoC, it helps to pause and confirm a few basics. Teams that skip this step usually pay for it later.

Sanity check before you build:

- Do we know the exact decision this PoC is meant to support?

- Are success and failure clearly defined?

- Have data access and privacy constraints been confirmed?

- Is security involved early, even at a lightweight level?

- Do all stakeholders agree on what is in and out of scope?

If any of these answers are unclear, the PoC is likely to create more questions than clarity.

Enterprise-Grade PoC Software Engineering Architecture, Capabilities, and Technology Foundations

At the enterprise level, a PoC is judged less by how fast it was built and more by how safely conclusions can be drawn from it. Architecture choices, even temporary ones, shape that trust.

This section focuses on how enterprise teams design PoCs that remain flexible, reviewable, and technically credible, without accidentally turning them into half-built production systems.

Core Capabilities an Enterprise PoC Must Demonstrate

Before architecture diagrams or tooling discussions, it helps to define what the PoC must prove at a capability level. These capabilities enable stakeholders to trust the results, even if the implementation is short-lived.

In most enterprise PoCs, that means demonstrating:

- A modular structure where components can be replaced or removed without cascading changes

- Strong isolation from production systems, including access boundaries and data separation, similar to how effective IT integration requires clear boundaries

- Early visibility into cost drivers, performance limits, and basic reliability behavior

- Tangible artifacts such as configs, logs, and design notes that survive beyond the demo

- Explicit assumptions about scale, including what has not been tested yet

Without these signals, PoC outcomes tend to feel optimistic rather than reliable.

Reference Architecture Patterns

PoC architecture does not need to be complex, but it does need to be intentional. The goal is to mirror reality just enough to surface meaningful constraints.

Most enterprise PoCs benefit from a simple layered approach:

- An interface layer that reflects how users or systems would actually interact

- A validation or service layer where the core logic is exercised

- A data abstraction layer that avoids tight coupling to underlying stores

- Integration adapters that simulate or connect to internal and external systems

Teams also make deliberate tradeoffs here:

- Choosing stateless designs when possible to simplify testing and recovery

- Accepting state only where behavior cannot be validated otherwise

- Deciding between cloud-native setups and hybrid environments based on constraints

- Accounting for AI or LLM components, including inference latency and data handling

- Using containers and basic infrastructure automation to keep environments consistent
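The layered approach described above can be sketched in a few dozen lines. This is a minimal illustration under stated assumptions, not a reference implementation: the quoting use case and every name in it (`RecordStore`, `InMemoryStore`, `PricingFeed`, `StubPricingFeed`, `QuoteService`) are hypothetical. It shows a service layer that depends on a data abstraction and an integration adapter rather than on concrete systems.

```python
from typing import Optional, Protocol


class RecordStore(Protocol):
    """Data abstraction layer: the service depends on this, not on a concrete store."""
    def get(self, key: str) -> Optional[dict]: ...
    def put(self, key: str, record: dict) -> None: ...


class InMemoryStore:
    """Throwaway store for the PoC; a real database adapter could replace it later."""
    def __init__(self):
        self._rows = {}

    def get(self, key):
        return self._rows.get(key)

    def put(self, key, record):
        self._rows[key] = record


class PricingFeed(Protocol):
    """Integration adapter contract for an external system (hypothetical)."""
    def price_for(self, sku: str) -> float: ...


class StubPricingFeed:
    """Simulated adapter returning fixed prices so the PoC runs in isolation."""
    def __init__(self, prices):
        self._prices = dict(prices)

    def price_for(self, sku):
        return self._prices[sku]


class QuoteService:
    """Service layer: core logic with its dependencies injected, no state of its own."""
    def __init__(self, store: RecordStore, feed: PricingFeed):
        self._store = store
        self._feed = feed

    def quote(self, sku: str, qty: int) -> dict:
        total = round(self._feed.price_for(sku) * qty, 2)
        record = {"sku": sku, "qty": qty, "total": total}
        self._store.put(f"{sku}:{qty}", record)
        return record
```

Because the service only sees the two protocols, the stub feed and in-memory store can be marked as throwaway while the service logic remains a candidate for reuse.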

Technology Selection Principles

At the PoC stage, technology choices are about reducing friction, not finding the perfect stack.

Teams typically prioritize:

- Backend and infrastructure options that allow rapid change and rollback

- Data sourcing approaches that respect access limits and privacy constraints

- AI or ML components that can be evaluated under realistic conditions, with lessons from AI integration examples informing implementation decisions

- Foundational observability so behavior can be inspected, not guessed

- Identity and secrets handling that reflects enterprise security expectations

The emphasis is on transparency and control, not optimization.

Architectural Decisions That Help or Hurt Future Scale

Every PoC contains decisions that either age well or create false confidence later. Calling this out early prevents confusion during handoff or expansion.

Teams should be explicit about:

- Which components are candidates for reuse, and which are throwaway by design

- Where shortcuts were taken and why they were acceptable in this context

- Anti-patterns such as hard-coded assumptions, hidden dependencies, or bypassed controls

When these boundaries are clear, PoCs inform future work rather than complicate it.

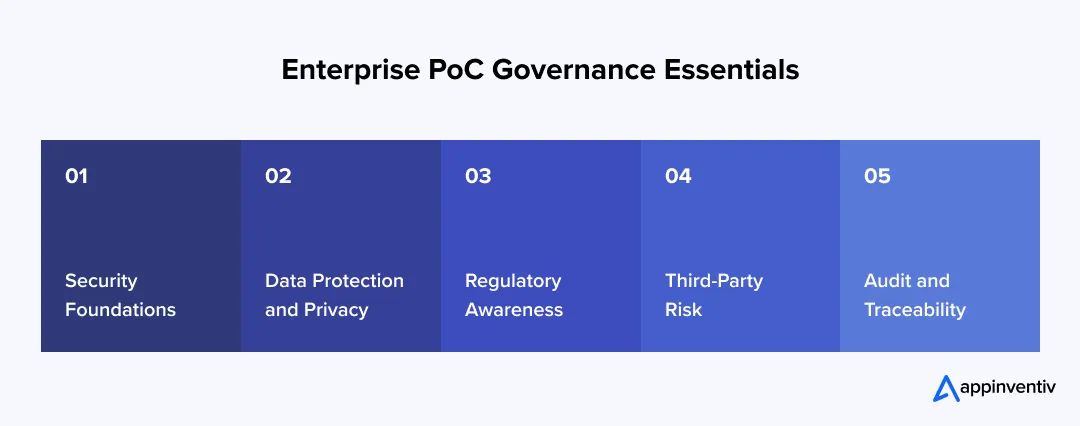

Governance, Compliance, and Risk Controls in Proof of Concept in Software Development

Even when a PoC is small, governance expectations are not. Across regions, enterprises expect early-stage initiatives to respect security, data protection, and risk boundaries. A PoC does not need to follow certification standards, but it must demonstrate intent, control, and awareness.

This is often what determines whether results are taken seriously beyond the engineering team.

Enterprise Security Expectations and Control Readiness

Globally, security teams seek baseline discipline during PoCs. The goal is not perfection, but evidence that risks are understood and managed.

What typically matters:

- Controlled access to environments and services

- Isolation from production systems

- Secure handling of credentials, keys, and secrets

- Basic logging and monitoring of system activity

Global Data Protection and Privacy Considerations

Data handling is one of the fastest ways a PoC can stall. Regulations differ by region, but expectations around privacy are consistent.

Teams usually clarify:

- What data is being used and why

- Whether personal or sensitive data can be avoided

- How data is masked, anonymized, or sampled

- Retention and cleanup plans after the PoC

This applies across regulations such as GDPR, CCPA, and similar regional frameworks.
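Masking and sampling can be demonstrated with standard-library tools alone. The sketch below assumes rows with hypothetical `customer_id` and `email` fields. It uses a keyed hash (HMAC-SHA256) for identifiers and a seeded sample so the extract is repeatable. Note that keyed hashing is pseudonymization rather than anonymization, so the output may still count as personal data under frameworks such as GDPR.

```python
import hashlib
import hmac
import random


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash, truncated for readability."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_email(email: str) -> str:
    """Keep the domain for analysis, hide most of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}" if domain else "***"


def prepare_sample(rows, key: bytes, fraction: float, seed: int = 0):
    """Sample a fraction of rows, then mask the sensitive fields in each."""
    rng = random.Random(seed)  # fixed seed keeps the sample repeatable
    k = max(1, int(len(rows) * fraction)) if rows else 0
    picked = rng.sample(rows, k)
    return [
        {
            **row,
            # Field names below are assumptions about the input shape.
            "customer_id": pseudonymize(row["customer_id"], key),
            "email": mask_email(row["email"]),
        }
        for row in picked
    ]
```

The key should come from the PoC's secrets handling rather than source code, and it should be destroyed as part of the cleanup plan if re-identification must be ruled out.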

Industry and Regional Compliance Requirements

Certain industries bring compliance considerations into PoCs early, regardless of geography.

Common examples include:

- Healthcare data regulations across regions

- Financial services and market oversight requirements

- Internal enterprise policies that exceed regulatory baselines

Third-Party and Platform Risk Exposure

Most PoCs rely on external platforms or services. That introduces risk, even at a limited scale.

Teams typically assess:

- What data is shared externally

- How third parties manage access and security

- Contractual or licensing constraints

Audit Trails and Documentation Discipline

PoCs gain credibility when decisions can be traced.

What helps:

- Clear documentation of architecture and assumptions

- Records of access and configuration changes

- Explicit notes on known gaps and unresolved risks

These practices enable PoCs to move forward globally without becoming compliance bottlenecks.

Measuring PoC Outcomes: Metrics, KPIs, and Evaluation Models

PoC in software development often falls apart at the measurement stage. Not because teams did not collect data, but because they collected the wrong kind of data. In enterprise settings, outcomes need to make sense to both business and technical stakeholders. One without the other rarely drives a decision.

Business Metrics vs Technical Metrics

Both matter, but they answer different questions.

Business-focused signals usually include:

- Expected impact on cost, revenue, or efficiency

- Reduction in manual effort or process time

- Risk exposure if the solution is scaled

Technical-focused signals tend to cover:

- System latency under realistic load

- Reliability and failure behavior

- Integration complexity with existing systems

Keeping these separate avoids confusion during reviews.

Core Indicators Teams Usually Track

Most enterprise PoCs narrow measurement down to a small set of indicators:

- Cost: estimated run cost, licensing, or infrastructure impact

- Latency: response times under expected conditions

- Reliability: error rates, failure recovery, stability

- Risk: security gaps, compliance concerns, dependency risk

Example PoC Evaluation Matrix

| Area | Metric Tracked | Result | Threshold Met |
|---|---|---|---|
| Performance | Avg response time | 320 ms | Yes |
| Cost | Monthly run estimate | High | No |
| Security | Access control gaps | Minor | Partial |
| Integration | Legacy system impact | Medium | Yes |

This kind of view helps leadership quickly scan outcomes.
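The same matrix can be turned into a recommendation with a small, explicit rule. The rule in this sketch is an assumption made for illustration, not a standard: a missed threshold in a designated blocking area means stop, any other miss or partial result means rethink, and a clean sheet means proceed. The `Status` enum, the `recommend` function, and the choice of Security as the blocking area are all hypothetical.

```python
from enum import Enum


class Status(Enum):
    YES = "Yes"
    PARTIAL = "Partial"
    NO = "No"


# Rows mirror the example evaluation matrix above.
MATRIX = [
    ("Performance", "Avg response time", "320 ms", Status.YES),
    ("Cost", "Monthly run estimate", "High", Status.NO),
    ("Security", "Access control gaps", "Minor", Status.PARTIAL),
    ("Integration", "Legacy system impact", "Medium", Status.YES),
]


def recommend(matrix, blocking_areas=frozenset({"Security"})):
    """Apply the illustrative rule and list the areas that need follow-up."""
    misses = [area for area, _, _, status in matrix if status is Status.NO]
    partials = [area for area, _, _, status in matrix if status is Status.PARTIAL]
    if any(area in blocking_areas for area in misses):
        decision = "stop"
    elif misses or partials:
        decision = "rethink"
    else:
        decision = "proceed"
    return {"decision": decision, "follow_up": misses + partials}


if __name__ == "__main__":
    print(recommend(MATRIX))
```

Whatever rule a team picks, agreeing on it before results arrive keeps the decision from bending to fit the outcome.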

Handling Mixed or Inconclusive Results

Not every PoC ends cleanly. Some results fall in between. When that happens, teams usually:

- Call out what was validated versus what remains unknown.

- Recommend targeted follow-up tests.

- Avoid forcing a go or stop decision without evidence.

Clarity here matters more than certainty.

How PoC in Software Development Fits Into Broader Product & Innovation Lifecycles

In large organizations, PoCs can cause problems when they operate in isolation. A team runs one, shows the results, and then everyone moves on without knowing what to do with the outcome. The work feels finished, but nothing really changes.

PoCs work better when they sit in the middle of the product flow, not on the side.


They usually come after early ideas have been discussed, but before budgets, delivery teams, and timelines are locked in. That timing matters. It gives leadership a chance to say yes, no, or not yet with real information.

PoCs also tend to shape product and architecture planning more than teams expect. Decisions about integrations, data limits, or performance constraints often come directly from PoC findings, even if no one labels them that way.

What typically carries forward:

- Assumptions that were tested and held up

- Constraints that need to be designed around

- Risks that need more work before scaling

At a portfolio level, PoCs help organizations decide where to invest next and what to stop early. When used this way, they reduce restarts and repeated debates. The work connects instead of resetting each time.


Future Directions in Proof of Concept Software Development

Proof of concept software development is changing because enterprises are changing how they make decisions. Teams are expected to validate more quickly, surface risks earlier, and provide clearer signals to leadership. That pressure is shaping how PoCs are designed and what they are expected to deliver.

The trends below reflect how PoCs are already being used across large organizations.

AI-First and Model-Driven PoCs

More PoCs now revolve around models rather than application features. The focus has shifted from whether something can be built to whether models behave reliably under real conditions.

What teams are validating:

- Model accuracy with real or near-real data

- Latency and inference cost at expected volumes

- Failure behavior and edge cases

Compliance-Led Validation Approaches

Enterprises are increasingly using PoCs to test regulatory assumptions early, especially when data, automation, or cross-border systems are involved.

What this includes:

- Early privacy and data handling checks

- Documented assumptions for audit review

- Validation aligned with regional regulations

Shorter PoC Cycles With Higher Rigor

PoCs are getting shorter without becoming less disciplined. Teams are cutting scope rather than validation.

Common changes:

- Fewer features, deeper testing

- Faster checkpoints for go or stop decisions

- Clear exit criteria
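
One way to make exit criteria explicit is to write them down as data and evaluate them at each checkpoint. The sketch below assumes a simple rule that is not taken from this article: any missed criterion means "stop", any unmeasured criterion means "not yet", and otherwise the answer is "go". The class name `ExitCriterion`, the function `checkpoint_decision`, and the sample criteria are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExitCriterion:
    name: str
    target: float
    measured: Optional[float] = None  # None means not yet measured
    higher_is_better: bool = True

    def met(self) -> Optional[bool]:
        """True or False once measured, None while the measurement is missing."""
        if self.measured is None:
            return None
        if self.higher_is_better:
            return self.measured >= self.target
        return self.measured <= self.target


def checkpoint_decision(criteria):
    """Assumed rule: a miss means stop, a gap means not yet, otherwise go."""
    if not criteria:
        raise ValueError("at least one exit criterion is required")
    results = [c.met() for c in criteria]
    if any(r is False for r in results):
        return "stop"
    if any(r is None for r in results):
        return "not yet"
    return "go"


# Illustrative criteria only.
criteria = [
    ExitCriterion("records reconciled (%)", target=99.0, measured=99.4),
    ExitCriterion("batch runtime (minutes)", target=30.0, higher_is_better=False),
]
print(checkpoint_decision(criteria))  # runtime is unmeasured, so the result is "not yet"

criteria[1].measured = 42.0
print(checkpoint_decision(criteria))  # runtime misses its target, so the result is "stop"
```

Keeping the criteria in one structure also makes it harder for them to shift quietly between checkpoints.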

PoCs as Vendor and Procurement Filters

PoCs are often used to evaluate vendors under real constraints before long-term commitments are made.

Teams look at:

- Integration effort with existing systems

- Security posture and controls

- Cost and support implications
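
A weighted scorecard is one common way to compare vendors on these dimensions. The sketch below is an assumption about how such a comparison might be set up, not a method described in this article: the weights, the 1 to 5 rating scale, the vendor names, and the function `weighted_score` are all hypothetical.

```python
def weighted_score(ratings, weights):
    """Weighted average of ratings; every weighted dimension must be rated."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[k] * ratings[k] for k in weights) / total_weight


# Hypothetical weights; higher means the dimension matters more to the decision.
WEIGHTS = {"integration_effort": 3, "security_posture": 4, "cost_and_support": 3}

# Illustrative ratings on a 1-5 scale where 5 is best
# (for integration_effort, 5 means the least effort observed in the PoC).
vendors = {
    "vendor_a": {"integration_effort": 4, "security_posture": 5, "cost_and_support": 3},
    "vendor_b": {"integration_effort": 5, "security_posture": 3, "cost_and_support": 4},
}

ranked = sorted(vendors, key=lambda v: weighted_score(vendors[v], WEIGHTS), reverse=True)
for name in ranked:
    print(name, round(weighted_score(vendors[name], WEIGHTS), 2))
```

The ratings only carry weight if they come from what the PoC actually observed under real constraints rather than from vendor documentation.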

Greater Focus on Cost-of-Scale Modeling

Enterprises are paying closer attention to how costs behave beyond the PoC stage, not just whether something works.

What teams test early:

- Infrastructure and licensing costs at scale

- Operational overhead

- Long-term sustainability
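
A rough cost-of-scale model can be as simple as projecting the same cost formula across several volumes. The sketch below is a simplified assumption: the cost structure (fixed infrastructure, a per-request rate, tiered licensing, and operations hours), every figure in it, and the function `monthly_cost_usd` are hypothetical and would be replaced by numbers measured during the PoC.

```python
def monthly_cost_usd(requests, *, fixed_infra_usd, usd_per_1k_requests,
                     license_tiers, ops_hours, ops_hourly_usd):
    """Project total monthly cost for a given request volume."""
    infra = fixed_infra_usd + usd_per_1k_requests * requests / 1000
    # Tiers are (volume ceiling, monthly fee), ordered by ceiling; take the first that fits.
    license_fee = next(fee for ceiling, fee in license_tiers if requests <= ceiling)
    ops = ops_hours * ops_hourly_usd
    return infra + license_fee + ops


# Illustrative licensing tiers, not real vendor pricing.
LICENSE_TIERS = [(1_000_000, 500), (10_000_000, 3000), (float("inf"), 12000)]

# (label, monthly requests, assumed operations hours per month)
scenarios = [
    ("PoC volume", 100_000, 20),
    ("10x", 1_000_000, 30),
    ("100x", 10_000_000, 60),
]

for label, requests, ops_hours in scenarios:
    total = monthly_cost_usd(
        requests,
        fixed_infra_usd=2000,
        usd_per_1k_requests=0.5,
        license_tiers=LICENSE_TIERS,
        ops_hours=ops_hours,
        ops_hourly_usd=80,
    )
    per_1k = total / (requests / 1000)
    print(f"{label}: ${total:,.0f} per month, ${per_1k:,.2f} per 1k requests")
```

Comparing cost per thousand requests across scenarios shows whether unit cost falls, flattens, or jumps at a licensing tier boundary as volume grows.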

These shifts are turning PoCs into sharper decision tools rather than open-ended experiments.

Why Appinventiv Is a Trusted Partner for Enterprise PoC Software Engineering Development

Most enterprises do not look for a PoC software development partner because they need help writing code. They look for one because they want fewer surprises once real money and timelines are involved.

That is where Appinventiv’s proof of concept software development services usually fit.

As a PoC software development company, Appinventiv has worked on 2,000+ software products, many of them inside large organizations with existing systems, approvals, and constraints. A 95% on-time delivery record matters in PoC work because delays often signal unclear scope or weak ownership. Those issues tend to surface early.

There is also a lot of experience dealing with complexity that does not show up in demos. Appinventiv’s custom software development services have helped transform 500+ legacy processes and have been applied across 35+ industries, so PoCs are built with real environments in mind, not ideal ones.

Trust shows up over time. A 95% client satisfaction rate and 90% repeat clientele usually mean teams come back because earlier work held up under pressure.

Behind the scenes, 1,600+ technologists and 10+ years of experience support delivery, so PoCs do not stall when they move into the next phase. Security and continuity are also treated as baseline expectations, with 99.5% compliance standards and 24/7 support.

The result is PoC work that teams can actually build on, not explain away later.

Frequently Asked Questions

Q. What is PoC in software development?

A. In software development, a proof of concept (PoC) is a focused exercise used to validate whether a proposed solution can work within real technical and operational constraints. It helps teams test feasibility, uncover risks, and challenge assumptions early, before committing significant budget, delivery timelines, or long-term architectural decisions.

Q. Why does software need proof of concept?

A. Enterprises rely on proof of concept in software development to reduce uncertainty before making high-impact decisions. A PoC allows teams to test integration complexity, data readiness, performance limits, and security expectations early. This helps prevent costly rework later and gives leadership the evidence to approve, pause, or stop an initiative before committing to scale.

Q. What makes a proof of concept successful at the enterprise level?

A. An enterprise PoC is successful when it enables a clear decision, not when it produces a polished demo. Success depends on well-defined validation criteria, realistic constraints, early involvement from risk and security teams, and transparent documentation of what worked, what failed, and what remains uncertain.

Q. What is the cost of POC software development for enterprises?

A. For enterprises, proof of concept development typically costs between $40,000 and $400,000, depending on scope, data complexity, integrations, and compliance needs. Costs increase when regulated data, legacy systems, or AI models are involved. A well-scoped PoC justifies its price by reducing downstream delivery risk.

Q. How long does it take to develop a software POC?

A. Most enterprise PoCs are completed within 3 to 12 weeks. Simpler feasibility validations fall on the lower end, while PoCs involving compliance reviews, data pipelines, or AI validation take longer. When PoCs extend beyond this range, unclear scope is usually the cause.

Q. What should enterprises include in a PoC checklist and best practices?

A. An enterprise PoC checklist should cover requirements engineering, clear needs and goals, and alignment with the business plan. It should confirm feasibility, funding readiness, and stakeholder involvement through a requirements workshop. Building a prototype using suitable software development methodologies and incorporating early user feedback improves decision confidence.

Q. Why is a proof of concept important for enterprises?

A. A PoC matters because it lets enterprises test feasibility with a working PoC prototype, validate UI/UX assumptions, and gather stakeholder feedback before committing to a full build. It also supports teamwork and change management, reduces risk and cost, strengthens investor confidence, clarifies market value, informs the roadmap, and aligns the solution with its intended user base.
