- Why Traditional Operations Hit AI Scaling Walls

- MLOps vs DevOps: A Strategic Comparison

- Understanding the Cost of Getting MLOps Wrong

- Silent Model Failure

- Escalating Operational Cost

- Compliance and Audit Exposure

- Loss of Leadership Confidence in AI

- How MLOps Pays for Itself

- Faster Time-to-Value

- Lower Cost per Model

- Reduced Operational Drag

- Controlled Risk and Fewer Surprises

- MLOps Success Stories: Real Companies, Real Revenue Impact

- Netflix's Runway Platform

- Microsoft's Azure MLOps Framework

- Starbucks' Deep Brew Platform

- Building AI That Works at Scale: How Enterprises Actually Implement MLOps

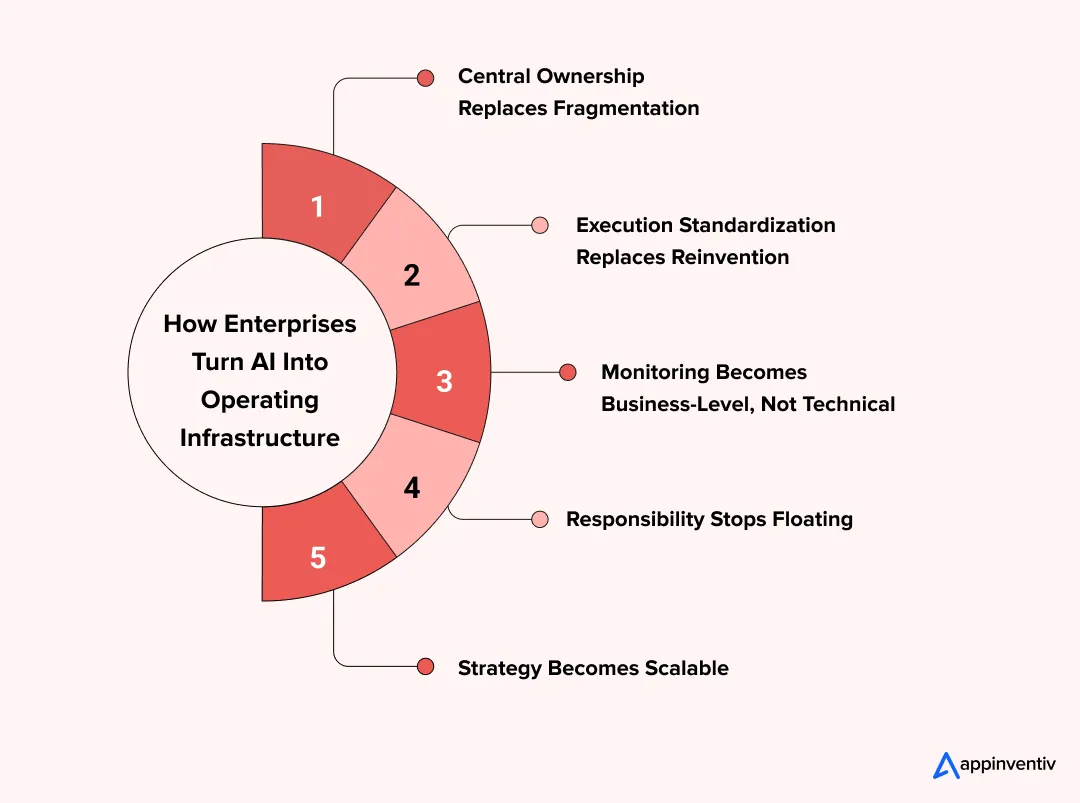

- Central Ownership Replaces Fragmentation

- Execution Standardization Replaces Reinvention

- Monitoring Becomes Business-Level, Not Technical

- Responsibility Stops Floating

- Strategy Becomes Scalable

- Scaling Right: MLOps Best Practices for Enterprise AI

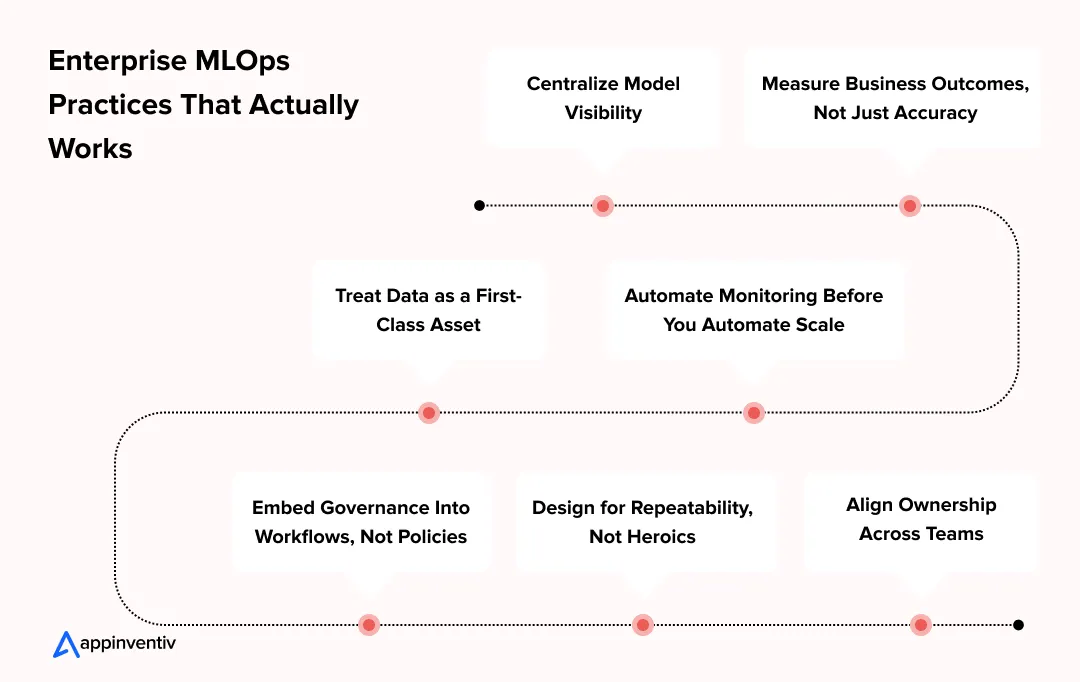

- Centralize Model Visibility

- Measure Business Outcomes, Not Just Accuracy

- Treat Data as a First-Class Asset

- Automate Monitoring Before You Automate Scale

- Embed Governance Into Workflows, Not Policies

- Design for Repeatability, Not Heroics

- Align Ownership Across Teams

- Common Mistakes Leaders Make With MLOps

- Where Enterprises Go Wrong and How to Fix It

- Future of MLOps and DevOps: Where the Technologies are Heading Next?

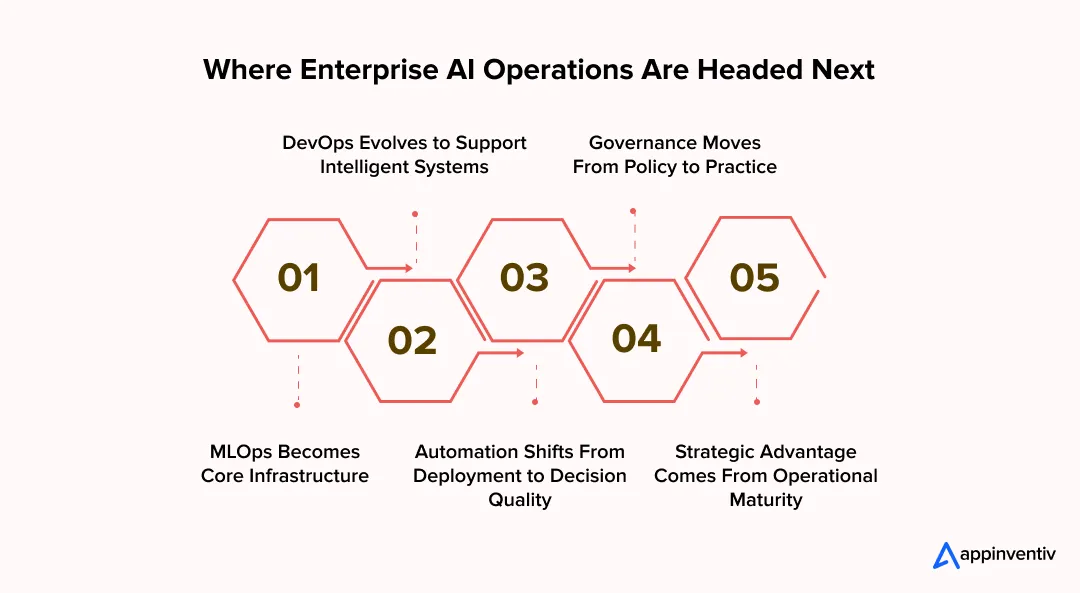

- MLOps Becomes Core Infrastructure

- DevOps Evolves to Support Intelligent Systems

- Automation Shifts From Deployment to Decision Quality

- Governance Moves From Policy to Practice

- Strategic Advantage Comes From Operational Maturity

- How Appinventiv is Your Partner for Enterprise-Scale AI

- FAQs

Key takeaways:

- DevOps runs systems. MLOps keeps decisions accurate.

- MLOps vs DevOps is a leadership choice, not a technical one.

- Scaling AI without MLOps only scales risk.

- MLOps turns AI into infrastructure, not experiments.

- Operational maturity beats model sophistication.

- Real advantage comes from mastering DevOps and machine learning together.

Most leadership teams don’t fail at AI because of bad models. They fail because the model works once and then quietly stops working when no one is watching. Accuracy drops, results become unreliable, and suddenly the tool that everyone celebrated six months ago is no longer trusted. That is not an engineering problem. It is an operational one. And it is showing up inside organizations faster than most leaders expect.

Many enterprises assume that strong DevOps skills alone will carry them through this next wave of AI adoption. But what worked for deploying applications does not automatically work for managing intelligence. Models depend on data that keeps changing, they degrade over time, and they behave differently in the real world than they did in testing. This is where the conversation around MLOps vs DevOps really begins. Not as a technical debate, but as a leadership decision about whether AI becomes a durable business capability or an unstable experiment.

This is also why more CIOs and CTOs are now re-examining how DevOps and machine learning actually work together in practice. Deployment pipelines alone do not catch data drift. Monitoring uptime does not tell you whether a model is making bad decisions. And models do not retrain themselves. When enterprises rely only on DevOps to run intelligence systems, the result is often fragile automation and blind spots that grow quietly until they disrupt operations or revenue.

The growing focus on DevOps vs MLOps is not about replacing one with the other. It is about recognizing that managing code is very different from managing decision-making systems. As AI moves into customer experience, forecasting, pricing, risk, and operations, leaders are discovering that DevOps for machine learning must be extended with new disciplines that watch data quality, model behavior, and long-term performance in real business conditions.

While DevOps manages traditional software efficiently, AI integration in DevOps workflows represents the evolution needed for intelligent, self-optimizing systems that go beyond code deployment to enable predictive operations.

This blog breaks down that shift in practical terms. You will see where DevOps fits, where it stops, and why organizations that succeed at scaling AI initiatives with MLOps treat AI operations as a business system, not a science project.

We will walk through real-world examples, common failures, and the best practices of MLOps and DevOps that separate companies running AI at scale from those stuck troubleshooting it. The goal is simple: help you decide how to build AI that keeps working, not AI that merely launches.

Why Traditional Operations Hit AI Scaling Walls

DevOps has done a remarkable job of modernizing how software is built and deployed. Most enterprises today can push updates faster, automate testing, and keep infrastructure stable. But artificial intelligence does not behave like traditional software. Models do not stay the same after release. They change as new data flows in, customer behavior shifts, and business conditions evolve. What used to be a linear pipeline becomes something far more unpredictable once machine learning enters the picture.

This is where the limits of DevOps start to appear. While DevOps manages code efficiently, it was never designed to manage data that changes daily or models that degrade quietly in production. In real AI environments, performance drops without warning, outcomes drift away from expectations, and technical teams often do not realize there is a problem until business metrics start slipping.

This is what turns the MLOps vs DevOps debate into a business discussion, not a technical one. DevOps handles deployment. MLOps handles intelligence after deployment. One keeps systems stable; the other keeps decisions accurate.

Consider high-impact use cases like fraud detection or predictive maintenance. The code may work perfectly, yet results fail because the model was trained on outdated patterns. That is what data drift looks like inside a business. And when it happens, leaders do not just lose accuracy. They lose trust in the system, confidence in forecasts, and clarity in decision-making. This is why the DevOps vs MLOps difference matters at scale. Without treating data and models as first-class operational assets, AI slowly stops delivering value.

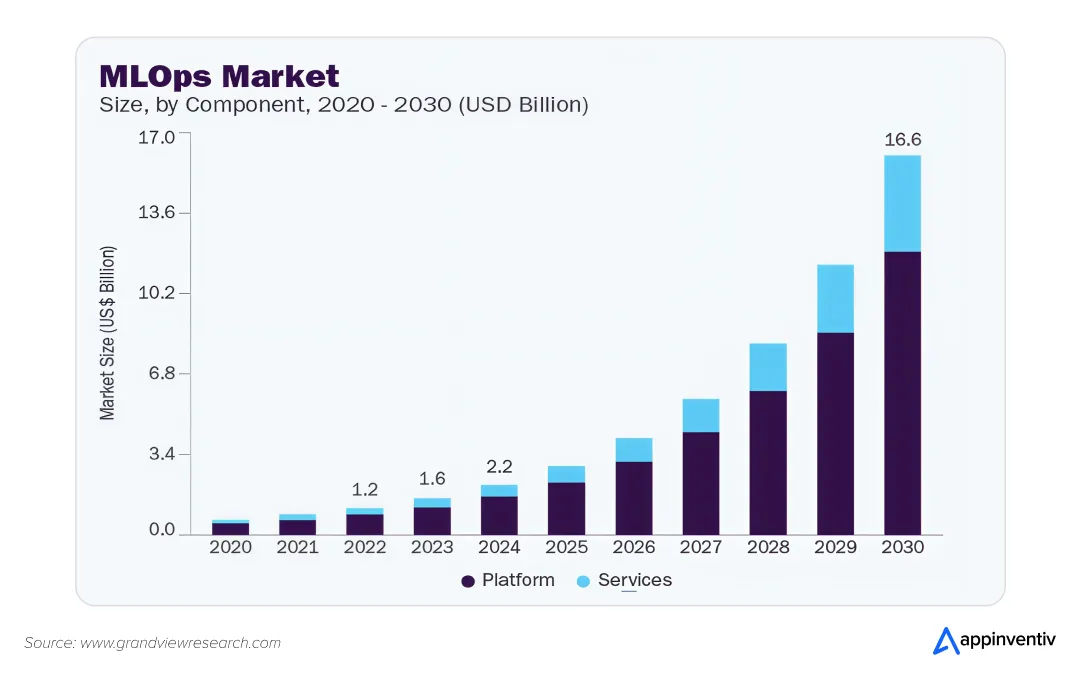

That is also why organizations focused on scaling AI initiatives with MLOps over the long term are seeing faster deployment cycles, fewer failures, and more predictable outcomes. The market growth reflects this shift. In 2024, the global MLOps market was valued at $2.19 billion. It is projected to grow to $16.61 billion by 2030, showing how quickly enterprises are formalizing AI operations beyond experimentation.

Enterprises moving toward MLOps for enterprise AI adoption are doing it for one reason: execution. They are moving past theory into systems that can run and adapt at scale. The conversation has shifted from “Do we need MLOps?” to “How fast can we operationalize it?”

This leaves leadership teams with three critical questions:

- How do you keep models reliable after deployment?

- Are your best data scientists solving business problems or fixing production issues?

- Can you roll models out consistently across the organization without rebuilding everything each time?

MLOps vs DevOps: A Strategic Comparison

Before comparing DevOps and MLOps, one thing must be clear: they were built to solve very different problems. Confusing them is one of the main reasons AI programs stall after early success. Understanding what each discipline actually controls is the difference between running software and running intelligence.

What DevOps Really Means

DevOps was created to solve a specific enterprise problem: software teams were moving faster than operations teams could safely deploy. The result was delay, risk, and constant conflict between teams responsible for building systems and those responsible for keeping them running. Enterprise DevOps introduced shared ownership, automation, and continuous delivery as a way to remove that friction.

DevOps principles focus on making software releases predictable, repeatable, and reliable through CI/CD pipelines, infrastructure automation, and monitoring practices.

In short, DevOps is about getting code into production efficiently and safely.

Why Does MLOps Exist?

MLOps was not invented to replace DevOps; it was created because DevOps alone cannot manage learning systems. Machine learning introduces a new operating variable that traditional software does not have: models change behavior based on data. A deployed model can become less accurate even if the code never changes. Data pipelines evolve. Predictions drift. A system that worked yesterday can make poor decisions today with no visible system failure.

MLOps brings structure to this reality. It adds versioning for data, monitoring for model behavior, automated retraining, validation pipelines, and governance controls that DevOps was never designed to provide. It turns machine learning from experimentation into a managed business discipline.

If DevOps keeps systems running, MLOps keeps decisions correct.

How DevOps and MLOps Work Together

The relationship is not competitive. DevOps provides the delivery engine. MLOps manages the intelligence inside it. Most successful enterprises do not choose one over the other. They use DevOps for infrastructure and application lifecycle management, and layer MLOps on top to handle data, models, and performance in production.

This is where the distinction between MLOps and DevOps becomes meaningful for leadership.

Without DevOps, you cannot deploy.

Without MLOps, you cannot trust what you have deployed.

While both methodologies share automation and collaboration principles, their operational focus and business impact differ significantly when applied to AI initiatives. Understanding these distinctions helps organizations allocate resources effectively and set realistic expectations for their AI transformation journey. The comparison below highlights the key operational areas where DevOps and MLOps diverge, particularly in handling the unique challenges of machine learning systems at enterprise scale.

| Operational Aspect | DevOps | MLOps | Why It Matters for Business |
|---|---|---|---|
| Primary Focus | Managing the lifecycle of software code | Managing the lifecycle of models, data, and algorithms | MLOps addresses AI-specific risks beyond DevOps’ scope |
| Deployment Artifacts | Static code packages & applications | Models, datasets, and hyperparameters | Ensures reproducible AI deployments at scale |
| Monitoring | System performance & uptime metrics | Model accuracy, data drift, and business KPIs | Prevents costly model degradation and failures |
| Version Control | Code repositories (e.g., Git) | Code, data, and model lineage tracking | Ensures compliance and clear audit trails |
| Testing | Unit, integration, and performance tests | Statistical validation, A/B testing, bias detection | Reduces bias and liability risks in AI |
| Rollback | Simple reversion to previous code | Restoring models and data states is more complex | Minimizes disruption from AI-related failures |
| Collaboration | Developers + Operations engineers | Data Scientists, Engineers, Domain Experts | Breaks down silos across AI projects |
| Scaling | Infrastructure capacity management | Balancing data quality and consistent model performance | Enables reliable AI growth across use cases |
| Automation | CI/CD pipelines for code deployment | End-to-end ML pipelines with retraining workflows | Cuts manual AI maintenance by 40–70% |
| Risk Management | Downtime and security vulnerabilities | Model bias, compliance, data privacy | Shields business from AI-specific risks |
| Success Metrics | Deployment frequency, system uptime | Accuracy, business value, time-to-insight | Ties AI performance directly to revenue |
| Resources Needed | DevOps engineers, infrastructure | MLOps specialists, compute-intensive training | Requires specialized talent and infrastructure investment |

Understanding the Cost of Getting MLOps Wrong

AI failures inside enterprises rarely arrive loudly. They arrive quietly, disguised as small performance drops, slightly worse forecasts, or gradual loss of trust in automation. By the time leadership notices, the damage is operational, financial, and reputational. When MLOps is missing or poorly implemented, organizations typically face the following:

Silent Model Failure

Models continue running long after real-world conditions have changed. Predictions still look “technically valid” but are wrong for the business. Revenue decisions drift. Risk models weaken. Forecasts stop matching reality.

Escalating Operational Cost

Teams spend more time fixing broken pipelines than building value. Engineers become firefighters. Data scientists lose weeks restoring performance that should have been monitored and maintained automatically.

Compliance and Audit Exposure

Without model lineage, data traceability, and deployment history, enterprises cannot explain decisions made by AI systems. That creates regulatory pressure, legal uncertainty, and governance breakdowns.

Loss of Leadership Confidence in AI

The greatest cost is not operational. It is strategic. When AI fails unpredictably, leadership stops trusting intelligent systems altogether, slowing digital transformation and leaving the organization behind competitors.

Why Is This a Leadership Issue, Not a Technical One?

Choosing not to formalize MLOps is rarely an intentional decision. It is usually an oversight. But the outcome is the same: AI remains unreliable, expensive, and difficult to scale.

This is why MLOps for enterprise AI adoption is no longer optional. It is how organizations protect AI investments from erosion and build intelligence that leadership can trust in boardrooms, earnings calls, and strategy reviews.

How MLOps Pays for Itself

MLOps is often assumed to be an engineering expense. In reality, it is one of the most effective cost control mechanisms inside an AI-led organization. Not because it adds technology, but because it removes waste, uncertainty, and rework from the system.

When machine learning runs without operational discipline, organizations bleed value in small but constant ways. Teams manually rerun models. Engineers troubleshoot production failures. Data scientists spend time repairing pipelines instead of building new capabilities. Infrastructure scales unpredictably. All of this adds up to rising operational spend without corresponding business benefit. Organizations implementing MLOps for AI initiatives typically see returns across four dimensions.

Faster Time-to-Value

AI initiatives do not generate value when models are built. They generate value when models are running reliably in production. MLOps shortens the gap between experimentation and deployment by standardizing workflows and automating transitions. Instead of month-long handoffs between teams, models move through repeatable pipelines that reduce delays and miscommunication.

The result is earlier impact, faster iteration, and shorter cycles between insight and action.

Lower Cost per Model

Without MLOps, every model becomes a custom project. With MLOps, models become reusable assets. Once pipelines, testing processes, and monitoring frameworks are in place, the cost of launching the second model is dramatically lower than that of the first.

This is where the benefits of MLOps in business become clear. The organization stops paying per experiment and starts building compounding value.

Reduced Operational Drag

Well-implemented pipelines remove the daily friction that slows teams down. Monitoring identifies issues early. Automated retraining restores performance without human intervention. Versioning ensures rollbacks are clean and controllable.

This is where the shift from DevOps-only approaches to machine learning toward purpose-built MLOps delivers returns. The system runs itself instead of consuming your best talent.
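To make the monitor, retrain, and roll back loop described above more concrete, here is a minimal Python sketch of the decision logic around it. It assumes accuracy can be measured on recently labelled production data. The names `ModelRelease`, `needs_retraining`, and `should_promote`, the 0.05 tolerance, the 0.01 promotion margin, and the scores are all hypothetical choices for illustration, not a standard API. The retraining job itself is left out.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRelease:
    """Hypothetical release history for one model; the last entry is live."""
    versions: list[str] = field(default_factory=list)

    @property
    def live(self) -> str:
        return self.versions[-1]

    def promote(self, version: str) -> None:
        self.versions.append(version)

    def rollback(self) -> str:
        # Drop the current version and return to the previously promoted one.
        if len(self.versions) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.versions.pop()
        return self.live


def needs_retraining(live_accuracy: float, baseline_accuracy: float,
                     tolerance: float = 0.05) -> bool:
    """Flag retraining when live accuracy falls more than `tolerance` below baseline."""
    return live_accuracy < baseline_accuracy - tolerance


def should_promote(candidate_score: float, live_score: float,
                   min_gain: float = 0.01) -> bool:
    """Champion/challenger gate; both scores must come from the same evaluation data."""
    return candidate_score >= live_score + min_gain


release = ModelRelease(["fraud-v1"])
if needs_retraining(live_accuracy=0.83, baseline_accuracy=0.91):
    # A retraining job would run here; the candidate score below is made up.
    if should_promote(candidate_score=0.90, live_score=0.83):
        release.promote("fraud-v2")
print(release.live)        # fraud-v2
print(release.rollback())  # fraud-v1
```

Keeping the promotion check separate from the retraining trigger is deliberate. A retrained model that does not clearly beat the live one is simply not released, so automated retraining cannot quietly make things worse.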

Controlled Risk and Fewer Surprises

Every undetected model failure carries financial risk. MLOps reduces the probability of those failures silently spreading through operations. Models are tested before deployment. Performance is tracked continuously. Errors are isolated quickly.

That control translates directly to fewer corrective projects, less disruption, and stronger confidence in AI-driven decisions.

When organizations commit to best practices for scaling AI with MLOps, spending becomes predictable and performance becomes measurable. AI stops behaving like an unpredictable research function and starts operating like infrastructure.

And infrastructure, when built correctly, always pays for itself.

MLOps Success Stories: Real Companies, Real Revenue Impact

The proof of MLOps’ value lies in the measurable results it delivers for some of the world’s most data-driven companies. These examples of MLOps implementation are not just success stories; they are strategic blueprints that demonstrate tangible business impact and competitive advantage.

Netflix’s Runway Platform

Netflix’s internal MLOps platform, Runway, manages thousands of machine learning models driving its personalization system. The platform processes millions of user interactions daily, with automated A/B testing frameworks that continuously optimize recommendation quality. This MLOps infrastructure enables rapid iteration and deployment across multiple user segments, directly contributing to subscriber retention.

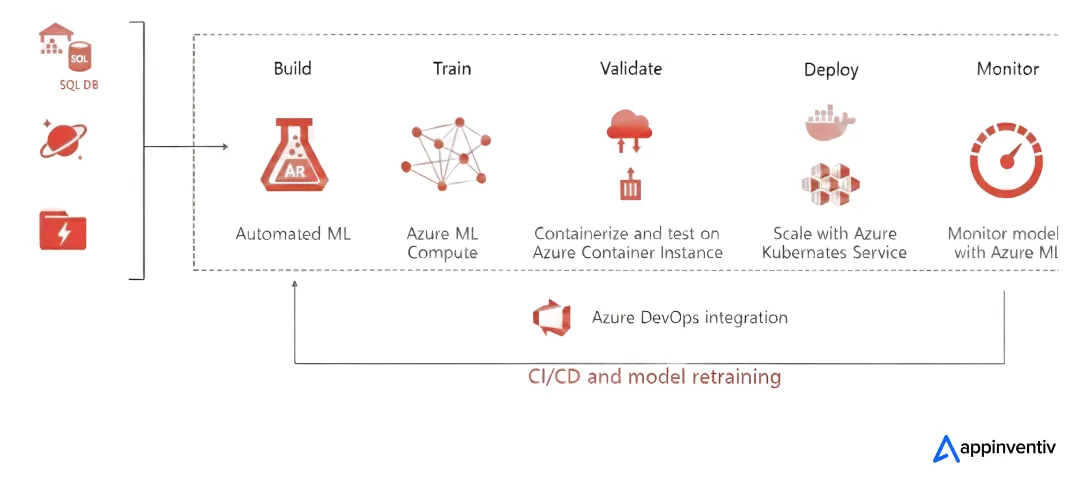

Microsoft’s Azure MLOps Framework

Microsoft’s MLOps v2 architectural framework, launched in July 2024, demonstrates enterprise-scale implementation across classical ML, computer vision, and natural language processing workloads. With Azure’s annual revenue surpassing $75 billion in fiscal 2025 and a $30 billion AI infrastructure investment planned for Q1 2026, their MLOps capabilities showcase the strategic importance of DevOps and machine learning integration at scale.

Starbucks’ Deep Brew Platform

Starbucks launched Deep Brew, its AI-driven MLOps platform, in 2019, and the company has since reached $36.7 billion in net revenue, growing 3.75% annually. Deep Brew uses MLOps practices to personalize customer experiences, optimize store operations, and manage inventory across thousands of global locations, showing how DevOps and machine learning integration creates real business results.

Success stories like these signal a broader shift in how enterprises integrate DevOps and machine learning. When Microsoft, Netflix, and Starbucks make serious investments, it confirms that MLOps has moved beyond operational improvement into competitive necessity. Market growth shows that MLOps vs DevOps discussions now focus on implementation strategy rather than basic comparisons, and enterprise-scale AI transformation demands that approach.

Building AI That Works at Scale: How Enterprises Actually Implement MLOps

Adopting MLOps is not a tooling decision. It is an operating model decision.

Enterprises that succeed with AI do not simply “install” MLOps. They redesign how intelligence moves through the organization: from experimentation to production, from monitoring to accountability, and from insight to action.

The companies that scale effectively share one common trait. They stop treating AI as a side initiative and start treating it as core business infrastructure. Here is what changes inside organizations that build AI correctly.

Central Ownership Replaces Fragmentation

In early-stage AI adoption, models live everywhere. One team builds a forecasting model. Another deploys a fraud engine. A third experiments with personalization. Each system runs in isolation.

MLOps introduces central oversight.

Models, data, experiments, and deployments are governed through shared frameworks. There is visibility into what is running, how it is performing, and who owns it. This is how portfolios replace projects and AI becomes manageable at scale.

Execution Standardization Replaces Reinvention

Without MLOps, every model launch feels like a fresh project. New pipelines. New testing flows. New deployment logic.

MLOps replaces improvisation with repeatability.

Teams use the same deployment paths, validation methods, and performance checks across initiatives. That consistency compounds. Each new model becomes faster to deploy than the last. The organization stops reinventing the wheel.

Monitoring Becomes Business-Level, Not Technical

In mature MLOps environments, monitoring is not limited to model accuracy.

Leaders track:

- revenue influence

- customer impact

- operational risk

- forecast reliability

This is where MLOps separates itself from traditional DevOps. The conversation moves from “Is the system running?” to “Is the system still making the right decisions?”

Responsibility Stops Floating

When AI fails, many organizations cannot clearly explain:

- Who owned the model

- Who approved the release

- Who monitors its behavior

- Who fixes it

MLOps makes responsibility visible.

Ownership structures become explicit. Audit trails become automatic. Accountability becomes measurable.

Strategy Becomes Scalable

The most important change is psychological.

When leadership knows AI will not decay silently, they invest more confidently. They scale more aggressively. And they integrate intelligence deeper into the organization.

This is how AI moves from “interesting” to “foundational.”

Scaling Right: MLOps Best Practices for Enterprise AI

Scaling AI is not about adding more models. It is about controlling the environment in which those models operate. Enterprises that succeed with MLOps treat it as infrastructure, not experimentation. The discipline lies not in tools, but in how intelligence is governed, deployed, and trusted across the organization.

Centralize Model Visibility

AI cannot be managed if it is scattered across teams and environments. Leaders should insist on a single operational view of all models in production, including ownership, business purpose, performance health, and deployment history. Centralized visibility reduces duplication, exposes hidden risk, and makes portfolio-level decisions possible instead of firefighting individual models.
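A single operational view can start as something as simple as a shared inventory. The sketch below is a hypothetical, in-memory illustration in Python. The `ModelRecord` fields mirror the attributes named above (owner, business purpose, health, deployment history), while the class names, health labels, and example models are assumptions. In practice this would be backed by a model registry or a database.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ModelRecord:
    """One row in a hypothetical enterprise model inventory."""
    name: str
    owner: str              # accountable person or team; empty means nobody owns it
    business_purpose: str
    health: str             # illustrative labels: "healthy", "degraded", "failing"
    last_deployed: date


class ModelInventory:
    """In-memory stand-in for a single operational view of production models."""

    def __init__(self) -> None:
        self._records: dict[str, ModelRecord] = {}

    def register(self, record: ModelRecord) -> None:
        # Reject duplicates so two teams cannot quietly ship the same model name.
        if record.name in self._records:
            raise ValueError(f"model already registered: {record.name}")
        self._records[record.name] = record

    def unowned(self) -> list[str]:
        return sorted(r.name for r in self._records.values() if not r.owner.strip())

    def needing_attention(self) -> list[str]:
        return sorted(r.name for r in self._records.values() if r.health != "healthy")

    def not_deployed_since(self, cutoff: date) -> list[str]:
        return sorted(r.name for r in self._records.values() if r.last_deployed < cutoff)


inventory = ModelInventory()
inventory.register(ModelRecord("demand-forecast", "supply-chain-analytics",
                               "weekly demand planning", "healthy", date(2024, 5, 1)))
inventory.register(ModelRecord("fraud-scoring", "", "card fraud screening",
                               "degraded", date(2023, 11, 20)))
print(inventory.unowned())                             # ['fraud-scoring']
print(inventory.needing_attention())                   # ['fraud-scoring']
print(inventory.not_deployed_since(date(2024, 1, 1)))  # ['fraud-scoring']
```

Queries like `unowned()` and `not_deployed_since()` are the portfolio-level questions that are hard to answer when models are scattered across teams.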

Measure Business Outcomes, Not Just Accuracy

Technical metrics tell you whether a model runs. Business metrics tell you whether it works. Mature MLOps programs link predictions directly to measurable outcomes such as revenue movement, cost reduction, or risk exposure. AI that cannot be measured in business terms is not ready to scale.
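The gap between accuracy and business value is easy to show with a small, hypothetical fraud example. In this Python sketch, the per-review cost of 5.0 and the transaction amounts are made-up numbers, and `net_value` is an illustrative metric rather than an industry standard. It credits the amount of fraud caught and charges a fixed cost for every transaction the model flags.

```python
def accuracy(predicted: list[bool], actual: list[bool]) -> float:
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def net_value(predicted: list[bool], actual: list[bool], amounts: list[float],
              review_cost: float = 5.0) -> float:
    """Hypothetical business metric: fraud amount caught minus the cost of reviewing flags."""
    if not (len(predicted) == len(actual) == len(amounts)):
        raise ValueError("inputs must have the same length")
    caught = sum(amt for p, a, amt in zip(predicted, actual, amounts) if p and a)
    review_spend = review_cost * sum(predicted)
    return caught - review_spend


actual = [True, True, False, False, False]     # two fraudulent transactions
amounts = [1000.0, 20.0, 50.0, 50.0, 50.0]
model_a = [True, False, False, False, False]   # catches the large fraud
model_b = [False, True, False, False, False]   # catches the small fraud

print(accuracy(model_a, actual), accuracy(model_b, actual))  # 0.8 0.8
print(net_value(model_a, actual, amounts))                   # 995.0
print(net_value(model_b, actual, amounts))                   # 15.0
```

Both models score 0.8 accuracy, yet one is worth far more to the business because it catches the high-value fraud. An accuracy dashboard alone would rate them as equals.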

Treat Data as a First-Class Asset

In software, code is king. In AI, data decides. Enterprises should apply the same discipline to data that they apply to source code: version control, quality checks, and clear ownership. This is one of the biggest differences between DevOps for machine learning and traditional DevOps delivery pipelines.
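Applying source-code discipline to data can begin with two habits: giving every dataset a reproducible version identifier and running basic quality checks before training. The Python sketch below derives a version ID from a SHA-256 hash of the data's canonical JSON form and flags missing required fields. The function names, the 12-character ID length, and the example columns are assumptions for illustration. Dedicated data versioning tools go much further, with storage, lineage, and diffing.

```python
import hashlib
import json


def dataset_version(rows: list[dict]) -> str:
    """Short content hash: identical data always maps to the same version ID."""
    # sort_keys makes column order irrelevant; row order still counts.
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def quality_issues(rows: list[dict], required: list[str]) -> list[str]:
    """List missing required values; an empty list means the basic checks passed."""
    issues = []
    for i, row in enumerate(rows):
        for column in required:
            if row.get(column) is None:
                issues.append(f"row {i}: missing '{column}'")
    return issues


training_rows = [
    {"customer_id": 1, "monthly_spend": 120.5},
    {"customer_id": 2, "monthly_spend": None},
]
print(dataset_version(training_rows))  # 12-character ID to record alongside the model
print(quality_issues(training_rows, ["customer_id", "monthly_spend"]))
```

Recording the version ID next to each trained model is what later lets an auditor answer "which data produced this decision?" Because row order is part of the hash, reordering rows produces a new version, while reordering columns does not.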

Automate Monitoring Before You Automate Scale

The fastest way to break AI is to scale it before you can see it. Organizations must build monitoring for drift, performance decay, and failure patterns before expanding usage. Scaling AI initiatives without that visibility simply scales risk.
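One widely used way to watch for input drift is the population stability index (PSI), which compares how a feature was distributed at training time with how it is distributed in live traffic. The sketch below is a simplified Python version that assumes equal-width bins over the training range. The bin count, the 1e-6 floor for empty bins, and the example data are illustrative choices. A common rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be set per use case.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               bins: int = 10) -> float:
    """PSI between a reference (training) sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Bin on the reference range; clamp live values that fall outside it.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]     # feature values seen in training
shifted = [0.5 + i / 200 for i in range(100)]  # live values crowded into the upper range
print(population_stability_index(reference, reference))       # 0.0
print(population_stability_index(reference, shifted) > 0.25)  # True
```

Running a check like this on a schedule, per feature and per model, is the kind of monitoring that should exist before usage expands.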

Embed Governance Into Workflows, Not Policies

Governance works when it is enforced automatically, not documented separately. Model approvals, data validation, access controls, and audit trails should be part of everyday pipelines. This is where MLOps for AI initiatives becomes operational discipline rather than compliance theater.
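Embedding governance in the pipeline can be as direct as a release gate that every deployment must pass. The following Python sketch assumes two preconditions, a passed data-validation step and sign-off from a set of required roles, and writes every decision to an audit log. The role names, the `release_gate` function, and the in-memory `AUDIT_LOG` list are hypothetical stand-ins for a real approval system and an append-only audit store.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ReleaseRequest:
    model: str
    version: str
    data_checks_passed: bool
    approvers: set[str] = field(default_factory=set)


AUDIT_LOG: list[dict] = []  # stand-in for an append-only audit store


def release_gate(req: ReleaseRequest, required_approvers: set[str]) -> bool:
    """Allow deployment only if data validation passed and every required role signed off.
    Every decision, allowed or blocked, is written to the audit log."""
    missing = required_approvers - req.approvers
    allowed = req.data_checks_passed and not missing
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "model": req.model,
        "version": req.version,
        "allowed": allowed,
        "missing_approvals": sorted(missing),
    })
    return allowed


request = ReleaseRequest("credit-risk", "v7", data_checks_passed=True,
                         approvers={"model-owner"})
print(release_gate(request, required_approvers={"model-owner", "risk-officer"}))  # False
print(AUDIT_LOG[-1]["missing_approvals"])  # ['risk-officer']
```

Because the gate records blocked releases as well as approved ones, the audit trail shows what someone tried to ship and why it was stopped, without a separate compliance report.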

Design for Repeatability, Not Heroics

If a model requires manual intervention to survive, it is not production-ready. Best practices for scaling AI with MLOps focus on making deployments boring in the best way possible. Standard pipelines, shared templates, and repeatable workflows reduce cost and increase reliability with every release.

Align Ownership Across Teams

AI breaks when accountability is vague. Executive sponsors, engineering leads, and business owners need clear responsibility for outcomes in both DevOps and machine learning operations. Ownership clarity prevents operational drift and strengthens trust in automated systems.

Common Mistakes Leaders Make With MLOps

MLOps fails in enterprises not because the idea is wrong, but because execution is misunderstood. Many leadership teams move too fast into implementation without aligning governance, ownership, and operational maturity. The result is a fragile AI environment that looks advanced but behaves unpredictably. Understanding these mistakes early helps enterprises avoid wasted spend, compliance surprises, and stalled AI programs just months after launch.

The most common trap in the MLOps vs DevOps shift is assuming that DevOps alone can handle machine learning at scale. While DevOps and machine learning work best together, they solve different problems. DevOps ensures systems run. MLOps ensures systems continue making the right decisions. Confusing the two is how early success becomes long-term instability.

Where Enterprises Go Wrong and How to Fix It

| Challenge | What It Means in Practice | How to Resolve It |
|---|---|---|
| Treating MLOps as a tool | MLOps is adopted as software rather than as an operating system for AI | Design MLOps as governance, workflow, and accountability combined |
| Relying only on DevOps | AI pipelines are forced into DevOps frameworks that were never built for dynamic models | Extend DevOps for machine learning with model monitoring, retraining, and data controls |
| Ignoring data drift | Models continue running after the real world has changed | Implement lifecycle monitoring and automated retraining |
| No executive ownership | AI systems operate without senior accountability | Assign leadership ownership for AI governance |
| Building in silos | Data science, engineering, and operations work independently | Create joint responsibility across teams |
| Delaying compliance | Governance is handled after systems go live | Embed audit readiness and approval workflows from day one |
| Scaling too early | AI expands without stability | Mature pipelines before growth |
| Underestimating cost | Infrastructure spend grows without controls | Implement cost monitoring and usage limits |

Leaders who avoid these challenges of MLOps and DevOps build AI systems that last longer, cost less, and fail less often. When MLOps is positioned as infrastructure instead of experimentation, organizations move from occasional success to reliable scale. This is when the importance of MLOps becomes evident, not as a technical advantage, but as a business safeguard.

Future of MLOps and DevOps: Where the Technologies are Heading Next?

The next phase of enterprise AI will not be driven by who builds the smartest models, but by who operates them with the most discipline. As artificial intelligence moves deeper into strategy, finance, and customer operations, leadership teams are recognizing that the real battle is no longer about innovation speed alone. It is about reliability, accountability, and long-term control. This is where the future of MLOps and DevOps becomes a leadership discussion, not just an engineering trend.

MLOps Becomes Core Infrastructure

In the years ahead, MLOps will no longer be treated as an optional capability layered onto existing workflows. It will sit alongside DevOps as a core operational system inside the enterprise. Just as DevOps once transformed how teams ship software, MLOps for enterprise AI adoption will define how organizations deploy and maintain intelligence at scale. Companies that formalize this shift early will build AI systems that are easier to expand, easier to govern, and far more dependable over time.

DevOps Evolves to Support Intelligent Systems

DevOps is not fading. It is changing. As DevOps and machine learning become inseparable, DevOps teams will take on greater responsibility for infrastructure performance, cost management, and deployment efficiency, while MLOps manages model behavior, performance drift, and retraining cycles. This partnership ends the old DevOps vs MLOps debate and replaces it with a shared operating model where both functions reinforce each other.

Automation Shifts From Deployment to Decision Quality

Most enterprises today automate releases. Tomorrow, they will automate reliability. The future of MLOps in business will focus less on how fast models go live and more on how safely they operate afterward. Monitoring will expand from system uptime into business outcomes. Alerts will trigger not only when servers fail, but when predictions degrade. This evolution will redefine what operational excellence looks like in MLOps for AI initiatives.

Governance Moves From Policy to Practice

AI governance will no longer live in documents. It will live in pipelines. From data lineage to model approvals, controls will be baked directly into workflows. This will allow enterprises to scale with confidence instead of fear. The organizations that master this will use MLOps as their accountability engine, not as a compliance afterthought. This shift reflects the growing importance of MLOps as a business control system, not merely a technical layer.

Strategic Advantage Comes From Operational Maturity

The next advantage will not be having AI. It will be running AI better than competitors. Enterprises mastering DevOps for machine learning will launch faster, fail less often, and recover quicker when something breaks. The future of MLOps and DevOps belongs to organizations willing to invest in operational depth, not just innovation headlines.

We can help enterprises operationalize MLOps, modernize DevOps, and build AI systems designed for what’s next, not just what works today.

How Appinventiv is Your Partner for Enterprise-Scale AI

We hope this blog has helped you understand how MLOps and DevOps compare. Enterprises come to Appinventiv when AI has moved beyond experiments and into revenue, risk, and operations. As a machine learning development services provider, our teams do not just build models. We design and run MLOps frameworks that keep those models reliable in real-world conditions. From the first proof of concept to scaled deployment, we align MLOps with your business priorities so AI supports strategy, not just technology roadmaps.

Because we work with global brands like IKEA, KFC, Adidas, Americana, The Body Shop, and leading financial and retail enterprises, we understand what it takes to make MLOps work inside complex environments. Our teams combine data science, DevOps, and machine learning engineering so you get one integrated view of your AI operations. That means fewer handoffs, clearer ownership, and faster movement from ideas to stable production systems.

For organizations serious about MLOps for enterprise AI adoption, we focus on three things. First, building secure, cloud-native pipelines on platforms like AWS, Azure and GCP that can grow with your use cases. Second, embedding monitoring, governance and audit trails into those pipelines so you are always ready for regulators, auditors and internal risk teams. Third, designing operating models where your internal team and ours work as one unit, with clear responsibilities and transparent performance metrics.

Enterprises measure MLOps success by outcomes, not activity. Reduced operational overhead, higher model reliability, and measurable improvement in business performance define whether AI delivers value at scale. That is why organizations choose Appinventiv when they need an AI development services partner capable of supporting both strategic leadership priorities and daily operational execution, without compromising reliability, governance, or performance.

Talk with our MLOps experts today and see how Appinventiv’s tested approaches can fast-track your move from experimental AI projects to company-wide intelligent systems that create real growth.

FAQs

Q. What is the difference between MLOps and DevOps?

A. Think of DevOps as the foundation for managing traditional software: it handles code, testing, and deployment exceptionally well. Adding AI models complicates things quickly. MLOps takes those proven DevOps principles and applies them to machine learning’s unique challenges: dataset management, model performance tracking, data drift handling, and continuous retraining. DevOps manages code that changes only when engineers change it, while MLOps manages models whose behavior shifts as the data they see changes, so they must be monitored and retrained over time.
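To make “data drift handling” more concrete, here is a minimal Python sketch of one common way to detect drift. It compares a feature’s distribution at training time against what the model sees in production using the Population Stability Index (PSI). The function names, the bucketing scheme, and the 0.2 retraining threshold are illustrative assumptions, not Appinventiv’s method or a standard API.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one feature; larger values indicate more drift."""
    # Bucket edges are derived from the training (expected) sample.
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp values outside the training range into the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Assumption: 0.2 is a commonly cited rule of thumb, not a universal standard.
DRIFT_THRESHOLD = 0.2


def needs_retraining(training_sample, live_sample) -> bool:
    """Hypothetical hook a pipeline could use to trigger retraining."""
    return population_stability_index(training_sample, live_sample) > DRIFT_THRESHOLD


training = [i / 100 for i in range(100)]      # feature values seen at training time
live = [0.5 + i / 200 for i in range(100)]    # production values shifted upward
print(needs_retraining(training, training), needs_retraining(training, live))
```

In a real pipeline, a check like this would typically run on a schedule for each monitored feature and raise an alert or start a retraining job instead of printing.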

Q. When should an enterprise invest in MLOps instead of “waiting to scale later”?

A. The moment AI affects revenue, risk, or customer experience, MLOps becomes a necessity, not a future upgrade. If models are influencing pricing, underwriting, personalization, forecasting, or operations, running them without MLOps adds hidden risk every day. Enterprises that wait typically spend more later fixing instability, compliance gaps, and broken pipelines than they would building operational discipline upfront. MLOps is not something you “add after scaling.” It is what makes scaling safe, predictable, and financially defensible in the first place.

Q. What is the ROI of MLOps for AI initiatives?

A. The ROI is compelling when MLOps is done right. Organizations with mature MLOps practices see 60-80% faster deployment cycles, which translates directly into faster time-to-market and revenue generation. They also see a 40-70% reduction in manual model maintenance tasks, freeing expensive data science talent for strategic work instead of operational firefighting. And because only 20% of ML models reach production without a proper MLOps framework, MLOps protects your entire AI investment from becoming expensive shelf-ware.

Q. Why is MLOps critical for AI scalability?

A. Here’s the harsh truth: without MLOps, your AI projects hit a wall quickly. You might succeed with one or two models in production, but scaling to dozens or hundreds is where everything falls apart. MLOps delivers a systematic framework for managing many models simultaneously: automated deployment pipelines, centralized monitoring, and standardized governance processes. It is the difference between having a few impressive AI demos and actually operating an AI-powered business. Without it, you are trying to scale handcrafted, one-off models, which simply doesn’t work at enterprise scale.
