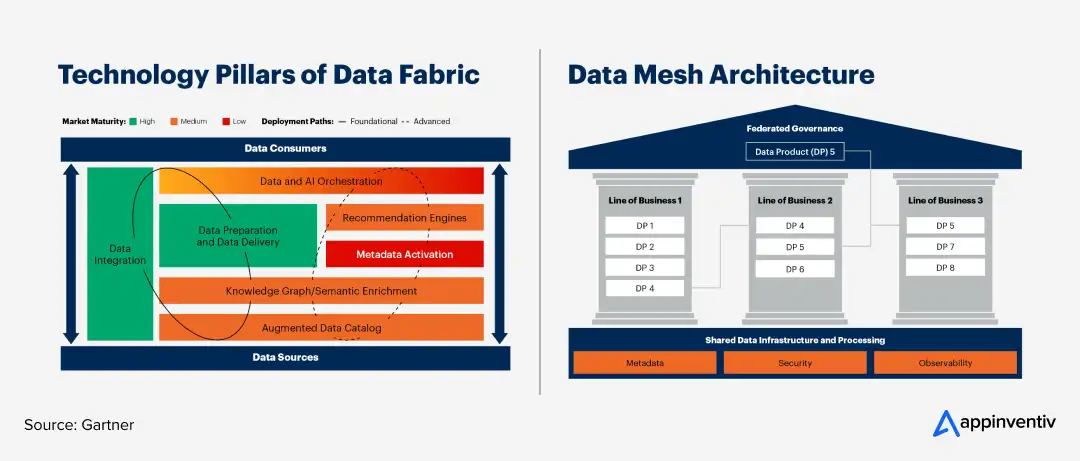

Key takeaways:

- Data Mesh supports decentralized scaling, while Data Fabric improves integration efficiency across growing business environments.

- Hybrid architectures often deliver flexibility, governance, and scalability without forcing premature enterprise-level complexity decisions.

- Early architecture choices directly influence reporting accuracy, experimentation speed, and future AI readiness across teams.

- Phased adoption reduces risk, controls costs, and allows architecture evolution alongside business growth requirements.

- Strong data foundations prevent costly redesigns, governance gaps, and technical debt as organizations scale operations.

Many growing companies notice it gradually. Reporting takes longer than before. Numbers from different dashboards do not match. Decisions that once took hours now stretch into days because someone is still reconciling data.

As your business grows, data starts coming from more places: product analytics tools, CRM platforms, marketing dashboards, and finance systems. Without clear ownership, datasets get duplicated. Insights arrive late. Gartner estimates poor data quality costs organizations an average of $12.9 million annually, largely from inefficiencies and missed opportunities.

Growth usually depends on fast iteration. Still, when data access becomes complicated, experimentation slows. Teams wait for cleaned datasets, updated dashboards, or pipeline fixes. Industry surveys frequently report that analysts spend up to 80% of their time preparing data rather than analyzing it, which directly delays product decisions.

At the same time, new markets and larger clients bring stricter reporting expectations. Consistent data lineage, auditability, and reliability become non-negotiable.

Even short outages or inconsistent reporting can affect credibility, especially when enterprise customers or investors are involved.

Then costs start creeping up. More tools. More storage. More compute. Gartner estimates that poor data management and downtime can cost thousands of dollars per minute in some environments. Tool sprawl and inefficient pipelines quietly inflate cloud bills long before teams notice.

If your team is starting to see these signs, you are not alone. This guide walks you through the data mesh vs data fabric decision, how each affects growing businesses, and how to approach it without overengineering your data stack.

Data Mesh vs Data Fabric: What They Mean For Scaling Businesses

Before you get into architecture decisions, it helps to keep the definitions practical. Both models exist because growing businesses outgrow simple centralized data setups; they just solve that problem from different angles.

Data Mesh

Data Mesh architecture is primarily an operating model. It decentralizes data ownership and treats data as a product managed by individual business domains. Your product, marketing, finance, or operations teams own their datasets, define standards, and are accountable for quality. The focus is on organizational scalability, clearer ownership, and faster experimentation as teams expand.

Treating data as a product means it is not just stored. It is curated, documented, quality-checked, and maintained with clear ownership. Teams become accountable for usability, reliability, and discoverability, much like they would for a customer-facing product.
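To make "data as a product" concrete, here is a minimal Python sketch of the metadata a domain team might attach to a dataset before publishing it. The `DataProduct` class, its fields, and the `orders_daily` example are hypothetical illustrations, not a standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor for a domain-owned data product."""
    name: str
    domain: str
    owner: str                 # accountable team or person
    description: str
    schema: dict[str, str]     # column name -> type name
    freshness_sla_hours: int   # how stale the data may get before it breaches its promise
    tags: list[str] = field(default_factory=list)

    def missing_metadata(self) -> list[str]:
        """Return the fields that still block publication to a shared catalog."""
        gaps = []
        if not self.owner:
            gaps.append("owner")
        if not self.description.strip():
            gaps.append("description")
        if not self.schema:
            gaps.append("schema")
        return gaps


orders = DataProduct(
    name="orders_daily",
    domain="commerce",
    owner="commerce-data@example.com",
    description="One row per order, refreshed daily.",
    schema={"order_id": "str", "amount": "float", "created_at": "datetime"},
    freshness_sla_hours=24,
)
print(orders.missing_metadata())  # []
```

The point is not the class itself but the habit it encodes: a dataset is not "published" until someone owns it and has documented what it contains.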

Data Fabric

Data Fabric architecture is primarily a technology-driven approach. It connects data across systems using integration layers, metadata, automation, and unified access controls. Instead of changing who owns data, it improves how data is discovered, governed, and accessed across the organization.

The principles of data fabric center on integration efficiency, consistency, and centralized visibility. In practice, this often includes metadata-driven orchestration, automated policy enforcement, lineage tracking, and centralized observability across pipelines. The objective is not just access. It is coordinated control over distributed systems as complexity grows.
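Lineage tracking is one of the easier Data Fabric ideas to illustrate. The sketch below assumes a hypothetical in-memory metadata store that records which datasets feed which, then walks that graph to answer a common governance question: if an upstream source changes, which downstream tables and dashboards are affected? Real fabric platforms persist this metadata and usually collect it automatically; the class and dataset names here are illustrative only.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Toy metadata store that records which datasets are derived from which."""

    def __init__(self) -> None:
        self._downstream: dict[str, set[str]] = defaultdict(set)

    def add_edge(self, source: str, target: str) -> None:
        """Record that `target` is derived from `source`."""
        self._downstream[source].add(target)

    def impacted_by(self, dataset: str) -> set[str]:
        """Breadth-first search for every dataset downstream of `dataset`."""
        seen: set[str] = set()
        queue = deque([dataset])
        while queue:
            current = queue.popleft()
            for child in self._downstream.get(current, ()):
                if child not in seen:
                    seen.add(child)
                    queue.append(child)
        return seen


lineage = LineageGraph()
lineage.add_edge("crm.accounts", "staging.customers")
lineage.add_edge("billing.invoices", "staging.revenue")
lineage.add_edge("staging.customers", "marts.revenue_by_segment")
lineage.add_edge("staging.revenue", "marts.revenue_by_segment")
lineage.add_edge("marts.revenue_by_segment", "dashboards.exec_kpis")

print(sorted(lineage.impacted_by("crm.accounts")))
# ['dashboards.exec_kpis', 'marts.revenue_by_segment', 'staging.customers']
```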

Data Mesh vs Data Fabric: Organizational Risk vs Integration Risk

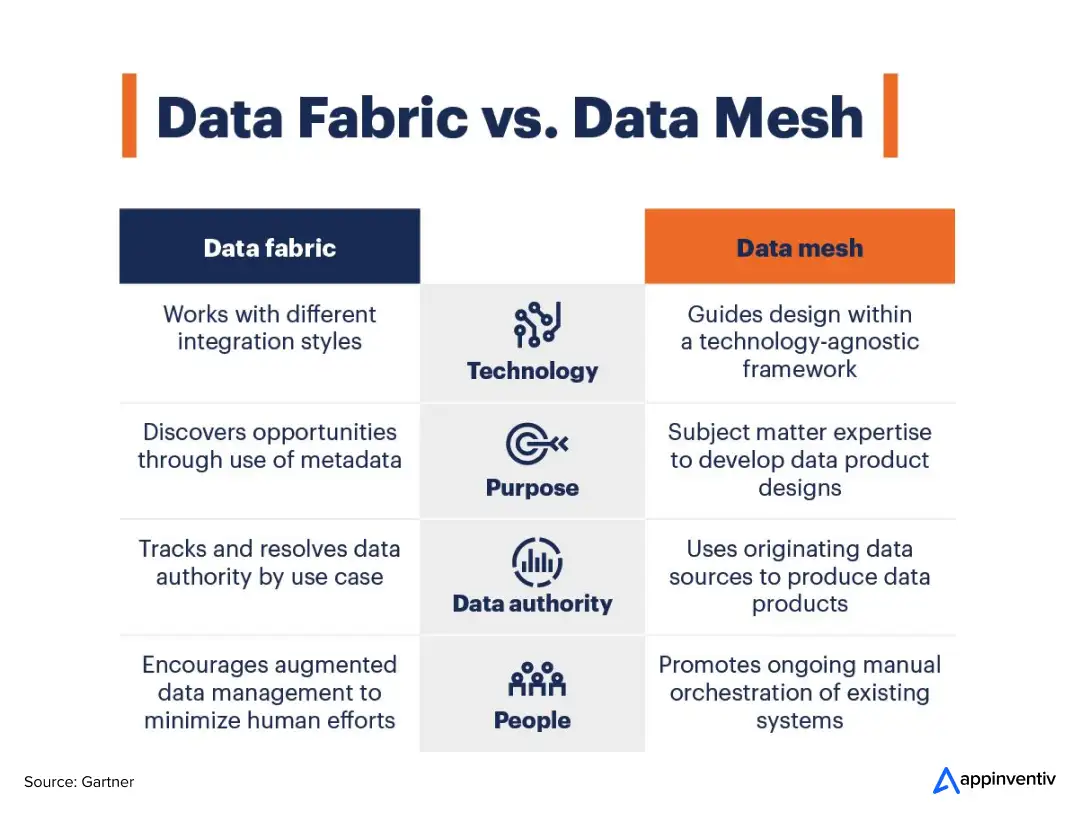

In the data fabric vs data mesh comparison, neither approach is universally better. The key difference is this:

- Data Mesh changes how your organization works.

- Data Fabric changes how your systems connect.

If your bottleneck is unclear ownership, duplicated datasets, and teams waiting on a central data group, you are facing an organizational scaling problem.

If your bottleneck is integration chaos, tool sprawl, inconsistent pipelines, and rising infrastructure costs, you are facing a technology architecture problem.

Understanding which problem you actually have prevents expensive misalignment.

- Many teams implement Fabric, expecting governance issues to disappear.

- Others attempt Mesh without preparing teams for ownership accountability.

Both scenarios create friction instead of scale.

Quick Data Mesh Vs Data Fabric Comparison Table

| Business Consideration | Data Mesh Approach | Data Fabric Approach | What Can Hurt If Misaligned |
|---|---|---|---|
| Reporting Accuracy | Depends on disciplined domain ownership | Central consistency is easier early | Leadership loses trust in metrics during growth |
| Experimentation Speed | Faster if teams own their data | Slower if integration layers become bottlenecks | Product iteration slows |
| Governance Complexity | Requires federated governance maturity | Easier centralized governance early on | Compliance risks or reporting gaps |
| Cost Trajectory | Higher coordination overhead if domains are immature | Higher tooling costs if integration is overbuilt | Cloud costs rise faster than revenue |
| Technical Debt Risk | Lower if domains maintain data quality | Higher if legacy integrations accumulate | Maintenance costs escalate |
| Data Integration | Distributed pipelines across teams | Unified integration through metadata layers | Data silos or integration bottlenecks |
| Long-Term Scalability | Strong for multi-product growth | Strong for complex integration ecosystems | Architecture redesign later |

These data fabric vs data mesh differences matter less at small scale. As data volume, teams, and products grow, the consequences become harder to ignore.

Data Mesh Use Cases And Where Data Fabric Actually Fits

Most teams do not wake up and decide to adopt data mesh solutions. Something breaks first. Reports stop matching. Experiments slow down. Integration work starts taking longer than product development. That is usually when these models enter the conversation.

Typical data mesh architecture scenarios:

- A SaaS company expanding into multiple products, where each team needs direct ownership of analytics

- Product-led businesses running constant experimentation that cannot wait for centralized data teams

- Organizations where domain expertise matters more than centralized control

- Businesses struggling with duplicated datasets and unclear ownership

In these situations, a decentralized data architecture improves speed and accountability.

Typical data fabric architecture scenarios:

- Companies integrating data across CRM, marketing, product, finance, and support platforms

- Businesses modernizing legacy systems while maintaining consistent reporting

- Organizations dealing with fragmented pipelines and tool sprawl

- Companies strengthening governance across their data systems as they move toward enterprise clients

Here, data fabric solutions provide unified integration and metadata visibility to maintain consistency.

Where hybrid approaches usually emerge:

- Growing businesses balancing experimentation speed with reporting reliability

- Teams preparing for AI adoption without overhauling the entire stack

- Organizations expanding geographically or across multiple product lines

Whichever model fits, the objective is the same: keep data usable, reliable, and adaptable as the business grows.

Once you understand the data mesh use cases and where each model fits, the next question is practical: what changes in your stack?

Benefits Of Data Mesh And Data Fabric Working Together

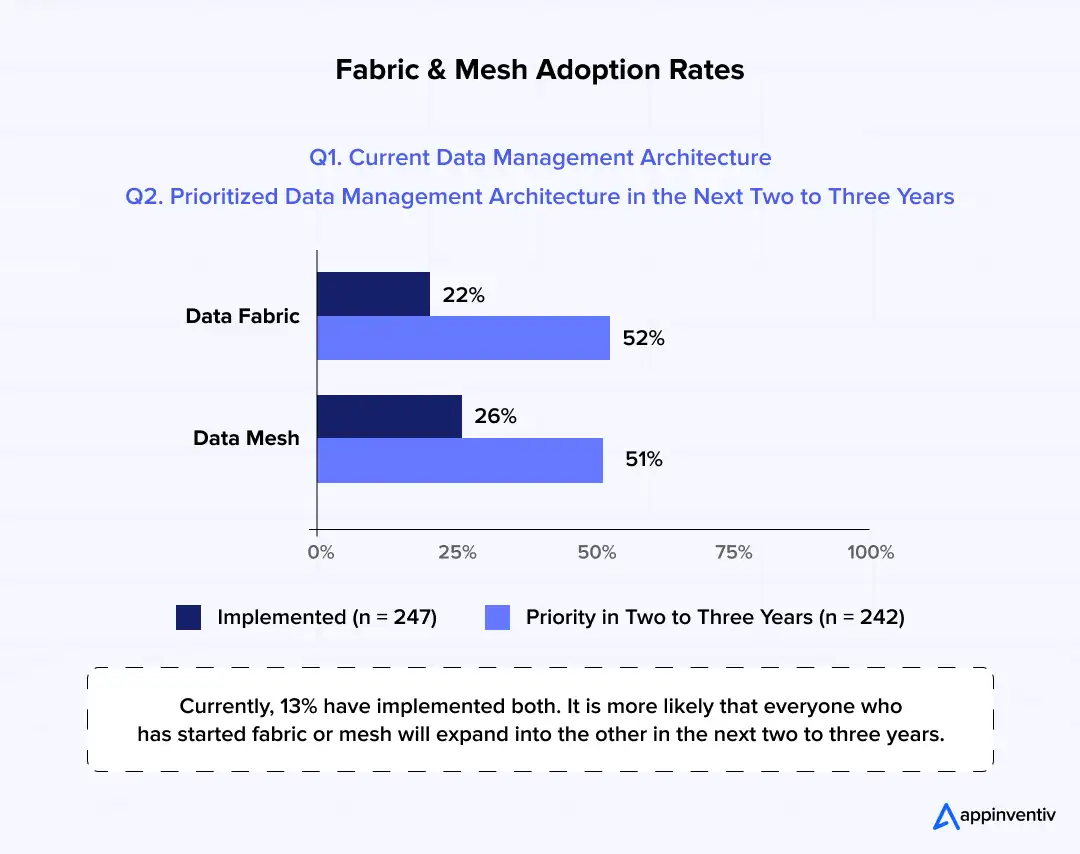

As businesses scale, the question often shifts from choosing one architecture to combining what works from each. Data Mesh and Data Fabric are not competing approaches. They solve different parts of the same scaling challenge.

Data Mesh typically strengthens ownership:

- Business domains own their data products

- Clear accountability improves reporting reliability

- Faster experimentation because teams control their datasets

- Better alignment between data and business context

Data fabric architecture focuses on integration and consistency:

- Metadata layers improve discoverability and lineage

- Automated policies help standardize governance

- Unified access across tools and pipelines

- Better visibility as data environments grow complex
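Automated policies in a fabric-style setup are often driven by metadata tags rather than hand-written rules per table. As a rough sketch, assuming hypothetical column tags and a hypothetical tag-to-role policy map, a single function can mask restricted values in any dataset that carries those tags:

```python
# Hypothetical column-level metadata: column name -> set of classification tags.
COLUMN_TAGS = {
    "customer_id": set(),
    "email": {"pii"},
    "country": set(),
    "lifetime_value": {"financial"},
}

# Hypothetical policy: which roles may see each tag unmasked.
TAG_POLICY = {
    "pii": {"support", "compliance"},
    "financial": {"finance", "compliance"},
}


def apply_policies(row: dict, role: str) -> dict:
    """Mask every value whose column carries a tag the role is not cleared for.
    Untagged or unknown columns pass through unchanged in this sketch."""
    masked = {}
    for column, value in row.items():
        tags = COLUMN_TAGS.get(column, set())
        allowed = all(role in TAG_POLICY.get(tag, set()) for tag in tags)
        masked[column] = value if allowed else "***"
    return masked


row = {"customer_id": "c-101", "email": "ana@example.com",
       "country": "DE", "lifetime_value": 1250.0}
print(apply_policies(row, "marketing"))
# {'customer_id': 'c-101', 'email': '***', 'country': 'DE', 'lifetime_value': '***'}
```

Because the policy keys off tags rather than table names, newly tagged columns are covered without code changes. A stricter setup might deny access to untagged columns by default instead.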

Together, they deliver balanced outcomes:

- Domain-level quality with centralized consistency

- Faster data analytics without losing governance

- Scalable reporting across teams and products

- Improved readiness for analytics and ML initiatives

For many growing businesses moving toward Data Mesh, hybrid patterns offer the most gradual and practical path. A centralized data warehouse can remain in place while domain teams take ownership of their transformations. This becomes especially relevant for companies planning artificial intelligence services, where both accessibility and governance consistency matter.

Combining the two is not about architectural purity. It is about building a data ecosystem that supports speed, reliability, and scalable growth.

How Data Mesh vs Data Fabric Choices Affect Reporting, Experimentation, And Early AI Readiness

Your choice of data architecture shapes everything from reporting accuracy to ML pipeline stability, and with it your AI readiness. Many teams only realize this once interest in AI pushes them to examine their data architecture.

It usually begins with reporting issues. Numbers do not line up. Experiments take longer. Basic machine learning projects stall because data preparation eats up most of the time. The architecture underneath your data quietly shapes it all.

Improving Reporting Accuracy And Data Consistency

When data ownership is unclear or pipelines are fragmented, reporting accuracy suffers first. Different dashboards show slightly different numbers. Teams lose confidence in analytics, and decision cycles slow down.

A structured data architecture helps standardize definitions, improve lineage visibility, and reduce reconciliation effort. This becomes especially important as reporting moves beyond internal dashboards to investor updates, enterprise clients, or regulatory contexts.
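Reducing reconciliation effort starts with detecting mismatches automatically. A minimal sketch, assuming you can pull the same metric from several systems and designate one as the reference (here a hypothetical finance ledger), might look like this; the source names and the 0.5% tolerance are arbitrary example values:

```python
def reconcile(metric: str, values: dict[str, float],
              reference: str, tolerance_pct: float = 0.5) -> list[str]:
    """Return a message for each source that deviates from the reference
    source by more than `tolerance_pct` percent."""
    baseline = values[reference]
    issues = []
    for source, value in values.items():
        if source == reference:
            continue
        if baseline == 0:
            # Avoid dividing by zero: any non-zero value counts as a full mismatch.
            deviation = 0.0 if value == 0 else float("inf")
        else:
            deviation = abs(value - baseline) / abs(baseline) * 100
        if deviation > tolerance_pct:
            issues.append(f"{metric}: {source}={value} deviates {deviation:.1f}% "
                          f"from {reference}={baseline}")
    return issues


monthly_revenue = {"finance_ledger": 120_000.0, "bi_dashboard": 120_300.0, "crm_report": 114_000.0}
for issue in reconcile("monthly_revenue", monthly_revenue, reference="finance_ledger"):
    print(issue)
# monthly_revenue: crm_report=114000.0 deviates 5.0% from finance_ledger=120000.0
```

Run on a schedule, a check like this surfaces disagreements before they reach a leadership meeting rather than during one.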

Enabling Faster Experimentation And Product Iteration

Product and growth teams depend on quick access to reliable data. If every experiment requires pipeline adjustments or manual cleanup, iteration slows.

A decentralized data architecture can speed experimentation, while unified integration can reduce access friction. The key is removing unnecessary data gatekeeping while maintaining enough governance to keep metrics consistent.

Supporting Foundational ML Pipelines Without Overengineering

Early AI or ML initiatives rarely fail because of algorithms. They usually stall due to inconsistent or poorly prepared data. Even basic predictive models require stable pipelines, clean datasets, and reproducible workflows.

You do not need enterprise-scale AI infrastructure immediately. What matters is having data structured well enough that simple ML pipelines can work without constant manual intervention.

Industry research suggests that organizations investing in AI often see measurable ROI, but typically only after data accessibility and quality have been addressed.

Reducing Data Preparation Time And Operational Friction

Data preparation still consumes much of the analytics effort. Multiple studies suggest that analysts spend 70–80% of their time preparing data rather than analyzing it. That directly impacts reporting speed, experimentation cycles, and ML readiness.

Better architecture reduces redundant transformations, improves discoverability, and shortens the path from raw data to usable insights. For growing businesses, that often translates into faster decisions and more efficient use of analytics resources.

Data quality also directly affects AI initiatives. Even simple predictive models depend more on consistent pipelines than sophisticated algorithms. Many early AI failures trace back to fragmented data, not model performance. Strengthening your data architecture for AI often improves readiness without additional AI investment.

Choosing The Right Approach Based On Your Growth Phase

Most businesses do not redesign their data architecture because they want to. It usually happens when growth starts exposing friction. Reporting slows down. Teams cannot find consistent numbers. Product experiments take longer because data access is messy. The right data mesh vs data fabric choice depends less on theory and more on how your business is evolving.

Early Scale Phase

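This phase moves fast, with new customers, features, and data sources arriving constantly. Governance usually lags behind growth, and that is normal. Startups that experiment with data mesh ideas often begin here, usually with lightweight domain ownership rather than a full operating model.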

Focus areas typically include:

- Fast access to product and customer data

- Lightweight governance, not heavy processes

- Supporting experimentation without complex pipelines

- Avoiding tool sprawl early

At this stage, flexibility usually matters more than architectural perfection.

Growth Acceleration Phase

Now things start getting serious. Teams expand, reporting demands increase, and leadership wants clearer visibility into performance and costs.

Common priorities:

- Consistent reporting across teams

- Better data integration between tools

- Cost visibility for data infrastructure

- Balancing centralized efficiency with team autonomy

This is often where businesses begin blending centralized integration with domain ownership.

Multi-Product Or Multi-Market Expansion Phase

Growth becomes more layered here. Multiple products, regions, or business units introduce complexity that informal data practices cannot handle.

What usually matters most:

- Clear data ownership across business units

- Reliable reporting for partners, investors, or enterprise clients

- Governance without slowing innovation

- Scalable architecture that supports new markets

Many organizations adopt hybrid approaches at this stage, combining elements of Data Mesh and Data Fabric to keep both flexibility and control.

Architecture decisions often fail because of readiness, not technology. Data mesh architecture requires teams willing to own data quality and documentation. Data Fabric requires operational discipline around integration, metadata, and governance tooling. If teams are not prepared for that shift, even well-designed architecture struggles to deliver value.

Quick Snapshot Table

| Growth Phase | Priority | Typical Direction |
|---|---|---|
| Early Scale | Speed, experimentation | Flexible data access |
| Growth Acceleration | Reporting, integration | Balanced approach |
| Multi-Product Expansion | Governance, scalability | Hybrid architecture |

Technology Foundations Behind Data Mesh And Data Fabric

Data fabric vs data mesh discussions often stay conceptual. Still, technology choices eventually shape how these models work in practice. That does not mean replacing your entire stack or attempting a massive rebuild; most businesses evolve their stack incrementally.

Technology patterns supporting data mesh architecture:

- Domain-oriented data pipelines owned by business teams

- Data catalogs for discoverability and documentation

- Transformation frameworks for version-controlled data logic

- Data contracts to maintain schema consistency

- Observability tools to monitor quality and pipeline health

These technologies support data mesh architecture by enabling ownership, accountability, and faster experimentation without central bottlenecks.

Technology patterns supporting data fabric architecture:

- Metadata management platforms for lineage and governance

- Integration and orchestration layers connecting distributed systems

- Policy enforcement tools for access control and compliance

- Data virtualization or unified query layers

- Centralized monitoring and cost visibility tools

This approach focuses on consistency, integration efficiency, and unified access across complex environments.
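Data virtualization can be hard to picture. The toy sketch below, with a hypothetical `UnifiedQueryLayer` class and in-memory lists standing in for real systems, shows the core idea: data stays in its source systems, and a single query interface fans out at read time and tags each result with its origin. Production virtualization engines add query pushdown, joins, caching, and access control on top of this.

```python
from typing import Callable, Iterable

Row = dict[str, object]


class UnifiedQueryLayer:
    """Toy virtualization layer: sources stay where they are; queries fan out at read time."""

    def __init__(self) -> None:
        self._sources: dict[str, Callable[[], Iterable[Row]]] = {}

    def register(self, name: str, reader: Callable[[], Iterable[Row]]) -> None:
        """Register a reader function for a source system (no data is copied)."""
        self._sources[name] = reader

    def query(self, predicate: Callable[[Row], bool]) -> list[Row]:
        """Read every source, keep matching rows, and label each with its origin."""
        results = []
        for name, reader in self._sources.items():
            for row in reader():
                if predicate(row):
                    results.append({**row, "_source": name})
        return results


crm_rows = [{"account": "acme", "plan": "enterprise"}, {"account": "globex", "plan": "starter"}]
support_rows = [{"account": "acme", "open_tickets": 3}]

layer = UnifiedQueryLayer()
layer.register("crm", lambda: crm_rows)
layer.register("support", lambda: support_rows)
print(layer.query(lambda r: r.get("account") == "acme"))
# [{'account': 'acme', 'plan': 'enterprise', '_source': 'crm'}, {'account': 'acme', 'open_tickets': 3, '_source': 'support'}]
```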

Decentralized ownership and centralized integration are not mutually exclusive: ownership can shift to domains while integration layers remain centralized. The objective is steady evolution, not architectural perfection.

How Emerging Businesses Should Approach Data Architecture Without Over-Engineering

As your data grows, the instinct is often to “upgrade everything.” New warehouse. New orchestration tool. New governance framework. In practice, that usually creates more complexity than value. The goal at this stage is not architectural perfection. It is building an AI-ready data architecture that scales with your product and reporting needs without locking you into heavy infrastructure too early.

Avoiding Premature Enterprise-Grade Complexity

Enterprise-level patterns such as federated governance councils, distributed data product marketplaces, or multi-region lakehouse deployments sound impressive. They also introduce coordination overhead, tooling costs, and operational friction.

Instead, focus on:

- A single reliable data warehouse or lakehouse as the primary analytical source

- Clear ownership of datasets at the domain level, even if the pipelines remain centralized

- Version-controlled transformation logic using tools such as dbt or similar frameworks

- Basic observability, including schema change alerts and failed pipeline monitoring

You do not need a full data mesh architecture implementation to define domain ownership. Start with naming standards, documented data contracts, and ownership accountability inside existing infrastructure.
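A documented data contract can start as something this small. The sketch below assumes a hypothetical contract format (column names mapped to expected Python types) and checks a batch for missing columns, unexpected columns that may signal schema drift, and type mismatches. Dedicated contract and validation tools offer far more; this only shows the shape of the check.

```python
from dataclasses import dataclass


@dataclass
class DataContract:
    """Hypothetical contract agreed between a producing and a consuming team."""
    dataset: str
    owner: str
    columns: dict[str, type]  # column name -> expected Python type


def validate(contract: DataContract, rows: list[dict]) -> list[str]:
    """Return human-readable violations; an empty list means the batch honors the contract."""
    violations = []
    expected = set(contract.columns)
    for i, row in enumerate(rows):
        present = set(row)
        for col in sorted(expected - present):
            violations.append(f"row {i}: missing column '{col}'")
        for col in sorted(present - expected):
            violations.append(f"row {i}: unexpected column '{col}' (possible schema drift)")
        for col in sorted(expected & present):
            if not isinstance(row[col], contract.columns[col]):
                violations.append(f"row {i}: '{col}' is {type(row[col]).__name__}, "
                                  f"expected {contract.columns[col].__name__}")
    return violations


signups = DataContract("signups", "growth-team", {"user_id": str, "plan": str, "mrr": float})
batch = [
    {"user_id": "u1", "plan": "pro", "mrr": 49.0},
    {"user_id": "u2", "plan": "pro", "mrr": "49", "referrer": "ads"},
]
for violation in validate(signups, batch):
    print(violation)
# row 1: unexpected column 'referrer' (possible schema drift)
# row 1: 'mrr' is str, expected float
```

Keeping the contract in the same repository as the producing pipeline makes schema changes visible in code review, which is where most of the accountability comes from.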

Managing Tool Sprawl And Integration Complexity

Tool sprawl becomes expensive quickly. One ETL tool for marketing. Another ingestion framework for product data. Separate BI tools across teams. Over time, this creates duplicate pipelines and inconsistent metric definitions.

Research shows organizations often run hundreds of applications but integrate only a fraction effectively. Poor integration increases maintenance overhead and slows reporting cycles.

To control this:

- Consolidate ingestion where possible

- Standardize transformation layers

- Define a canonical metrics layer for core KPIs

- Use metadata management to track lineage and dependencies
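A canonical metrics layer means each core KPI is defined once and every dashboard calls that definition. The sketch below is a minimal, hypothetical version in plain Python; the metric definitions (what counts as an active user or as net revenue) are assumptions for illustration, and in practice this logic often lives in a semantic layer or in version-controlled SQL models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Metric:
    name: str
    description: str
    compute: Callable[[list[dict]], float]


def _active_users(events: list[dict]) -> float:
    # Assumed definition: distinct users with at least one 'session' event.
    return float(len({e["user_id"] for e in events if e["type"] == "session"}))


def _net_revenue(events: list[dict]) -> float:
    # Assumed definition: sum of 'payment' amounts minus 'refund' amounts.
    total = 0.0
    for e in events:
        if e["type"] == "payment":
            total += e["amount"]
        elif e["type"] == "refund":
            total -= e["amount"]
    return total


# The single registry every dashboard and report reads from.
METRICS = {m.name: m for m in (
    Metric("active_users", "Distinct users with a session", _active_users),
    Metric("net_revenue", "Payments minus refunds", _net_revenue),
)}


def get_metric(name: str, events: list[dict]) -> float:
    """Dashboards call this instead of re-implementing the formula."""
    return METRICS[name].compute(events)


events = [
    {"user_id": "u1", "type": "session"},
    {"user_id": "u1", "type": "session"},
    {"user_id": "u2", "type": "session"},
    {"user_id": "u2", "type": "payment", "amount": 100.0},
    {"user_id": "u3", "type": "refund", "amount": 20.0},
]
print(get_metric("active_users", events))  # 2.0
print(get_metric("net_revenue", events))   # 80.0
```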

If data fabric architecture is being considered, its integration layer should reduce duplication, not add another abstraction without clear consolidation benefits.

Building Scalable Data Foundations Without Large Data Teams

You do not need a 20-person data platform team to improve architecture.

Practical steps include:

- Establishing data contracts between producing and consuming teams

- Implementing automated data quality checks for null rates, freshness, and schema drift

- Designing a modular data pipeline architecture that separates ingestion, transformation, and serving layers

- Maintaining clear documentation in a shared repository

Modularity prevents tightly coupled systems that are expensive to refactor later. It also makes the gradual adoption of either Data Mesh or Data Fabric patterns easier.
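To show what separating layers looks like, here is a minimal Python sketch of a modular pipeline with a quality gate between transformation and serving. It checks null rate and freshness, two of the checks listed above. The function names, sample rows, and thresholds are hypothetical; a real pipeline would read from actual sources and typically run under an orchestrator.

```python
from datetime import datetime, timedelta, timezone


def ingest() -> list[dict]:
    """Ingestion layer. A real job would read from an API, file, or database;
    this sketch returns fixed sample rows (one with a missing amount)."""
    now = datetime.now(timezone.utc)
    return [
        {"order_id": "o1", "amount": 40.0, "loaded_at": now},
        {"order_id": "o2", "amount": None, "loaded_at": now},
        {"order_id": "o3", "amount": 60.0, "loaded_at": now - timedelta(hours=2)},
    ]


def transform(rows: list[dict]) -> list[dict]:
    """Transformation layer: business logic only, no I/O."""
    return [
        {**r, "amount_cents": None if r["amount"] is None else round(r["amount"] * 100)}
        for r in rows
    ]


def check_quality(rows: list[dict], column: str,
                  max_null_rate: float, max_age: timedelta) -> list[str]:
    """Quality gate between layers: null-rate and freshness checks."""
    if not rows:
        return ["no rows to check"]
    failures = []
    null_rate = sum(r[column] is None for r in rows) / len(rows)
    if null_rate > max_null_rate:
        failures.append(f"null rate for '{column}' is {null_rate:.0%} (limit {max_null_rate:.0%})")
    newest = max(r["loaded_at"] for r in rows)
    if datetime.now(timezone.utc) - newest > max_age:
        failures.append(f"data is stale: newest row loaded at {newest.isoformat()}")
    return failures


def serve(rows: list[dict]) -> dict:
    """Serving layer: the shape downstream consumers read."""
    return {"orders": len(rows), "revenue_cents": sum(r["amount_cents"] or 0 for r in rows)}


rows = transform(ingest())
problems = check_quality(rows, "amount", max_null_rate=0.05, max_age=timedelta(hours=24))
if problems:
    print("Blocked before serving:", problems)
else:
    print(serve(rows))
```

Because each stage is a separate function, you could later hand transformation ownership to a domain team, or swap the ingestion step for a shared integration layer, without rewriting the others.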

Governance Setup That Supports Growth Without Bureaucracy

For emerging businesses, the most practical governance strategy is to focus on clarity, not committees.

Start with:

- Defined ownership per dataset

- Role-based access control tied to business functions

- Central definitions for revenue, active users, churn, and other core metrics

- Lightweight audit logging for sensitive data
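Role-based access and lightweight audit logging can begin as a few lines of logic before you adopt a dedicated access-management tool. This sketch assumes hypothetical role names, dataset names, and an in-memory audit log; in production the grants would live in your warehouse or identity provider and the log in durable storage.

```python
from datetime import datetime, timezone

# Hypothetical mapping of business functions to the datasets they may read.
ROLE_GRANTS = {
    "finance": {"revenue", "invoices"},
    "marketing": {"campaigns", "web_sessions"},
    "support": {"tickets", "customers"},
}
SENSITIVE = {"invoices", "customers"}  # datasets whose access attempts are always logged

audit_log: list[dict] = []


def can_read(user: str, role: str, dataset: str) -> bool:
    """Check a role-based grant and record an audit entry for sensitive datasets."""
    allowed = dataset in ROLE_GRANTS.get(role, set())
    if dataset in SENSITIVE:
        audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user, "role": role, "dataset": dataset, "allowed": allowed,
        })
    return allowed


print(can_read("maya", "finance", "invoices"))    # True
print(can_read("leo", "marketing", "customers"))  # False
print(len(audit_log))                              # 2
```

Logging denied attempts as well as granted ones is deliberate: both are useful evidence when enterprise clients ask how sensitive data is controlled.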

Around 40 percent of IT budgets in many organizations are spent maintaining legacy systems and technical debt. Weak governance accelerates that problem because undocumented pipelines and inconsistent metrics become harder to maintain over time.

Governance should reduce rework and reporting disputes, not slow delivery.

At growing companies, governance problems usually appear before architecture problems. Conflicting KPI definitions, unclear ownership, and inconsistent reporting often create more friction than infrastructure limits. Settling these governance basics early makes adopting either Data Mesh or Data Fabric smoother, because ownership, standards, and expectations are already clear.

Continuous Optimization As Business Needs Evolve

Architecture decisions should be revisited periodically. As product lines expand or new markets are added, integration patterns may need to evolve.

Continuous optimization includes:

- Reviewing pipeline performance and cost usage monthly

- Decommissioning unused datasets and redundant tools

- Refactoring brittle pipelines before they accumulate debt

- Evaluating whether domain-level ownership needs to increase as teams scale
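Part of a monthly review can be automated. Assuming you can export per-dataset monthly cost and the date each dataset was last queried (the export format, dataset names, and figures below are hypothetical), a short script can rank idle datasets as decommissioning candidates:

```python
from datetime import date

# Hypothetical usage export: dataset -> (monthly cost in USD, date last queried)
usage = {
    "marts.revenue_by_segment": (420.0, date(2024, 5, 30)),
    "staging.legacy_leads": (310.0, date(2023, 11, 2)),
    "marts.campaign_roi": (95.0, date(2024, 5, 28)),
    "tmp.backfill_2023": (180.0, date(2024, 1, 15)),
}


def decommission_candidates(usage: dict[str, tuple[float, date]], today: date,
                            idle_days: int = 90) -> list[tuple[str, float]]:
    """Datasets not queried within `idle_days`, most expensive first."""
    idle = [(name, cost) for name, (cost, last_queried) in usage.items()
            if (today - last_queried).days > idle_days]
    return sorted(idle, key=lambda item: item[1], reverse=True)


candidates = decommission_candidates(usage, today=date(2024, 6, 1))
print(candidates)  # [('staging.legacy_leads', 310.0), ('tmp.backfill_2023', 180.0)]
print("Potential monthly savings:", sum(cost for _, cost in candidates))  # 490.0
```

The output is a review list, not an automatic deletion; an idle dataset may still back a quarterly report, so the owning team should confirm before anything is removed.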

Technical debt is not just old code. It also includes outdated assumptions embedded in pipelines and integrations. Regular architectural reviews prevent small inefficiencies from compounding into large maintenance costs.

The objective is steady evolution. Not a massive rewrite. Not a full architectural reset. Just deliberate, controlled progress that keeps your data stack aligned with growth.

Phased Adoption And Low-Regret Decisions In Practice

Most architecture failures do not happen because the model was wrong. They happen because the rollout was too big, too fast. If you are scaling, the smarter move is usually phased adoption. Start where friction is highest. Expand only when the business case is clear.

Starting Small With High-Impact Data Workflows

Instead of redesigning your entire data ecosystem, begin with the workflows that hurt the most.

That usually means:

- Revenue reporting that requires manual reconciliation

- Product analytics pipelines that delay experimentation

- Customer data used across marketing, product, and support

Improve one critical domain first. Define ownership. Standardize transformations. Add data quality checks. If you are leaning toward data mesh implementation, pilot domain-level ownership within a single function. If you are leaning toward Data Fabric, introduce a unified integration layer for one high-value reporting stream.

The goal is measurable improvement, not architectural completeness.

Partial Adoption Patterns

Full transformation is rarely necessary in the early stages, and attempting it too soon is one of the most common data mesh challenges. Partial adoption is often more effective.

Typical patterns include:

- Domain pilots, a common first step toward Data Mesh, where one team operates with data product ownership while infrastructure remains centralized

- A centralized cloud data warehouse with federated governance overlays

- Introducing metadata management and lineage tracking before reorganizing ownership

- Hybrid models where ingestion and integration are centralized, but transformation ownership is distributed

This reduces risk and shows how Data Mesh can support scaling in practice, helping teams understand the operational implications before expanding the model.

Investments That Deliver Value Regardless Of Final Architecture

Some improvements are ‘low regret’ because they support both sides of the data fabric vs data mesh equation.

Examples include:

- Version-controlled transformation logic

- Automated data quality monitoring

- Clearly defined metric layers

- Role-based access control

- Cost monitoring and pipeline observability

As noted earlier, many organizations already spend a significant share of their IT budgets maintaining legacy systems. Foundational improvements like these reduce future maintenance overhead whichever architecture you eventually choose.

Signals That Indicate When Deeper Transformation Is Needed

Not every growth phase requires architectural redesign. Still, certain signals indicate that incremental adjustments are no longer enough.

Watch for:

- Persistent reporting inconsistencies across business units

- Repeated pipeline rewrites to support new products

- Executive decisions delayed because leadership questions data reliability

- Analysts spending most of their time fixing data instead of analyzing it

- Enterprise clients demanding stricter lineage and governance evidence

When these patterns become systemic rather than occasional, a deeper transformation may be justified. Until then, phased improvements often deliver faster results with less risk.

The objective is not to commit to a label early. It is to build momentum with practical steps that improve speed, clarity, and scalability over time.

If your team is already questioning data reliability, reporting speed, or infrastructure costs, it may be time to reassess your architecture approach.

The Future Of Scalable Data Architectures

Data architecture is evolving rapidly. Data Mesh and Data Fabric are no longer static frameworks. They are adapting alongside AI adoption, product-led growth, and increasingly distributed data ecosystems. Most emerging businesses will see gradual convergence rather than rigid architectural choices.

AI-driven data management is accelerating:

- AI-driven metadata management is improving discovery and governance

- Automated trust signals help identify data quality issues early

- AI model performance increasingly tied to structured data foundations

- AI-driven discovery reduces manual data preparation effort

Product-led data ecosystems are expanding:

- Data product operating models are becoming more common

- Internal data product marketplaces are improving accessibility

- Data supply chains supporting faster analytics delivery

- Centralized delivery of critical data assets improves consistency

Technology convergence trends:

- Data virtualization enables unified access across distributed systems

- Knowledge graphs are providing context, lineage, and semantic connections

- Augmented data catalogs enhancing discoverability and governance

- Policy violation detection is becoming more automated through metadata intelligence

Most organizations will not fully replace one architecture with another. Instead, they will gradually blend decentralized ownership with intelligent integration layers. The focus is shifting from architecture labels to data reliability, accessibility, and AI readiness as businesses scale.

Why Emerging Growth Businesses Choose Appinventiv For Data Architecture Modernization

As your business scales, data issues stop being minor inconveniences. Reporting delays affect leadership decisions. Fragile pipelines slow experimentation. AI pilots stall because ingestion and transformation layers are inconsistent. That is typically when you need data mesh solutions and structured modernization, not just another tool.

Appinventiv brings over 10 years of experience, with 1000+ enterprise-grade solutions delivered and 500+ enterprise processes digitized across 35+ industries. A team of 1600+ tech evangelists, supported by 5+ international offices, works with growth-focused businesses to design scalable data foundations.

Modernization efforts focus on measurable outcomes. Clients have achieved up to 10x data throughput improvements, 30% operational cost savings, and maintained a 95% SLA compliance rate across critical systems. The emphasis stays on building an AI-ready data architecture, strengthening governance through AI governance consulting services, and aligning architecture decisions with product velocity.

Whether implementing data mesh solutions, unified Data Fabric architectures, or hybrid models, the objective remains consistent. Strengthen reporting reliability, accelerate experimentation, and reduce long-term technical debt so your data systems scale alongside your business. The right architecture should support growth quietly in the background, not become a constant redesign project.

FAQs

Q. What Is The Difference Between Data Fabric And Data Mesh?

A. Data Mesh decentralizes data ownership. Each business domain treats its data as a product with shared governance standards. Data Fabric focuses on unified integration, using metadata, automation, and connectivity layers to make distributed data accessible consistently. Mesh prioritizes organizational scalability, while Fabric prioritizes integration efficiency and centralized data accessibility across systems.

Q. How Can Data Mesh Scale With Business Growth?

A. Data mesh architecture scales by distributing data ownership across business domains. As teams grow or products expand, each domain manages its own datasets, pipelines, and quality standards. This reduces central bottlenecks, speeds experimentation, and supports faster analytics. Strong governance practices are still required to maintain consistency as data complexity increases.

Q. Which Architecture, Data Mesh Or Data Fabric, Is Best For Scaling Businesses?

A. There is no universal best choice. Data Mesh often fits organizations scaling across multiple teams or products, requiring domain ownership. Data Fabric works well when integration complexity or legacy systems dominate. Many growing businesses adopt hybrid approaches, combining decentralized ownership with unified integration layers to balance flexibility, governance, and operational efficiency.

Q. How Should Businesses Decide on Data Mesh vs Data Fabric?

A. When evaluating data fabric vs data mesh, start with your growth context. If experimentation speed and domain autonomy are priorities, Mesh may fit better. If integration consistency, governance, or legacy system connectivity matter more, Fabric can help. Evaluate team maturity, reporting complexity, and data infrastructure constraints before committing. Phased adoption usually reduces risk.

Q. How Does Appinventiv Help Implement Data Mesh?

A. Appinventiv supports data mesh architecture adoption through architecture assessment, domain ownership modeling, pipeline restructuring, and governance design. The focus stays on practical rollout: pilot domains first, lightweight governance, and scalable infrastructure. Implementation typically includes data contracts, observability layers, and integration frameworks that allow decentralized ownership without losing reporting consistency.
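
As a generic illustration of what a data contract can look like (not Appinventiv's implementation or any particular contract tool), the sketch below checks incoming records against the fields and types a producing domain has promised. `DataContract` and `violations` are hypothetical names chosen for this example; production setups often express contracts in a schema format and run such checks at ingestion or in CI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract a producing domain publishes for its data product."""
    product: str
    required_fields: dict[str, type]  # field name -> expected Python type

    def violations(self, record: dict) -> list[str]:
        """Return human-readable problems; an empty list means the record conforms."""
        problems = []
        for name, expected in self.required_fields.items():
            if name not in record:
                problems.append(f"missing field '{name}'")
            elif not isinstance(record[name], expected):
                problems.append(
                    f"field '{name}' expected {expected.__name__}, "
                    f"got {type(record[name]).__name__}"
                )
        return problems


contract = DataContract(
    product="orders.daily",
    required_fields={"order_id": int, "amount": float, "currency": str},
)

good = {"order_id": 7, "amount": 19.99, "currency": "USD"}
bad = {"order_id": "7", "amount": 19.99}  # wrong type for order_id, currency missing

print(contract.violations(good))
print(contract.violations(bad))
```

Counting these violations per batch is one simple way to feed an observability layer, since a rising count signals that a producer has drifted from its contract.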

Q. What Are The Benefits Of Data Fabric For Business Scalability?

A. Data fabric architecture improves scalability by unifying data access across systems through integration layers, metadata management, and automation. This reduces reporting inconsistencies, simplifies governance, and speeds analytics access. It is especially useful where multiple tools, legacy systems, or distributed data environments exist, helping maintain consistency as business operations expand.
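
The core idea of a unified access layer can be sketched in a few lines of Python, assuming each underlying system is wrapped by a simple reader function. `FabricCatalog`, the connector functions, and the tags are hypothetical; real data fabric platforms add metadata harvesting, lineage, access policies, and query optimization on top of this basic routing step.

```python
from typing import Callable, Iterable

Reader = Callable[[], Iterable[dict]]


class FabricCatalog:
    """Hypothetical unified access layer: consumers ask for a logical dataset name,
    and the catalog resolves it to whichever underlying system holds the data."""

    def __init__(self) -> None:
        self._entries: dict[str, dict] = {}

    def register(self, name: str, system: str, reader: Reader, tags: set[str]) -> None:
        """Record where a logical dataset lives plus descriptive metadata."""
        self._entries[name] = {"system": system, "reader": reader, "tags": set(tags)}

    def describe(self, name: str) -> dict:
        """Return metadata only, without touching the underlying system."""
        entry = self._entries[name]
        return {"name": name, "system": entry["system"], "tags": sorted(entry["tags"])}

    def read(self, name: str) -> list[dict]:
        """Fetch rows through the registered connector; callers never see which one."""
        return list(self._entries[name]["reader"]())


# Stand-in connectors; real ones would query a warehouse, a legacy CRM, an API, etc.
def warehouse_orders() -> Iterable[dict]:
    yield {"order_id": 1, "customer_id": "c1", "amount": 40.0}


def legacy_crm_customers() -> Iterable[dict]:
    yield {"customer_id": "c1", "region": "EU"}


catalog = FabricCatalog()
catalog.register("orders", "warehouse", warehouse_orders, {"finance", "sales"})
catalog.register("customers", "legacy_crm", legacy_crm_customers, {"sales", "pii"})

# A cross-system report built only from logical names.
regions = {c["customer_id"]: c["region"] for c in catalog.read("customers")}
enriched = [{**o, "region": regions.get(o["customer_id"])} for o in catalog.read("orders")]

print(catalog.describe("customers"))
print(enriched)
```

Because consumers depend on logical names rather than connection details, a legacy system can later be replaced by re-registering the dataset with a new reader, without changing downstream reports.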

Q. Can Data Mesh And Data Fabric Be Combined For Scalability?

A. Yes, many organizations combine both. Data Mesh can define domain ownership and data product accountability, while Data Fabric provides unified integration, metadata management, and accessibility. This hybrid approach supports faster experimentation, consistent governance, and scalable analytics. It is increasingly common as businesses grow across products, teams, and markets.
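
A hybrid setup can be pictured as domains publishing products with a named owner (the Mesh side) into one shared catalog that every consumer searches (the Fabric side). The minimal sketch below assumes an in-memory registry; `SharedCatalog`, `ProductEntry`, and the rule that ownership cannot silently change are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProductEntry:
    name: str
    owner_domain: str   # Mesh side: the domain accountable for this product
    source_system: str  # Fabric side: where the shared layer reads it from
    tags: set[str] = field(default_factory=set)


class SharedCatalog:
    """Hypothetical hybrid registry: domains publish, everyone discovers through one layer."""

    def __init__(self) -> None:
        self._products: dict[str, ProductEntry] = {}

    def publish(self, entry: ProductEntry) -> None:
        """Let a domain publish or update its product; ownership cannot silently change."""
        if not entry.owner_domain:
            raise ValueError(f"{entry.name}: every data product needs an owning domain")
        current = self._products.get(entry.name)
        if current is not None and current.owner_domain != entry.owner_domain:
            raise ValueError(f"{entry.name} is already owned by {current.owner_domain}")
        self._products[entry.name] = entry

    def find(self, tag: str) -> list[tuple[str, str]]:
        """Return (product, owner) pairs so consumers know whom to ask about a dataset."""
        return sorted((p.name, p.owner_domain) for p in self._products.values() if tag in p.tags)


catalog = SharedCatalog()
catalog.publish(ProductEntry("orders.daily", "checkout", "warehouse", {"revenue"}))
catalog.publish(ProductEntry("refunds.daily", "payments", "payments_db", {"revenue"}))
catalog.publish(ProductEntry("signups.daily", "growth", "event_stream", {"funnel"}))

print(catalog.find("revenue"))

conflict_message = None
try:
    # Another domain tries to take over an existing product.
    catalog.publish(ProductEntry("orders.daily", "growth", "warehouse"))
except ValueError as err:
    conflict_message = str(err)
print(conflict_message)
```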

Q. What Are The Core Conceptual Differences Between Data Mesh And Data Fabric Approaches?

A. Data mesh focuses on decentralized data ownership through domain-oriented design, where each data product meets clear data product requirements under federated governance. Data fabric connects the original data sources through a unified integration layer. Both rely on data asset inventories, self-serve design, and strong data privacy practices to keep data ecosystems accessible and governed.
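
Federated governance means policies are defined once and applied to every domain's products, while domains keep control of the data itself. The sketch below assumes products are described by simple dictionaries and encodes two example policies, an owner requirement and PII masking; the policy functions and metadata fields are hypothetical.

```python
from typing import Callable

# Hypothetical product metadata: {"name", "owner_domain", "columns": {col: {"pii", "masked"}}}
Product = dict
Policy = Callable[[Product], list[str]]


def has_owner(product: Product) -> list[str]:
    """Global rule: every data product must name an accountable domain."""
    return [] if product.get("owner_domain") else ["no owning domain"]


def pii_is_masked(product: Product) -> list[str]:
    """Global privacy rule: columns flagged as PII must be masked before sharing."""
    return [
        f"PII column '{col}' is not masked"
        for col, meta in product.get("columns", {}).items()
        if meta.get("pii") and not meta.get("masked")
    ]


GLOBAL_POLICIES: list[Policy] = [has_owner, pii_is_masked]


def audit(products: list[Product]) -> dict[str, list[str]]:
    """Apply every global policy to every domain's product; return only failing products."""
    report = {}
    for product in products:
        issues = [issue for policy in GLOBAL_POLICIES for issue in policy(product)]
        if issues:
            report[product["name"]] = issues
    return report


products = [
    {"name": "customers", "owner_domain": "crm",
     "columns": {"email": {"pii": True, "masked": True}, "region": {"pii": False}}},
    {"name": "leads", "owner_domain": "",
     "columns": {"phone": {"pii": True, "masked": False}}},
]

print(audit(products))
```

Keeping policies as small, shared functions lets a central team own the rules while each domain runs the same audit in its own pipeline.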

Q. What Are The Most Common Challenges Organizations Face When Adopting Data Mesh Or Data Fabric?

A. Organizations often struggle with central data team bottlenecks, data silos, and growing data proliferation and complexity rooted in legacy architecture. Data mesh introduces the cost of decentralization and requires a cultural shift, data literacy, and strong collaboration between business and data teams to sustain governance; its domain-level self-serve data infrastructure must also be carefully managed to avoid fragmentation. Data fabric depends on robust metadata and a shared infrastructure layer.

Q. How Are Data Mesh And Data Fabric Evolving With AI And Modern Data Ecosystem Trends?

A. Data mesh and data fabric are evolving through AI-driven metadata management and discovery, which also supports better AI model performance. Organizations are adopting augmented data catalog platforms, metadata catalogs, and knowledge graphs to strengthen trust signals and detect policy violations. Trends such as data virtualization, data supply chain models, data product marketplaces, centralized asset delivery, and broader data product operating models are shaping future architectures.
