- How AI Agents Work

- 1. Perception

- 2. Reasoning and Decision Making

- 3. Action Execution

- 4. Learning and Adaptation

- 5. Interaction with Other Agents and Systems

- Key Security Risks for AI Agents

- Core Principles for Agent Security

- Principle 1: Clear Human Oversight

- Principle 2: Limit Agent Capabilities

- Principle 3: Ensure Transparency and Observability

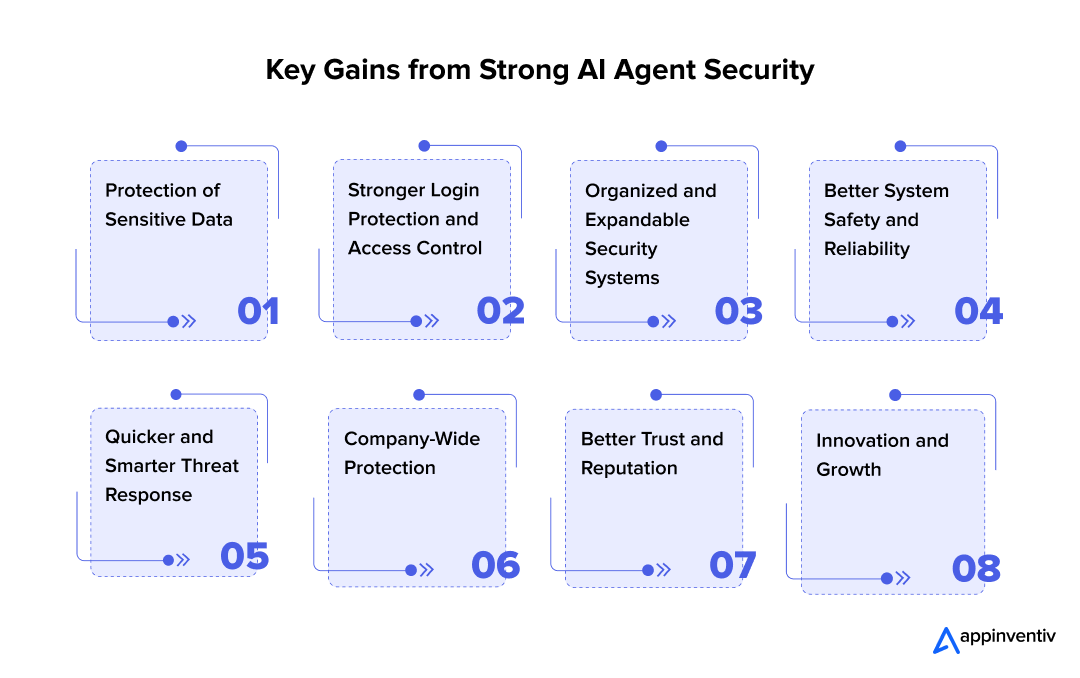

- Strategic Benefits of AI Agent Security

- Protection of Sensitive Data

- Stronger Login Protection and Access Control

- Organized and Expandable Security Systems

- Better System Safety and Reliability

- Quicker and Smarter Threat Response

- Company-Wide Protection

- Better Trust and Reputation

- Innovation and Growth

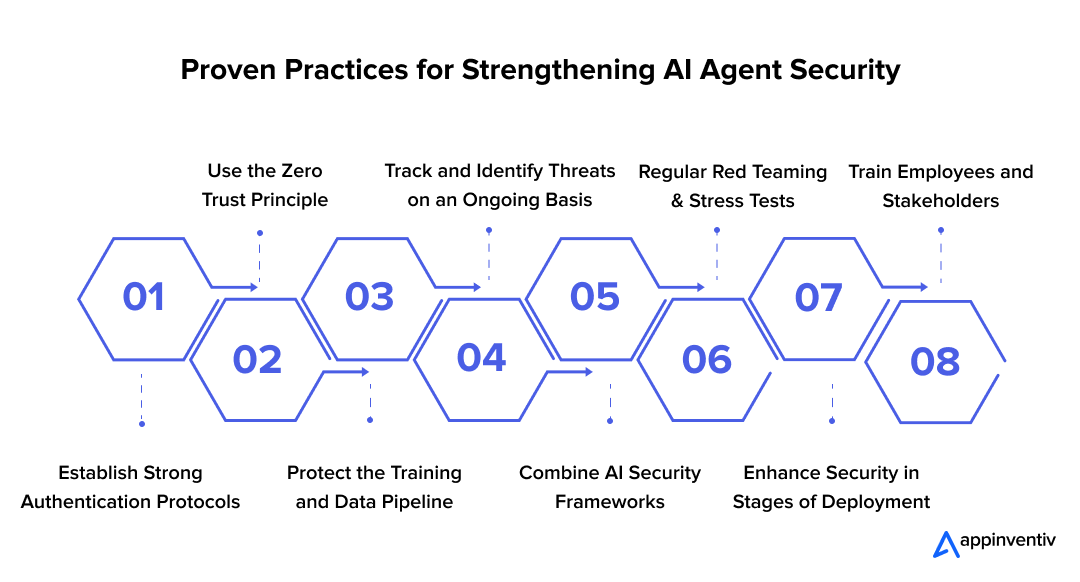

- Best Practices For Securing AI Agents

- Establish Strong Authentication Protocols

- Use the Zero Trust Principle

- Protect the Training and Data Pipeline

- Track and Identify Threats on an Ongoing Basis

- Combine AI Security Frameworks

- Regular Red Teaming and Stress Tests

- Enhance Security in Stages of Deployment

- Train Employees and Stakeholders

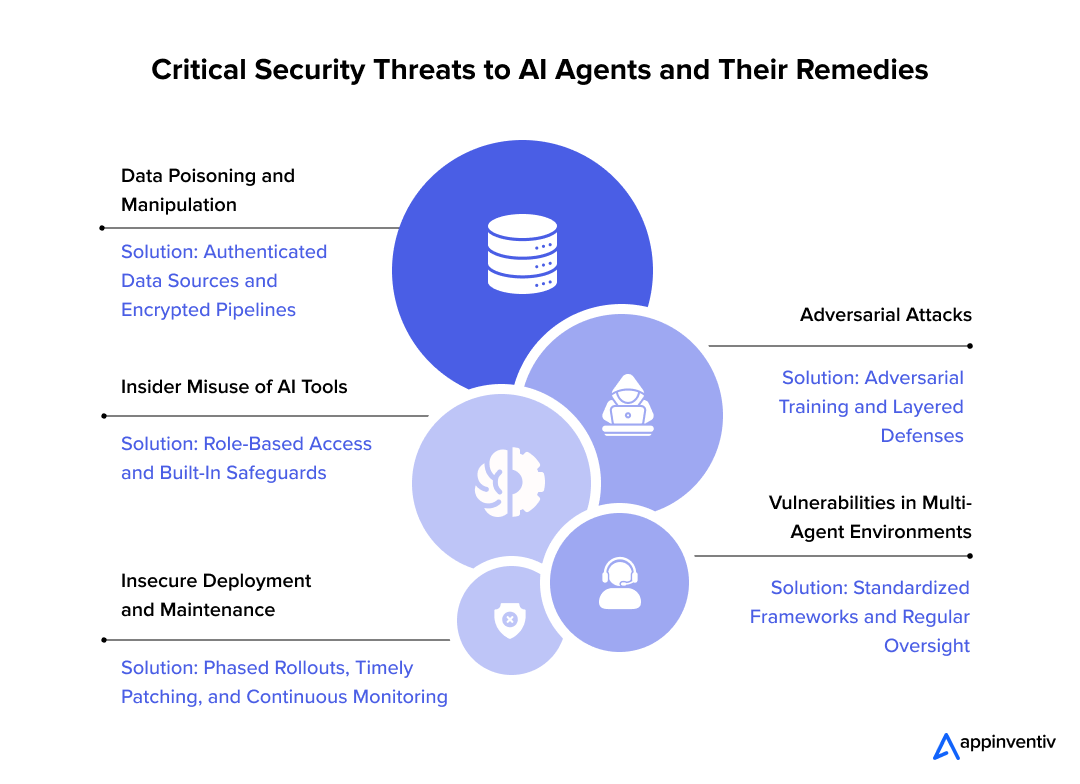

- 5 Most Pressing AI Agent Security Challenges and Solutions to Overcome Those

- 1. Data Poisoning and Manipulation

- 2. Adversarial Attacks

- 3. Insider Misuse of AI Tools

- 4. Vulnerabilities in Multi-Agent Environments

- 5. Insecure Deployment and Maintenance

- Implement AI Agents for Ensuring Robust Security with Appinventiv's Experts

- FAQs

Key takeaways:

- AI agents provide significant operational advantages, but their integration introduces unique security challenges that must be addressed strategically.

- Designing AI systems with modular security layers allows organizations to adapt quickly to emerging threats without disrupting ongoing operations.

- Regular audits, scenario testing, and resilience drills help identify weaknesses early and ensure AI agents behave as intended under diverse conditions.

- Collaboration between security, IT, and business teams is crucial to balance innovation, compliance, and practical usability of AI agents.

- A holistic approach to AI security not only mitigates risks but also supports scalability, smoother workflows, and long-term organizational agility.

In 2019, a UK-based energy company found out the hard way just how dangerous AI-driven fraud can be. Scammers used voice cloning software to copy the speech patterns of the CEO from the parent company. The fake voice was so real that the UK CEO approved a transfer of $243,000 to what he thought was a real supplier.

The money disappeared in minutes, and the attackers had shown everyone just how powerful deepfake technology could be when you mix it with old-school social engineering tricks. (Source: The Wall Street Journal)

That incident isn’t unusual anymore. AI agents are moving fast, taking over everything from customer support to business decision-making. As AI adoption accelerates, so do the opportunities for cybercriminals to exploit its reach, from routine customer interactions to high-stakes business decisions. What used to need technical know-how can now be automated, scaled up, and hidden with AI tools that make it nearly impossible to tell what’s real and what’s fake.

Jump ahead to today, and the stakes are way higher. AI agents are now built into customer service, operations, and decision-making systems. Their growing usage makes them absolutely essential for getting things done efficiently, but it also makes them perfect targets for cybercriminals who want to exploit weak spots on a massive scale.

The numbers tell the story of both the risks and the opportunities. IBM’s 2025 Cost of a Data Breach Report found that AI-related breaches are going up, but AI-powered defenses are actually working pretty well. Average global breach costs dropped to $4.44 million, down 9% from the year before, as organizations got faster at spotting problems and responding to them. The average time to identify and contain a breach is now 241 days, the lowest it’s been in almost ten years.

The lesson couldn’t be clearer: AI agents can either make your defenses stronger or create dangerous weak spots. The future of security will depend on how well organizations secure their agents.

In this blog, we will explore how AI agents work, examine the key security risks they face, and outline the core principles for agent security. We will also highlight the benefits of AI agent security, share best practices for securing AI agents, and discuss the most pressing challenges along with practical solutions to overcome them. Let’s dive in.

Secure your AI agent before it’s too late

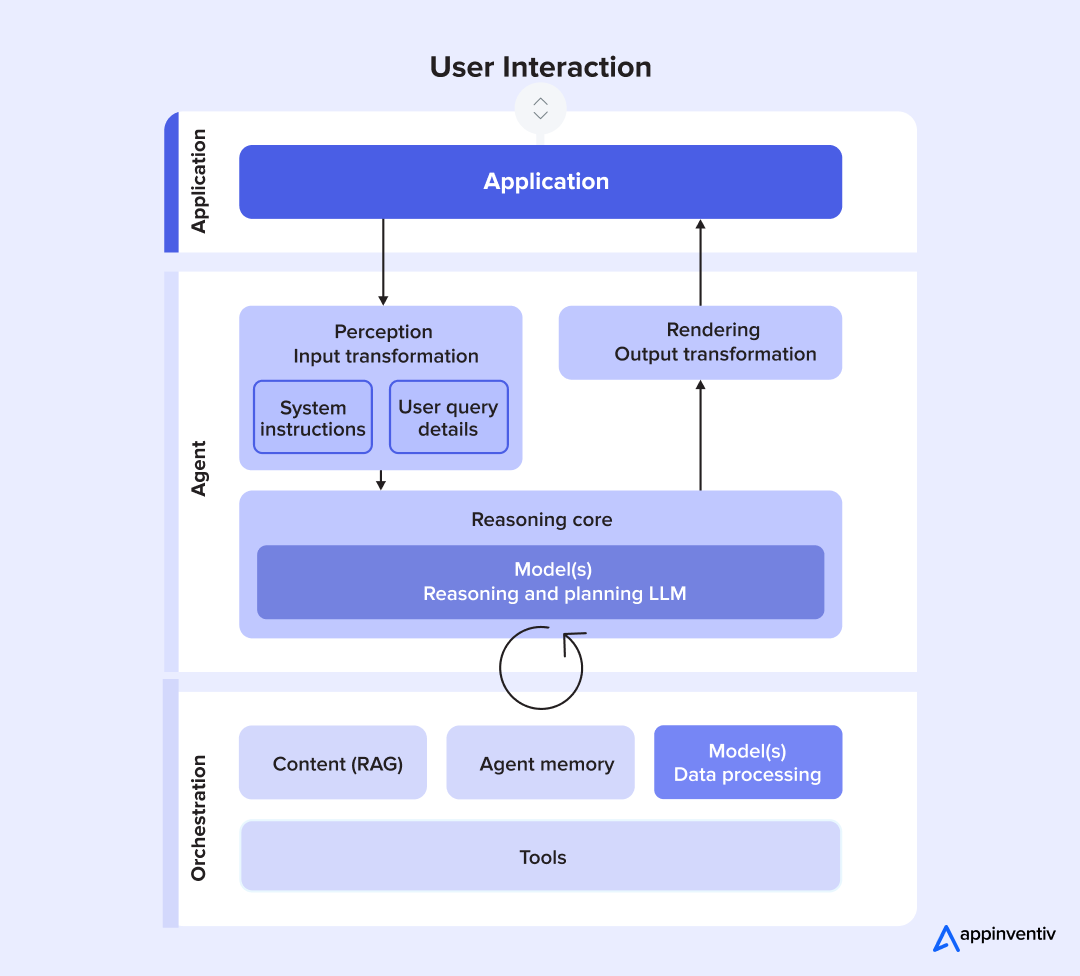

How AI Agents Work

AI agents get built to act for users or systems, making choices and handling tasks on their own. At their core, they operate through a cycle of seeing, thinking, and acting, which enables them to adapt to changing situations and produce effective results. Getting this cycle is important for understanding what they can do and what kind of security they need.

1. Perception

AI agents start by collecting data from whatever environment they’re working in. This might be text, pictures, voice commands, system logs, or sensor readings. The data gets cleaned up and interpreted using algorithms that help the agent figure out what’s going on. By securing AI agents during the data gathering phase, businesses can cut down on risks that come from corrupted inputs.

2. Reasoning and Decision Making

Once the agent has its input, it uses machine learning algorithms to weigh different possible actions. This analysis process enables the agent to make choices, solve problems, and adjust its approach based on what it has learned. Strong oversight and the implementation of AI agent security make sure that decision-making processes stay clear, dependable, and aligned with what the company actually wants to accomplish.

3. Action Execution

After the agent decides what to do, it actually carries out that action. This might mean sending a message, running a system command, approving a transaction, or getting a human operator’s attention. Building in safety rails and monitoring systems during this phase is crucial for AI agent safety because even tiny mistakes in execution can cause major business problems.

4. Learning and Adaptation

As time goes on, AI agents get better at what they do by learning from what happens. Reinforcement learning, supervised feedback, or pattern recognition help them adapt and improve their decision-making skills, which makes them more useful the more you use them. This ongoing process should also include AI-powered cybersecurity, so agents can develop better defenses just as fast as attackers come up with new ways to cause trouble.

5. Interaction with Other Agents and Systems

In most companies, AI agents don’t work by themselves. They often team up with other agents or connect with bigger IT systems. This multi-agent communication enables complex workflows but also introduces unique security challenges. Without proper safeguards, these interactions can expose AI agent security vulnerabilities, giving attackers fresh opportunities to break in and cause damage.

Also Read: A Guide to Generative AI Security- What Every C-Suite Executive Needs to Know

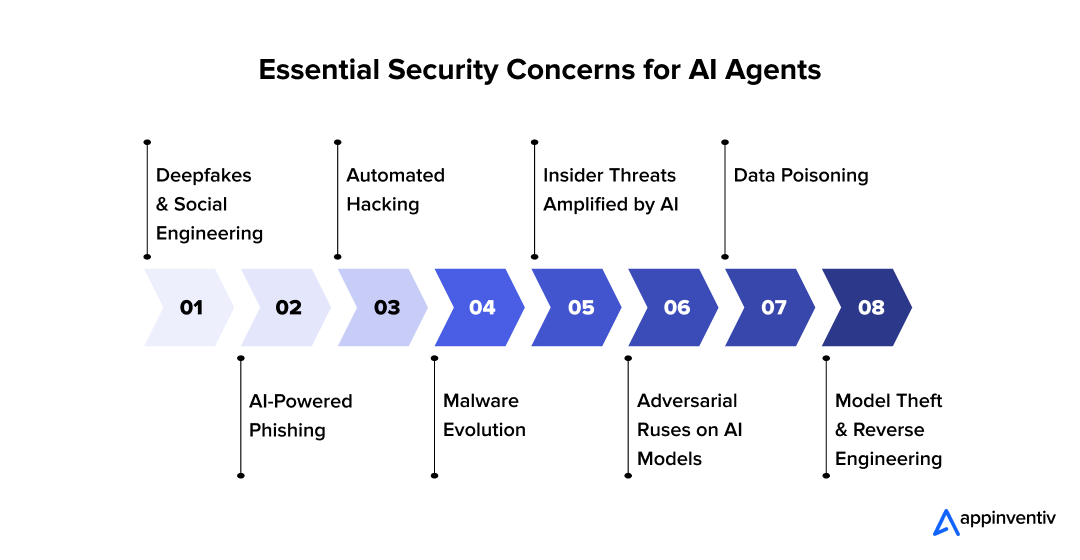

Key Security Risks for AI Agents

AI agents can be powerful tools, but they also bring special risks. From unauthorized access and data breaches to tampering with automated choices, these dangers increase as AI systems become more woven into business operations. Recognizing them is the first step toward good protection. Let’s have a look at some of the top security risks for AI agents:

Deepfakes and Social Engineering: AI now creates realistic fake videos, images, and voices. Criminals use these deepfakes to impersonate executives or partners for fraud, data theft, and reputational harm. Strong AI agent security is needed to block these identity tricks.

AI-Powered Phishing: With AI, phishing looks professional and personalized, pulling data from social media and company sites. Messages mimic the style, tone, and timing of real communications. An AI agent for security can analyze fraud by detecting patterns and preventing these attempts early.

Automated Hacking: AI tools scan systems, apps, and networks at speed, exploiting weaknesses through automated attacks. Companies must secure AI systems to resist this constant probing and prevent security gaps.

Malware Evolution: AI-powered malware adapts by learning how defenses respond, making it harder to stop. Strong AI agent security for business provides layered protection against these evolving threats.

Insider Threats Amplified by AI: Employees with access may knowingly or unknowingly misuse AI tools to gather data or mimic normal behavior. Companies need procedures and monitoring to ensure only authentic AI agents operate without breaking trust.

Adversarial Ruses on AI Models: Hackers manipulate data or images to trigger false AI outputs. A robust AI security agent model helps detect these distortions and safeguard decision-making systems.

Data Poisoning: Attackers can poison training data, leading to biased or flawed AI models. Through AI agent safety, businesses can secure pipelines and ensure only trusted data sources are used.

Model Theft and Reverse Engineering: Attackers can replicate proprietary AI models by analyzing inputs and outputs, leading to theft or misuse. AI-based threat mitigation tools like query tracking and access limits protect against this.

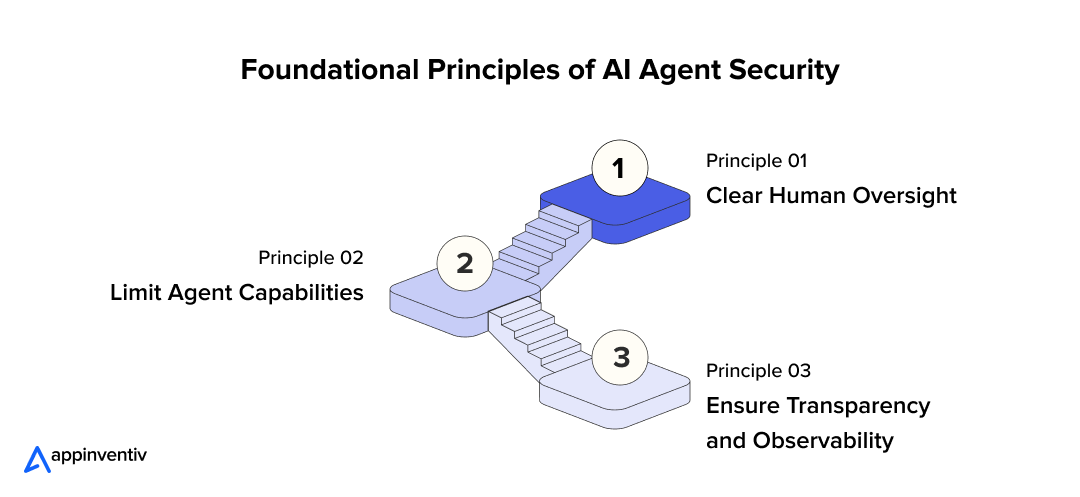

Core Principles for Agent Security

AI agents can really boost how efficiently your business runs, but they also bring some pretty unique risks to the table. To use their power safely, companies should stick to core principles that guarantee accountability, controlled operation, and transparency. Here are three basic principles for securing AI agents, plus practical steps you can take to put them into action.

Principle 1: Clear Human Oversight

AI agents work as extensions of human users, so every single agent needs to have someone specific in charge of it. This ensures that important decisions, such as approving transactions, changing sensitive systems, or taking actions that cannot be undone, will not occur without deliberate human approval.

In situations where multiple users or multiple agents are working together, you absolutely have to keep separate identities and access boundaries for each agent. Companies that use multi-AI agent security technology can handle these interactions much better, stopping conflicts or unauthorized data access before they start. Users should be able to assign permissions in detail, keep an eye on shared settings, and know exactly how their agent is going to behave.

Implementation Measures:

- Set up explicit human controllers for each agent.

- Secure input channels to double-check user commands.

- Turn on detailed permission management and shared configuration transparency.

Principle 2: Limit Agent Capabilities

AI agents should only get access to the resources and actions they actually need for their specific job. You need to restrict what they can do based on whatever task they’re working on right now. For example, an agent that’s supposed to do research should never be able to mess with financial records.

This principle takes the standard “least privilege” approach and tweaks it for AI systems that work across wide, potentially unlimited environments. Permissions need to be enforceable, you have to stop privilege escalation from happening, and users should be able to take away authority whenever they want. Using this principle supports AI agent security for business by making sure agents work safely within whatever limits your company sets.

Implementation Measures:

- Use context-aware permission controls that change based on tasks.

- Put sandboxing in place to stop unauthorized actions.

- Use strong multi-factor authentication, authorization, and auditing (AAA) frameworks.

Principle 3: Ensure Transparency and Observability

Trust in AI agents depends on being able to understand and audit what they’re actually doing. All important operations like inputs, reasoning steps, tools they use, and outputs should be logged securely. Being able to observe everything lets security teams catch weird behavior and helps users verify that things are working correctly.

Information describing what agents do, like whether they’re just reading data or messing with sensitive stuff, should be available for both automated monitoring and human review. Strong AI agent threat detection capabilities help identify suspicious activity as it occurs. User interfaces should give people insights into how the agent is planning and making decisions, especially for risky or complicated tasks.

Implementation Measures:

- Centralized, secure logging of inputs, outputs, and intermediate reasoning steps.

- APIs or dashboards that clearly show agent actions and related risks.

- Clear interfaces that help users understand agent decisions and authenticate AI agents.

Strategic Benefits of AI Agent Security

Most companies now use AI for daily business tasks, so protecting these systems has become absolutely essential. The benefits of AI agent security go far beyond basic system protection. They help build real customer trust, keep you ready for regulatory audits, and make your whole operation tougher in the long run. Here’s what companies actually get when they put secure AI environments first:

Protection of Sensitive Data

AI agents work with really sensitive information like financial transactions, health records, and customer details. Good security makes it much harder for this data to get stolen or misused, which saves your business from expensive breaches.

When you put an AI agent for security in place, you can watch everything automatically and block unauthorized access way better than the old methods. This keeps your risks low while all your important business information keeps moving like it should.

Stronger Login Protection and Access Control

When hackers break into systems, they usually get in through weak login security. Today’s security systems let companies authenticate AI agents using better methods like fingerprint scanning, digital certificates, and ongoing identity checks.

Strong login protection makes sure only the right people and systems can access your data, which cuts down on fake identity attacks and problems from people inside your company. This approach gives everyone more confidence when machines talk to other machines or when people work with AI systems.

Organized and Expandable Security Systems

Companies do much better when security gets built in from the start instead of being added later. An AI security agent framework gives you a solid structure for watching, catching problems, and responding across all your different systems.

This makes it way easier to add more AI tools without creating new security holes. With this kind of setup, organizations can weave security features right into their daily work processes, so they can grow with confidence as their operations get bigger.

Better System Safety and Reliability

When AI runs important stuff in banks, hospitals, and major infrastructure, you need systems that work right every single time, not just systems that work fast. Investing in AI agent safety makes sure your systems actually do what you built them to do, even when things get crazy or someone’s trying to break in.

Safe AI agents stop your operations from falling apart, keep your users safe, and make sure your business doesn’t grind to a halt. These safety steps also help you earn trust from government regulators, customers, and business partners who really need your services to keep working no matter what.

Quicker and Smarter Threat Response

Old security methods usually can’t keep up with what the bad guys are doing, but AI security completely changes that game. With AI-powered threat mitigation, your systems can catch suspicious stuff the moment it starts happening and shut it down before anything bad actually occurs.

These tools change tactics fast when brand new threats pop up, so you get way less downtime and your wallet doesn’t take such a big hit. Companies get ahead of the game by preventing problems instead of just picking up the pieces after everything goes wrong.

Company-Wide Protection

Today’s businesses run on connected digital systems where one weak spot can expose your whole network. Enterprise AI protection lets companies secure every department and process using one consistent approach.

Whether it’s your supply chain operations or customer service chatbots, having the same strong safeguards everywhere keeps all your AI contact points solid. This complete coverage stops weak areas from bringing down your entire security setup.

Better Trust and Reputation

Customers, business partners, and government agencies want to work with companies that can prove their AI systems are actually secure. When people can see you’re putting real money into AI agent safety and company-wide controls, it builds confidence and makes your brand look better.

Over time, this trust turns into customers who stick around, easier compliance checkups, and better chances to work with other businesses.

Innovation and Growth

When companies are assured that their AI implementations are safe, it gives them more courage to implement new tools and applications. By integrating an AI security agent framework into the operations, it becomes easier to experiment, innovate, and scale in a responsible manner.

Organizations have an advantage in their security-backed innovation, as it enables them to dominate the digital transformation with minimal risk exposure.

Also Read: AI Agents in Enterprise: Real-World Impact & Use Cases

Best Practices For Securing AI Agents

Securing AI systems needs powerful technology and well-disciplined procedures. The following best practices to secure AI agent deployments assist organizations in enhancing resilience, reducing vulnerabilities, and preserving trust as they increase their adoption of intelligent systems.

Establish Strong Authentication Protocols

All AI agents should be authenticated, and then they should be prohibited from dealing with sensitive data or any other business systems. Multi-factor authentication, cryptographic, and ongoing identity verification make them reliable at all levels.

This eliminates hackers impersonating systems and the introduction of fake agents to workflows. An AI agent for security works best when it is designed with authentication capabilities, and organizations are assured that only authorized agents are executing within the network.

Use the Zero Trust Principle

The concept of zero-trust security assumes that no system or user is trusted by default. Every request, be it by an employee, application, or AI, is verified continuously prior to being allowed access. This will reduce the chances of abuse of power or abuse of inside knowledge.

By using zero-trust models, businesses can regulate access to data by the agents, minimize potential exposure in case one account is compromised, and create more resistance against unauthorized activity.

Protect the Training and Data Pipeline

AI models rely on training information to come up with correct decisions. In case that information is altered, poisoned, or revealed, the performance of an AI agent is not reliable or exploitable. Encryption of datasets, source verification, and access controls help to maintain the model integrity.

In the context of AI agent security for business, this implies that sensitive datasets must be trustworthy, and it is essential in cases of high stakes, such as the finance or healthcare industry, where a mistake can be disastrous.

Track and Identify Threats on an Ongoing Basis

Even well-planned AI systems are under threat after operating in real-life scenarios. Constant monitoring will identify the abnormalities in agent activity, including uncharacteristic data requests, system overloads, or abnormal interactions.

Threat detection with real-time AI agents helps companies identify problems before they turn into full-blown ones. Following the best practices for securing AI agents will guarantee long-term visibility and prompt and effective response to the emerging threats.

Combine AI Security Frameworks

The security is enhanced when it is done in a systematic manner instead of ad hoc. A framework of an AI security agent defines the detection, response, and governance standards so that all departments use the same protection. Such a structure is easy to scale with more agents being added to the enterprise.

By securing AI agents under a single model, businesses can achieve transparency and efficiency, as well as mitigate the risks associated with the fragmented defense strategies.

Regular Red Teaming and Stress Tests

The weak areas are brought to light through attack simulations and stress testing before the actual attackers are able to use them. Red teaming reveals the behavior of AI models to adversarial input, manipulated queries, or targeted overloads.

Such exercises equip teams with real-life situations and also demonstrate where the controls are lacking. Implementation of AI agent security with regular drills to ensure that the defenses are not outdated when threats are changing.

Enhance Security in Stages of Deployment

Hasty deployments augment the likelihood of vulnerabilities going uncapped. The implementation of AI should be done in a sandbox, with progressive releases and constant updates to achieve reliability. This gradual process aids in the identification of defects in the initial stages, and it also alleviates the exposure of live systems.

As multi-AI agent security technology has emerged, organizations also have to test the interaction between various agents and how they will cooperate without introducing new security gaps.

Train Employees and Stakeholders

Technology cannot exist in isolation as it requires knowledgeable human supervision. It is necessary that employees and other stakeholders know how to be aware of suspicious behavior, how to manage data, and how to report as soon as possible.

Frequent training ensures the creation of awareness and accountability throughout the organization. Through integrating a spirit of vigilance and keeping pace with the most appropriate standards of protection of AI agent, businesses enhance their technical and human protection.

We follow expert strategies that strengthen resilience, close security gaps, and ensure your intelligent systems remain reliable at every step

5 Most Pressing AI Agent Security Challenges and Solutions to Overcome Those

As AI agents handle important business tasks, they encounter changing security problems like data breaches and hostile attacks. Spotting these threats and putting strong protections in place, such as access controls and ongoing monitoring, is necessary to stay ahead of possible risks. Let’s check those out.

1. Data Poisoning and Manipulation

Attackers may intervene with training data and introduce malicious and misleading inputs to AI systems. This will result in faulty model output, bias ,or even unauthorised access. The magnitude of this issue renders it challenging to detect.

Solution: Companies should authenticate data sources, store and transfer the information using encryption, and perform anomaly detection to identify the presence of unusual behavior. The first step towards AI agent security risks is to ensure that the data pipeline is uncompromised.

2. Adversarial Attacks

Minor, precisely calculated manipulations in input information can deceive AI models and cause them to make the wrong classification. As an example, a facial recognition system can be defeated by an image with slight manipulations. Such AI agent security vulnerabilities are particularly hazardous in businesses such as healthcare or financial systems, where mistakes can be extremely expensive.

Solution: The continuous model testing, adversarial training, and red-teaming exercises are used to expose the weaknesses. Layered defenses should also be used by the enterprises to check both the inputs and outputs of the enterprises in order to know whether they are being manipulated.

3. Insider Misuse of AI Tools

Employees and business partners sometimes abuse AI tools on purpose or by mistake. When AI boosts what people can do, insiders might misuse company data, create fake messages, or skip around normal business processes. Watching for this kind of abuse gets tricky without breaking down trust in the workplace.

Solution: Companies need role-based access controls, activity logs, and behavior tracking to keep tabs on what insiders are doing. Building strong security features into AI agent systems means these safeguards are built in from the start, rather than being added later.

4. Vulnerabilities in Multi-Agent Environments

When companies put several AI agents to work together, setup mistakes or bad configuration choices can expose sensitive company information. Not having clear communication rules or proper oversight can also create weak spots that hackers love to target.

Solution: Standardized frameworks, tight access controls, and regular checkups should guide how you roll these systems out. Using secure AI solutions makes sure your agents don’t just work well on their own but also play nice together within your bigger business systems.

5. Insecure Deployment and Maintenance

Even the most advanced AI agent can get completely undermined by sloppy rollout practices. Slow security patching, rushed updates, and untested deployment environments basically hand attackers easy ways in. This means security is something you continually need to work on, not just a one-time check.

Solution: Businesses should stick to step-by-step release strategies, apply updates regularly, and watch their systems around the clock. When you line up with proven methods for tackling AI agent security challenges, your organization can stay protected against new threats that show up long after you’ve successfully deployed.

Implement AI Agents for Ensuring Robust Security with Appinventiv’s Experts

The future of AI agent security depends on proactive, intelligent, and adaptive systems. As AI agents become more independent and woven into business operations, the spotlight will increasingly be on securing AI agents, putting multi-agent monitoring into place, and making sure there’s transparency and accountability. Companies that grab onto these strategies today will be in a much better spot to stop data breaches, handle AI-powered threats, and keep operations running smoothly in our increasingly digital and AI-driven world.

When businesses adopt core principles like clear human oversight, dynamic permissioning, and complete transparency of actions, they can dramatically cut down on weak spots. Implementing multi-agent security frameworks, continuous monitoring, and threat detection further enhances resilience against AI-powered attacks.

Appinventiv is an AI agent development company with deep expertise in building AI-powered applications while putting security, scalability, and enterprise compliance first. Our experts help organizations not only deploy AI agents but also secure them against evolving threats, ensuring that businesses can utilize automation without compromising sensitive data or operational integrity.

Key Highlights of Our Expertise:

Notable AI Projects: Americana ALMP, MyExec, MUDRA, Vyrb, Flynas

Enterprise Grade Security: We implement authentication protocols, dynamic permissions, and logging frameworks to protect AI agents and enterprise data.

Award Winning Company: Recognized globally for innovation, including App Development Company of the Year by Entrepreneur.com, Deloitte Tech Fast 50, Clutch Global Spring Award 2024, and by The Economic Times as “The Leader in AI Product Engineering & Digital Transformation”, showcasing its leadership in delivering secure, scalable AI solutions.

Continuous Improvement: AI systems are constantly changing, and we make sure that agents keep operating securely through updates, retraining, and adaptive threat mitigation strategies.

Customer Centric Design: Beyond technical excellence, Appinventiv puts user experience first, making AI agents intuitive, actionable, and aligned with organizational goals.

Connect with our experts today to build secure AI agents that operate safely and effectively, giving businesses innovation, efficiency, and enterprise-grade protection.

FAQs

Q. What makes securing AI agents so difficult?

A. Securing AI agents gets tricky because they work on their own, interact with multiple systems, and often handle sensitive data on a huge scale. Their ability to learn and change can create unexpected weak spots, while environments with multiple agents make everything more complicated. Together, these factors make traditional security methods fall short, so you need specialized AI agent frameworks and monitoring strategies.

Q. Why do AI agents pose different security challenges?

A. Unlike regular software, AI agents make choices based on patterns, training data, and what’s happening around them. This brings unique risks like adversarial attacks, data poisoning, and malware that can adapt. On top of that, AI agents can make human mistakes or insider threats way worse, which makes AI agent security for business absolutely critical for companies using intelligent systems.

Q. How can I protect my business from AI-powered threats?

A. Protecting your business takes a mix of technology, processes, and good governance. Put in place strong authentication to verify agent identity, continuous monitoring to catch suspicious behavior, layered access controls, and secure training pipelines. Adding AI-powered threat mitigation solutions ensures that threats are spotted early and responses occur automatically when possible, thereby reducing your risk exposure.

Q. What are the top security risks facing AI agents?

A. Major risks include deepfakes and social engineering, AI-powered phishing, automated hacking, adaptive malware, insider misuse, adversarial attacks on models, data poisoning, and model theft. Tackling these threats requires solid AI agent threat detection systems and careful management of permissions, inputs, and outputs.

Q. How can companies implement AI threat protection frameworks?

A. Implementing AI threat protection frameworks:

- Establish clear human oversight for each AI agent.

- Restrict agent capabilities based on purpose and tasks.

- Ensure transparency and observability of all actions.

- Monitor continuously for suspicious behavior.

- Maintain secure, centralized logs of inputs, outputs, and reasoning.

- Apply robust authorization and role-based access controls.

- Verify agent identities to authenticate AI agents.

- Combine these measures to strengthen overall enterprise resilience.

- In just 2 mins you will get a response

- Your idea is 100% protected by our Non Disclosure Agreement.

AI Fraud Detection in Australia: Use Cases, Compliance Considerations, and Implementation Roadmap

Key takeaways: AI Fraud Detection in Australia is moving from static rule engines to real-time behavioural risk intelligence embedded directly into payment and identity flows. AI for financial fraud detection helps reduce false positives, accelerate response time, and protecting revenue without increasing customer friction. Australian institutions must align AI deployments with APRA CPS 234, ASIC…

Agentic RAG Implementation in Enterprises - Use Cases, Challenges, ROI

Key Highlights Agentic RAG improves decision accuracy while maintaining compliance, governance visibility, and enterprise data traceability. Enterprises deploying AI agents report strong ROI as operational efficiency and knowledge accessibility steadily improve. Hybrid retrieval plus agent reasoning enables scalable AI workflows across complex enterprise systems and datasets. Governance, observability, and security architecture determine whether enterprise AI…

Key takeaways: AI reconciliation for enterprise finance is helping finance teams maintain control despite growing transaction complexity. AI-powered financial reconciliation solutions surfaces mismatches early, improving visibility and reducing close-cycle pressure. Hybrid reconciliation logic combining rules and AI improves accuracy while preserving audit transparency. Real-time financial reconciliation strengthens compliance readiness and reduces manual intervention. Successful adoption…