- Market Overview: Small Language Models in Enterprise AI

- Why Enterprises Are Turning to SLMs: A Glimpse into the Benefits

- Lower Operating Costs

- Tighter Governance

- Speed of Execution

- Predictable Performance

- Sustainability Alignment

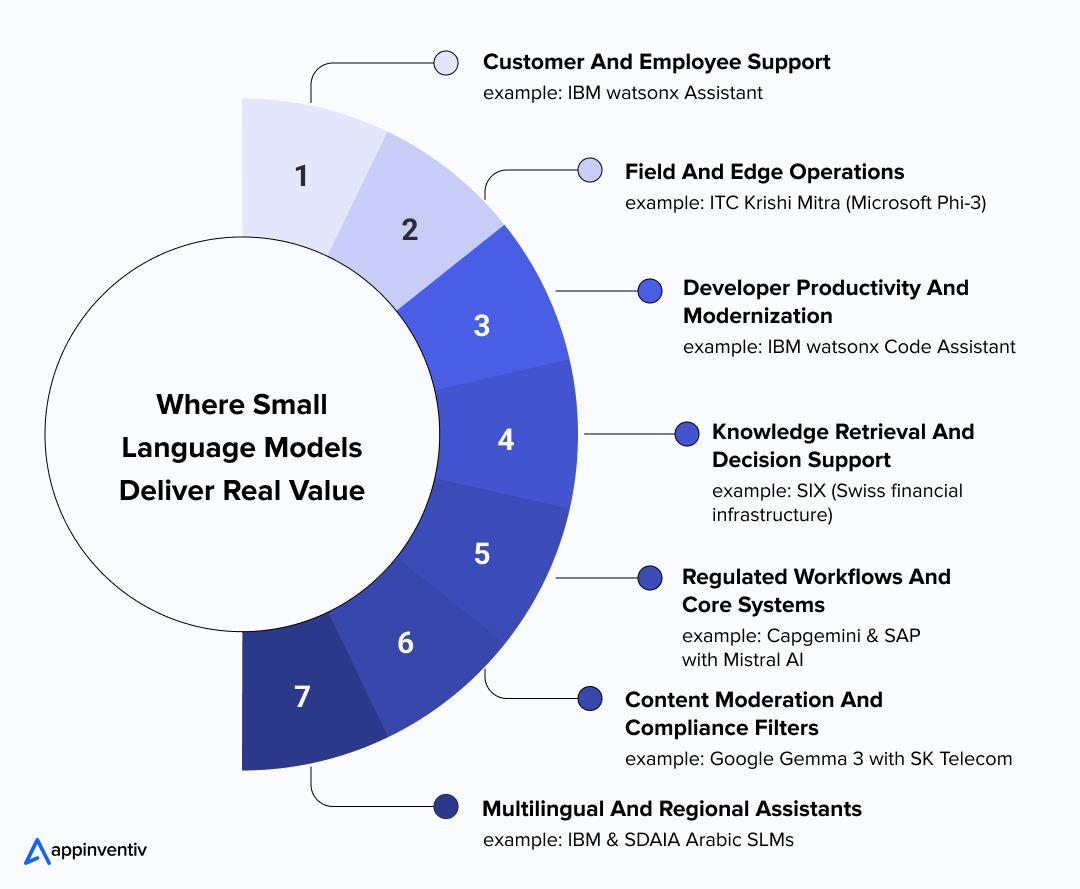

- Where Small Language Models Deliver Real Value: Understanding the Applications

- Customer And Employee Support

- Field And Edge Operations

- Developer Productivity And Modernization

- Knowledge Retrieval And Decision Support

- Regulated Workflows And Core Systems

- Content Moderation And Compliance Filters

- Multilingual And Regional Assistants

- Why Businesses Should Know About Open-Source And Custom Models

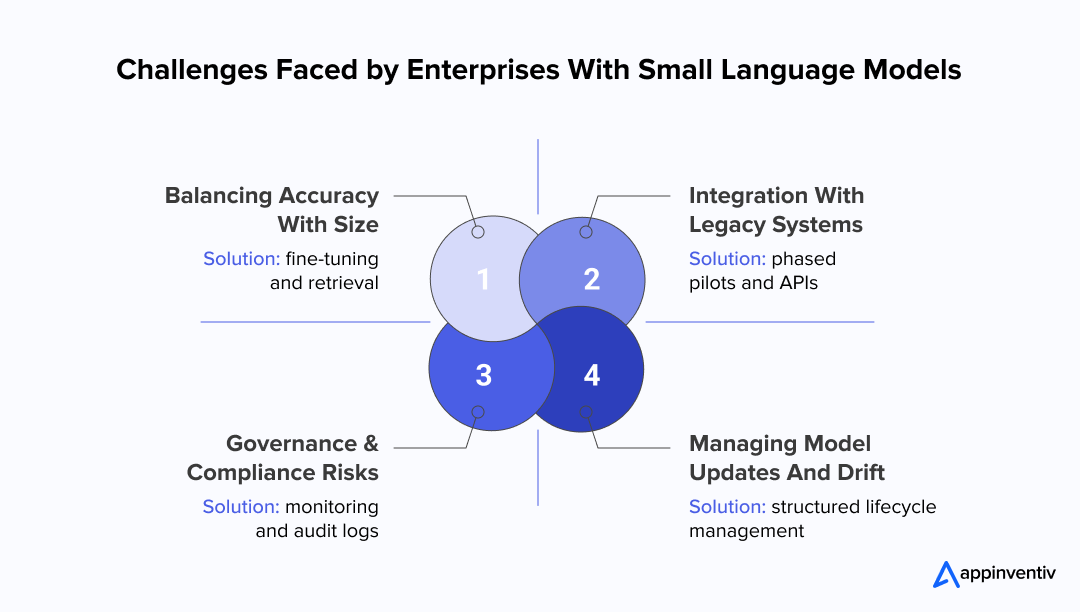

- Challenges Enterprises Face With Small Language Models

- Balancing Accuracy With Size

- Integration With Legacy Systems

- Governance And Compliance Risks

- Managing Model Updates And Drift

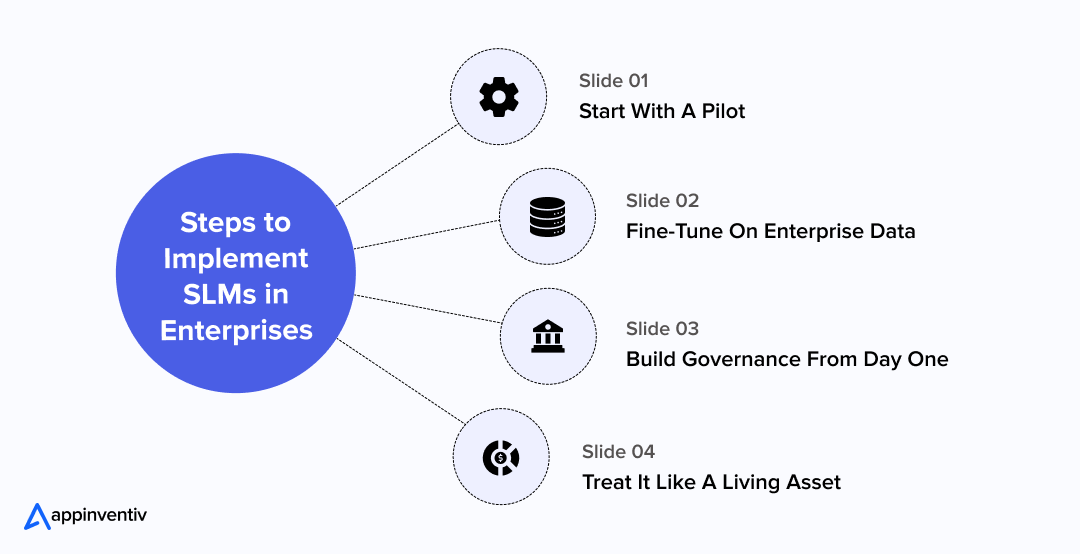

- How to Implement SLMs in Your Enterprise

- Step 1: Start With A Pilot

- Step 2: Fine-Tune On Enterprise Data

- Step 3: Build Governance From Day One

- Step 4: Treat It Like A Living Asset

- Measuring ROI: Cost, Speed, And Business Impact

- The Role of SLMs in Agentic AI and Modular Architectures

- Future Outlook: SLMs for Modern Enterprises

- From Savings To Strategy

- Industry-Ready Models

- Running Side By Side With Large Models

- Why Appinventiv Is The Right Partner For Leveraging SLMs

- FAQs

Key takeaways:

- Small language models for Enterprise AI deliver cost savings and faster adoption.

- Enterprise SLMs enhance governance, compliance, and data privacy.

- Fine-tuning SLMs on internal data boosts accuracy and relevance.

- SLM lifecycle management ensures models stay current and reliable.

- SLM deployments provide quicker ROI compared to large models.

- Future enterprise AI will balance SLMs for operations and LLMs for broader tasks.

For years, the conversation around enterprise AI has revolved around “bigger is better.” Large language models became the default choice, promising unmatched capabilities at the cost of equally unmatched infrastructure bills. But in the boardrooms of modern enterprises, that equation no longer holds. The shift is clear: smaller, sharper, and more cost-aligned models are proving to be the smarter investment.

Small language models for Enterprise AI are not about doing less; they are about doing what matters most, faster and more efficiently. Unlike their heavyweight counterparts, these models can be deployed with tighter governance, trained on domain-specific data, and run with far lower operational overhead. For CEOs and CIOs under pressure to cut costs while still accelerating digital transformation, this shift is not optional but strategic.

We are now entering a phase where small language models in Enterprise AI are enabling companies to unlock AI’s value without draining budgets or creating data-security blind spots. Whether it’s customer engagement, knowledge management, or automation at the edge, Enterprise AI small language models are showing that precision often beats scale.

The message is simple: the future of enterprise AI will not be defined by size, but by fit. And small language models are built to fit an enterprise reality that is cost-conscious, compliance-heavy, and performance-driven.

Discover how adopting Small Language Models today can accelerate ROI, strengthen governance, and secure a lasting competitive edge.

Market Overview: Small Language Models in Enterprise AI

Enterprise AI spend is accelerating, and leadership teams are moving from broad experiments to operational choices that protect margins and minimize risk. IDC reports that organizations spent about $235 billion on AI in 2024, with spending expected to reach $630 billion by 2028. That growth is forcing hard decisions about unit economics, energy use, and governance, which is exactly where small language models in Enterprise AI are gaining ground. Gartner, meanwhile, predicts that by 2027 enterprises will use small, task-specific models three times more than general-purpose LLMs.

IBM frames the shift plainly: small language models (SLMs) use less memory and processing power, which makes them easier to deploy in constrained environments, on-prem, and at the edge. IBM’s view is that this efficiency advantage translates into faster responses, tighter control, and simpler scaling for enterprise SLMs. IBM also underscores that SLMs can be tuned to domain language and policies, improving alignment with enterprise workflows.

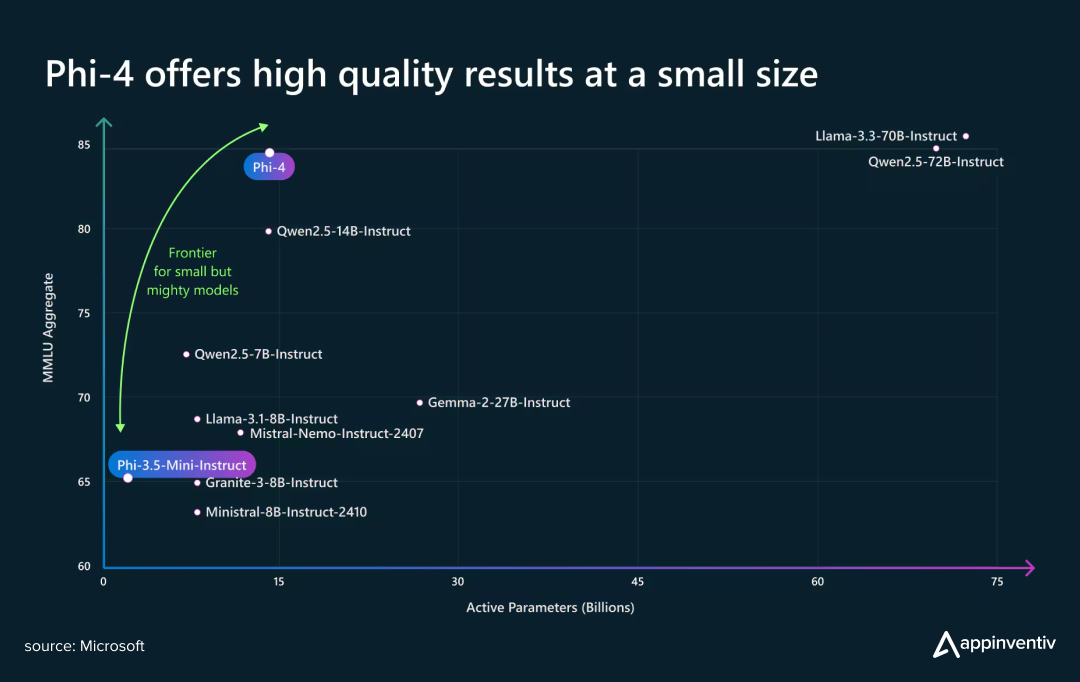

The World Economic Forum echoes this direction for CEOs. WEF highlights SLMs as a targeted, efficient, and cost-effective path to value, noting examples such as Llama, Phi, Mistral, Gemma, and IBM Granite as the class of models enterprises are now testing and deploying. WEF also links SLMs to edge AI gains and calls out their role in reducing AI’s energy footprint, two factors that resonate with cost control and sustainability mandates.

Citing Microsoft, the World Economic Forum also suggests that the new generation of small language models for enterprise AI is both more accurate than earlier generations and safer for handling sensitive data. The chart below highlights Microsoft’s Phi-4, released in December 2024, which shows how a compact, cost-effective SLM can outperform larger models on certain benchmarks.

C-suite conversations are no longer about raw model size. They focus on the high operational costs of LLMs and how cost-effective generative AI models can deliver the same or better task performance when the problem is narrow, the data is proprietary, and latency or privacy matters.

What this means for CEOs:

- Value over volume: Choose Enterprise AI small language models when the use case is well-scoped, privacy-sensitive, or latency-critical. You get measurable outcomes without runaway compute costs.

- Governance that sticks: Smaller models simplify review, testing, and policy enforcement. This supports auditability and lowers operational risk.

- Energy and sustainability: SLMs help curb compute and power intensity, aligning AI strategy with environmental targets and cost discipline.

- Spend with intent: With AI budgets expanding, steer investment toward SLM model serving and fine-tuning pipelines that map directly to P&L outcomes, not just experimentation.

In short, small language models for Enterprise AI fit the realities that matter to the board: lower run-rate costs, stronger control over data, and clearer paths to production. They are emerging as the practical choice for enterprises that want measurable impact without the overhead of managing oversized models.

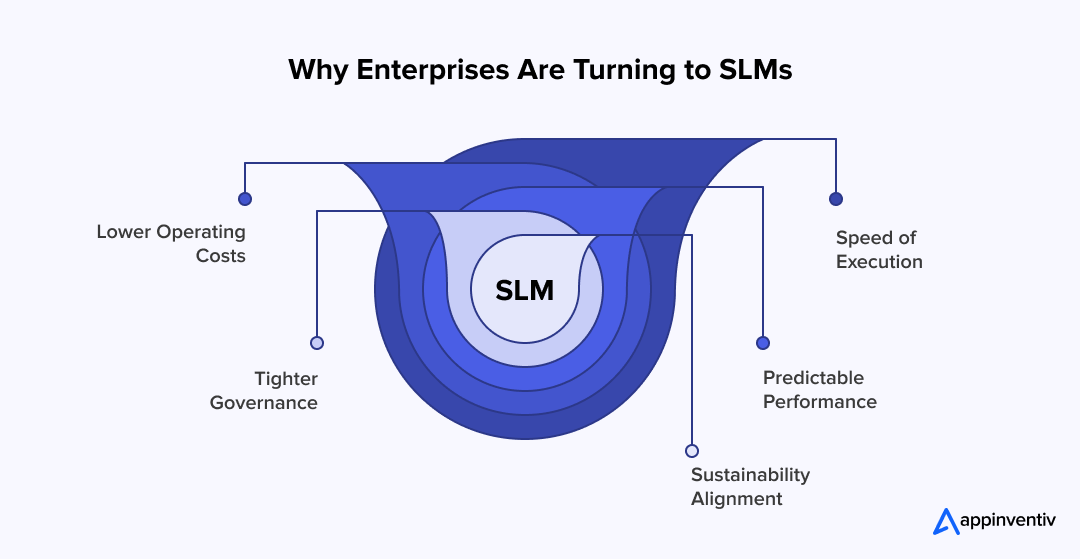

Why Enterprises Are Turning to SLMs: A Glimpse into the Benefits

The pressure on leadership today is not about whether to invest in AI, but how to do it responsibly and profitably. Many boards have already approved AI budgets, yet CFOs and CIOs are asking tougher questions: what is the return, what is the risk, and what is the true cost of ownership? This is why small language models for Enterprise AI are gaining attention inside the enterprise: they answer those questions in a way large models rarely can.

Let’s look at each of these benefits in detail:

Lower Operating Costs

The economics are straightforward. Running a large model requires heavy cloud infrastructure, high energy use, and constant optimization. Smaller models can often be fine-tuned and deployed on standard enterprise servers, cutting spend without sacrificing capability on well-scoped tasks.
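To make that comparison concrete, here is a minimal sketch that compares the monthly cost of a hosted large model billed per token with a self-hosted small model billed per GPU-hour. Every price, volume, and function name here is a hypothetical placeholder, not a quoted rate. Substitute your own figures.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    requests_per_month: int
    tokens_per_request: int  # prompt + completion tokens per request

def api_cost(w: Workload, usd_per_million_tokens: float) -> float:
    """Monthly cost when a hosted large model bills per token."""
    total_tokens = w.requests_per_month * w.tokens_per_request
    return total_tokens / 1_000_000 * usd_per_million_tokens

def self_hosted_cost(gpu_hours: float, usd_per_gpu_hour: float, fixed_ops_usd: float) -> float:
    """Monthly cost of serving a small model on dedicated hardware, plus fixed operations effort."""
    return gpu_hours * usd_per_gpu_hour + fixed_ops_usd

def break_even_tokens(self_hosted_usd: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which per-token billing costs as much as self-hosting."""
    return self_hosted_usd / usd_per_million_tokens * 1_000_000

if __name__ == "__main__":
    # Hypothetical figures for illustration only; not quoted prices.
    helpdesk = Workload(requests_per_month=2_000_000, tokens_per_request=1_500)
    llm = api_cost(helpdesk, usd_per_million_tokens=10.0)
    slm = self_hosted_cost(gpu_hours=730, usd_per_gpu_hour=2.0, fixed_ops_usd=3_000)
    print(f"Hosted LLM: ${llm:,.0f}/month; self-hosted SLM: ${slm:,.0f}/month")
    print(f"Break-even volume: {break_even_tokens(slm, 10.0):,.0f} tokens/month")
```

The break-even function is included because the saving depends on sustained volume, not on model size alone. Below that volume, per-token billing is the cheaper option.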

Tighter Governance

Compliance has become a central board-level issue. Large general-purpose models often raise concerns around data privacy, auditability, and regulatory fit. Small models can be trained on controlled datasets, with outputs that are easier to monitor and approve. This gives executives confidence that AI initiatives will stand up to both internal and external review.


Speed of Execution

Enterprises can’t wait 12–18 months for results. Smaller models move faster from pilot to production, with lighter integration needs and more flexibility for updates. This speed translates into earlier wins and a clearer view of business impact.

Predictable Performance

Large models can behave like black boxes, producing answers that are hard to interpret or explain. Small language models are narrower by design, so when they are tuned for a specific task, their outputs tend to be more predictable and easier to align with enterprise rules, tone, and brand.

Sustainability Alignment

For companies under ESG commitments, energy consumption is no longer just an IT concern but a board priority. Smaller models use less compute and power, helping enterprises show progress on sustainability targets while keeping costs under control.

Where Small Language Models Deliver Real Value: Understanding the Applications

Small language models are not abstract research projects anymore; they are finding their place inside enterprises where efficiency, compliance, and cost control matter most. From customer engagement to regulated workflows, these models are proving that precision often outweighs brute force.

Customer And Employee Support

Enterprises are using small language models to power chatbots and virtual agents that can be tuned to company-specific language and policies. Unlike larger models, which often rely on external hosting, enterprise SLMs can run in private environments, giving faster responses, lower costs, and stronger compliance with data regulations.


IBM’s watsonx Assistant, built on Granite small language models, is a strong example. IBM itself uses these models to support internal IT operations, providing accurate helpdesk answers and mainframe troubleshooting without exposing sensitive information outside its controlled environment.

Field And Edge Operations

In industries where employees operate in remote or bandwidth-constrained areas, SLM-powered edge chatbots make information accessible even with limited connectivity. Their compact size allows them to run on standard hardware while still providing domain-specific insights in real time.

ITC’s Krishi Mitra is a real-world case. Powered by Microsoft’s Phi-3 small language model, it assists more than a million farmers across India with crop guidance and market advice. The assistant is designed to work in low-bandwidth conditions, showing how SLMs can extend enterprise reach to the field.

Developer Productivity And Modernization

Legacy systems remain a barrier for many enterprises, especially in banking, insurance, and government. Small language models in enterprise AI are being fine-tuned to analyze, explain, and modernize old codebases. Their smaller footprint makes them easier to deploy within secure IT environments, keeping critical data in-house.

IBM’s watsonx Code Assistant provides a clear example. It uses Granite models to automate COBOL-to-Java modernization on IBM Z mainframes, cutting down the manual effort required and enabling faster updates of mission-critical systems.

Knowledge Retrieval And Decision Support

Employees often need quick access to accurate information from internal policies and records. SLM deployments make this possible by powering retrieval systems that deliver compliant, consistent answers drawn only from enterprise-approved sources. This reduces risks of misinformation and ensures knowledge remains aligned with regulations.

SIX, the Swiss financial infrastructure provider, offers a compelling case. It has developed an on-premises retrieval solution using open-source small language models to process financial documents and customer interactions. The system provides staff with policy-aligned answers while maintaining strict data security and compliance standards.
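The "approved sources only" rule behind such retrieval systems can be sketched in a few lines. This is not how SIX or any named vendor builds its system. It is a minimal illustration that assumes each document carries an `approved` flag set by its content owner. Retrieval skips unapproved documents and returns nothing rather than guessing when no document matches. Scoring is plain keyword overlap, a stand-in for the embedding search a production retriever would more likely use. `Document`, `retrieve`, and their fields are hypothetical names.

```python
import re
from dataclasses import dataclass

@dataclass
class Document:
    doc_id: str
    text: str
    approved: bool  # set by the content owner, e.g. after legal review

def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, corpus: list[Document], top_k: int = 2, min_overlap: int = 1) -> list[Document]:
    """Return up to top_k approved documents ranked by keyword overlap with the query."""
    q = tokenize(query)
    scored = []
    for doc in corpus:
        if not doc.approved:
            continue  # never surface unapproved or draft material
        overlap = len(q & tokenize(doc.text))
        if overlap >= min_overlap:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]
```

An empty result is a feature here. The caller can answer "no approved source found" instead of letting a model improvise.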

Regulated Workflows And Core Systems

In heavily regulated industries, AI models must not only be accurate but also auditable. Small language models are increasingly being embedded into ERP and core systems to automate workflows while staying within legal and compliance frameworks. Their ability to be narrowly scoped makes them easier to certify and monitor.

Capgemini and SAP’s partnership with Mistral AI illustrates this shift. They are integrating small open-weight models into ERP platforms for clients in defense, energy, and public administration. These deployments streamline reporting and workflow execution while meeting the high bar of regulatory compliance.

Content Moderation And Compliance Filters

For enterprises managing user-generated content or sensitive communications, small language models for Enterprise AI act as effective compliance gateways. They can be fine-tuned to screen outputs for personal data, offensive material, or policy breaches before they reach customers or regulators.

Google’s Gemma 3 shows this in practice. SK Telecom has deployed a fine-tuned Gemma model to handle multilingual content moderation, ensuring safer interactions across its services while keeping compute demands and costs manageable.
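One way to structure such a compliance gateway is to put a cheap deterministic pre-screen in front of, or alongside, the fine-tuned model. The sketch below covers only that rule-based layer. It is not the SLM, and it is not Gemma. It redacts e-mail addresses and card-like digit runs and reports what it found, so a flagged message can be routed to the model or to a human reviewer. The patterns are deliberately simple illustrations and will miss many real personal-data formats.

```python
import re
from dataclasses import dataclass, field

# Illustrative patterns only; real PII detection needs far broader coverage.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

@dataclass
class ScreenResult:
    text: str                                   # text with matches redacted
    findings: list[str] = field(default_factory=list)

    @property
    def flagged(self) -> bool:
        return bool(self.findings)

def screen(text: str) -> ScreenResult:
    """Redact every pattern match and record which categories were found."""
    findings = []
    for label, pattern in PATTERNS.items():
        if pattern.search(text):
            findings.append(label)
            text = pattern.sub(f"[{label.upper()} REDACTED]", text)
    return ScreenResult(text=text, findings=findings)
```

Keeping the deterministic layer separate from the model makes its behavior easy to test and audit. The model can then handle the judgment calls, such as offensive content or policy breaches, that fixed rules cannot express.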

Multilingual And Regional Assistants

Enterprises operating globally need to meet local language and regulatory needs. Small language models make it feasible to build assistants tailored to specific languages and cultural contexts, reducing reliance on generalized models that miss critical nuances.

IBM’s collaboration with Saudi Arabia’s SDAIA provides a real-world proof point. Together, they are training Arabic-focused small language models to power enterprise services in the Middle East, aligning AI deployment with local language requirements and national data policies.

Why Businesses Should Know About Open-Source And Custom Models

The applications above make one thing clear: small language models can deliver real business impact. But how enterprises choose and manage these models is just as important as where they deploy them. The table below highlights the key areas leaders should pay attention to:

| Focus Area | Why It Matters For Businesses |
|---|---|
| Open Source Models Expand Options | Gives enterprises flexible starting points, freedom from vendor lock-in, and the ability to tailor AI systems closely to industry needs. |
| Open Source Is Freedom With Responsibility | Reduces licensing costs and increases transparency but shifts accountability for support, compliance, and governance onto the enterprise. |
| Fine-Tuning Is Smarter Than Starting Over | Saves cost and time compared to training from scratch, while embedding enterprise-specific vocabulary, processes, and customer context. |
| Adoption Requires A Clear Roadmap | Success depends on phasing: start with pilots, fine-tune, build governance, then scale into regulated workflows. |

Challenges Enterprises Face With Small Language Models

Small language models answer many of the cost and control concerns raised by boards, but they are not without limits. Leaders need to know where the pressure points are so they can plan adoption realistically. Below, we look at the main challenges of SLMs and how to address each one.

Balancing Accuracy With Size

The biggest trade-off is performance. A compact model is faster and cheaper to run, but it may miss the depth and nuance of a larger system. That gap can become critical when the use case involves regulatory language, financial data, or medical records.

The way forward is to fine-tune small language models on company data and pair them with retrieval layers. This approach grounds responses in enterprise knowledge and closes much of the accuracy gap without giving up the efficiency benefits.
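In practice, the "fine-tune plus retrieval" pattern often comes down to how the prompt is assembled. The sketch below builds a grounded prompt from passages a retriever has already ranked. It tells the model to cite passage IDs and to decline when the context is insufficient. The template wording, the `(passage_id, text)` format, and the character budget are assumptions, and the call to the model itself is left out.

```python
def build_grounded_prompt(question: str, passages: list[tuple[str, str]], max_chars: int = 4000) -> str:
    """Assemble a prompt that restricts the model to the supplied passages.

    passages: (passage_id, text) pairs, already ranked by a retriever.
    max_chars: crude context budget, since small models often have short context windows.
    """
    context_lines = []
    used = 0
    for pid, text in passages:
        line = f"[{pid}] {text.strip()}"
        if used + len(line) > max_chars:
            break  # keep the highest-ranked passages, drop the rest
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines) if context_lines else "(no approved context found)"
    return (
        "Answer using only the context below. Cite passage IDs in brackets.\n"
        "If the context does not contain the answer, reply: \"I don't know.\"\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

An explicit "I don't know" path is a design choice. It trades some coverage for answers that can be traced back to enterprise sources.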

Integration With Legacy Systems

Enterprises rarely start with a clean slate. Core systems like ERP, CRM, and supply chain platforms are already in place, and plugging in a new model can expose compatibility gaps. Even lightweight small language models for Enterprise AI can struggle if the integration is handled poorly.

The fix is to start small. Run pilots in contained workflows and connect models through tested APIs or middleware before scaling. Treat integration as a staged exercise, not a single rollout, to avoid disrupting existing operations.
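Connecting a model "through tested APIs or middleware" can be as simple as a thin adapter that the legacy system calls instead of calling the model directly. In the sketch below, `ModelClient` is a hypothetical interface standing in for whatever serving endpoint you use. It is not a real library API. The adapter rejects empty or oversized input, enforces a timeout, and falls back to the existing rule-based path if the model fails. That way, the legacy workflow keeps working during a staged rollout.

```python
import concurrent.futures
from typing import Callable, Protocol

class ModelClient(Protocol):
    """Hypothetical interface for a served SLM; not a real library API."""
    def complete(self, prompt: str) -> str: ...

class SLMAdapter:
    def __init__(self, client: ModelClient, fallback: Callable[[str], str],
                 timeout_s: float = 2.0, max_input_chars: int = 2000):
        self.client = client
        self.fallback = fallback              # the existing rule-based logic
        self.timeout_s = timeout_s
        self.max_input_chars = max_input_chars
        self._pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)

    def handle(self, request_text: str) -> tuple[str, str]:
        """Return (answer, source), where source is 'slm' or 'fallback'."""
        if not request_text.strip() or len(request_text) > self.max_input_chars:
            return self.fallback(request_text), "fallback"
        future = self._pool.submit(self.client.complete, request_text)
        try:
            return future.result(timeout=self.timeout_s), "slm"
        except Exception:
            # Timeout or model error: serve the legacy answer instead.
            # Note: a timed-out call keeps running in its worker thread.
            return self.fallback(request_text), "fallback"
```

Returning the source alongside the answer lets you count how often the fallback fires. That count is a useful signal before widening the rollout.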

Governance And Compliance Risks

AI tools don’t get a free pass when regulators come knocking. Even smaller models can produce biased or non-compliant outputs if they are not monitored. In industries like banking, insurance, or healthcare, that risk alone can block adoption.

Enterprises need to build governance into the process, not bolt it on later. Dashboards for monitoring, audit logs, and regular bias checks should be part of every enterprise SLM deployment. That gives boards confidence that the system can hold up under regulatory review.
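Here is a minimal sketch of an audit log. The wrapper appends one JSON line per model call, recording a timestamp, the model version, and SHA-256 hashes of the input and output. Reviewers can later verify what was asked and answered without the log itself storing raw sensitive text. The field names and the choice to hash rather than store text are assumptions, and your retention and privacy policy should decide what to keep. `audited` is a hypothetical helper name.

```python
import hashlib
import json
import time
from typing import Callable, TextIO

def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def audited(model_fn: Callable[[str], str], model_version: str, log: TextIO) -> Callable[[str], str]:
    """Wrap a model call so every request/response pair is appended to a JSON Lines log."""
    def wrapper(prompt: str) -> str:
        output = model_fn(prompt)
        record = {
            "ts": time.time(),
            "model_version": model_version,
            "prompt_sha256": sha256(prompt),
            "output_sha256": sha256(output),
            "output_chars": len(output),
        }
        log.write(json.dumps(record) + "\n")
        log.flush()
        return output
    return wrapper
```

Recording the model version on every line matters later. When a regulator asks about a specific answer, you can tie it to the exact model that produced it.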

Managing Model Updates And Drift

Models age quickly. Custom-trained SLMs today may not reflect tomorrow’s policies, market conditions, or compliance rules. Without a clear plan for updates, enterprises risk running with outdated outputs.

The answer is structured lifecycle management: schedule retraining cycles, track versions, and assign ownership between business and IT. Treat the model like any other enterprise asset: reviewed, maintained, and updated on a regular schedule.
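Lifecycle management can start with something as plain as one registry record per model that names the business and IT owners, the version, and a retraining interval. The sketch below flags models whose scheduled retraining date has passed. The fields and the 90-day default are illustrative assumptions, not a recommended cadence.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class ModelRecord:
    name: str
    version: str
    business_owner: str
    it_owner: str
    last_trained: date
    retrain_every_days: int = 90  # illustrative cadence only

    @property
    def next_retrain(self) -> date:
        return self.last_trained + timedelta(days=self.retrain_every_days)

def overdue(registry: list[ModelRecord], today: date) -> list[str]:
    """Return name@version for every model past its scheduled retraining date."""
    return [f"{m.name}@{m.version}" for m in registry if today > m.next_retrain]
```

Naming both a business owner and an IT owner on the record reflects the shared ownership described above. Policy changes come from the business, while retraining comes from IT.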

How to Implement SLMs in Your Enterprise

Small language models are not switch-on solutions. To get real value, companies need to bring them in gradually, with clear checkpoints that prove they work and keep them under control.

Step 1: Start With A Pilot

Begin with one contained area such as an internal helpdesk, policy search, or HR query bot. This keeps the risk low while giving leadership an early view of accuracy and user adoption. A pilot also produces the first hard numbers that can be taken back to the board.
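Those first hard numbers can come from reviewed pilot interactions plus basic usage counts. Here is a minimal sketch, assuming each interaction is logged with a reviewer's accuracy verdict and whether the user still escalated to a human. The record format and metric names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PilotInteraction:
    correct: bool    # reviewer judged the answer accurate
    escalated: bool  # user still asked for a human

def pilot_summary(interactions: list[PilotInteraction], eligible_users: int, active_users: int) -> dict[str, float]:
    """Accuracy, escalation, and adoption rates for a board-level pilot report."""
    n = len(interactions)
    if n == 0 or eligible_users == 0:
        raise ValueError("need at least one interaction and one eligible user")
    return {
        "accuracy": sum(i.correct for i in interactions) / n,
        "escalation_rate": sum(i.escalated for i in interactions) / n,
        "adoption_rate": active_users / eligible_users,
    }
```

For example, three correct answers and one escalated failure, with 50 of 200 eligible employees using the bot, give 75% accuracy, 25% escalation, and 25% adoption.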

Step 2: Fine-Tune On Enterprise Data

Generic models won’t reflect the language of your business. Fine-tune the model on your manuals, product notes, and process documents. Fine-tuning at this stage raises accuracy and helps ensure that answers follow enterprise rules rather than generic internet knowledge.
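A common first step is turning internal documents into instruction/response pairs in JSON Lines, a format many fine-tuning tools accept. The sketch below assumes subject-matter experts have already extracted question/answer pairs from the documents. The `instruction`/`response`/`source` field names are an assumption, so check the schema your training framework expects. The quality filters are also illustrative.

```python
import json
from typing import Iterable

def to_jsonl(pairs: Iterable[tuple[str, str, str]], min_answer_chars: int = 20) -> str:
    """Convert (question, answer, source_doc) triples to JSON Lines, skipping weak examples."""
    seen = set()
    lines = []
    for question, answer, source in pairs:
        q, a = question.strip(), answer.strip()
        if len(a) < min_answer_chars or q.lower() in seen:
            continue  # drop near-empty answers and duplicate questions
        seen.add(q.lower())
        lines.append(json.dumps({"instruction": q, "response": a, "source": source}, ensure_ascii=False))
    return "\n".join(lines) + ("\n" if lines else "")
```

Keeping a `source` field on every example makes it possible to retrain or remove examples when the underlying document changes.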

Step 3: Build Governance From Day One

No enterprise system should go live without oversight, and an SLM is no exception. Monitoring dashboards, audit logs, and simple bias checks must be active from the start. This protects compliance and makes it easier to explain outputs to auditors or regulators later.
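A "simple bias check" can be a comparison of outcome rates across groups on a reviewed sample of model decisions. The sketch below computes each group's positive-outcome rate and the ratio of the lowest rate to the highest. The 0.8 threshold mirrors the "four-fifths" rule of thumb from US employment-selection guidance. It is used here only as an illustrative default, not as a compliance standard for your domain.

```python
from collections import defaultdict

def outcome_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """records: (group_label, positive_outcome) pairs from reviewed model decisions."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, positive in records:
        totals[group] += 1
        positives[group] += int(positive)
    return {g: positives[g] / totals[g] for g in totals}

def disparity_check(records: list[tuple[str, bool]], threshold: float = 0.8) -> tuple[float, bool]:
    """Return (min_rate / max_rate, passes) across all groups."""
    rates = outcome_rates(records)
    if not rates:
        raise ValueError("no records to check")
    highest = max(rates.values())
    if highest == 0:
        return 1.0, True  # no group received a positive outcome
    ratio = min(rates.values()) / highest
    return ratio, ratio >= threshold
```

A failing ratio is a prompt for human review, not proof of bias. Small samples in particular can swing the ratio widely.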

Step 4: Treat It Like A Living Asset

An SLM is never finished. Policies change, markets shift, and data grows stale. Put lifecycle management in place with scheduled retraining, version control, and clear team ownership, just as you would with an ERP or CRM platform. That discipline keeps the model useful and trusted over time.

Measuring ROI: Cost, Speed, And Business Impact

Once an enterprise has piloted, fine-tuned, and governed a small language model, the next question from the board is simple: what has this delivered? Implementation only matters if it translates into measurable results. A clear ROI framework helps decision-makers see the value in numbers, not just in technology milestones.

| Metric | What To Measure | Why It Matters |
|---|---|---|
| Cost Efficiency | Infrastructure, licensing, and training costs compared with LLMs | Shows savings from adopting cost-effective generative AI models |
| Speed To Value | Time taken from pilot to full production use | Demonstrates agility and the ability to capture quick wins |
| Operational Impact | Reduction in manual work, support tickets, or average handling time | Links SLM deployments to efficiency gains inside the business |
| Governance And Risk | Number of compliance checks passed, audit readiness, policy adherence | Quantifies risk reduction and strengthens trust with regulators |
| Business Outcomes | Improvements in customer satisfaction, decision turnaround, or employee productivity | Connects enterprise SLMs directly to financial performance and growth levers |
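The cost and operational-impact rows come down to the same arithmetic. ROI is (benefit - cost) / cost. Payback period is upfront cost / (monthly savings - monthly run cost). The sketch below shows a worked example with purely hypothetical pilot figures.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Simple ROI over a period: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost

def payback_months(upfront_cost: float, monthly_savings: float, monthly_run_cost: float) -> float:
    """Months until cumulative net savings cover the upfront investment."""
    net = monthly_savings - monthly_run_cost
    if net <= 0:
        return float("inf")  # never pays back at these rates
    return upfront_cost / net

if __name__ == "__main__":
    # Hypothetical pilot figures, for illustration only.
    upfront = 120_000        # integration, fine-tuning, governance setup
    run_cost = 5_000         # monthly serving and monitoring
    ticket_savings = 25_000  # monthly value of deflected support tickets
    print(f"Payback: {payback_months(upfront, ticket_savings, run_cost):.1f} months")
    print(f"12-month ROI: {roi(12 * ticket_savings, upfront + 12 * run_cost):.0%}")
```

With these assumed numbers, the pilot pays back in 6.0 months and returns 67% over the first year. The value of the exercise lies in agreeing on the inputs with finance, not in the formula.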

Up to this point, we’ve looked at the business case for small language models: their ROI, cost advantages, and operational impact. But the conversation shouldn’t stop at efficiency. For enterprises, the real question is how SLMs fit into the broader AI tech stack of the future. This is where modular, or agentic, architectures come in, showing how enterprise SLM deployments and large models can work together as part of a scalable, long-term strategy.

The Role of SLMs in Agentic AI and Modular Architectures

In modern enterprise AI, small language models fit into modular, agentic architectures where different models handle different levels of complexity. This mix ensures coverage across both routine and advanced tasks, while keeping costs and risks under control.

| Model Type | Role in Enterprise AI | Benefits for Business |
|---|---|---|
| Small Language Models (SLMs) | Handle domain-specific, routine, and repeatable tasks such as compliance checks, customer service queries, or policy lookups | Lower cost, faster response, tighter governance, better privacy |
| Large Language Models (LLMs) | Invoked only for complex, cross-domain, or creative reasoning tasks | Broader context, deeper reasoning, multi-domain flexibility |
| Combined / Agentic System | Orchestrates SLMs for most workloads while using LLMs as an escalation layer | Balanced infrastructure use, scalable AI strategy, optimized ROI |
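Here is a minimal sketch of the escalation pattern in the last row. Each request goes to the small model first and escalates to the large model only when the task falls outside the SLM's scope or the SLM reports low confidence. The `slm`/`llm` callables, the confidence score, the intent labels, and the 0.7 threshold are all hypothetical. Real systems might derive confidence from token log-probabilities, a verifier model, or a separate classifier.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class SLMResult:
    answer: str
    confidence: float  # 0..1, however your serving stack estimates it

# Hypothetical intents the SLM has been tuned and approved for.
SLM_SCOPE = {"policy_lookup", "compliance_check", "customer_query"}

def route(intent: str, prompt: str,
          slm: Callable[[str], SLMResult],
          llm: Callable[[str], str],
          min_confidence: float = 0.7) -> tuple[str, str]:
    """Return (answer, model_used). The SLM handles in-scope work; the LLM is the escalation layer."""
    if intent in SLM_SCOPE:
        result = slm(prompt)
        if result.confidence >= min_confidence:
            return result.answer, "slm"
    return llm(prompt), "llm"
```

Logging `model_used` for every request shows what share of traffic the SLM actually absorbs. That share is what drives the cost savings in the ROI table.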

Enterprise SLM deployments require the right architecture and governance. Our AI experts help you overcome these challenges with confidence.

Future Outlook: SLMs for Modern Enterprises

Small language models for Enterprise AI have already shown they can cut costs and make AI easier to manage. Over the next few years, their role inside enterprises will grow well beyond efficiency. Here’s what business leaders should expect.

From Savings To Strategy

Right now, many firms use SLMs mainly to reduce heavy infrastructure spend. That will change. These models will become part of how companies deliver services, run internal processes, and protect compliance. What begins as a cost play will turn into a long-term capability.

Industry-Ready Models

Sectors like finance, healthcare, and energy will see more domain-trained models enter the market. Instead of starting from scratch, enterprises will be able to pick up SLMs already tuned to industry language and regulatory needs. This will shorten adoption cycles and raise accuracy.

Running Side By Side With Large Models

Large models will still have their place, mainly in creative and cross-domain tasks. But for everyday work that needs control, speed, and compliance, smaller models will dominate. The enterprise AI stack will move toward using both in tandem, with SLMs carrying most of the operational load.

In short, businesses should plan for small language models to shift from being an efficiency tool to a core building block of their enterprise AI framework. They are lighter, easier to control, and more practical for the realities leaders face.

Why Appinventiv Is The Right Partner For Leveraging SLMs

We hope this blog has given you a clear picture of how small language models for enterprise AI are reshaping the way businesses think about cost, control, and speed. But moving from awareness to impact requires more than a model download. It calls for a structured adoption process, lifecycle management, and governance, all of which demand an experienced partner at your side.

At Appinventiv, we work closely with enterprises to translate AI strategies into practical outcomes. Our Generative AI services team specializes in building and fine-tuning enterprise SLMs that align with domain-specific needs, whether that means training on internal data, deploying models in secure environments, or setting up monitoring systems that meet audit requirements. With our track record across industries like finance, healthcare, logistics, and retail, we know how to tailor enterprise SLMs to regulated and high-stakes environments.

We don’t just implement models; we also design the full adoption roadmap. From pilot design to fine-tuning, governance frameworks, and SLM lifecycle management, Appinventiv ensures that each stage is handled with measurable ROI in mind. This approach helps leaders see early results while laying the groundwork for scaling AI across business functions with risk kept under control.

For enterprises exploring small language models in Enterprise AI, the next step is not about choosing between hype and hesitation but about choosing the right partner. With our expertise as an AI consulting company dealing with model deployment and enterprise integration, we can help you adopt SLMs with confidence and turn them into a lasting advantage for your business. Get in touch with us to design your adoption roadmap, fine-tune models on your enterprise data, and scale AI securely across your organization.

FAQs

Q. How do small language models compare to large language models in enterprise applications?

A. Small language models for enterprise AI are designed for efficiency, while large language models focus on scale. The two are not direct substitutes but complementary tools:

- Enterprise SLMs: Built for narrow use cases, faster to deploy, easier to govern.

- LLMs: Broader knowledge, suited for open-ended or creative tasks, but costly.

- Many enterprises combine both: using small language models for daily operations and LLMs for innovation or research.

Q. What are the cost benefits of using small language models in enterprise AI?

A. The strongest benefit is financial control. Large models require high cloud spend and specialized hardware, while small language models are cost-effective generative AI models that fit better with enterprise budgets:

- Run efficiently on existing servers or private infrastructure.

- Lower fine-tuning and retraining costs.

- Reduced energy use, lowering both spend and environmental impact.

Q. How can small language models enhance data privacy in AI systems?

A. Data privacy is a key reason many enterprises adopt SLMs. By keeping models inside secure environments, leaders reduce exposure risks:

- Enterprise SLMs can be trained and deployed fully on internal systems.

- Sensitive data can stay behind the firewall, helping meet privacy standards.

- Easier to demonstrate compliance during audits and regulatory checks.

Q. What are the key advantages of small language models over large ones?

A. When leaders compare both approaches, the advantages of enterprise SLMs stand out clearly:

- Lower infrastructure and lifecycle costs.

- Faster rollouts across business functions.

- Narrow outputs that are easier to monitor and align with compliance rules.

- Support for structured SLM lifecycle management, making updates more predictable.

Q. How do small language models impact AI deployment in enterprises?

A. SLM deployments change how AI is introduced into the business. Instead of large, risky rollouts, companies can move step by step:

- Begin with pilots in low-risk areas.

- Fine-tune with enterprise data to ensure relevance.

- Scale gradually across departments, limiting disruption.

- Measure ROI early through cost, speed, and business impact.
