- Why Does Scaling AI Matter for Enterprises?

- Business-level Challenges When Scaling AI in Your Organization

- What are the Key Drivers and Steps for Scaling AI Successfully?

- Key Steps for AI Scaling

- Adding Generative AI to the Scaling Equation

- What makes scaling Generative AI different?

- Opportunity and Risk of GenAI Scaling

- Technologies that make it feasible

- The Cost Side of AI Scaling - Getting the Budgets Right

- How Appinventiv Helps Businesses Scale AI Responsibly & Cost-Effectively?

- Conclusion

- FAQs

Key takeaways:

- Only 11% of enterprises have scaled AI successfully.

- Scaling AI improves productivity, predictions, and personalization.

- Major barriers include data silos, talent gaps, and rising costs.

- Governance and business alignment are critical for success.

- Real-world leaders like JPMorgan and Netflix showcase the impact.

- Generative AI scaling offers big rewards but carries higher risks.

Scaling artificial intelligence is no longer about experimentation; it’s about creating impact at scale. Yet recent findings show that only about 11% of organizations have fully scaled AI across departments, even though those that have scaled their platforms report significant cost savings and operational gains. Meanwhile, an MIT study reveals that 95% of generative AI pilots produce no measurable impact on P&L, largely due to misaligned integration and execution.

From a CTO’s point of view, this presents a dilemma: AI scalability is essential, yet it is filled with pitfalls and unseen costs. To make matters worse, leaders need assurance that their investments will yield real business value without budget overruns or governance failures.

That’s where it becomes important to find a balance. Scalability must be paired with AI project cost optimization, disciplined AI project budgeting, and a proactive AI project cost management process. Cost-effective AI scaling also requires choosing between MLOps and DevOps methodologies based on the complexity of machine learning workflows and infrastructure requirements.

When approached strategically, scaling AI unlocks massive enterprise opportunities, from intelligent automation to predictive insights, and becomes a core differentiator.

Why Does Scaling AI Matter for Enterprises?

For enterprises, scaling AI is no longer a side experiment; it’s fast becoming the biggest differentiator between those who lead industries and those who follow. The advantages of enterprise AI scaling stretch far beyond efficiency; they touch revenue, risk, and resilience at the core of operations.

- Better Productivity: When AI is scaled strategically, repetitive tasks once handled manually are automated across the enterprise. This not only cuts costs but also frees up teams for higher-value work.

- Predictive Power: AI at scale can analyze far larger datasets, uncovering trends before they’re visible in traditional reports. From customer churn to supply chain risks, predictive intelligence gets amplified at scale.

- Hyper-Personalization: Whether it is a retailer customizing product recommendations or a bank giving tailored financial advice, scaled AI makes personalization consistent, adaptive, and cost-effective.

- Competitive Advantage: Enterprises that integrate AI deeply into their operating models respond to change more quickly, innovate in customer engagement, and become more resilient to disruption.

These advantages of scaling AI are already visible in several real-world deployments.

Take productivity, for example. JPMorgan Chase’s COIN platform automated contract reviews that once consumed 360,000 lawyer hours a year, completing the work in seconds. That’s not just cost saved; it’s top legal talent freed up for more strategic work.

Or look at healthcare. HCA Healthcare rolled out its SPOT algorithm across 160 hospitals to detect early signs of sepsis, reducing mortality rates by nearly 23%. That’s AI directly saving lives at enterprise scale.

Customer-facing industries see the impact just as strongly. Netflix attributes about 80% of viewing activity to its recommendation engine. That level of personalization doesn’t just delight viewers – it keeps churn low in one of the most competitive subscription markets.

Risk and compliance are equally transformed. Mastercard uses AI to protect 159 billion+ transactions annually, spotting fraud in milliseconds. For global financial systems, speed and trust aren’t optional; they are essential to survival.

And when it comes to infrastructure resilience, Siemens has deployed AI with Elvia in Norway to predict failures in power grids and increase capacity without costly upgrades. That is AI ensuring uptime in critical national infrastructure.

Put simply, AI-powered scalability is how enterprises boost productivity, sharpen predictions, personalize at scale, and mitigate risks that humans alone can’t manage. For CXOs, the message is clear: scaling AI for enterprises isn’t optional anymore; it’s the differentiator.

Business-level Challenges When Scaling AI in Your Organization

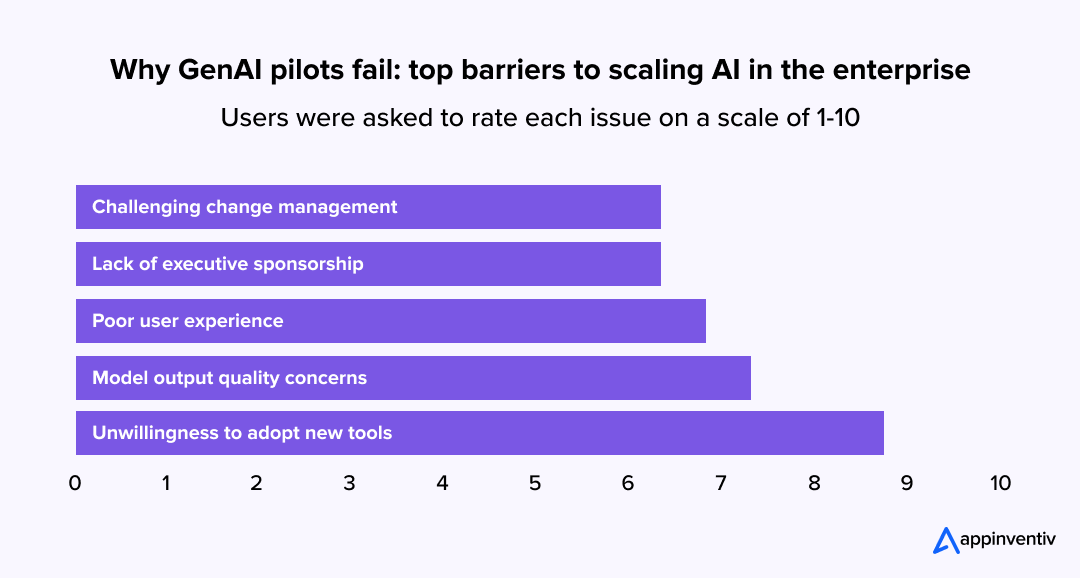

Every business leader loves the promise of AI, but when it comes to scaling AI for enterprises, working through the roadblocks can feel less like innovation and more like firefighting. The reality is that many organizations don’t fail because AI doesn’t work; they struggle because the environment around it is not ready.

Data silos and messy infrastructure

AI thrives on clean, accessible data. In many organizations, data still lives in isolated systems, making enterprise-wide model training incredibly difficult. Businesses have noticed: 54% of companies identify fragmented data systems as a major barrier to AI implementation and scaling.

Talent and skill gaps

AI pilots can get off the ground, but scaling often hits a roadblock: people. Advances in AI are being slowed by a global shortage of experts in deep learning, NLP, and RPA. This isn’t just an HR issue; it’s a strategic bottleneck for the enterprise.

Rising cloud and compute bills

As AI workloads scale, infrastructure costs don’t stay small. Spending on AI compute power, particularly for GPUs and inference pipelines, is rising dramatically. Eight hyperscalers expect a 44% year-over-year increase to US$371 billion in 2025 for AI data centers and computing resources alone. Mismanage this, and your AI scaling becomes a budgeting nightmare.

Shadow AI projects without governance

When departments roll out AI independently, risk multiplies. This “AI sprawl” leads to duplicated work, inconsistent policies, compliance threats, and spiraling costs. This is not innovation but disarray.

In the boardroom, these challenges quickly consolidate into one core issue: AI project cost management, which is a much bigger concern than IT or data science alone. The risks of spiraling costs, compliance gaps, and reputational damage mean AI scaling now sits squarely on the business agenda.

What are the Key Drivers and Steps for Scaling AI Successfully?

Scaling AI in an enterprise is not about adding bigger servers or hiring more data scientists, but about orchestrating multiple levers so that the investment delivers predictable business outcomes. At the business level, the drivers one should care about most are data readiness, infrastructure, governance, business alignment, and the enabling technologies that make AI scalability practical.

Data is the foundation. Models trained on messy, fragmented datasets can’t scale reliably. Without clean pipelines, every additional use case risks months of rework and ballooning costs. Investing upfront in automated, unified data flows ensures models are robust, reduces operational friction, and allows your teams to deliver outcomes faster. This is critical for scaling AI for enterprises successfully.

Infrastructure comes next. Enterprise AI scaling requires cloud and MLOps frameworks that handle deployment, retraining, and monitoring without letting compute bills spiral. Organizations that build standardized pipelines see weeks shaved off rollout times and avoid the unpredictable costs that often derail projects, which is what makes AI-powered scalability achievable.

Governance is equally critical. If there’s one thing we have learned watching companies try to scale AI, it’s this – governance and security aren’t items you work on at the end. The moment your models start making decisions at scale, every weak spot gets amplified. A small AI bias in training data suddenly affects thousands of customers, a missed compliance check can snowball into a regulatory inquiry, and reputational trust? That’s usually the first casualty if things go wrong.

It’s also not just about what your teams build. Most scaled systems lean heavily on outside vendors, APIs, and cloud providers. If those links aren’t managed carefully, you inherit their risks. That is why enterprise AI scaling works only when you treat governance as an ongoing discipline – something incorporated into every stage, from design to deployment to monitoring. Do that, and you don’t just keep regulators satisfied. You also give your board, investors, and customers a reason to believe your AI scalability strategy will last, instead of becoming another flashy pilot that collapses under pressure.

Business alignment is what separates successful initiatives from failed experiments. Projects that are tied directly to revenue growth, cost reduction, or operational efficiency are ultimately more likely to earn board approval and continue scaling. When your AI roadmap gets linked to measurable outcomes, budget approvals and stakeholder buy-in come naturally, enabling cost-effective AI projects.

Finally, the right enabling technologies form the ecosystem that makes scaling practical. MLOps platforms streamline model operations, AutoML accelerates iteration, GPUs and vector databases handle large-scale data efficiently, and generative AI infrastructure allows predictive or creative workloads to scale. Together, these technologies underpin best practices to scale AI while keeping timelines and costs under control.

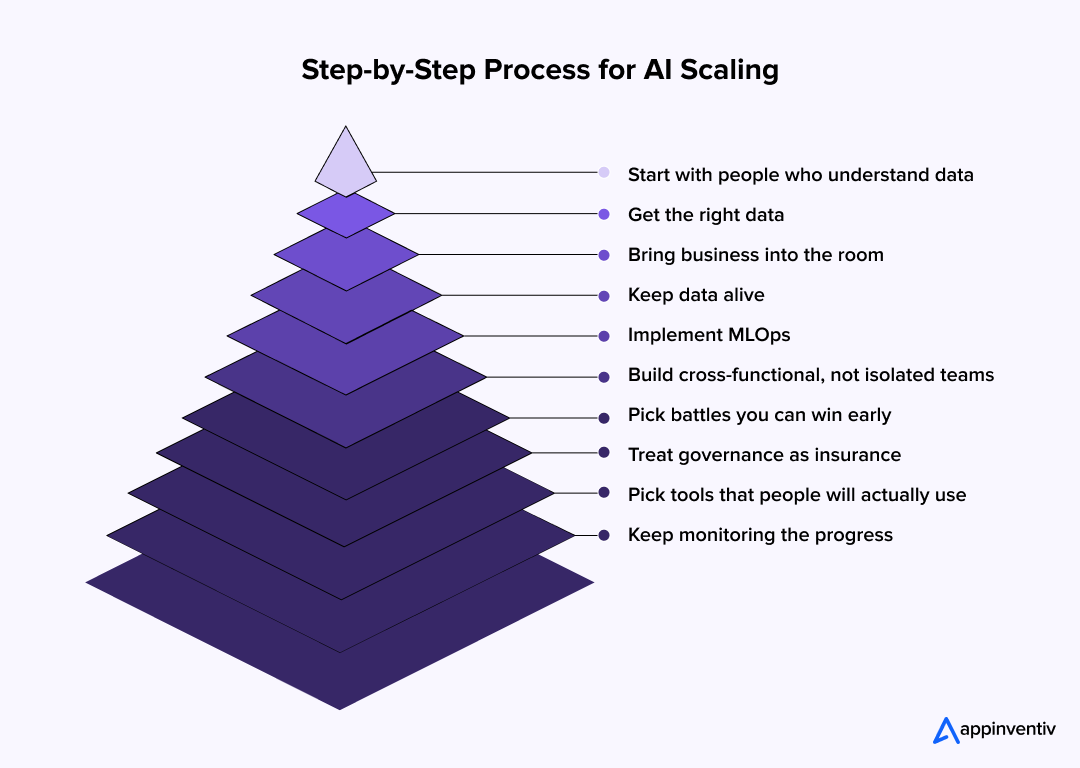

Key Steps for AI Scaling

With these elements covered, the next point of action is getting all of this together. Here are the key steps that can make your AI scaling journey easier.

Step 1: Start with people who understand data

Every AI journey begins with the right expertise. A strong data science team, made up of people who actually know how to design and train models for your business, will save you from expensive detours. APIs and large language models are critical for success too, but you still need the right people to build on them.

Step 2: Get the right data

One of the easiest mistakes is assuming more data equals better results. What you really need is accurate, relevant information. That usually starts inside your own systems: customer interactions, transactions, operations data. Once you have that covered, look to external sources such as market studies, research reports, and trend data. If the quality isn’t there, scaling will only multiply the errors.

Step 3: Bring business into the room

AI fails when it’s treated as an IT-only project. Your finance, legal, customer service, and operations teams know the weak spots that matter. If they are not involved from the start, you’ll spend months building something clever that no one actually trusts or uses.

Step 4: Keep data alive

Data is not static. Formats change, regulations shift, customers behave differently. If your data pipelines cannot refresh and adapt, your models will get stale fast. Think of data as an asset that needs ongoing care, not a one-time clean-up exercise.

Step 5: Implement MLOps

We have seen enterprises spend months chasing the “perfect” MLOps stack, only to stall. What matters is whether your teams can actually use it and whether it fits your cloud and IT environment. A lean, well-matched MLOps setup keeps your deployments routine and costs contained.

Step 6: Build cross-functional, not isolated teams

The most effective AI projects are not run by just data scientists. They pull in IT, operations, compliance, and business units. This mix keeps models grounded in reality, avoids duplication, and ensures adoption when the system finally goes live.

Step 7: Pick battles you can win early

A common trap is trying to scale the hardest use case first. It almost always backfires. Go after projects that are easier to deliver but still make a visible difference, like customer support bots, employee productivity tools, or fraud detection tweaks. Those wins build credibility and buy you room for larger bets later.

Step 8: Treat governance as insurance

Compliance and ethics can’t be bolted on at the end. When your regulators or customers ask how your AI makes decisions, you will need to give them answers in near real time. Having reporting and governance built in from the start can help protect you from reputational damage and future fines.

Step 9: Pick tools that people will actually use

The best platforms are often the simplest. Cloud-based data science environments let engineers and business teams experiment side by side. When the tools are easy to collaborate on, you scale faster and with fewer handoffs.

Step 10: Keep monitoring the progress

AI isn’t fire-and-forget. Models drift, costs creep, and users change how they interact with systems. Set up real-time monitoring for performance, cost, and actual user impact. It’s the difference between quietly optimizing and waking up to a very public failure.
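One way to make drift monitoring concrete is the Population Stability Index (PSI), which compares how a feature or model score was distributed at training time against what production sees today. The sketch below is a minimal illustration, not a production monitor: the function name, the ten-bucket default, and the sample data are all assumptions made for the example.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical model scores: training-time baseline vs. a shifted production sample.
baseline_scores = [i / 100 for i in range(100)]
production_scores = [min(s + 0.3, 0.99) for s in baseline_scores]
drift = population_stability_index(baseline_scores, production_scores)
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a shift worth investigating, but the right alert threshold depends on the feature and should be tuned against your own history.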

For CXOs, the question isn’t whether you can scale AI – it’s whether you can scale it safely, cost-effectively, and in alignment with business priorities. Those who master these key drivers for scaling AI turn the technology from an experimental pilot into a strategic, revenue-generating asset that can achieve AI project cost optimization and unlock the full advantages of scaling AI.

Adding Generative AI to the Scaling Equation

While predictive AI helps enterprises analyze, optimize, and automate, generative AI scalability introduces a new set of opportunities and risks – at scale. CXOs increasingly see this not as a side experiment but as a boardroom priority, because generative models (like large language models and diffusion systems) touch knowledge, creativity, and customer experience directly.

What makes scaling Generative AI different?

Unlike your traditional ML systems, generative models need vast amounts of compute, fine-tuning, and data governance. Training or adapting a large language model requires significant GPU capacity and highly curated datasets. Even with AI-powered scalability, the cost curve can steepen quickly if enterprises don’t employ techniques like retrieval-augmented generation (RAG) or parameter-efficient tuning to control spend.
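To see why RAG helps control spend, it is useful to look at its shape: instead of retraining a model on company knowledge, you retrieve the few most relevant documents at query time and pass them in as context. The sketch below is a deliberately simplified illustration. It uses word counts as a toy stand-in for a real embedding model, and the knowledge base entries, function names, and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the model's answer in retrieved context instead of retraining it."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical internal knowledge snippets.
KNOWLEDGE_BASE = [
    "Refund requests are processed within 5 business days.",
    "GPU clusters are billed per hour of reserved capacity.",
    "Employees accrue vacation days monthly.",
]

prompt = build_prompt("How are GPU clusters billed?", KNOWLEDGE_BASE, k=1)
```

In a real deployment the `embed` function would call an embedding model and the documents would sit in a vector database, but the retrieve-then-prompt flow stays the same.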

Opportunity and Risk of GenAI Scaling

When deployed responsibly, scaled GenAI enables knowledge management across the enterprise, personalized content generation at speed, AI-assisted software development, and adaptive customer engagement. For many industries, from financial services to healthcare and retail, this isn’t about novelty; it’s about creating new operating leverage.

On the risk side, generative models scale their flaws: bias, hallucinations, and intellectual property concerns become board-level risks once outputs reach customers or regulators. Without robust guardrails, content moderation, and embedded governance, enterprises can find themselves exposed. This is where enterprise AI scaling frameworks need to adapt by adding policies and oversight unique to generative models.

Technologies that make it feasible

Scaling GenAI responsibly requires more than raw compute. Vector databases improve knowledge retrieval, cloud-native hosting (Azure OpenAI, Anthropic, AWS Bedrock) streamlines deployments, and hybrid infrastructure allows sensitive data to stay on-premise while still tapping into modern foundation models.

Generative AI can transform how enterprises create, communicate, and compete. But scaling it through generative AI development services is not a copy-paste of other AI projects. It requires cost discipline, governance by design, and the right technical ecosystem. Done well, scaling AI for enterprises shifts the organization from reactive efficiency to proactive innovation.

The Cost Side of AI Scaling – Getting the Budgets Right

Scaling AI for enterprises doesn’t mean writing a blank check – it means making deliberate, informed trade-offs so your investment delivers measurable business outcomes. For businesses, the challenge isn’t just technical execution; it’s AI project budgeting and AI project cost management at scale.

Here’s a clear picture of the major cost levers and trade-offs of AI development services that are built with a focus on scalability.

| Decision Area | Option | Cost and Timeline | What’s Included | Business Implications |
|---|---|---|---|---|
| Build vs Buy | Build: in-house AI development | $500k to $2M; 6 to 12 months | Model design, data collection & cleaning, training infrastructure, talent hiring | Full control, custom solutions, higher staffing & talent demands |
| Build vs Buy | Buy / partner: pre-built AI platforms / consulting | $150k to $600k; 2 to 4 months | Vendor platforms, integration, bias testing, limited customization | Access to frameworks for scaling, faster deployment, less control |
| Pilot vs Scale | Pilot (PoC) | $50k to $150k; 4 to 8 weeks | Small datasets, single model, limited business unit, initial bias testing | Test viability, minimal investment, low risk |
| Pilot vs Scale | Scale | $600k to $3M+; 6 to 12 months | Enterprise-wide deployment, multiple models & business units, monitoring, retraining, compliance & bias checks | High exposure, requires governance and MLOps, potential for exponential cost if unchecked |
| Infrastructure vs Innovation | Heavy cloud/GPU investment | $200k to $800k; ongoing | High-performance servers/GPUs, dedicated MLOps pipelines, real-time monitoring, disaster recovery | Speeds model deployment, risk of overspending if usage isn’t optimized |
| Infrastructure vs Innovation | Lean + MLOps | $100k to $300k; 3 to 6 months | Standardized pipelines, automated retraining, resource optimization, integrated monitoring | Reduces AI development cost, supports repeatable model deployment, reduces operational waste |

Strategies for cost-effective AI project scaling:

- Automate model retraining to avoid repeated manual effort.

- Reuse high-quality datasets across projects.

- Prioritize projects with the highest ROI per dollar spent.

- Combine AI project cost management frameworks with MLOps monitoring.
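Prioritizing by ROI per dollar can be sketched as a simple ranking exercise. The example below is one possible approach under stated assumptions: the `Project` fields, the greedy selection rule, and every dollar figure are hypothetical, and a real portfolio decision would also weigh risk, dependencies, and strategic fit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Project:
    name: str
    cost: float             # estimated spend, USD
    expected_return: float  # estimated annual benefit, USD

    @property
    def roi_per_dollar(self) -> float:
        """Net return generated by each dollar spent."""
        return (self.expected_return - self.cost) / self.cost


def prioritize(projects: list[Project], budget: float) -> list[Project]:
    """Greedily fund the highest ROI-per-dollar projects that still fit the budget."""
    funded, remaining = [], budget
    for p in sorted(projects, key=lambda p: p.roi_per_dollar, reverse=True):
        if p.cost <= remaining:
            funded.append(p)
            remaining -= p.cost
    return funded


# Hypothetical candidate projects and budget.
candidates = [
    Project("enterprise_rollout", cost=600_000, expected_return=900_000),
    Project("support_bot", cost=100_000, expected_return=300_000),
    Project("fraud_tuning", cost=150_000, expected_return=375_000),
]
funded = prioritize(candidates, budget=300_000)
```

A greedy pass like this is easy to explain to a budget committee, though it is not guaranteed to find the best possible combination when project costs vary widely.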

Timeline considerations.

- Pilots: 4 to 8 weeks, low cost.

- Partial deployment: 2 to 4 months, moderate cost, early ROI tracking.

- Full enterprise scaling: 6 to 12 months, high cost, requires governance and infrastructure planning.

Bottom Line for Businesses

Successfully scaling AI requires equal parts financial and technical discipline. Through structured budgeting, AI project cost optimization, and smart trade-offs, AI can move from being a risky experiment to a sustainable, cost-effective AI project that drives measurable enterprise value.

How Appinventiv Helps Businesses Scale AI Responsibly & Cost-Effectively?

Scaling AI at an enterprise level is not just about technology but also about ensuring outcomes are reliable, budgets are sustainable, and governance keeps you ahead of regulators and stakeholders. At Appinventiv, we act as more than an implementation partner; we are an AI consulting services company trusted by global leaders to deliver AI services and solutions that scale responsibly.

What sets us apart is our ability to align AI project cost optimization with real-world complexity:

- Financial Services: We worked with a leading bank to expand its risk scoring engine. The challenge wasn’t accuracy but scaling from a small PoC to handling millions of loan applications without cloud costs spiraling. By redesigning pipelines with lean MLOps and reusable components, we lowered our client’s compute spend by over 35% while still achieving regulatory-level reliability.

- Startup: We assisted a startup with building an AI agent that would access real-time data analytics and expert market insights and use them to offer professional consulting to SMBs. Our work included building an AI system architecture with a multi-agent system, a RAG pipeline, an agentic framework, and an execution framework.

- Healthcare: We partnered with a top healthtech provider to roll out diagnostic AI across their hospital units. The project complexity lay in unifying fragmented datasets. With automated data cleaning and governance frameworks, we cut integration timelines by 40% and improved trust in model outputs.

- Restaurant: We helped Americana chain restaurants turn their fragmented last-mile operations into a unified data-driven engine that scales effortlessly across geographies and industries. Our team created a platform that could think fast, move faster, and offer complete control to last-mile operators with the help of functionalities like automated ETL, geospatial data processing, dynamic optimization, and actionable dashboards.

In each of these cases, the results were measurable: shorter timelines, lower operational costs, and sustainable adoption at scale. This is what enterprise AI scaling looks like when done with discipline.

For businesses, the choice is clear: scaling AI is too big to leave to chance. With Appinventiv, you get a partner who understands not just the algorithms, but also the governance, infrastructure, and cost levers that turn AI from pilot experiments into cost-effective AI projects.

Ready to scale AI with confidence? Partner with Appinventiv and build AI systems that grow responsibly – without overspending.

Conclusion

For businesses, scaling AI is no longer about experimenting with models; it’s about making calls that directly affect competitiveness, compliance, and shareholder trust. The decision to expand is both technical and strategic. Leaders who balance scalability, cost, and corporate governance today set the foundation for their industries tomorrow.

The real advantage of scaling AI lies in creating sustainable, cost-effective AI projects that deliver value without extensive resources. This requires discipline – clear AI project budgeting, strong oversight, and deliberate choices about when to accelerate and when to consolidate.

Some enterprises will focus on expanding through AI project scaling across departments, while others will prioritize efficiency through tighter AI project cost optimization. In both cases, success comes from treating scaling as a business transformation, not just another IT rollout.

What’s clear is that enterprise AI scaling will define the next wave of market leaders. Those who adopt structured approaches now, blending financial discipline with technologies such as MLOps, AutoML, and generative AI infrastructure, will gain resilience and agility that competitors can’t easily replicate.

FAQs

Q. What does AI powered scalability mean for enterprise AI projects?

A. AI powered scalability means growing AI systems efficiently without a proportional increase in cost or complexity.

For enterprises, this involves using modular architectures, automated resource allocation, and monitoring tools that adapt to workload changes. An effective AI project management scaling solution helps teams scale models, data pipelines, and infrastructure in a controlled way, ensuring performance remains stable as usage expands.

Q. How does Appinventiv help businesses scale AI responsibly and cost-effectively?

Q. What solutions help companies forecast AI infrastructure costs before scaling up in production?

A. Companies forecast AI infrastructure costs using simulation tools, usage modeling, and historical performance data.

These solutions estimate compute, storage, and deployment costs before production scaling begins. By combining cost dashboards with predictive analytics, enterprises can plan budgets more accurately. This approach supports smarter decisions within an AI project management scaling solution and reduces the risk of unexpected cost spikes.
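As a rough illustration of usage modeling, the sketch below projects monthly spend from a fixed platform cost plus a per-request cost, with request volume growing at a steady rate. The function, its parameters, and the sample figures are assumptions made for the example rather than real pricing, and a real forecast would be calibrated against your own historical usage.

```python
def forecast_monthly_costs(
    requests_per_month: float,
    cost_per_1k_requests: float,
    fixed_monthly_cost: float,
    monthly_growth: float,
    months: int,
) -> list[float]:
    """Project spend per month, assuming request volume compounds at a fixed rate."""
    costs = []
    volume = requests_per_month
    for _ in range(months):
        variable = volume / 1000 * cost_per_1k_requests
        costs.append(round(fixed_monthly_cost + variable, 2))
        volume *= 1 + monthly_growth  # next month's volume
    return costs


# Hypothetical inputs: 1M requests/month, $2 per 1k requests,
# $5,000 fixed platform cost, 10% monthly growth, 3-month horizon.
projection = forecast_monthly_costs(
    requests_per_month=1_000_000,
    cost_per_1k_requests=2.0,
    fixed_monthly_cost=5_000,
    monthly_growth=0.10,
    months=3,
)
```

Running the same function with optimistic and pessimistic growth rates gives a budget range rather than a single number, which is usually easier to defend in planning discussions.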

Q. What are the most reliable AI systems for cost-effective scaling?

A. The most reliable AI systems for cost-effective scaling are those designed for modular growth and continuous optimization.

Systems that support containerization, automated scaling, and performance monitoring allow enterprises to expand incrementally. These capabilities make it easier to control spending while maintaining reliability. When paired with AI powered scalability practices, enterprises can scale AI workloads without overcommitting resources.

Q. What are the best ways to scale AI operations cost-effectively?

A. The best ways to scale AI operations cost-effectively focus on gradual expansion and constant cost visibility.

Enterprises start with pilots, optimize models before scaling, and automate infrastructure management. Regular performance reviews help teams eliminate waste early. Using a structured AI project management scaling solution ensures AI growth aligns with business value rather than unchecked experimentation.
