- Why AI Gigafactories Are Important for Enterprises & How They Differ From Traditional AI Infrastructure

- Why They Matter Now

- How Gigafactories Differ From Traditional AI Labs or Data Centers

- The EU AI Gigafactories Initiative: Vision, Funding, and Key Objectives

- A Focused Investment in Europe’s AI Future

- Clear Demand From Industry

- Key Objectives of the AI Gigafactories Initiative

- Why AI Gigafactories Change Enterprise AI Pipelines: Real World Advantages

- 1. Faster Training Cycles for Large and Complex Models

- 2. Reliable Access to High-Performance Compute

- 3. Built for Compliance and European Data Regulations

- 4. Support for Next-Generation AI Architectures

- 5. Integrated Access to AI Research and Development Hubs

- 6. Better Cost Efficiency at Scale

- 7. A More Sustainable Path for High-Compute AI

- 8. A Stable Foundation for Long-Term AI Roadmaps

- Sector-Specific Use Cases of AI Gigafactories

- Healthcare

- Financial Services

- Manufacturing

- Retail

- Energy & Utilities

- Public Sector

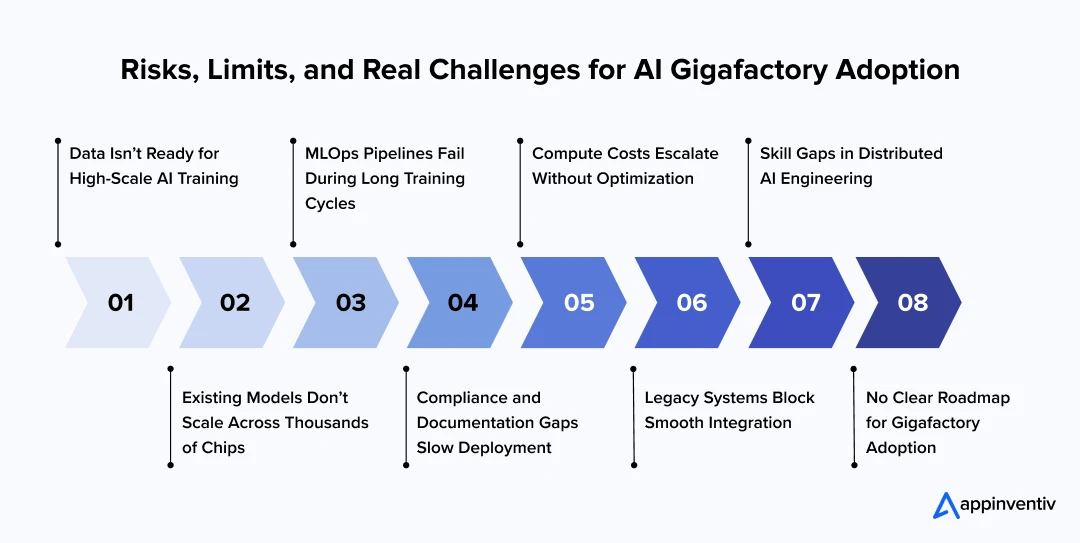

- Risks, Limits, and Real Challenges Enterprises Should Prepare For

- 1. Challenge: Data Isn’t Ready for High-Scale AI Training

- 2. Challenge: Existing Models Don’t Scale Across Thousands of Chips

- 3. Challenge: MLOps Pipelines Fail During Long Training Cycles

- 4. Challenge: Compliance and Documentation Gaps Slow Deployment

- 5. Challenge: Compute Costs Escalate Without Optimization

- 6. Challenge: Legacy Systems Block Smooth Integration

- 7. Challenge: Skill Gaps in Distributed AI Engineering

- 8. Challenge: No Clear Roadmap for Gigafactory Adoption

- How to Prepare Your Business for the Gigafactory Era: A Complete Enterprise Roadmap (2025–2027)

- Phase 1: Establish the Foundations (2025)

- Phase 2: Start Scaling AI Workloads (2026)

- Phase 3: Enterprise-Wide Acceleration (2027 and Beyond)

- How to Plan Your Project Timeline Strategy

- Strategic Timeline Considerations

- Looking Forward: The Future of AI Infrastructure

- Why Partner With Appinventiv to Become Gigafactory-Ready

- FAQs

- AI Gigafactories give enterprises access to far more compute than standard cloud setups, making it possible to train larger and more complex models without long wait times.

- Many enterprises still struggle with old systems, scattered data, and weak MLOps pipelines. These issues must be fixed early to get real value from high-capacity AI environments.

- Companies that prepare in advance, by auditing their systems, improving data flow, modernizing architecture, and building strong engineering practices, are better positioned to adopt Gigafactory-level AI.

- Getting “Gigafactory-ready” requires a clear plan. This includes reviewing current workloads, upgrading pipelines, improving governance, and choosing the right technical partners to guide large-scale AI adoption.

Most enterprises today want to scale AI, but they run into the same obstacles: not enough compute power, high GPU costs, slow training cycles, and scattered data systems that can’t support advanced models. As AI models grow into the trillion-parameter range, the gap between what enterprises want to build and what their infrastructure can handle is getting wider.

This gap is now visible across the market. In 2024 alone, global spending on AI infrastructure grew by 38%, while GPU wait times for enterprise AI teams stretched from weeks to months. Even Fortune 500 companies admit that infrastructure is their biggest barrier to AI adoption.

This is where AI Gigafactories enter the picture. These facilities give enterprises access to massive, shared compute power, far beyond what any single organization can afford to build. With around 100,000 AI chips per Gigafactory and full integration into Europe’s high-performance computing network, they offer the speed, scale, and reliability needed to train and deploy next-generation AI systems. For enterprises that need faster model development, stronger compliance, and cost-efficient scaling, AI Gigafactories change the game entirely.

But simply accessing this compute isn’t enough. To get real value, enterprises need the right engineering foundation, data pipelines, governance models, and AI systems that can make proper use of Gigafactory-grade infrastructure. That’s where an AI development company like Appinventiv plays a crucial role.

We help enterprises become “Gigafactory-ready” by aligning their data, architecture, and AI workloads with high-performance training environments. From advanced MLOps to distributed model training, regulatory compliance, and AI lifecycle management, we ensure enterprises can use Gigafactory compute effectively, safely, and without waste.

In a market where AI adoption is growing at breakneck speed, the companies that prepare early will outpace competitors, not by a small margin, but by entire product cycles. AI Gigafactories provide the computational muscle. Appinventiv provides the engineering backbone that turns that power into business outcomes.

With the EU investing €20B+ in Gigafactory infrastructure and AI models doubling in size every 6–9 months, only organizations with the right foundations will keep pace.

Why AI Gigafactories Are Important for Enterprises & How They Differ From Traditional AI Infrastructure

Most AI systems today are trained in regular data centers that were never designed for the kind of models enterprises now want to build. These facilities work well for standard cloud workloads, but they struggle with:

- Long model training times

- GPU shortages

- High operating costs

- Limited support for trillion-parameter architectures

This is why AI Gigafactories are becoming essential in the next phase of AI growth. Think of them as Europe’s new backbone for AI supercomputing, combining:

- Around 100,000 AI chips in a single site

- High-bandwidth networks for distributed training

- Petabyte-level storage grids

- Real-time data movement pipelines

- On-site AI research and development hubs

- Direct integration with EuroHPC supercomputers

While traditional AI factories may support around 25,000 AI processors, European AI Gigafactories are planned to host about four times as many. This unlocks capabilities that simply cannot be achieved within standard enterprise infrastructure.

Why They Matter Now

The size of modern AI models has exploded. Models that once required a few hundred GPUs now demand tens of thousands. Enterprises cannot keep scaling their internal compute at this pace; it’s too slow, too expensive, and too complex. AI Gigafactories in Europe aim to solve this by giving enterprises shared access to:

- Large-scale AI model training facilities

- Faster experimentation and retraining cycles

- Infrastructure that meets European requirements such as GDPR and data sovereignty rules

- Specialized hardware optimized for deep learning and generative AI

With the EU planning billions in investment under the EU AI Gigafactories initiative, these facilities are becoming the core of next-generation AI infrastructure in Europe.

How Gigafactories Differ From Traditional AI Labs or Data Centers

| Component | Traditional Data Center | AI Gigafactory |
|---|---|---|
| Primary Purpose | General compute workloads | Training large AI models at extreme scale |
| AI Chips | 1,000–25,000 GPUs | ~100,000 next-gen AI chips |
| Networking | Standard cloud networking | High-bandwidth interconnects for distributed training |
| Storage | Mixed workloads | High-speed, AI-first storage grids |
| Compliance | Varies | Built for EU AI Act and data sovereignty |
| Integration | Limited | Deep integration with EuroHPC supercomputers |
| R&D Support | Minimal | Dedicated AI research and development hubs |

For enterprises, this shift means they can finally build, train, and deploy AI systems without being limited by infrastructure constraints.

The EU AI Gigafactories Initiative: Vision, Funding, and Key Objectives

Europe is building AI Gigafactories to solve a growing problem: enterprises need far more compute power than traditional data centers or cloud setups can support. To close this gap, the EU has launched a coordinated effort to create high-capacity facilities that offer shared access to advanced AI infrastructure across member states.

A Focused Investment in Europe’s AI Future

Through the InvestAI strategy, the EU plans to direct more than €20 billion toward building Gigafactories equipped with:

- Next-generation AI chips

- High-bandwidth networks

- Large-scale AI model training facilities

- Integrated AI research and development hubs

- Connectivity to EuroHPC supercomputers

This gives European enterprises access to infrastructure that was previously limited to global hyperscalers.

Clear Demand From Industry

Interest has been strong: 16 member states have submitted proposals, and organizations have expressed willingness to deploy millions of specialized AI processors across proposed Gigafactory sites. The message is simple: enterprises cannot scale modern AI without this level of infrastructure.

Key Objectives of the AI Gigafactories Initiative

The EU’s strategy centers on four core goals that matter directly to enterprise leaders:

- Remove Compute Bottlenecks: Gigafactories allow enterprises to train large models faster, more affordably, and at a scale that typical cloud environments can’t match.

- Build a Secure, Compliant AI Ecosystem: Facilities are designed with European data residency, cybersecurity, and EU AI Act compliance built in, reducing regulatory risk for enterprises.

- Support Industry Innovation: By pairing high-performance compute with AI research and development hubs, the EU aims to accelerate breakthroughs in healthcare, finance, manufacturing, and public services.

- Ensure Sustainable AI Growth: Gigafactories will follow sustainable energy planning for AI data centers, using renewable power and efficient cooling to manage their ~1GW energy footprint.

The intended result of the EU AI Gigafactories initiative is simple: faster innovation, lower costs, fewer infrastructure headaches, and a clear path to train models that would otherwise be out of reach.

Why AI Gigafactories Change Enterprise AI Pipelines: Real World Advantages

Enterprises are building larger and more complex AI systems than ever before, but most existing infrastructure wasn’t designed for this scale. As models grow into the billions and trillions of parameters, traditional cloud setups and on-prem GPU clusters begin to hit limits, both in terms of speed and cost. AI Gigafactories solve many of these pain points by providing purpose-built environments for high-volume model development and training. Below are the core ways AI Gigafactories reshape enterprise AI workflows.

1. Faster Training Cycles for Large and Complex Models

Enterprises working on domain-specific LLMs, multimodal systems, deep forecasting models, or simulation-heavy workloads face long training timelines. AI Gigafactories reduce this drastically by offering:

- Large pools of next-gen AI chips

- High-bandwidth interconnects

- Distributed training-friendly architecture

This allows teams to move from one experiment to the next much faster, improving both accuracy and development speed.
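To put "faster training cycles" into rough numbers, the sketch below uses the widely cited approximation that training a dense transformer takes about 6 * N * D floating-point operations (N parameters, D training tokens). The model size, token count, per-chip throughput, and utilization in the example are hypothetical placeholders. The function also assumes perfectly linear scaling, so treat its output as an order-of-magnitude estimate rather than a benchmark.

```python
# Back-of-envelope training time using the common approximation that a dense
# transformer needs about 6 * N * D floating-point operations to train,
# where N is the parameter count and D is the number of training tokens.
SECONDS_PER_DAY = 86_400


def estimate_training_days(params: float, tokens: float, num_chips: int,
                           peak_flops_per_chip: float, utilization: float) -> float:
    """Estimated wall-clock days for one training run.

    `utilization` is the assumed fraction of peak throughput actually achieved.
    The estimate assumes perfectly linear scaling across chips, which real
    clusters never fully reach because of communication overhead.
    """
    if num_chips < 1:
        raise ValueError("num_chips must be at least 1")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_flops = 6 * params * tokens
    cluster_flops_per_second = num_chips * peak_flops_per_chip * utilization
    return total_flops / cluster_flops_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical inputs: a 70B-parameter model, 1.4T tokens, chips rated at
    # 1e15 FLOP/s, and 40% utilization, on 1,000 chips versus 25,000 chips.
    for chips in (1_000, 25_000):
        days = estimate_training_days(70e9, 1.4e12, chips, 1e15, 0.4)
        print(f"{chips:>6} chips: ~{days:.1f} days")
```

With these placeholder inputs, the estimate drops from roughly 17 days on 1,000 chips to well under a day on 25,000 chips. Real speedups are smaller, because communication overhead grows with cluster size.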

2. Reliable Access to High-Performance Compute

GPU shortages and long provisioning queues slow enterprise AI adoption. Gigafactories provide:

- Predictable access to compute

- Dedicated capacity for long-running training

- Infrastructure that supports resource-heavy projects

For enterprises with ongoing AI initiatives, this reliability ensures smoother execution of multi-quarter AI roadmaps.

3. Built for Compliance and European Data Regulations

Enterprises in finance, healthcare, logistics, mobility, and the public sector face strict data usage and model transparency rules. AI Gigafactories are designed with:

- EU AI Act alignment

- Data residency controls

- Secure multi-tenant isolation

- Auditable model training workflows

This reduces compliance overhead and creates a safer path to scaling AI in regulated environments.

4. Support for Next-Generation AI Architectures

Modern AI systems, especially foundation models, depend on distributed compute, parallel processing, high-speed data exchange and large memory clusters. These requirements strain traditional cloud infrastructure. AI Gigafactories are optimized to run:

- Trillion-parameter models

- Large-scale adapter training

- Fine-tuning across massive datasets

- High-volume simulation workloads

This lets enterprises explore model sizes and architectures that were previously impractical.

5. Integrated Access to AI Research and Development Hubs

Unlike standard data centers, Gigafactories connect directly with university research labs, national HPC centers, sector-focused AI innovation hubs, and EuroHPC supercomputing networks. This ecosystem speeds up algorithmic improvements, benchmarking, and experimentation, giving enterprises earlier access to emerging techniques.

6. Better Cost Efficiency at Scale

Traditional cloud setups become expensive when training large models repeatedly. AI Gigafactories improve cost efficiency through:

- Shared infrastructure

- High utilization of specialized hardware

- Optimized parallel training environments

- Lower cost per experiment

This helps enterprises justify more ambitious AI use cases while maintaining predictable budgets.

7. A More Sustainable Path for High-Compute AI

Gigafactories are designed with sustainability in mind. They include renewable energy integration, efficient cooling systems, energy-aware scheduling and long-term sustainable energy planning for AI data centers. For enterprises with ESG goals, this provides a greener way to scale AI operations without building energy-intensive infrastructure on their own.

8. A Stable Foundation for Long-Term AI Roadmaps

AI Gigafactories give enterprises the confidence to:

- Develop multi-year model strategies

- Plan for bigger and more complex AI systems

- Expand into advanced AI use cases

- Support higher model volumes and workloads across teams

This stability is something most companies cannot achieve through traditional cloud setups alone.

Sector-Specific Use Cases of AI Gigafactories

AI Gigafactories give different industries a chance to work with far larger models, richer datasets, and faster training cycles than they can manage on their own. Here’s a practical look at what this means sector by sector, and what enterprises should sort out internally before tapping into such environments.

Healthcare

Hospitals and research teams deal with enormous volumes of medical images, lab histories, prescriptions, and admission records. Training diagnostic or forecasting models on this kind of data demands serious compute, especially when accuracy can literally save lives.

Gigafactory-scale environments make it possible to run bigger models, test ideas faster, and improve clinical AI systems without waiting days for each training cycle.

What should organizations prepare?

Make sure patient data is structured, cleaned, and governed properly. Strong pipelines and dependable MLOps are essential because high-volume AI training leaves no room for messy workflows.


Financial Services

Banks rely on AI for fraud alerts, credit decisions, investment forecasting, and stress-test simulations. These workloads grow heavier every year, fueled by rising transaction counts and tightening regulations. A Gigafactory gives financial institutions the compute they need to train and retrain risk models quickly, something that’s tough to do in regular cloud setups.

What should organizations prepare?

Clear audit trails, secure data flows, and model architectures that can scale across large clusters. Financial teams need both performance and traceability.

Manufacturing

Factories generate data nonstop: sensor readings, machine logs, inspection videos, and supply chain metrics. Training digital twins or predictive maintenance models on such data requires compute far beyond typical enterprise clusters. Gigafactories allow manufacturing teams to train complex simulations and spot failures before they happen.

What should organizations prepare?

A consistent method to collect and organize factory-floor data, plus training pipelines that can handle simulations and distributed workloads without breaking halfway through.

Retail

Retail businesses depend on fast and accurate models for demand planning, route optimization, real-time recommendations, and personalized shopping. These models need frequent updates because customer behavior shifts quickly. Gigafactory-level compute shortens training time and helps retailers experiment with richer algorithms.

What should organizations prepare?

Unify data from online stores, CRM systems, POS devices, and supply chain partners. Clean inputs and a flexible MLOps setup make a huge difference when retraining happens often.

Energy & Utilities

Energy providers work with huge amounts of time-series data, from grid loads to weather patterns. Forecasting and optimization models improve dramatically when trained at scale. With Gigafactory compute, it becomes possible to run large forecasting engines and optimization models that help stabilize entire grids.

What should organizations prepare?

Reliable data ingestion streams, strict governance, and model designs suited for long training cycles. High-frequency data requires tightly engineered workflows.

Public Sector

Government agencies run AI models for traffic planning, emergency response, public safety, census forecasting, and large-scale simulations. These tasks often involve massive datasets and long-running compute jobs that push traditional setups to their limits. Gigafactories help speed up this work.

What should organizations prepare?

Strong security controls, transparent workflows, and clear documentation. Public sector AI must be explainable, traceable, and compliant from the start.

Risks, Limits, and Real Challenges Enterprises Should Prepare For

Large-scale AI facilities solve many infrastructure problems, but enterprises still need the right groundwork to use them well. Here are the most common challenges companies run into and the kind of solutions that help them move forward with confidence.

1. Challenge: Data Isn’t Ready for High-Scale AI Training

Enterprise data is often scattered across legacy systems, stored in different formats, and lacks the consistency that large-scale AI training requires. This leads to weak model performance and wasted compute inside AI Gigafactories, where training depends on clean, fast-moving data.

Solution: Build strong data pipelines, improve quality checks, and unify storage so information can flow smoothly into European AI infrastructure.

With structured and well-governed datasets, enterprises can fully use the speed and accuracy benefits of Gigafactory environments.

2. Challenge: Existing Models Don’t Scale Across Thousands of Chips

Models built for small GPU clusters often fail or slow to a crawl when moved to Gigafactory-scale hardware. Training breaks down because the architecture was not designed for distributed compute or massive parallelism.

Solution: Redesign model architectures with parallel training strategies, sharding, and optimized communication layers.

This ensures the model can run efficiently across thousands of processors inside AI factories and Gigafactories.
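Data parallelism is the simplest of the parallel training strategies mentioned in this solution: each worker keeps a full copy of the model, computes gradients on its own slice of the batch, and an all-reduce combines those gradients before every update. The toy example below uses a one-weight linear model so the arithmetic stays visible. The function names are illustrative, and in practice frameworks such as PyTorch's DistributedDataParallel perform the all-reduce across the interconnect. Sharding the model itself (tensor or pipeline parallelism) is a separate layer not shown here.

```python
# Toy data parallelism: every worker holds a full copy of the model (here, a
# single weight w for y_hat = w * x), computes a gradient on its own shard of
# the batch, and an all-reduce combines the shard gradients into one update.
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def shard(batch: List[Sample], num_workers: int) -> List[List[Sample]]:
    """Split a batch into num_workers contiguous, near-equal, non-empty shards."""
    if not 1 <= num_workers <= len(batch):
        raise ValueError("need 1 <= num_workers <= batch size")
    size, remainder = divmod(len(batch), num_workers)
    shards, start = [], 0
    for rank in range(num_workers):
        end = start + size + (1 if rank < remainder else 0)
        shards.append(batch[start:end])
        start = end
    return shards


def local_gradient(w: float, samples: List[Sample]) -> float:
    """Gradient of mean squared error with respect to w on one shard."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)


def all_reduce_mean(grads: List[float], counts: List[int]) -> float:
    """Combine per-shard mean gradients, weighting each by its shard size."""
    return sum(g * n for g, n in zip(grads, counts)) / sum(counts)


def data_parallel_step(w: float, batch: List[Sample], num_workers: int, lr: float) -> float:
    """One synchronous data-parallel SGD step."""
    shards = shard(batch, num_workers)
    grads = [local_gradient(w, s) for s in shards]  # concurrent on real hardware
    g = all_reduce_mean(grads, [len(s) for s in shards])
    return w - lr * g
```

Because shard gradients are weighted by shard size, one step on two workers produces the same update as one step on a single worker over the whole batch. That equivalence is what lets data parallelism scale out without changing the training result.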

3. Challenge: MLOps Pipelines Fail During Long Training Cycles

Most enterprise MLOps systems weren’t built for the multi-day or multi-week training jobs common in European AI supercomputing environments. Pipelines break under load, fail silently, or lack proper monitoring for large-scale runs.

Solution: Strengthen orchestration, add detailed experiment tracking, and build automatic recovery paths for long training cycles.

These upgrades make MLOps stable enough to support high-volume workloads inside European AI Gigafactories.
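Automatic recovery for long runs usually rests on periodic checkpointing: save training state every few steps and, after a failure, resume from the last saved state instead of step zero. The sketch below is a minimal, framework-free illustration built on several assumptions: the state is a small JSON dictionary, the "training work" is a stand-in computation, and the hypothetical `fail_at` argument simulates a node failure. A real job would checkpoint model and optimizer tensors to shared storage.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write atomically: dump to a temp file, then rename it over the old checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0, "loss_sum": 0.0}
    with open(path) as f:
        return json.load(f)


def train(path, total_steps, checkpoint_every, fail_at=None):
    """Hypothetical training loop that always resumes from the last checkpoint.

    fail_at simulates a node failure when the loop reaches that step.
    """
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError(f"simulated failure at step {state['step']}")
        state["loss_sum"] += 1.0 / (state["step"] + 1)  # stand-in for real work
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


def run_with_recovery(path, total_steps, checkpoint_every, failures, max_restarts=3):
    """Restart after each failure; every restart resumes from the saved checkpoint."""
    pending = list(failures)
    for attempt in range(max_restarts + 1):
        try:
            return train(path, total_steps, checkpoint_every,
                         fail_at=pending.pop(0) if pending else None)
        except RuntimeError as err:
            print(f"attempt {attempt} failed: {err}; resuming from checkpoint")
    raise RuntimeError("exceeded maximum restarts")
```

Writing to a temporary file and then calling `os.replace` means a crash during saving leaves the previous checkpoint intact rather than a half-written file, and a run interrupted at step 15 only repeats the five steps since its last checkpoint.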

4. Challenge: Compliance and Documentation Gaps Slow Deployment

Enterprises often lack the documentation, testing records, and data lineage needed to meet EU regulatory requirements when training in AI Gigafactories.

Missing paperwork or unclear audit trails create delays, especially in regulated industries.

Solution: Implement audit-ready workflows that record model versions, dataset approvals, risk classifications, and evaluation results.

This keeps AI development aligned with EU standards and avoids deployment bottlenecks.
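One way to make training workflows audit-ready is to log each run as a structured record and chain the records with hashes, so a later edit to any entry becomes detectable. The field names below mirror the items listed in this solution (model versions, dataset approvals, risk classifications, evaluation results). They, and the risk labels, are hypothetical and not a format prescribed by the EU AI Act.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class TrainingAuditRecord:
    model_name: str
    model_version: str
    dataset_ids: List[str]
    dataset_approved_by: str
    risk_classification: str  # illustrative labels, e.g. "minimal", "limited", "high"
    evaluation_results: Dict[str, float] = field(default_factory=dict)

    def fingerprint(self) -> str:
        """SHA-256 of a canonical (sorted-key) JSON form of the record."""
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def _chain(prev_hash: str, record: TrainingAuditRecord) -> str:
    """Hash that links a record to the entry before it."""
    return hashlib.sha256((prev_hash + record.fingerprint()).encode("utf-8")).hexdigest()


def append_entry(log: List[dict], record: TrainingAuditRecord) -> None:
    """Append a record to an append-only log, linked to the previous entry."""
    prev = log[-1]["entry_hash"] if log else ""
    log.append({"record": asdict(record), "prev_hash": prev, "entry_hash": _chain(prev, record)})


def verify_log(log: List[dict]) -> bool:
    """Recompute every hash; any edit to an earlier entry breaks the chain."""
    prev = ""
    for entry in log:
        record = TrainingAuditRecord(**entry["record"])
        if entry["prev_hash"] != prev or entry["entry_hash"] != _chain(prev, record):
            return False
        prev = entry["entry_hash"]
    return True
```

Auditors can then re-run `verify_log` at any time; if someone quietly changes an evaluation score after the fact, verification fails.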

5. Challenge: Compute Costs Escalate Without Optimization

Training at scale can become expensive if models and workflows are not optimized for large-scale training.

Inefficient runs can consume huge amounts of compute, driving up budgets quickly.

Solution: Use efficient training techniques like mixed precision, optimized batch sizes, and smart scheduling to reduce unnecessary usage.

These optimizations help enterprises get maximum value from European AI infrastructure while keeping costs under control.
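Batch size is one of the levers listed in this solution, and gradient accumulation is a standard way to reach a large effective batch without extra memory: each device processes several micro-batches and sums their gradients before a single optimizer step. The helper below is a hypothetical planning function built on the relation global batch = micro-batch per device * accumulation steps * data-parallel replicas.

```python
import math


def plan_batch(target_global_batch: int, micro_batch_per_device: int,
               data_parallel_size: int) -> dict:
    """Pick gradient-accumulation steps that reach at least the target global batch.

    global batch = micro batch per device * accumulation steps * data-parallel replicas.
    The micro batch is whatever fits in one device's memory (mixed precision often
    lets it grow); accumulation reaches the target without needing more memory.
    """
    if min(target_global_batch, micro_batch_per_device, data_parallel_size) < 1:
        raise ValueError("all arguments must be positive")
    samples_per_pass = micro_batch_per_device * data_parallel_size
    accumulation_steps = math.ceil(target_global_batch / samples_per_pass)
    return {
        "accumulation_steps": accumulation_steps,
        "global_batch": samples_per_pass * accumulation_steps,
    }
```

For example, a target of 1,024 samples with a micro-batch of 4 on 64 replicas works out to 4 accumulation steps. If the target does not divide evenly, the helper rounds up, so the effective batch is slightly larger than requested.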

6. Challenge: Legacy Systems Block Smooth Integration

Older enterprise applications and outdated APIs slow down or restrict data flow into AI Gigafactories. This creates bottlenecks that limit how fast and how well models can train at large scale.

Solution: Introduce a modern integration layer with flexible APIs, event-based data movement, and scalable connectors.

This ensures enterprise systems can communicate easily with Gigafactory clusters and high-performance training environments.
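A modern integration layer often consists of small connectors that translate each legacy format into one common event shape, published onto a bus that downstream training pipelines subscribe to. The sketch below uses an in-process bus as a stand-in for a real message broker such as Apache Kafka. The connector names, record formats, and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Event:
    """Common event shape that every connector produces."""
    source: str
    entity_id: str
    payload: Dict[str, object]


class EventBus:
    """In-process publish/subscribe bus; production systems would use a broker."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = {}

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, event: Event) -> None:
        for handler in self._subscribers.get(topic, []):
            handler(event)


def legacy_erp_connector(line: str) -> Event:
    """Hypothetical connector for a pipe-delimited legacy export: id|sku|qty."""
    order_id, sku, qty = line.strip().split("|")
    return Event("erp", order_id, {"sku": sku, "quantity": int(qty)})


def crm_api_connector(record: dict) -> Event:
    """Hypothetical connector for a JSON API that uses different field names."""
    return Event("crm", str(record["id"]),
                 {"sku": record["productCode"], "quantity": int(record["qty"])})


if __name__ == "__main__":
    bus = EventBus()
    training_buffer: List[Event] = []
    bus.subscribe("orders", training_buffer.append)
    bus.publish("orders", legacy_erp_connector("A-17|SKU-9|3"))
    bus.publish("orders", crm_api_connector({"id": 42, "productCode": "SKU-9", "qty": "5"}))
    print(training_buffer)
```

The design point is that training pipelines only ever see `Event` objects; replacing a legacy system later means writing one new connector rather than touching every consumer.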

7. Challenge: Skill Gaps in Distributed AI Engineering

Most enterprise AI teams are used to small setups and lack hands-on experience with Gigafactory-scale clusters and their integration with EuroHPC supercomputers. This creates uncertainty around resource planning, cluster configuration, and training optimization.

Solution: Add distributed systems expertise to support model redesign, workload planning, and training optimization.

This helps teams use Gigafactory-level compute safely, efficiently, and without trial-and-error failures.
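Workload planning starts with knowing whether a model will fit. A widely used rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes of model state per parameter (16-bit weights and gradients plus 32-bit master weights and two optimizer moments), which can be divided across devices when those states are fully sharded, as in ZeRO stage 3 or PyTorch FSDP. The helper below turns that rule into a rough device count. The device memory and headroom figures are assumptions, and activation memory is deliberately left out.

```python
import math

# Rule of thumb for mixed-precision training with Adam:
# 2 bytes (16-bit weights) + 2 bytes (16-bit gradients)
# + 12 bytes (32-bit master weights, momentum, and variance) = 16 bytes/parameter.
BYTES_PER_PARAM = 2 + 2 + 12
GIB = 2 ** 30


def model_state_gib(params: float, shard_across: int = 1) -> float:
    """Approximate per-device memory for weights, gradients, and optimizer state.

    Activations, communication buffers, and fragmentation are ignored, so treat
    the result as a lower bound. shard_across > 1 assumes these states are fully
    sharded across devices (the ZeRO stage 3 / FSDP approach).
    """
    if shard_across < 1:
        raise ValueError("shard_across must be at least 1")
    return params * BYTES_PER_PARAM / shard_across / GIB


def min_devices(params: float, device_memory_gib: float, state_share: float = 0.5) -> int:
    """Fewest devices whose sharded model state fits in `state_share` of each
    device's memory, leaving the rest for activations and buffers."""
    if not 0 < state_share <= 1:
        raise ValueError("state_share must be in (0, 1]")
    return max(1, math.ceil(model_state_gib(params) / (device_memory_gib * state_share)))
```

For a hypothetical 70-billion-parameter model, that is roughly 1,043 GiB of model state, so with 80 GiB devices and half of each device reserved for activations, at least 27 devices are needed, and more if activations outgrow the reserved half.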

8. Challenge: No Clear Roadmap for Gigafactory Adoption

Many enterprises see the value of AI Gigafactories but don’t know which use cases to scale first or what preparation is required. This makes adoption slow and unfocused.

Solution: Conduct an AI readiness assessment to identify data gaps, model upgrades, architectural needs, and priority use cases.

A structured roadmap helps enterprises adopt Gigafactory-level compute without confusion or wasted effort.
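A readiness assessment can start as a simple weighted scorecard across the areas named in this solution: data, models, architecture, and use cases. The dimensions, weights, 1-to-5 scale, and gap threshold below are all hypothetical choices to adapt, not a standard methodology.

```python
from typing import Dict, List, Tuple

# Hypothetical dimensions and weights (summing to 1.0); tune them to your priorities.
WEIGHTS: Dict[str, float] = {"data": 0.30, "models": 0.25, "architecture": 0.25, "use_cases": 0.20}


def assess_readiness(scores: Dict[str, int], gap_threshold: int = 3) -> Tuple[float, List[str]]:
    """Scores run from 1 (not ready) to 5 (ready) for each dimension.

    Returns the weighted overall score and the dimensions scoring below
    gap_threshold, lowest first, as a starting point for the roadmap.
    """
    missing = sorted(set(WEIGHTS) - set(scores))
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    if any(not 1 <= scores[d] <= 5 for d in WEIGHTS):
        raise ValueError("scores must be between 1 and 5")
    overall = sum(weight * scores[d] for d, weight in WEIGHTS.items())
    gaps = sorted((d for d in WEIGHTS if scores[d] < gap_threshold), key=lambda d: scores[d])
    return round(overall, 2), gaps
```

The gap list, not the overall score, is usually the more useful output: it tells you which preparation work belongs at the front of the roadmap.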


How to Prepare Your Business for the Gigafactory Era: A Complete Enterprise Roadmap (2025–2027)

The shift toward Gigafactory-scale AI will change how enterprises plan, build, and deploy AI systems. It’s not just about getting access to bigger compute. It’s about making sure your internal environment (data, architecture, processes, and people) can support this level of scale without breaking. This roadmap brings everything together so leaders can prepare their organizations step by step.

Phase 1: Establish the Foundations (2025)

This stage is about getting your house in order. Without these foundations, scaling AI becomes slow, expensive, and unreliable.

1. Audit Your Infrastructure Readiness

Many enterprises still carry legacy systems, slow storage layers, and old pipelines that make AI development harder than it should be. These systems limit how fast you can train models, move data, or test new ideas. Before thinking about high-performance compute, you need clarity on what parts of your infrastructure can keep up and which parts will slow you down. A simple audit brings these issues to the surface.

What to do:

Review your apps, data systems, pipelines, and networking. Identify which workloads are ready to scale and which require modernization.

2. Build an “AI-Native” Architecture

Legacy architectures hold AI back. Monolithic systems create bottlenecks, restrict data flow, and make scaling unpredictable. AI-native architectures work differently: they encourage modularity, faster changes, and smoother scaling. This gives teams the freedom to train bigger models and test more ideas without rewriting entire systems. It’s a structural shift that directly influences AI performance.

What to do:

Move toward microservices, containers, and event-driven systems. Build flexible pipelines that can adapt to different compute environments.

3. Strengthen Your Data Layer

No amount of compute can fix poor-quality data. If your data is spread across different systems, stored in inconsistent formats, or missing governance rules, your AI models will struggle. For large-scale training, data needs to be clean, reliable, and fast to access. Enterprises that invest in their data foundations early achieve better accuracy and faster model development later.

What to do:

Unify data formats, build strong cleaning and validation processes, and ensure data can move quickly when training large or complex models.
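Cleaning and validation processes typically begin with schema checks that reject or quarantine bad records before they reach a training job. The minimal sketch below validates dictionaries against a small, hypothetical sensor-data schema and keeps the rejection reasons so data owners can fix problems at the source.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema: field -> (accepted types, required?)
SCHEMA: Dict[str, Tuple[tuple, bool]] = {
    "device_id": ((str,), True),
    "reading": ((int, float), True),
    "unit": ((str,), False),
}


def validate(record: Dict[str, Any], schema=SCHEMA) -> List[str]:
    """Return a list of problems; an empty list means the record is valid."""
    errors = []
    for name, (types, required) in schema.items():
        value = record.get(name)
        if value is None:
            if required:
                errors.append(f"missing required field '{name}'")
            continue
        # bool is a subclass of int in Python, so reject it unless bool is accepted.
        if (isinstance(value, bool) and bool not in types) or not isinstance(value, types):
            errors.append(f"'{name}' has unexpected type {type(value).__name__}")
    for name in sorted(record.keys() - schema.keys()):
        errors.append(f"unexpected field '{name}'")
    return errors


def split_valid(records: List[Dict[str, Any]]):
    """Separate clean records from rejected ones, keeping the reasons for review."""
    valid, rejected = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping rejected records and their reasons, rather than silently dropping them, gives governance teams the lineage they need when a model's training data is questioned later.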

4. Upgrade MLOps for Long Training Cycles

Small experiments finish quickly. Large-scale training does not. Many enterprises find their pipelines fail, freeze, or lose progress when runs last several days. Long training cycles require stronger orchestration, better visibility, and recovery steps that kick in automatically when something goes wrong. Stable MLOps becomes the backbone of reliable and responsible AI development.

What to do:

Add workflow orchestration, experiment tracking, automatic retries, and live monitoring to support long and heavy training workloads.
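Automatic retries are one of the MLOps upgrades listed here. A common pattern is exponential backoff with jitter, retrying only errors known to be transient and logging each attempt so monitoring can pick it up. The sketch below assumes network-style failures (`ConnectionError`, `TimeoutError`) are the transient ones; which exceptions qualify in a real pipeline is an assumption to revisit. The `sleep` parameter is injectable so the behavior can be tested without waiting.

```python
import logging
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")
log = logging.getLogger("training_pipeline")


def run_with_retries(task: Callable[[], T], max_attempts: int = 4, base_delay: float = 1.0,
                     max_delay: float = 60.0,
                     retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Run one pipeline step, retrying transient failures with exponential backoff.

    Only exceptions listed in retry_on are retried; anything else (for example a
    bug in the training code) fails immediately instead of being masked.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            result = task()
        except retry_on as exc:
            if attempt == max_attempts:
                log.error("step failed after %d attempts: %s", attempt, exc)
                raise
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            delay *= random.uniform(0.5, 1.0)  # jitter so workers don't retry in lockstep
            log.warning("attempt %d failed (%s); retrying in %.1fs", attempt, exc, delay)
            sleep(delay)
        else:
            log.info("step succeeded on attempt %d", attempt)
            return result
    raise RuntimeError("unreachable")
```

Restricting retries to known transient errors matters on long runs: blindly retrying a genuine bug only burns compute hours before the same failure recurs.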

5. Establish Clear Governance and Compliance

Modern AI cannot grow without clear governance. Regulations are evolving quickly, and enterprises need transparent records of how models are trained, what data they use, and how risks are managed. Missing documentation becomes a real blocker during audits or deployments. Good governance is not about slowing teams down. It’s about creating trust and accountability.

What to do:

Create structured documentation, review steps, approval flows, and audit trails for every model and dataset used in development.
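Review steps and approval flows can be enforced in code rather than by convention. The sketch below models a hypothetical workflow in which a dataset or model moves from draft to review to approval, every change is recorded with the actor responsible, nobody approves their own submission, and a training job refuses to start on unapproved inputs. The states and rules are illustrative, not a regulatory requirement.

```python
from enum import Enum
from typing import List, Tuple


class Status(Enum):
    DRAFT = "draft"
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    REJECTED = "rejected"


# Hypothetical policy: which status changes are allowed.
TRANSITIONS = {
    Status.DRAFT: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.APPROVED, Status.REJECTED},
    Status.REJECTED: {Status.DRAFT},
    Status.APPROVED: set(),
}


class GovernedArtifact:
    """A model or dataset that must pass review before it is used for training."""

    def __init__(self, name: str, kind: str) -> None:
        self.name, self.kind = name, kind
        self.status = Status.DRAFT
        self.history: List[Tuple[str, str, str, str]] = []  # (from, to, actor, note)
        self._submitted_by = ""

    def transition(self, new_status: Status, actor: str, note: str = "") -> None:
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} is not allowed")
        if new_status is Status.APPROVED and actor == self._submitted_by:
            raise PermissionError("reviewers cannot approve their own submission")
        if new_status is Status.IN_REVIEW:
            self._submitted_by = actor
        self.history.append((self.status.value, new_status.value, actor, note))
        self.status = new_status


def require_approved(*artifacts: GovernedArtifact) -> None:
    """Gate a training job: refuse to start unless every input is approved."""
    blocked = [a.name for a in artifacts if a.status is not Status.APPROVED]
    if blocked:
        raise PermissionError(f"not approved for training: {blocked}")
```

The `history` list doubles as the audit trail: every approval carries a name, a timestamp can be added in the same tuple, and blocked attempts never reach it.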

6. Build the Right Strategic Partnerships

Enterprises don’t need to build massive compute infrastructure themselves. What they need are partners who understand distributed AI training, data engineering, system integration, and compliance. These partnerships help avoid costly missteps and reduce time to scale. The right external expertise accelerates progress while keeping internal teams focused on core business goals.

What to do:

Work with AI services and solutions providers who can guide model redesign, pipeline optimization, data readiness, and integration with large-scale compute environments.

Phase 2: Start Scaling AI Workloads (2026)

Once the groundwork is ready, enterprises can begin shifting serious workloads into high-performance environments.

7. Migrate High-Impact Use Cases First

Not every use case needs large-scale compute. The most value comes from identifying which workloads benefit from bigger training capacity: forecasting models, personalization engines, simulation-heavy apps, or domain-specific LLMs. These use cases generate meaningful ROI early and help refine internal processes. Focusing on the right areas builds momentum.

What to do:

Choose workloads that already push your current infrastructure to its limits. Test them in higher-capacity environments and measure the gains.
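One way to choose the first workloads to migrate is to filter for jobs that already strain current infrastructure and then rank them by business impact. The fields, thresholds, and scoring below are hypothetical; the utilization and queue-wait figures would come from your own monitoring.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Workload:
    name: str
    gpu_utilization: float   # average share of current capacity used, 0..1
    queue_wait_hours: float  # average wait for compute
    business_impact: int     # 1 (low) .. 5 (high), agreed with stakeholders


def prioritize(workloads: List[Workload], util_threshold: float = 0.8,
               wait_threshold_hours: float = 24.0) -> List[str]:
    """Keep workloads that strain current infrastructure, ranked by impact then wait."""
    constrained = [w for w in workloads
                   if w.gpu_utilization >= util_threshold
                   or w.queue_wait_hours > wait_threshold_hours]
    ranked = sorted(constrained, key=lambda w: (w.business_impact, w.queue_wait_hours),
                    reverse=True)
    return [w.name for w in ranked]
```

Filtering before ranking keeps high-impact but lightweight workloads on existing infrastructure, where they already run well.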

8. Adopt Distributed Training Methods

Single-machine training approaches don’t scale to today’s largest models. Distributed training lets you run massive models and experiments across many compute units at once. It reduces training time and expands what your team can build. This is a key shift for enterprises planning long-term AI growth.

What to do:

Use parallelism strategies such as tensor, data, or pipeline parallelism. Redesign training loops to run efficiently at larger scales.
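When tensor, data, and pipeline parallelism are combined, the three degrees must multiply to the total device count, and a few other constraints commonly apply. The checker below encodes some of them as a sketch: even layer splits across pipeline stages (a simplification, since uneven splits are possible), keeping tensor parallelism within one node's fast interconnect (a common heuristic rather than a hard rule), and a global batch that divides evenly across data-parallel replicas. All names and thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ParallelPlan:
    tensor: int    # devices that split each layer's weight matrices
    pipeline: int  # stages that each hold a contiguous block of layers
    data: int      # replicas that each process a different slice of the batch

    @property
    def world_size(self) -> int:
        return self.tensor * self.pipeline * self.data


def check_plan(plan: ParallelPlan, num_devices: int, num_layers: int,
               devices_per_node: int, global_batch: int, micro_batch: int) -> List[str]:
    """Return a list of problems with the plan; an empty list means it looks consistent."""
    problems = []
    if plan.world_size != num_devices:
        problems.append(f"tensor*pipeline*data = {plan.world_size}, "
                        f"but {num_devices} devices are available")
    if num_layers % plan.pipeline:
        problems.append(f"{num_layers} layers do not split evenly into {plan.pipeline} stages")
    if plan.tensor > devices_per_node:
        problems.append("tensor parallelism spans nodes; keep it within one node's interconnect")
    if global_batch % (micro_batch * plan.data):
        problems.append("global batch is not divisible by micro_batch * data-parallel size")
    return problems
```

For instance, 1,024 devices with 8 per node could be arranged as 8-way tensor, 4-way pipeline, and 32-way data parallelism for a hypothetical 96-layer model, and the checker reports no problems for that layout.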

9. Integrate Real-Time or High-Frequency Data Streams

Large models deliver the best results when they learn from steady, rich, and fast-moving data. If data arrives slowly or inconsistently, model quality suffers. Preparing for this means adopting real-time pipelines and storage systems that can keep up with enterprise-scale AI needs. It directly improves prediction accuracy and responsiveness.

What to do:

Create streaming pipelines, event-based ingestion systems, and scalable storage that supports continuous data availability for training and inference.
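Streaming ingestion for training usually groups a continuous event flow into micro-batches, flushing when a batch is full or has waited too long. The generator below is a minimal sketch of that pattern with hypothetical parameter names; its docstring notes a simplification about when the age check runs.

```python
import time
from typing import Callable, Dict, Iterable, Iterator, List, Optional


def micro_batches(events: Iterable[Dict], max_size: int, max_wait_s: float,
                  clock: Callable[[], float] = time.monotonic) -> Iterator[List[Dict]]:
    """Group a continuous stream into batches, flushing on size or age.

    Simplification: the age check runs only when a new event arrives; a production
    consumer would also flush on a timer when the stream goes quiet.
    """
    if max_size < 1:
        raise ValueError("max_size must be at least 1")
    batch: List[Dict] = []
    started: Optional[float] = None
    for event in events:
        if not batch:
            started = clock()
        batch.append(event)
        if len(batch) >= max_size or clock() - started >= max_wait_s:
            yield batch
            batch = []
    if batch:
        yield batch  # flush whatever is left when the stream ends
```

The size limit bounds memory per batch, while the age limit bounds how stale the newest data can be before it reaches training or inference.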

Phase 3: Enterprise-Wide Acceleration (2027 and Beyond)

By this stage, AI becomes a core part of how the enterprise operates. The focus now shifts to expanding its capabilities across operations.

10. Expand AI Across Multiple Business Units

Once early use cases succeed, other departments begin demanding similar capabilities. AI becomes a shared foundation across operations, supply chain, finance, customer experience, risk, and more. Aligning these teams under shared datasets and governance frameworks avoids duplication and improves consistency. This is where enterprises see compounding benefits.

What to do:

Roll out shared data assets, common evaluation frameworks, and reusable model components across departments to speed up enterprise-wide adoption.

11. Build a Hybrid AI Ecosystem

The future of enterprise AI won’t rely on one environment. Some workloads run best on cloud GPUs, some at the edge, and others on high-performance training clusters. A hybrid ecosystem creates the flexibility to match each workload with the right environment. It ensures scale, cost balance, and operational resilience.

What to do:

Design architectures that let workloads move between environments (cloud, edge, on-prem systems, and high-performance compute) without being rewritten.
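A hybrid ecosystem works best when a workload's description stays environment-neutral and the placement decision is made separately. The sketch below shows that separation with a handful of hypothetical rules (data residency first, then latency, then training scale). Real placement policies would weigh cost, capacity, and compliance in far more detail.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WorkloadSpec:
    name: str
    kind: str                                # "training" or "inference"
    chips_needed: int
    latency_budget_ms: Optional[float] = None
    data_must_stay_onprem: bool = False


def place(w: WorkloadSpec, large_training_chips: int = 1024,
          edge_latency_ms: float = 20.0) -> str:
    """Pick a target environment using simple, hypothetical rules checked in order."""
    if w.data_must_stay_onprem:
        return "on-prem"
    if (w.kind == "inference" and w.latency_budget_ms is not None
            and w.latency_budget_ms < edge_latency_ms):
        return "edge"
    if w.kind == "training" and w.chips_needed >= large_training_chips:
        return "high-performance compute"
    return "cloud"
```

Because the spec never mentions an environment, the same workload can be re-placed when rules or capacity change, which is the "without being rewritten" property the roadmap calls for.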

If you’re planning to tap into Gigafactory-scale infrastructure, we can help you get the groundwork right.

How to Plan Your Project Timeline Strategy

The rollout of EU AI Gigafactories follows a carefully orchestrated timeline that creates specific windows of opportunity for strategic organizations. With the first AI factory becoming operational in the coming weeks and Munich launching in early September, the competitive landscape is evolving rapidly.

How can organizations optimize their positioning for maximum impact?

Strategic timing becomes crucial for organizations seeking to leverage European AI Gigafactory capabilities. Early engagement with the ecosystem provides access to resources and partnerships that may become more constrained as adoption accelerates.

Strategic Timeline Considerations

2025 Phase: Foundation Building

- Initial AI factory deployments across European locations

- Establishment of partnership frameworks with specialized AI development companies

- Development of internal capabilities and AI data governance structures

2026 Phase: Scale and Optimization

- Full AI model training facilities operational across multiple sites

- Integration of business applications with Gigafactory infrastructure

- Measurement and optimization of AI-driven business outcomes

2027+ Phase: Innovation Leadership

- Advanced AI applications leveraging full European AI Gigafactory capabilities

- Market leadership in AI-driven business transformation

- Contribution to future gigafactory development and standards

Organizations that align their AI strategy with this timeline position themselves to capture maximum value from the substantial investments being made in Europe’s AI infrastructure.

Looking Forward: The Future of AI Infrastructure

The impact of AI Gigafactories goes well beyond today’s compute needs. As companies prepare for the next phase of AI growth, strategic planning must account for rapidly evolving capabilities and expanding opportunities within the European AI ecosystem.

What trends will shape the competitive landscape?

The combination of massive compute infrastructure, collaborative networks, and practical business applications creates significant opportunities for companies that can plan and execute strategically. Strategic considerations emerging in the coming years include:

Technology Evolution

- Integration of quantum computing capabilities with traditional AI infrastructure

- Development of specialized processors optimized for specific AI applications

- Advancement in energy-efficient computing architectures

Ecosystem Development

- Expansion of facilities across additional European locations

- Integration with global AI development networks and partnerships

- Standardization of interfaces and protocols for cross-system compatibility

Market Dynamics

- Increasing demand for specialized AI development company services

- Growth in sector-specific AI applications requiring large-scale computational resources

- Evolution of regulatory frameworks supporting responsible AI deployment

Organizations that establish strong positions within the EU AI Gigafactories ecosystem today will be best positioned to adapt and thrive as these trends continue to evolve.

Why Partner With Appinventiv to Become Gigafactory-Ready

Preparing for the Gigafactory era is not just a technical shift; it requires strong engineering, mature data foundations, and AI systems designed to run at scale. This is where Appinventiv becomes a strategic partner for enterprises that want to move fast without compromising stability or compliance.

Over the past decade, we have helped large enterprises modernize their AI ecosystems, redesign their architecture for distributed training, develop intelligent AI models, and deploy scalable AI systems across business units.

With 200+ data scientists and AI engineers, 300+ AI-powered solutions delivered, and 75+ enterprise AI integrations completed, we understand what it takes to prepare organizations for high-performance AI environments.

Our depth comes from working across 35+ industries, building custom models, fine-tuned LLMs, and end-to-end AI systems that serve real business needs. Many of these projects involve complex data governance, legacy modernization, workflow redesign, and MLOps transformation, which are the same capabilities required to become Gigafactory-ready.

A Quick Case Study to Analyze Our AI Expertise:

A global retail group approached us to modernize its forecasting and personalization systems. Their legacy stack made it slow to test new models or retrain them on fresh data.

Our team rebuilt their data pipelines, introduced distributed training, and deployed optimized models designed for high-performance compute environments. The results were clear:

- 10x faster model training cycles

- 75% faster decision-making across supply chain teams

- 40% lower operational costs

This is the same journey enterprises must follow as they prepare for Gigafactory-scale AI. Our credibility in this space is backed by industry recognition as well. Appinventiv has been:

- Featured in the Deloitte Technology Fast 50 India for two consecutive years, 2023 and 2024

- Ranked among APAC’s High-Growth Companies (FT & Statista)

- Named “The Leader in AI Product Engineering & Digital Transformation” (2025) by The Economic Times

Becoming Gigafactory-ready isn’t about chasing new hardware. It’s about having the right partner who can build the systems, workflows, and models that actually make use of it. Appinventiv helps enterprises bridge that gap, turning high-performance AI infrastructure into measurable business outcomes.

Connect with our tech experts today to be ready for the AI gigafactory age.

FAQs

Q. How long does it take for an enterprise to become “Gigafactory-ready”?

A. It depends on the current state of their systems. Some organizations need only a few architectural upgrades, while others must modernize legacy stacks, restructure data, or rebuild pipelines.

With a focused roadmap, most enterprises reach meaningful readiness within 3–6 months.

Q. What is an AI Gigafactory in Europe?

A. An AI Gigafactory in Europe is a large-scale, purpose-built facility created specifically for training and deploying advanced AI models. These facilities are reshaping what enterprises can achieve with AI and how quickly they can scale it. Here’s what this means for you:

- Those complex AI initiatives your team keeps postponing? They’re suddenly within reach

- The computational bottlenecks limiting your innovation? They become far less of a constraint

- That competitive advantage you’ve been seeking? It’s sitting in infrastructure most executives don’t even know exists

- The strategic partnerships that seemed impossible to access? They’re opening up for organizations ready to move

Q. How do AI Gigafactories support European AI innovation?

A. AI Gigafactories support European AI innovation by providing large-scale computational infrastructure with approximately 100,000 AI chips, enabling organizations to develop and train next-generation AI models. These facilities foster collaboration between research institutions, industry partners, and startups, creating comprehensive innovation ecosystems that accelerate AI development timelines and reduce barriers to advanced AI implementation across European markets.

Q. What are the main components of an AI Gigafactory?

A. An AI Gigafactory comprises several critical components, including massive computing architecture with state-of-the-art AI processors, high-bandwidth networking infrastructure, integrated storage systems for large datasets, power systems supporting approximately one gigawatt of capacity, cooling and environmental controls, and collaborative workspaces for research teams. These facilities also include specialized software frameworks, security systems, and integration with existing European supercomputing networks.

Q. What benefits do AI Gigafactories bring to AI startups and enterprises?

A. AI Gigafactories provide startups and enterprises with access to computational resources that would require billions in individual investment, dramatically reducing time-to-market for AI solutions. Organizations gain cost optimization through shared infrastructure, unprecedented scale for training complex AI models, regulatory compliance support, collaborative innovation opportunities, and access to specialized expertise through integrated research and development hubs within the facilities.

Q. What is the difference between AI Factories and AI Gigafactories?

A. AI Factories are equipped with approximately 25,000 AI processors and serve as foundational infrastructure for AI development, while AI Gigafactories feature roughly four times as many, with about 100,000 state-of-the-art AI chips. AI Gigafactories require significantly larger investments of €3–5 billion each, consume approximately one gigawatt of power, and are designed specifically for developing next-generation AI models with trillions of parameters.

Q. How do AI Gigafactories enable scaling of large AI models?

A. AI Gigafactories enable scaling of large AI models through massive parallel processing capabilities, distributed computing architectures that can handle models with trillions of parameters, high-speed interconnects for rapid data transfer, integrated storage systems capable of managing enormous datasets, and specialized software frameworks optimized for large-scale model training. The facilities provide the computational density and coordination necessary for breakthrough AI model development.

Q. What challenges do AI Gigafactories address in AI hardware and software?

A. AI Gigafactories address critical challenges including computational resource constraints that limit AI model complexity, infrastructure costs that create barriers for smaller organizations, integration difficulties between different AI development platforms, scalability limitations in traditional data center architectures, and coordination challenges in collaborative AI development. These facilities provide standardized, optimized environments that eliminate many technical and operational barriers to advanced AI development.

Q. How do AI Gigafactories contribute to energy-efficient AI computing?

A. AI Gigafactories contribute to energy-efficient AI computing through advanced cooling systems, optimized processor architectures designed for AI workloads, integration with renewable energy sources, intelligent resource allocation systems that minimize waste, and collaborative infrastructure sharing that maximizes utilization efficiency. The facilities incorporate sustainability planning from the design phase, ensuring environmental responsibility while maintaining high-performance computing capabilities for intensive AI operations.

Q. What is the future outlook for AI Gigafactories in Europe?

A. The future outlook for AI Gigafactories in Europe is highly promising, with substantial investment momentum including €20 billion through the InvestAI initiative, 76 expressions of interest from organizations across 16 member states, planned expansion to five full-scale facilities, integration with quantum computing capabilities, and development of specialized sector-specific applications. The facilities will serve as a foundation for European AI leadership and for the development of its innovation ecosystem.
