- Private LLM vs Public LLM: Key Differences

- Cost Comparison: What You Need to Know

- Why Public LLMs Feel Flexible at First

- What a Private LLM Means for Your Budget

- Where a Hybrid Model Fits

- Understanding Security and Privacy Risks for Enterprises with Public vs Private LLMs

- What You Face With a Public LLM

- Why a Private LLM Strengthens Control

- How Your Team Can Choose

- Real‑World Examples of Public vs Private LLM Use in Enterprises

- 1. How Fujitsu Built Its Own Path

- 2. EY India (Professional Services)- Custom Fine‑Tuned LLM for BFSI Sector

- 3. ScaleOps- Enabling Self‑Hosted Enterprise LLM Infrastructure

- What These Examples Show

- How to Mitigate Bias, Privacy, and Compliance Risks in LLM Adoption

- Building a Strong Governance Framework & Ensuring Organizational Readiness

- How to Decide: Public or Private LLM?

- Public LLMs: Flexible and Cost-Effective

- Private LLMs: Secure and Customizable

- Hybrid Approach: A Practical Middle Ground

- Future Trends: Scaling and Evolving Your LLM Strategy

- How Appinventiv Empowers Your LLM Strategy for Future Success

- FAQs

Key takeaways:

- Public LLMs are fast and cost-effective but may lack security for sensitive data.

- Private LLMs offer better control, security, and compliance, which makes them well suited to enterprise use.

- Hybrid models combine the best of both: cost flexibility with data control.

- Choosing the right LLM for enterprise depends on your data needs, budget, and security requirements.

- Scaling AI solutions with the right LLM can drive long-term business growth and innovation.

As more companies start weaving AI into their day-to-day operations, the first big question they face is simple: which tool should they trust? Large Language Models (LLMs) have the potential to reshape customer support, automate routine work, and even guide strategic decisions. But choosing the right LLM for enterprise often leaves teams unsure about what truly fits their needs.

Public LLMs are an easy, cost-effective way to experiment with AI. Because external providers manage them, you don’t have to worry about infrastructure or updates. They’re great for general tasks but may not be ideal if your business handles sensitive data or needs customization. That’s where private LLMs shine. A private LLM keeps your data within your own environment, giving you greater control over security and compliance. For businesses in regulated industries, that makes private LLMs a safer, more predictable path to AI adoption.

Most enterprises do not struggle with AI adoption itself; they struggle with choosing an LLM architecture that remains secure, compliant, and cost-predictable at scale. The decision between private and public LLMs often determines whether AI initiatives accelerate business outcomes or introduce hidden operational and compliance risks.

In the end, selecting an LLM is not only about choosing a capable model. It’s about aligning the technology with what your business values most, whether that is compliance, protecting confidential data, or long-term scalability. In the sections ahead, we will break down the differences between private and public language models so you can choose the one that supports your goals with confidence.

Private LLM vs Public LLM: Key Differences

Picking the right model isn’t just technical; it’s strategic. A private LLM is a strong option when you need to handle proprietary data or require heavy customization: you get better security, tighter compliance, and room to scale on your own terms. A public LLM is the lighter choice when speed and low setup effort matter more. Focus on what your operations require in the long term, and choose the path that protects your data while letting you scale.

| Factor | Public LLM | Private LLM |
|---|---|---|
| Data Security | Managed by third-party providers; data is stored on external servers. Security risks from shared infrastructure. | Full control over data, stored within your organization’s infrastructure. Higher security. |
| Compliance | Limited control over compliance with regulations (e.g., GDPR, HIPAA). May not meet industry-specific standards. | Can be tailored to industry-specific regulations. |
| Cost | Lower upfront cost; pay-per-use model. Can become expensive as usage grows. | Higher initial investment; costs for infrastructure, maintenance, and scaling. Potential for cost savings at scale. |
| Customization | Less flexibility in customization; models are pre-built and generalized. | Full customization options based on business needs and data requirements. |
| Scalability | Scales easily via API calls with minimal setup, but can incur variable costs. | Scalable within your infrastructure, but requires resource setup and management. |
| Model Updates | Automatic updates and improvements by the third-party provider. | You control updates and fine-tuning, but this requires in-house expertise. |
| Infrastructure Management | No infrastructure management required. | Requires your business to manage infrastructure, monitoring, and maintenance. |
| Use Cases | Best for general tasks, non-sensitive data, experimentation, and small-scale operations. | Ideal for sensitive data, regulated industries, or use cases that require high levels of control and customization. |
| Performance Control | Shared resources, so performance may vary. | Dedicated resources allow for more predictable and reliable performance. |
| Security and Compliance Audits | Limited auditing and monitoring options, depending on the provider’s policies. | Full transparency and control over audit trails, security, and compliance checks. |

The real decision is not about selecting a model type; it is about choosing how much control, predictability, and governance your enterprise AI strategy requires as adoption scales.

Cost Comparison: What You Need to Know

Most teams eventually reach a point where cost becomes a key factor in the enterprise LLM decision. You might already be running the numbers, trying to figure out what your budget can handle without slowing down the work your team wants to do.

Why Public LLMs Feel Flexible at First

Public LLMs keep the upfront cost low. You pay as you go, which makes it easy to test ideas or run smaller tasks without asking for a big budget increase. Your team can scale quickly because you only pay for what you use. Still, costs can rise rapidly as the workload grows. A few heavy use cases or a spike in activity can push your monthly bill higher than expected, especially when a public LLM is handling high-volume tasks.

Many enterprises underestimate how quickly public LLM usage costs scale when AI moves from pilot to business-critical workflows. Without cost governance, AI experimentation can evolve into unpredictable operational expenditure.

What a Private LLM Means for Your Budget

A private LLM for enterprises requires more upfront investment. You handle the setup, maintenance, and updates, so the early work feels heavier. Even so, costs become more predictable over time. Once your infrastructure is stable, you are not surprised by usage spikes. Private LLM development is often viewed as long-term value rather than just an upfront expense, particularly for teams working with sensitive data or complex workloads.
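
To make the trade-off concrete, here is a minimal Python sketch of a break-even estimate. Every figure in it (token price, request size, upfront spend, amortization period, monthly operations cost) is a hypothetical placeholder rather than real vendor pricing, and the function names are illustrative. It assumes pay-per-use cost grows linearly with volume while private cost stays flat once the setup is amortized.

```python
import math


def monthly_public_cost(requests: int, tokens_per_request: int,
                        price_per_1k_tokens: float) -> float:
    """Pay-per-use cost: grows linearly with the number of requests."""
    return requests * tokens_per_request / 1000 * price_per_1k_tokens


def monthly_private_cost(upfront_cost: float, amortization_months: int,
                         monthly_ops_cost: float) -> float:
    """Flat cost: upfront spend spread over a period, plus steady operations."""
    return upfront_cost / amortization_months + monthly_ops_cost


def break_even_requests(tokens_per_request: int, price_per_1k_tokens: float,
                        private_monthly: float) -> int:
    """Monthly request volume at which public spend reaches private spend."""
    cost_per_request = tokens_per_request / 1000 * price_per_1k_tokens
    return math.ceil(private_monthly / cost_per_request)


if __name__ == "__main__":
    # Hypothetical inputs; replace with your own quotes and usage data.
    private = monthly_private_cost(upfront_cost=240_000,
                                   amortization_months=24,
                                   monthly_ops_cost=5_000)
    threshold = break_even_requests(tokens_per_request=2_000,
                                    price_per_1k_tokens=0.01,
                                    private_monthly=private)
    print(f"Private cost per month: {private:,.0f}")
    print(f"Break-even volume: {threshold:,} requests per month")
    for volume in (100_000, 500_000, 1_000_000):
        public = monthly_public_cost(volume, 2_000, 0.01)
        print(f"{volume:>9,} requests -> public cost {public:,.0f}")
```

With these placeholder numbers the private setup starts paying off at roughly 750,000 requests a month. Swap in your own figures before drawing any conclusion, since real pricing is rarely this linear.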

Where a Hybrid Model Fits

Some teams blend both options rather than choose one. A hybrid model lets public LLMs handle routine or high-volume tasks, while private LLMs support the sensitive work that needs tighter control. It can help you balance cost and peace of mind, especially if your workload varies week to week. Using both lets you optimize costs without sacrificing data security.
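
One way to picture a hybrid setup is as a routing rule that sends each task to a public or private model based on how sensitive its data is. The sketch below is illustrative only: the sensitivity levels, the `Task` type, and the `route` function are assumptions made for this example, not part of any specific product.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    """Hypothetical data classification levels."""
    PUBLIC = 1      # harmless or already public information
    INTERNAL = 2    # internal reports, customer data
    REGULATED = 3   # data covered by rules such as GDPR or HIPAA


@dataclass(frozen=True)
class Task:
    name: str
    sensitivity: Sensitivity


def route(task: Task) -> str:
    """Send only clearly non-sensitive work to the public model.

    Anything classified above PUBLIC stays on the private model, so an
    unexpected classification fails safe rather than leaking data.
    """
    if task.sensitivity is Sensitivity.PUBLIC:
        return "public"
    return "private"


if __name__ == "__main__":
    tasks = [
        Task("draft a product FAQ", Sensitivity.PUBLIC),
        Task("summarize an internal report", Sensitivity.INTERNAL),
        Task("analyze customer loan files", Sensitivity.REGULATED),
    ]
    for task in tasks:
        print(f"{task.name} -> {route(task)} LLM")
```

Defaulting to the private model for anything that is not explicitly public is a design choice: it trades some cost for a lower chance of sending the wrong data outside your environment.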

What to Keep in Mind:

- Public LLMs make sense when you want low barriers to entry and flexibility.

- Private LLMs require more investment but give you lasting control over data security and performance.

- A hybrid setup helps manage costs while protecting critical data.

In the end, understanding how each model handles cost will help you pick the setup that fits your budget and long-term goals. Whether you opt for a public LLM, a private LLM, or a hybrid approach, choose the one that aligns with your business needs and future scalability.

Understanding Security and Privacy Risks for Enterprises with Public vs Private LLMs

Most enterprise AI initiatives fail security reviews not because models are ineffective, but because data governance frameworks are introduced too late in the adoption lifecycle.

Most teams reach a point where security concerns start slowing down every conversation about AI, and comparing public and private LLMs brings those concerns to the surface. If your work involves sensitive information and you need predictable security and compliance, a private LLM will feel safer.

What You Face With a Public LLM

A public LLM gives you speed, lower costs, and no infrastructure to maintain. Your team can move quickly because the provider handles updates and scaling. Still, your data sits in their environment. If the provider shifts their policies or changes how their systems work, your risk picture can change overnight. For teams handling sensitive records, a lack of control can feel similar to storing files in a shared office cabinet that others manage.

Public LLM models are great for general tasks, but if your business handles sensitive data or requires stricter control, the risks can outweigh the benefits.

Why a Private LLM Strengthens Control

A private LLM for enterprises keeps your data inside your own environment. Your team gains tighter oversight, which matters when compliance reviews are regular. Even so, the trade-off is real. You take on the setup, the monitoring, and the ongoing security checks. Think of it like running your own secured workspace. You decide how it operates, but you are also the one keeping everything up to date.

Private LLM development allows you to customize security protocols and align them with industry regulations, which makes it a safer option for businesses handling critical data.

How Your Team Can Choose

If convenience and quick deployment matter most, a public LLM for enterprise use may be a good fit. At the same time, if your work involves sensitive information and you need predictable security boundaries, a private LLM model will feel safer.

In the end, the right choice depends on how much responsibility your team is ready to take on: whether you prioritize the security and compliance of a private LLM or need the flexibility and cost-efficiency of a public one.

Real‑World Examples of Public vs Private LLM Use in Enterprises

Most teams like to see how others handled the same decision before making their own. A few real examples make the trade-offs between public and private LLMs much easier to understand.

1. How Fujitsu Built Its Own Path

Fujitsu chose to build a private LLM called Takane, a sign of the rising interest in private LLM development. They wanted something that could live safely within their environment, with strict security and compliance controls. It is the kind of setup that makes sense when your company handles sensitive data every day and does not want to rely on public LLMs for regulated workloads. Their move shows that more enterprises are leaning toward solutions that feel tailor-made when they weigh private against public language models.

2. EY India (Professional Services)- Custom Fine‑Tuned LLM for BFSI Sector

Many financial services consultancies and BFSI vendors are moving toward private LLMs to handle compliance-intensive tasks such as risk analysis and customer data processing. Instead of relying on public LLM models, they’re choosing private LLM applications for enterprises that offer tighter control and stronger security.

A recent example is EY, which launched a custom LLM designed specifically for the BFSI sector. It’s fine-tuned for regulatory requirements and shows how private LLM development is becoming the preferred path for organizations that need secure, compliant AI without relying on shared systems.

3. ScaleOps- Enabling Self‑Hosted Enterprise LLM Infrastructure

ScaleOps recently announced an AI‑infrastructure product aimed at enterprises that operate self‑hosted LLMs, providing resource management and cost-efficient GPU usage for enterprise‑scale LLM deployment.

This is relevant for enterprises considering private LLM deployment: it shows that there are evolving infrastructure solutions that can make private/self‑hosted LLMs more affordable and operationally manageable.

These adoption patterns typically appear when enterprises begin classifying AI workloads based on sensitivity, regulatory exposure, and strategic importance, a key milestone in enterprise AI maturity.

What These Examples Show

Public LLMs still help teams move quickly and stay within a smaller budget, and some organizations use them for broader or community-driven workloads. Even so, private LLMs are increasingly becoming the choice for work that needs strong security, tighter control, and deeper customization.

Whether a company is protecting financial data or training a model on internal knowledge, the differences between private and public LLMs continue to influence enterprise decisions. For many, the right mix ultimately depends on workload sensitivity and long-term strategy, yet private setups often give enterprises the confidence they need to move ahead.

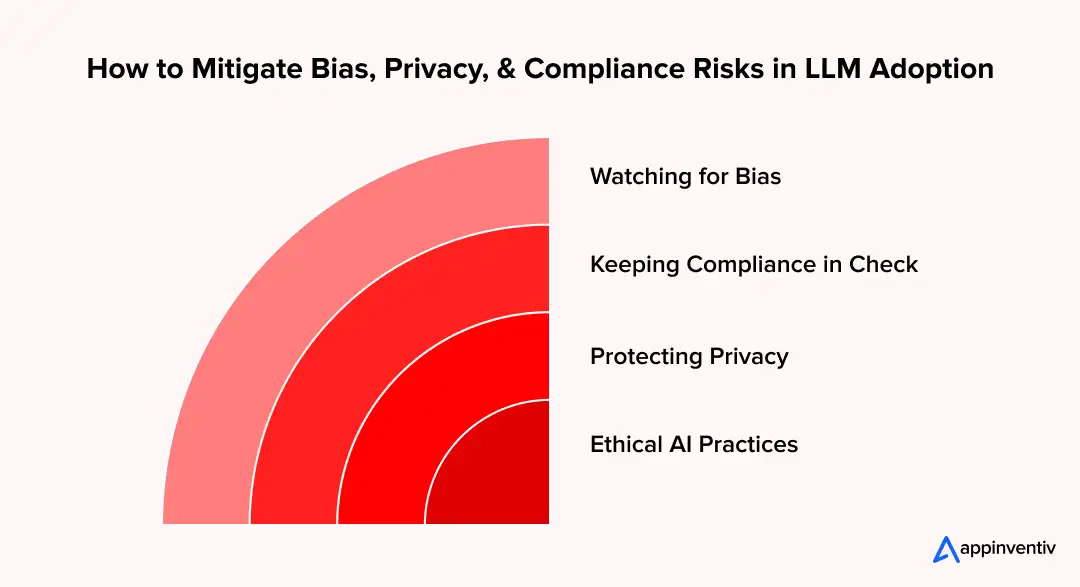

How to Mitigate Bias, Privacy, and Compliance Risks in LLM Adoption

When your team compares Private LLM vs Public LLM, it’s easy to get caught up in features and performance, but the real challenge is managing risk. How your organization handles bias, privacy, and compliance will determine whether AI helps or creates headaches.

- Watching for Bias: Even well-trained public and private LLMs can show unexpected biases. For enterprise teams, this can mean skewed outputs or unfair recommendations. Using a private LLM allows your team to review and fine-tune results regularly, giving you the control to catch issues early and make sure the AI behaves consistently.

- Keeping Compliance in Check: Industries such as finance, healthcare, and legal operate under strict rules. A private LLM for enterprises can be customized to meet regulations such as GDPR, HIPAA, or PCI DSS, while public LLM models may not offer the same flexibility. Thoughtful enterprise language model selection ensures your team stays compliant without slowing down workflows.

- Protecting Privacy: Sensitive data demands strong protections. With public LLMs, your organization has limited control over how data is stored or used. A private LLM keeps everything inside your own environment, giving you the confidence that confidential information stays secure. The benefits of a private LLM for handling sensitive data are especially clear for businesses that handle financial records, customer data, or internal reports.

- Ethical AI Practices: Organizations are increasingly expected to take responsibility for how AI affects decisions and individuals. Private LLM development gives your team full ownership of data sourcing, model training, and how outputs are used. That makes it easier to deliver AI in a way that reflects your organization’s values, builds trust, and avoids reputational damage.

In short, public LLMs can be faster and less expensive, but private LLMs give your team more control over privacy, compliance, and ethics. Whether you choose a public LLM or a custom-built private one depends on the level of oversight, security, and customization your organization requires.

Building a Strong Governance Framework & Ensuring Organizational Readiness

Bringing a private LLM for enterprises in-house is less of a tech upgrade and more of an operational shift. Success usually depends on two non-technical factors:

- Governance Framework: You need clear guardrails. It isn’t enough to just secure the server; you need protocols for who validates the AI’s output and who is authorized to feed it data. A strong framework for your private LLM model prevents ‘shadow AI’ habits from forming and keeps you on the right side of compliance regulations. Proper governance is a key benefit of private LLM applications for enterprises.

- Team Readiness: Even the most sophisticated private LLM will fail if your team doesn’t know how to use it. You can’t just plug it in and walk away; you need staff who can monitor for model drift and manage the infrastructure. Identifying these skill gaps early ensures smooth adoption and successful deployment. This is a critical part of private LLM development in any enterprise.
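
The guardrails described above (who may feed the model data, who validates its output) can be sketched as a small permission check with an audit trail. The role names, actions, and helper functions below are hypothetical and only illustrate the idea; a real deployment would plug into your existing identity and logging systems.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping of roles to the actions they may perform.
PERMISSIONS = {
    "data_steward": {"submit_data"},
    "reviewer": {"validate_output"},
    "ml_engineer": {"submit_data", "validate_output"},
}


@dataclass
class AuditLog:
    """Keeps a record of every request, allowed or not."""
    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, action: str, allowed: bool) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })


def is_authorized(role: str, action: str) -> bool:
    """Unknown roles get no permissions by default."""
    return action in PERMISSIONS.get(role, set())


def request_action(log: AuditLog, user: str, role: str, action: str) -> bool:
    """Check the permission and log the attempt either way."""
    allowed = is_authorized(role, action)
    log.record(user, role, action, allowed)
    return allowed


if __name__ == "__main__":
    log = AuditLog()
    print(request_action(log, "asha", "data_steward", "submit_data"))
    print(request_action(log, "asha", "data_steward", "validate_output"))
    print(f"{len(log.entries)} attempts recorded for audit")
```

Logging denied attempts as well as approved ones is deliberate: it is often the denied requests that reveal shadow AI habits forming.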

When you get governance right and the team is prepared, the technology follows suit. That is when a private LLM for enterprises moves from a science experiment to a genuine business asset, delivering measurable value while keeping data secure and compliant.

How to Decide: Public or Private LLM?

Instead of choosing based on technology trends, enterprises should align LLM deployment models with business priorities, compliance exposure, and cost predictability.

Use the quick decision matrix below to evaluate which model aligns with your enterprise objectives.

| If Your Enterprise Priority Is | Best Fit | Why It Works |
|---|---|---|
| Speed, experimentation, and rapid AI adoption | Public LLM | Minimal infrastructure setup with fast deployment |
| Data control, compliance, and security assurance | Private LLM | Enables auditability, governance, and regulatory alignment |
| Cost optimization with workload flexibility | Hybrid LLM | Balances scalability with secure processing of sensitive data |
| High customization and domain-specific AI intelligence | Private LLM | Enables model tuning using proprietary enterprise data |
| Variable workload with unpredictable usage spikes | Hybrid LLM | Optimizes resource usage while maintaining security boundaries |

The right LLM choice is rarely purely technical; it is a business architecture decision balancing agility, governance, and long-term AI scalability.
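
If it helps to see the matrix as logic, it can be read as a simple lookup from priority to deployment model. The sketch below is a rough illustration: the priority keys are shorthand invented for this example, and the rule that mixed priorities point to a hybrid setup is an assumption of the sketch, not a rule stated in the matrix.

```python
# Shorthand keys (hypothetical) for the five priorities in the decision matrix.
DECISION_MATRIX = {
    "speed": "Public LLM",
    "data_control": "Private LLM",
    "cost_optimization": "Hybrid LLM",
    "customization": "Private LLM",
    "variable_workload": "Hybrid LLM",
}


def recommend(priorities: list[str]) -> str:
    """Map a list of enterprise priorities to a deployment model."""
    if not priorities:
        raise ValueError("List at least one priority")
    unknown = [p for p in priorities if p not in DECISION_MATRIX]
    if unknown:
        raise ValueError(f"Unknown priorities: {unknown}")

    fits = {DECISION_MATRIX[p] for p in priorities}
    if len(fits) == 1:
        return fits.pop()
    # Assumption: priorities that pull in different directions suggest hybrid.
    return "Hybrid LLM"


if __name__ == "__main__":
    print(recommend(["speed"]))
    print(recommend(["data_control", "customization"]))
    print(recommend(["speed", "data_control"]))
```

Treat the result as a starting point for discussion rather than a verdict; real decisions weigh these priorities instead of just matching them.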

Public LLMs: Flexible and Cost-Effective

Public models are usually where teams begin because they remove most of the upfront barriers:

- Good for early exploration: If you’re testing ideas or running simple internal experiments, public models are the easiest way to get started. They’re inexpensive, quick to set up, and let you validate use cases without heavy commitments.

- Costs grow with usage: The catch shows up later. As teams depend on the model more and requests become heavier, monthly bills can rise quickly. It works for small workloads, but it’s not always predictable at scale.

- Not suited for sensitive data: Public LLMs are fine for information that is harmless or already public. Once confidential or regulated data is involved, the risks become harder to justify.

Private LLMs: Secure and Customizable

Organizations choose private models when control, compliance, and accuracy matter more than quick setup.

- Full control and compliance: With a private model, you own the data pipelines. That alone makes compliance with frameworks like GDPR, HIPAA, or PCI DSS far simpler. You decide where the data goes and who touches it.

- Higher upfront cost, fewer surprises later: Setting it up requires more investment upfront. After that, the operating costs are steady and easier to plan for, especially if AI is tied to core business workflows.

- Fits your business, not the other way around: A private LLM can learn your terminology, your processes, and your customer behavior. That level of tuning is often what makes the output more accurate and actually useful.

Hybrid Approach: A Practical Middle Ground

Enterprises that want flexibility without sacrificing control often land here.

- Balance cost with control: Many enterprises mix both models. Public LLMs handle the high-volume, low-risk tasks, while private ones are reserved for sensitive workloads where accuracy, compliance, and privacy matter most.

- Grows with your needs: This setup gives you room to experiment without risking regulated data. As certain use cases mature, you can move them to the private model when it makes business sense.

Future Trends: Scaling and Evolving Your LLM Strategy

AI strategy is never static. To keep your edge, you have to look past current capabilities and plan for a future defined by scale and autonomy. This is especially true when considering Private LLMs vs. Public LLMs for enterprise use.

Where to focus your attention:

- Move beyond chat: Prepare for agentic AI, meaning systems that don’t just retrieve data but actively solve problems and execute tasks without hand-holding. A private LLM can help you reach this level of autonomy safely.

- Integrate, don’t just adopt: Stop treating LLMs, public or private, as standalone tools. Real value comes from an AI-first approach that integrates models directly into core workflows, ensuring maximum efficiency and control.

- Keep it fresh: Models decay. A private LLM for enterprises requires a rigorous lifecycle management plan to retrain and refresh data, ensuring your insights remain accurate and actionable.

- Balance your architecture: Adopt a hybrid approach. Use public LLMs for speed and scalability, and rely on private environments for the data that actually matters. This combination lets your team enjoy flexibility without compromising security, privacy, or compliance.
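
The "Keep it fresh" point above can be turned into a simple, automatable check. The following sketch flags a model for retraining when its recent evaluation scores fall too far below a baseline or when its training data is too old. The thresholds (a 0.05 score drop, 180 days) and the function name are hypothetical; choose values that match your own evaluation process.

```python
from datetime import date


def needs_refresh(baseline_score: float, recent_scores: list[float],
                  last_trained: date, today: date,
                  max_drop: float = 0.05, max_age_days: int = 180) -> bool:
    """Return True when the model looks drifted or stale.

    drifted: average recent score is more than max_drop below the baseline.
    stale:   more than max_age_days have passed since the last training run.
    """
    if not recent_scores:
        raise ValueError("Provide at least one recent evaluation score")
    recent_avg = sum(recent_scores) / len(recent_scores)
    drifted = (baseline_score - recent_avg) > max_drop
    stale = (today - last_trained).days > max_age_days
    return drifted or stale


if __name__ == "__main__":
    # Hypothetical evaluation history for illustration.
    flagged = needs_refresh(
        baseline_score=0.90,
        recent_scores=[0.80, 0.82],
        last_trained=date(2025, 1, 1),
        today=date(2025, 2, 1),
    )
    print("Schedule retraining" if flagged else "Model is within limits")
```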

Enterprises preparing for agentic AI, autonomous workflows, and multi-model orchestration often require structured LLM architecture planning to avoid scalability and governance bottlenecks.

How Appinventiv Empowers Your LLM Strategy for Future Success

At Appinventiv, we specialize in AI development services, providing custom AI solutions from private LLM development to hybrid integrations. Our team ensures your business stays ahead by navigating the complexities of scaling AI, optimizing models, and embedding AI seamlessly into your business processes.

We’ve successfully implemented impactful AI solutions, such as for Flynas, where we enhanced their mobile app with AI-powered features to improve booking efficiency and customer engagement. We also helped MyExec build an AI-driven business-consulting platform that enables SMEs to access actionable insights to make smarter decisions.

As an experienced AI consulting company, Appinventiv not only builds innovative AI solutions but also guides you in scaling enterprise solutions with private LLM, ensuring you get the most out of your AI strategy. Whether you’re looking for secure private LLMs or a hybrid approach, we’re here to help you stay competitive and adaptable in an ever-evolving AI landscape. Let’s connect!

FAQs

Q. What are the business benefits of a private LLM vs. a public LLM?

A. The main benefit of private LLMs is greater security and control, allowing businesses to handle sensitive data securely and ensure regulatory compliance. In contrast, public LLMs are cost-effective and provide flexibility for general tasks but may lack the customization and data privacy necessary for sensitive enterprise operations.

Q. How to choose between a private and public LLM for business?

A. When choosing the right LLM for your enterprise, consider your security and compliance requirements, how much customization you need, and how you expect to scale. Public LLMs work well for experimentation and non-sensitive tasks, while private LLMs are ideal for businesses that need more control and tighter security.

Q. How can Appinventiv help choose the right LLM for my business needs?

A. At Appinventiv, we offer expert AI consulting services to guide your business through the decision-making process. Whether you’re considering public LLMs or looking for private LLM development, we help you evaluate your needs so that the solution aligns with your business goals and compliance requirements.

Q. How do private and public LLMs affect enterprise security?

A. Public LLM models are hosted by third parties, creating potential security risks, especially for sensitive data. Private LLMs offer enhanced security and compliance by keeping your data within your infrastructure, giving you greater control over security protocols and reducing risks.

Q. What is the cost difference between private and public LLMs for enterprises?

A. Public LLMs have lower initial costs with a pay-per-use model, but costs increase as usage grows. Private LLMs require a higher initial investment for infrastructure and setup, but offer more predictable long-term costs and better control over security and compliance.
