- What Is an LLM-as-a-Judge System?

- Why Enterprises Need an LLM Evaluation Layer

- Manual GenAI Review Does Not Scale

- Automated Judges Create Consistent Quality Control

- Evaluation Becomes Part Of Governance

- What Are the Key Features of LLM-as-a-Judge?

- What Are the Core LLM-as-a-Judge Techniques?

- Direct Scoring

- Pairwise Comparison

- Multi-LLM Evaluation Strategies

- What Are the Key LLM-as-a-Judge Use Cases?

- Single-Response Evaluation

- Conversation-Level Evaluation

- Flexible Verdict Formats

- What Are the Types of LLM-as-a-Judge?

- Single Output Scoring Without Reference

- Single Output Scoring With Reference

- Pairwise Comparison

- LLM-as-a-Judge vs LLM-Assisted Labeling

- Where Does the LLM-as-a-Judge Layer Deliver Business Value?

- Customer Service

- Internal Copilots

- Regulated Content

- What Is the LLM-as-a-Judge Evaluation Framework for Enterprises?

- Integrating LLM Evaluation into Existing Workflows

- What Are the Business Risks and LLM-as-a-Judge Challenges?

- LLM-as-a-judge challenges include:

- What Is the ROI of LLM-as-a-Judge for Enterprises?

- Hard ROI

- Soft ROI

- How Mature Is Your Enterprise LLM Evaluation Strategy?

- Build vs Buy vs Custom LLM-as-Judge Development

- Choosing The Right Path

- How Do Enterprises Measure Success with LLM-as-a-Judge?

- How Does Appinventiv Help Enterprises Operationalize LLM-as-a-Judge?

- FAQs

Key takeaways:

- LLM-as-a-judge creates a scalable evaluation layer for enterprise GenAI governance and control.

- Automated LLM evaluation reduces reliance on manual review, lowering risk and improving model reliability.

- Enterprise LLM-as-a-judge frameworks enable compliance, auditability, and confident GenAI scale.

- Structured LLM evaluation pipelines turn GenAI performance into measurable business outcomes.

Many enterprises reach a turning point after deploying conversational AI at scale, often following an initial ChatGPT-like application development assessment. A customer chatbot goes live. An internal copilot starts summarizing contracts. A recommendation engine begins guiding decisions. That is when a new question appears: can you trust what these systems produce at scale?

The issue is not model capability. The issue is proof. You need to show quality, safety, and compliance before GenAI earns a permanent place in customer journeys and internal workflows. Without that evidence, boardroom confidence stays low. Projects slow down. GenAI risks returning to experiment status.

Most enterprises don’t lose GenAI momentum because models fail. They lose momentum because they cannot prove reliability, safety, and compliance fast enough to satisfy leadership, regulators, and customers. LLM-as-a-Judge is emerging as the control layer that determines whether GenAI becomes scalable infrastructure or remains a risky experiment.

LLM-as-a-judge closes this gap. It evaluates the outputs of your GenAI systems against business-defined standards for accuracy, tone, risk, and compliance. Instead of relying on scattered reviews, your team gets measurable evaluation signals.

For a C-suite responsible for governance, that evaluation layer becomes essential. Regulators expect traceable oversight. Boards expect defensible risk decisions. Trust now depends on evidence, not enthusiasm.

This article explores how LLM-as-a-judge reshapes your GenAI operating model. It highlights where evaluation creates leverage and where LLM evaluation risks must be managed. The goal is simple. Treat evaluation as core GenAI infrastructure and prove you stay in control.

Appinventiv has helped enterprises deploy governance-driven GenAI development solutions across highly regulated environments, designing evaluation pipelines that support compliance, auditability, and scalable automation.


What Is an LLM-as-a-Judge System?

At some point, your team needs a reliable way to check if GenAI outputs meet business expectations. An LLM-as-a-judge system handles that role. It uses one language model to evaluate another model’s responses against defined criteria such as accuracy, safety, tone, and compliance, then returns structured scores and reasoning for governance and control.

Three years after GenAI entered enterprise workflows, adoption has outpaced governance. Nearly nine out of ten organizations now report using AI regularly. This scale of adoption is exactly why LLM-as-a-judge has become critical for managing GenAI safely at the enterprise level.

Why Enterprises Need an LLM Evaluation Layer

Most enterprise GenAI initiatives start with momentum. A pilot succeeds. A chatbot goes live. An internal copilot enters daily workflows. Then reality sets in. You need a way to evaluate model behavior before scale introduces risk.

An LLM evaluation layer provides that control. It monitors GenAI outputs continuously. It replaces scattered testing with structured evaluation. This is what makes enterprise-grade deployment possible.

Manual GenAI Review Does Not Scale

In early pilots, teams rely on manual checks.

Typical patterns include:

- Reading sample chatbot conversations

- Flagging risky answers in shared documents

- Asking domain experts to review outputs

- Holding review meetings before releases

This approach works for small volumes. It fails when:

- Thousands of users interact daily

- Feedback cycles slow down

- Risk issues surface late

- Review costs grow quickly

An LLM-as-a-judge system removes this bottleneck. Judge models review outputs automatically. Human experts focus on defining evaluation standards and handling complex edge cases.

Automated Judges Create Consistent Quality Control

Human reviewers interpret rules differently. Standards shift. Policies evolve. This creates inconsistency in how GenAI outputs are evaluated.

Automated LLM-as-a-judge systems solve this by:

- Applying the same rubric to every output

- Scoring accuracy, tone, safety, and compliance

- Producing structured evaluation data

- Allowing fair comparison across models and prompts

This consistency gives product and engineering teams reliable performance signals. Decisions move from opinion to measurable evidence.

Evaluation Becomes Part Of Governance

Once LLM evaluation data flows into dashboards and release pipelines, it becomes part of the governance process.

This enables:

- Risk teams to monitor real model behavior

- Compliance teams to verify policy adherence

- Leadership to receive audit-ready evidence

- Boards to make informed GenAI risk decisions

At enterprise scale, the LLM evaluation layer becomes a core control system. It protects trust while allowing innovation to move forward.

What Are the Key Features of LLM-as-a-Judge?

Once your team starts evaluating GenAI at scale, the difference between a basic setup and an enterprise-grade LLM-as-a-judge system becomes clear. Strong evaluation does not happen by accident. It relies on a few core features that keep scoring consistent, explainable, and aligned with governance expectations.

Key features of LLM-as-a-judge include:

- Business-defined rubrics: Your domain teams define what good looks like. Accuracy, safety, tone, and compliance are written in plain business language. The LLM-as-a-judge then evaluates every output against these standards, not generic benchmarks.

- Structured scoring: Each response is scored using clear formats, such as numeric scales or pass/fail rules. This makes LLM-as-a-judge model evaluation easy to track across prompts, models, and use cases.

- Rationale output: The judge does not only return a score. It explains why a response passed or failed. This supports auditability, accelerates debugging, and strengthens trust in the LLM evaluation system.

- Continuous monitoring: Evaluation runs on curated test sets before deployment and on sampled production traffic after release. This allows teams to detect drift, emerging risks, and declining quality early.

- Audit-ready logs: Every judgment is stored with scores, explanations, and version history. These logs support compliance reviews, internal audits, and regulator inquiries across enterprise LLM evaluation frameworks.
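To make the last three features concrete, here is a minimal Python sketch of one audit-ready judgment record. The field names, the placeholder judge model name, and the SHA-256 content hash are assumptions for illustration, not a standard schema. The point is that every score travels with its rationale, rubric version, and judge version.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class JudgmentRecord:
    """One judge verdict, stored with enough context to audit it later."""
    request_id: str
    rubric_id: str        # hypothetical identifier for a business-defined rubric
    rubric_version: str   # version of the rubric text the judge was given
    judge_model: str      # judge model name/version (placeholder value below)
    score: int            # numeric score on the rubric's scale
    passed: bool          # pass/fail decision derived from the score
    rationale: str        # the judge's explanation for the score
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_log_line(self) -> str:
        """Serialize to one JSON line with a content hash for tamper evidence."""
        payload = json.dumps(asdict(self), sort_keys=True)
        digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        return json.dumps({"record": asdict(self), "sha256": digest}, sort_keys=True)


record = JudgmentRecord(
    request_id="req-001",
    rubric_id="support-accuracy",
    rubric_version="v3",
    judge_model="judge-model-placeholder",
    score=4,
    passed=True,
    rationale="Answer matches the refund policy and states the correct time window.",
)
line = record.to_log_line()  # append this line to an append-only evaluation log
```

Recomputing the hash from the stored record during an audit shows whether a logged judgment was altered after the fact.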

What Are the Core LLM-as-a-Judge Techniques?

Once your team commits to structured LLM evaluation, the next step is choosing the right LLM-as-a-judge techniques. Not every workflow needs the same depth of review. Some require simple scoring. Others demand comparison or multiple evaluators to reduce risk. The techniques below form the foundation of an enterprise-grade LLM-as-a-judge system design.

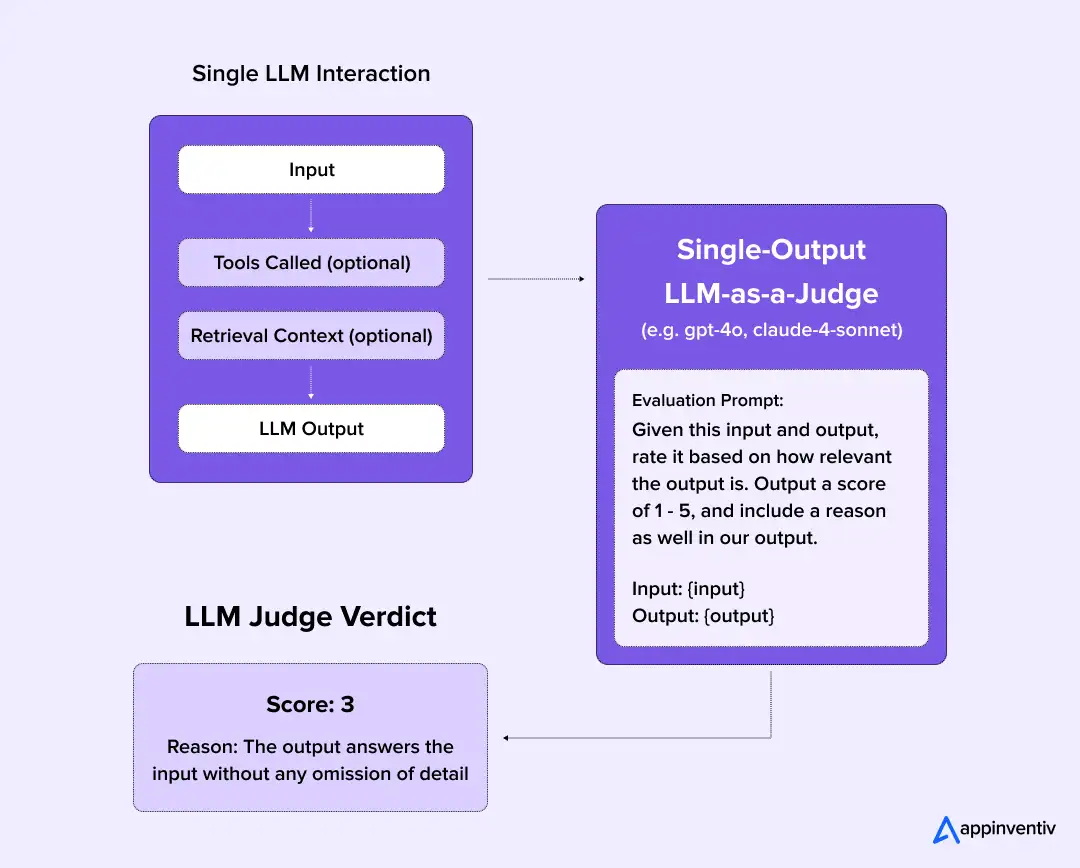

Direct Scoring

Direct scoring is the most widely used LLM-as-a-judge technique. A judge model evaluates a single GenAI response against a defined rubric for accuracy, tone, safety, and compliance. This method powers most LLM-as-a-judge model evaluation pipelines in customer service, copilots, and RAG systems.
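Direct scoring usually asks the judge to reply in a fixed structure so that scores can be stored and compared. The sketch below assumes a hypothetical JSON reply shape (`{"scores": [...]}`) and a 1 to 5 scale. It rejects malformed or out-of-range replies instead of logging them as valid scores.

```python
import json
from dataclasses import dataclass


@dataclass
class CriterionScore:
    criterion: str
    score: int
    rationale: str


def parse_direct_scores(raw: str, criteria: list[str],
                        low: int = 1, high: int = 5) -> list[CriterionScore]:
    """Parse and validate a judge reply shaped like
    {"scores": [{"criterion": ..., "score": ..., "rationale": ...}, ...]}.

    Raises ValueError for malformed JSON, missing criteria, or out-of-range scores.
    """
    try:
        # json.JSONDecodeError and failed int() conversions are already ValueErrors.
        items = json.loads(raw)["scores"]
        parsed = {
            item["criterion"]: CriterionScore(
                item["criterion"], int(item["score"]), str(item.get("rationale", ""))
            )
            for item in items
        }
    except (KeyError, TypeError) as exc:
        raise ValueError("judge reply does not match the expected shape") from exc
    for name in criteria:
        if name not in parsed:
            raise ValueError(f"judge reply is missing criterion {name!r}")
        if not low <= parsed[name].score <= high:
            raise ValueError(f"{name!r} score {parsed[name].score} is outside {low}-{high}")
    return [parsed[name] for name in criteria]


reply = (
    '{"scores": ['
    '{"criterion": "accuracy", "score": 4, "rationale": "Correct refund window."},'
    '{"criterion": "tone", "score": 5, "rationale": "Polite and clear."}]}'
)
scores = parse_direct_scores(reply, ["accuracy", "tone"])
```

Failing loudly on bad judge output keeps dashboards from silently averaging in garbage scores.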

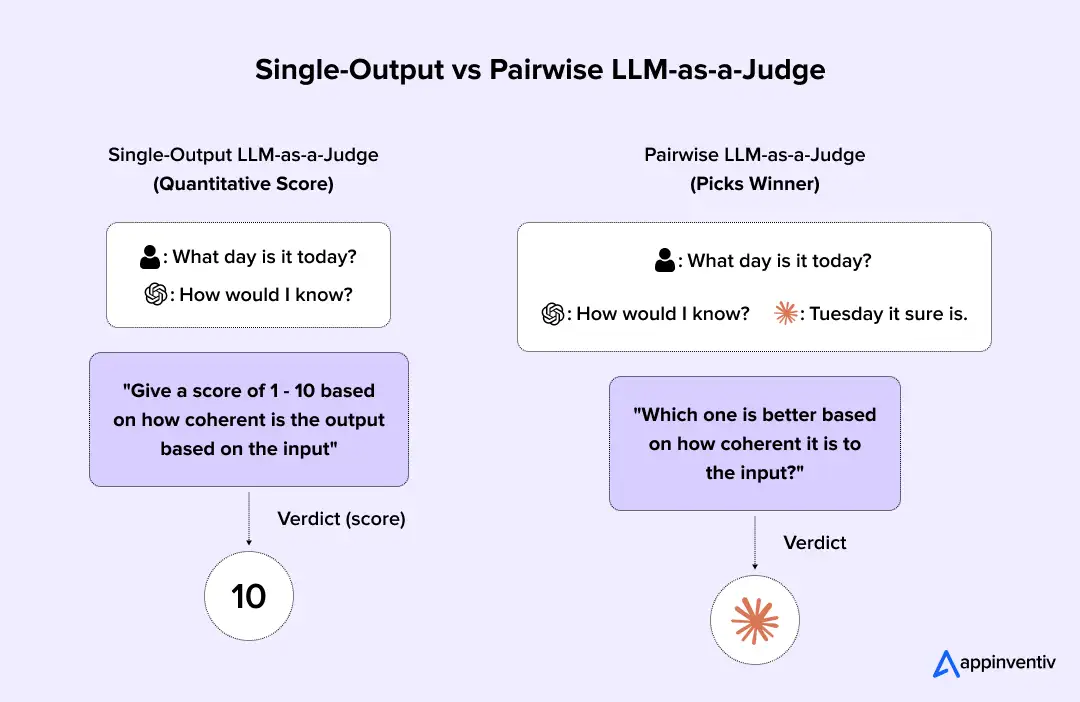

Pairwise Comparison

Pairwise comparison uses an LLM judge to evaluate two responses to the same query. The judge selects the stronger output based on clarity, reasoning quality, and policy alignment. Enterprises rely on this LLM-as-a-judge technique to test prompt variants, retrieval strategies, and competing foundation models before deployment.
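LLM judges can favor whichever response appears first. A common safeguard in pairwise setups is to ask twice with the order swapped and accept only consistent verdicts. In this sketch, `judge` is a hypothetical callable that stands in for an LLM call, and the two stub judges exist only to show the behavior.

```python
from typing import Callable, Literal

Choice = Literal["first", "second"]
# Hypothetical judge interface: given a query and two responses in a fixed
# order, return which position it prefers. In practice this wraps an LLM call.
PairwiseJudge = Callable[[str, str, str], Choice]


def compare_pair(judge: PairwiseJudge, query: str, a: str, b: str) -> str:
    """Return "A", "B", or "tie" after checking both presentation orders."""
    forward = judge(query, a, b)   # A shown first
    backward = judge(query, b, a)  # B shown first
    winner_forward = "A" if forward == "first" else "B"
    winner_backward = "B" if backward == "first" else "A"
    if winner_forward == winner_backward:
        return winner_forward
    return "tie"  # the verdict flipped with the order, so treat it as inconclusive


def longer_is_better(query: str, first: str, second: str) -> Choice:
    # Stub judge that looks at content (here, just length).
    return "first" if len(first) >= len(second) else "second"


def always_first(query: str, first: str, second: str) -> Choice:
    # Stub judge with pure position bias.
    return "first"


print(compare_pair(longer_is_better, "q", "short", "a much longer answer"))  # B
print(compare_pair(always_first, "q", "x", "y"))  # tie
```

A high tie rate is itself a useful signal that the judge prompt or criteria need calibration.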

Multi-LLM Evaluation Strategies

Multi-LLM evaluation strategies use multiple judge models to review the same output. When judges disagree, the system flags the response for human review or deeper analysis. This approach reduces bias in the LLM-as-a-judge system and strengthens reliability in regulated or high-risk enterprise workflows.
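Here is a minimal sketch of the disagreement rule described above, assuming every judge scores on the same scale. The judge names, the use of the median as the consensus value, and the spread threshold of 1 are illustrative choices, not a recommended configuration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class PanelResult:
    scores: dict[str, int]
    consensus: float
    needs_human_review: bool


def aggregate_panel(scores: dict[str, int], max_spread: int = 1) -> PanelResult:
    """Combine scores from several judge models for the same output.

    `scores` maps a (hypothetical) judge name to its score on a shared scale.
    If the highest and lowest scores differ by more than `max_spread`,
    the judges disagree and the output is routed to human review.
    """
    if not scores:
        raise ValueError("at least one judge score is required")
    values = list(scores.values())
    spread = max(values) - min(values)
    return PanelResult(
        scores=dict(scores),
        consensus=float(median(values)),
        needs_human_review=spread > max_spread,
    )


agreeing = aggregate_panel({"judge_a": 4, "judge_b": 5, "judge_c": 4})
split = aggregate_panel({"judge_a": 5, "judge_b": 2, "judge_c": 4})
```

The median resists a single outlier judge, while the spread check still surfaces that disagreement to a human.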

As enterprises adopt voice, image, and document-based copilots, evaluation must also extend to multimodal AI applications.

What Are the Key LLM-as-a-Judge Use Cases?

Before you design an evaluation strategy, it helps to understand one practical detail. Not all GenAI interactions look the same. Some produce a single response. Others unfold as ongoing conversations. LLM-as-a-judge must handle both.

Single-Response Evaluation

Some GenAI workflows produce one focused output. A RAG system answers a policy question. A copilot summarizes a document. A model drafts a short report. In these cases, the judge reviews a single input and a single output. It scores accuracy, completeness, and compliance based on your rubric. This is the simplest and most reliable LLM-as-a-judge setup.

Conversation-Level Evaluation

Other GenAI systems operate over multiple exchanges. Customer support chatbots, onboarding assistants, and advisory copilots fall into this category. Here, the judge reviews the entire conversation rather than just one response. It checks whether the system stayed on topic, followed policy, and handled context correctly across turns.

Multi-turn evaluation is harder. Longer conversations increase context load and raise the risk of missed details. Enterprises often address this by sampling key turns, summarizing conversation state, or applying multiple judges for higher-risk flows.
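One way to sample key turns is to keep the opening turn, the most recent turns, and any earlier turn that touches a risk topic. The sketch then marks the gaps so the judge knows that context was omitted. The risk terms, window size, and function names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str


def select_turn_indices(turns: list[Turn], keep_last: int = 4,
                        risk_terms: tuple[str, ...] = ("refund", "cancel", "complaint")) -> list[int]:
    """Pick a bounded subset of turn indices to send to the judge.

    Keeps the opening turn (it usually states the user's goal), the last
    `keep_last` turns, and any earlier turn mentioning a risk term.
    The risk terms are placeholders; real lists would come from your policies.
    """
    if len(turns) <= keep_last + 1:
        return list(range(len(turns)))
    selected = {0}
    selected.update(range(len(turns) - keep_last, len(turns)))
    for i, turn in enumerate(turns):
        if any(term in turn.text.lower() for term in risk_terms):
            selected.add(i)
    return sorted(selected)


def render_for_judge(turns: list[Turn], indices: list[int]) -> str:
    """Render selected turns, inserting a marker wherever turns were skipped."""
    lines = []
    previous = -1
    for i in indices:
        if i > previous + 1:
            lines.append(f"... ({i - previous - 1} turn(s) omitted) ...")
        lines.append(f"{turns[i].role.upper()}: {turns[i].text}")
        previous = i
    return "\n".join(lines)


conversation = [Turn("user" if i % 2 == 0 else "assistant", f"message {i}") for i in range(10)]
conversation[2] = Turn("user", "I want a refund for my order")
indices = select_turn_indices(conversation)  # [0, 2, 6, 7, 8, 9]
```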

Flexible Verdict Formats

Judge outputs do not always need complex scoring. Some workflows require a numeric scale. Others only need a binary decision. For example, a compliance check may simply ask whether a response violates policy. That yes-or-no verdict can then trigger an escalation or human review.

This flexibility allows LLM-as-a-judge to fit a wide range of GenAI evaluation needs without overcomplicating the workflow.
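A binary verdict still needs an explicit routing rule. This sketch assumes the judge was asked "Does this response violate policy?" and treats anything other than a clear "no" as an escalation, so malformed replies fail safe. The action names are placeholders.

```python
from enum import Enum


class Action(Enum):
    RELEASE = "release"
    HUMAN_REVIEW = "human_review"


def route_compliance_verdict(raw_verdict: str) -> Action:
    """Map a yes/no 'does this response violate policy?' answer to an action.

    Anything other than a clear "no" is escalated, so an ambiguous or
    malformed judge reply never releases the response automatically.
    """
    answer = raw_verdict.strip().lower().rstrip(".")
    if answer == "no":
        return Action.RELEASE
    return Action.HUMAN_REVIEW


print(route_compliance_verdict("No."))       # Action.RELEASE
print(route_compliance_verdict("not sure"))  # Action.HUMAN_REVIEW
```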

What Are the Types of LLM-as-a-Judge?

Once your team commits to automated GenAI evaluation, the next question is practical. How should the judge actually assess model behavior? Different evaluation styles serve different needs, so choosing the right type matters.

Single Output Scoring Without Reference

In this approach, the LLM-as-a-judge reviews one model response. It compares the output against your rubric for accuracy, tone, safety, and compliance. This method works well when no perfect answer exists, such as summarization or open-ended assistance.

Single Output Scoring With Reference

Some workflows have a known correct answer. Policy Q&A and knowledge checks fall into this category. Here, the judge evaluates the model response against a reference output. This improves consistency when correctness is critical.
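Reference-free and reference-based scoring can share one prompt builder that adds the reference only when a known correct answer exists. The template wording below is an illustrative assumption, not a tested production prompt.

```python
from typing import Optional


def build_judge_prompt(question: str, response: str, rubric: list[str],
                       reference: Optional[str] = None) -> str:
    """Assemble a judge prompt; include a reference answer only when one exists."""
    criteria = "\n".join(f"- {c}" for c in rubric)
    parts = [
        "You are evaluating an AI assistant's response.",
        f"Criteria (score each from 1 to 5):\n{criteria}",
        f"User question:\n{question}",
        f"Assistant response:\n{response}",
    ]
    if reference is not None:
        # Reference-based mode: anchor correctness to a known answer.
        parts.append(
            f"Reference answer (treat as correct):\n{reference}\n"
            "Penalize any statement that contradicts the reference."
        )
    parts.append('Reply only with JSON: {"scores": [{"criterion": ..., "score": ..., "rationale": ...}]}')
    return "\n\n".join(parts)


open_ended = build_judge_prompt("Summarize this memo.", "The memo says...", ["completeness", "tone"])
policy_qa = build_judge_prompt(
    "How many days do customers have to return an item?",
    "You have 30 days.",
    ["accuracy"],
    reference="Items can be returned within 30 days of delivery.",
)
```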

Pairwise Comparison

Pairwise judging presents two candidate responses for the same input. The judge selects the stronger one based on your criteria. Teams use this to test prompts, retrieval strategies, or model options before deciding what moves into production.

LLM-as-a-Judge vs LLM-Assisted Labeling

Both approaches use LLMs in evaluation workflows, but they solve very different problems inside enterprise GenAI systems.

| Aspect | LLM-as-a-Judge | LLM-Assisted Labeling |
|---|---|---|
| Primary role | Evaluates GenAI outputs | Generates labels for training or test data |
| When used | Before release on test sets and after deployment on sampled production traffic | Before or during model training |
| Input | User query and model response | Raw data samples |
| Output | Scores and evaluation rationale | Suggested labels for human review |
| Human involvement | Defines rubrics and reviews edge cases | Approves or corrects generated labels |
| Core purpose | Ongoing quality, safety, and compliance monitoring | Faster dataset creation |
| Enterprise value | Continuous GenAI governance and control | Accelerates data preparation pipelines |

These judging types apply to both single-turn and multi-turn GenAI systems. The same methods can evaluate a one-step answer or a full conversation, depending on how your workflow operates.

Where Does the LLM-as-a-Judge Layer Deliver Business Value?

GenAI becomes a business asset only when you can trust it in real operating conditions. Not in demos. In production systems. With real customers, employees, and compliance obligations.

That trust comes from visibility. LLM-as-a-judge provides an evaluation layer that inspects model inputs, outputs, and task instructions within a single request cycle. It returns structured scores and rationales that flow into LLMOps dashboards, CI release gates, and governance reporting. This turns GenAI behavior into monitored infrastructure, not black-box automation.

Three enterprise environments see a measurable impact first.

Customer Service

Customer service is where GenAI meets your brand.

Chatbots answer queries. Agent copilots draft responses. Voice systems resolve requests. All of them operate under real-time latency constraints and a dynamic customer context.

LLM-as-a-judge evaluates sampled service conversations by ingesting:

- The user query

- The model response

- Business policy or knowledge base references

The judge then scores:

- Factual accuracy

- Tone and professionalism

- Instruction compliance

- Safety and escalation triggers

Scores feed quality dashboards and drift monitors. When scores fall below agreed thresholds, teams adjust prompts or retrieval pipelines. This creates a closed-loop LLM evaluation process that helps reduce complaint rates and customer risk.

This evaluation pattern is becoming critical for enterprises where AI responses directly influence customer trust, regulatory exposure, and brand reputation.

Internal Copilots

Internal copilots interact with sensitive knowledge.

They summarize contracts, interpret policy, and support finance, HR, and procurement workflows. Most rely on RAG pipelines that retrieve internal documents before generating responses. This makes evaluation critical across RAG integration, from retrieval quality to the final generated answer.

LLM-as-a-judge validates:

- Whether responses remain grounded in retrieved sources

- Whether reasoning steps follow task instructions

- Whether critical context is missing

- Whether hallucinated content appears

The judge compares the output with reference passages or structured rubrics. Failures trigger retrieval tuning, prompt updates, or human review. This embeds continuous LLM evaluation into enterprise knowledge workflows and protects decision integrity.

Organizations increasingly discover that unverified internal copilots introduce decision risk that spreads faster than productivity gains.

Regulated Content

In regulated environments, compliance is non-negotiable. This is especially relevant for institutions adopting large language models in finance.

GenAI drafts reports, disclosures, claims responses, and policy interpretations. Outputs must align with legal language, risk policies, and disclosure rules.

LLM-as-a-judge performs specialized compliance evaluation by:

- Scanning generated text for prohibited claims

- Checking mandatory disclaimer presence

- Detecting sensitive data exposure

- Validating tone against regulatory communication standards

Judge outputs are stored as versioned evaluation logs. These logs integrate with audit systems and model risk management reports. This creates traceable oversight across the full content lifecycle. Enterprises scale GenAI in high-risk domains without losing compliance control.
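LLM judges are often paired with deterministic checks for rules that can be written down exactly, such as a required disclaimer. The sketch below is that rule-based complement, not an LLM judge. The disclaimer text, prohibited phrases, and digit-run pattern are placeholders a compliance team would replace.

```python
import re
from dataclasses import dataclass, field

# Placeholder policy content; real lists come from legal and compliance teams.
REQUIRED_DISCLAIMER = "past performance is not a guarantee of future results"
PROHIBITED_PATTERNS = [r"\bguaranteed returns?\b", r"\brisk[- ]free\b"]
# Very rough detector for long digit runs that could be account or card numbers.
SENSITIVE_NUMBER = re.compile(r"\b\d{12,19}\b")


@dataclass
class PrecheckResult:
    issues: list[str] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.issues


def compliance_precheck(text: str) -> PrecheckResult:
    """Run exact-rule checks; text that passes still goes to the LLM judge."""
    result = PrecheckResult()
    lowered = text.lower()
    if REQUIRED_DISCLAIMER not in lowered:
        result.issues.append("missing mandatory disclaimer")
    for pattern in PROHIBITED_PATTERNS:
        if re.search(pattern, lowered):
            result.issues.append(f"prohibited claim matched: {pattern}")
    if SENSITIVE_NUMBER.search(text):
        result.issues.append("possible account or card number")
    return result


draft = compliance_precheck("This fund offers guaranteed returns.")
approved = compliance_precheck("Returns vary. Past performance is not a guarantee of future results.")
```

Rule checks are cheap and fully predictable, which leaves the LLM judge to handle the nuanced questions of tone and interpretation.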

For regulated industries, LLM evaluation is shifting from optional monitoring to a core compliance expectation.

What Is the LLM-as-a-Judge Evaluation Framework for Enterprises?

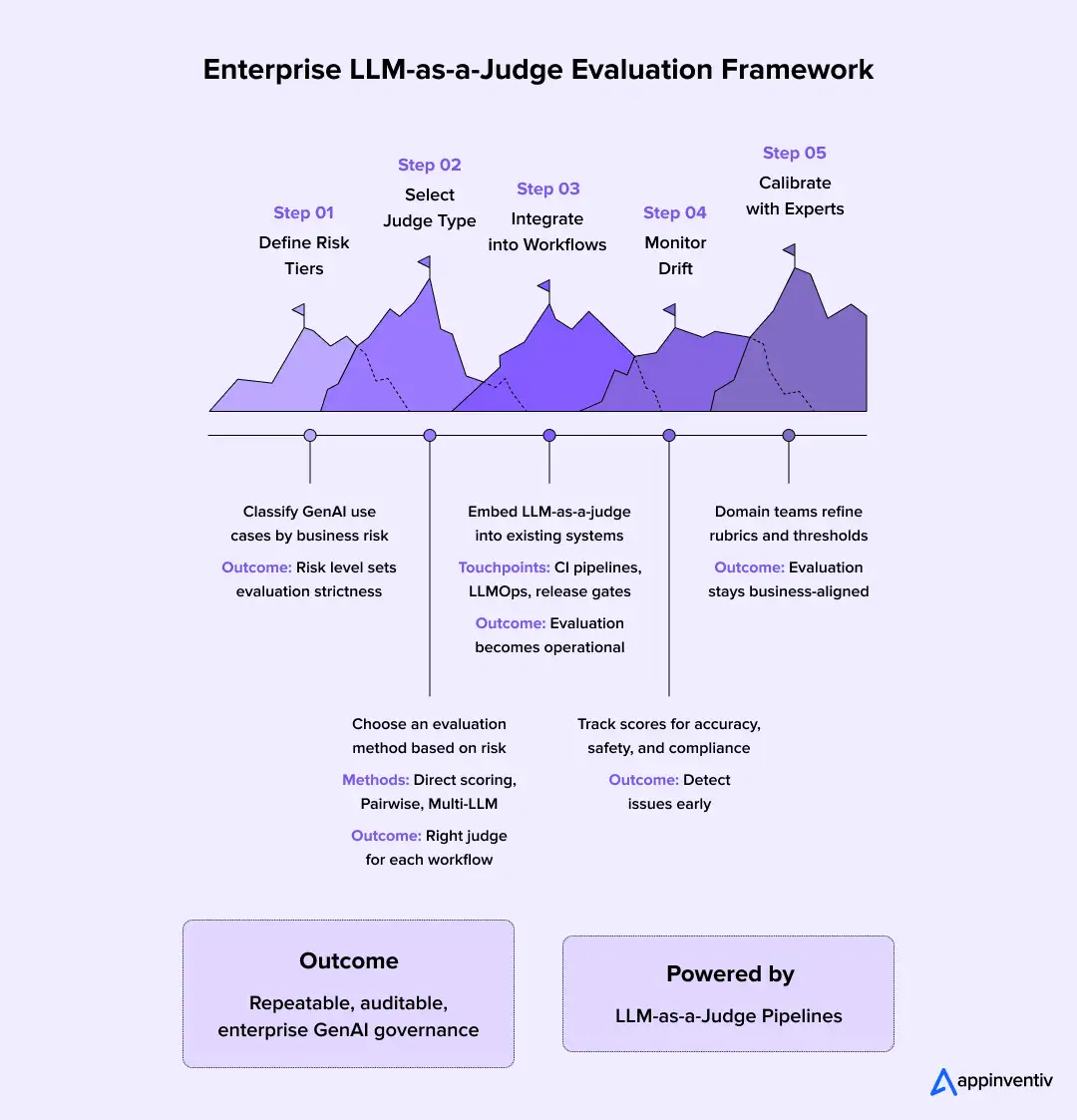

Most teams do not fail at GenAI because of models. They struggle because evaluation grows organically instead of by design. A clear enterprise LLM evaluation framework keeps quality, safety, and compliance aligned as GenAI expands across business units. It also prevents each team from inventing its own approach to LLM-as-a-judge pipelines.

A practical LLM-as-a-judge evaluation framework follows five core steps.

- Define risk tiers: Start by classifying GenAI use cases by business risk. Customer-facing chatbots, financial recommendations, and regulated content sit in higher risk tiers. Internal productivity tools and low-impact summaries sit lower. Risk tiers determine how strict your LLM evaluation system must be.

- Select judge type: Choose LLM-as-a-judge techniques based on risk. Direct scoring works for standard quality checks. Pairwise judges support model and prompt comparison. Multi-LLM evaluation strategies help reduce bias in high-risk or regulated workflows.

- Connect to workflows: Integrate the LLM-as-a-judge system into existing pipelines. Judge scores should feed CI pipelines, release approvals, and monitoring dashboards. This step makes LLM-as-a-judge model evaluation part of normal operations, not a side process.

- Monitor drift: Track evaluation scores over time. Drops in correctness, safety, or compliance often signal model drift, data changes, or retrieval issues. Continuous monitoring keeps LLM evaluation risks visible before they reach customers.

- Calibrate with experts: Human domain experts review edge cases and judge disagreements. Their feedback refines rubrics, judge prompts, and scoring thresholds. This keeps your enterprise LLM evaluation framework aligned with real business expectations.
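The risk-tier and judge-type steps can be captured as configuration that pipelines read at run time. Every use-case name, sample rate, and threshold here is a hypothetical example. Defaulting unclassified use cases to the strictest tier is one reasonable design choice, not the only one.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class EvalPolicy:
    judge_type: str             # "direct", "pairwise", or "multi_judge"
    sample_rate: float          # share of production traffic sent to the judge
    min_score: float            # release/alert threshold on a 1-5 scale
    human_review_on_fail: bool  # escalate failures to a domain expert


# Illustrative values only; each enterprise sets these with its risk owners.
POLICIES = {
    RiskTier.LOW: EvalPolicy("direct", 0.02, 3.5, False),
    RiskTier.MEDIUM: EvalPolicy("direct", 0.10, 4.0, True),
    RiskTier.HIGH: EvalPolicy("multi_judge", 1.00, 4.5, True),
}

USE_CASE_TIERS = {  # hypothetical use-case names
    "internal_summaries": RiskTier.LOW,
    "customer_chatbot": RiskTier.HIGH,
    "regulated_disclosures": RiskTier.HIGH,
}


def policy_for(use_case: str) -> EvalPolicy:
    # Unknown use cases default to the strictest tier until they are classified.
    return POLICIES[USE_CASE_TIERS.get(use_case, RiskTier.HIGH)]


print(policy_for("internal_summaries").judge_type)  # direct
```

Keeping this mapping in versioned configuration also gives auditors a record of which rules applied to which workflow at a given time.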

Once these steps are in place, evaluation becomes repeatable and auditable. Teams move faster because expectations are clear. Leadership gains confidence because LLM-as-a-judge pipelines now produce measurable evidence of control.

Integrating LLM Evaluation into Existing Workflows

Most enterprises already run CI pipelines, LLMOps dashboards, and governance reporting systems. Integrating LLM evaluation into existing workflows ensures LLM-as-a-judge scores flow directly into release gates, monitoring dashboards, and compliance reviews. Evaluation then becomes part of daily operations, not a parallel process. Effective judge deployment also depends on sound data governance frameworks for AI.
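As an example of a release gate, a CI job can fail the build when the judge pass rate on a test set drops below a minimum. This sketch assumes a hypothetical JSON Lines results file with one boolean `passed` field per line and a 95 percent default bar.

```python
import json
import sys


def release_gate(results_path: str, min_pass_rate: float = 0.95) -> int:
    """Return a process exit code: 0 if the judge pass rate meets the bar, 1 otherwise.

    Assumes a hypothetical JSON Lines file where each line holds one judge
    result with a boolean "passed" field (e.g. from an offline evaluation run).
    """
    with open(results_path, encoding="utf-8") as handle:
        results = [json.loads(line) for line in handle if line.strip()]
    if not results:
        print("release gate: no evaluation results found", file=sys.stderr)
        return 1  # no evidence means no release
    pass_rate = sum(1 for r in results if r["passed"]) / len(results)
    print(f"release gate: pass rate {pass_rate:.1%} (minimum {min_pass_rate:.1%})")
    return 0 if pass_rate >= min_pass_rate else 1


# Usage in CI: python release_gate.py eval_results.jsonl
if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(release_gate(sys.argv[1]))
```

A nonzero exit code is enough for most CI systems to block the deployment step that follows.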

What Are the Business Risks and LLM-as-a-Judge Challenges?

Even the strongest evaluation layer introduces its own LLM evaluation risks. If these risks stay invisible, enterprises can build confidence in the wrong signals. This section highlights the business risks of LLM-as-a-Judge that C-suite leaders must keep on their radar.

LLM-as-a-judge challenges include:

False Confidence

Teams can start optimizing outputs to score well with the judge instead of serving real user needs. This creates clean dashboards while customer experience or decision quality quietly suffers. Without linking judge scores to business KPIs, LLM-as-a-Judge can reinforce the illusion of control rather than real governance.
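One guard against false confidence is to periodically compare judge verdicts with expert labels on the same sample. The sketch below computes Cohen's kappa, a standard chance-corrected agreement statistic. What counts as acceptable agreement is a judgment call for your risk owners, and the sample labels are made up for illustration.

```python
from collections import Counter


def cohens_kappa(judge: list[str], human: list[str]) -> float:
    """Chance-corrected agreement between judge verdicts and expert labels."""
    if len(judge) != len(human) or not judge:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(judge)
    observed = sum(j == h for j, h in zip(judge, human)) / n
    judge_counts, human_counts = Counter(judge), Counter(human)
    # Agreement expected if both raters labeled at random with their own label rates.
    expected = sum(judge_counts[label] * human_counts[label] for label in judge_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


judge_verdicts = ["pass", "pass", "fail", "pass", "fail", "pass"]
expert_labels = ["pass", "fail", "fail", "pass", "fail", "pass"]
kappa = cohens_kappa(judge_verdicts, expert_labels)  # about 0.67
```

Raw agreement here is 5 of 6, but kappa is lower because some of that agreement would occur by chance. Tracking kappa over time shows whether the judge still reflects expert judgment.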

Bias In Evaluation

A judge model inherits the bias patterns of its underlying LLM. If left unchecked, biased evaluation becomes embedded in approval workflows and policy enforcement. Over time, this can create fairness, compliance, and reputational risks that are difficult to trace back to the LLM-as-a-judge system. Enterprises must pair judge systems with practices for reducing bias in AI models.

Data Exposure

LLM-as-a-Judge pipelines often process customer messages, internal documents, and sensitive policy data. If evaluation traffic leaves controlled environments, enterprises introduce new data privacy and sovereignty risks. Regulated industries must design judge deployments that meet security, compliance, and audit requirements from day one.
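Where evaluation traffic must leave a controlled environment, one mitigation is to redact obvious identifiers first. The regular expressions below are rough illustrations that will miss many PII formats. Production systems typically rely on vetted detection tooling and locale-specific rules.

```python
import re

# Illustrative patterns only. Order matters: card numbers are replaced
# before the broader phone pattern can claim the same digits.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),  # 13-16 digits, optional separators
    ("PHONE", re.compile(r"\+?\d[\d -]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace matches with typed placeholders before text leaves your environment."""
    for label, pattern in REDACTION_RULES:
        text = pattern.sub(f"[{label}]", text)
    return text


sample = "Contact jane.doe@example.com or +1 555 010 2000, card 4111 1111 1111 1111."
print(redact(sample))  # Contact [EMAIL] or [PHONE], card [CARD].
```

Typed placeholders keep enough structure for the judge to assess tone and policy handling without seeing the underlying data.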

These challenges align with broader enterprise AI risk management priorities.


What Is the ROI of LLM-as-a-Judge for Enterprises?

For most enterprises, the ROI of LLM-as-a-Judge is not just efficiency. It also comes from avoiding GenAI failures that can trigger regulatory penalties, customer churn, and delayed digital transformation programs.

Most executives do not invest in evaluation because it sounds innovative. They invest because unmanaged GenAI creates hidden costs. Support escalations. Compliance reviews. Rework cycles. Brand risk. LLM-as-a-judge changes that equation by turning evaluation into a predictable operating cost instead of an unpredictable liability.

The ROI of LLM-as-a-judge for enterprises shows up in two ways. Hard savings you can measure. Soft gains that unlock scale.

Hard ROI

These are measurable gains that show up in operational metrics and budget planning.

- Reduced manual review hours by replacing spreadsheet-based QA with automated LLM-as-a-judge evaluation

- Faster model and prompt testing cycles through continuous LLM evaluation pipelines

- Lower customer escalation and complaint rates by catching hallucinations and policy drift early

- Fewer compliance remediation efforts through consistent LLM-as-a-judge scoring

- More stable production performance through ongoing monitoring of LLM evaluation signals

Soft ROI

These gains remove friction in decision-making and create confidence to scale GenAI safely.

- Higher confidence from risk and compliance teams through transparent LLM-as-a-judge evaluation frameworks

- Faster executive approvals for new GenAI rollouts due to auditable governance signals

- Stronger cross-team alignment through shared LLM-as-a-judge techniques and scoring standards

- Reduced business risk from LLM-as-a-judge misuse through clearly defined ownership and thresholds

- Greater readiness to scale advanced workflows through multi-LLM evaluation strategies

In the end, enterprises see the real return when GenAI programs move faster with fewer surprises. Evaluation stops being a cost center and becomes a control layer that protects growth.

How Mature Is Your Enterprise LLM Evaluation Strategy?

As generative AI moves from pilot to production, most enterprises struggle to objectively measure whether their evaluation and governance frameworks are built to scale safely. Use the questions below to assess the maturity of your LLM evaluation strategy:

- Do you measure GenAI output quality using standardized, repeatable scoring frameworks (accuracy, hallucination rate, bias, toxicity), or rely on ad-hoc human reviews?

- Are LLM-as-a-Judge evaluations directly linked to business KPIs such as customer satisfaction, operational efficiency, or risk reduction, rather than isolated technical metrics?

- Can compliance and risk teams access audit-ready evaluation logs on demand to support regulatory reviews, internal audits, and model accountability?

- Do your CI/CD and deployment pipelines include automated evaluation gates that prevent low-quality or non-compliant model outputs from reaching production?

- Are GenAI use cases clearly tiered by risk level, with differentiated evaluation rigor for customer-facing, regulated, and decision-critical workflows?

Build vs Buy vs Custom LLM-as-Judge Development

Most enterprises already run GenAI on cloud platforms or internal AI stacks. When adding an LLM-as-a-judge system, the decision comes down to three paths: buy existing tools, build on open frameworks, or invest in custom LLM-as-Judge development.

Each option supports a different level of control, risk, and operational effort.

Quick view comparison

| Approach | Best fit for | Strength | Limitation |
|---|---|---|---|
| Buy platform tools | Low to medium risk use cases | Fast setup and easy integration into existing workflows | Limited domain-specific control |
| Build on open frameworks | Internal copilots and regulated data flows | Flexible enterprise LLM evaluation framework | Requires in-house AI and LLMOps ownership |
| Custom LLM-as-Judge development | High-risk and compliance-critical systems | Full control and auditable judge behavior | Higher build and maintenance effort |

Organizations with internal AI teams often start by learning how to build AI models before developing custom judge systems.

Choosing The Right Path

Buying works when speed matters and standard evaluation is sufficient. Building on open frameworks fits when you need custom rubrics and tighter data control. Custom LLM-as-Judge development becomes essential when compliance, auditability, and risk reduction are critical.

Many enterprises adopt a hybrid model. Platform tools handle low-risk workflows. Open frameworks support internal evaluation. Custom judges protect sensitive operations.

For organizations pursuing advanced customer-facing or regulated use cases, working with a top AI development company like Appinventiv helps design and deploy custom LLM-as-Judge systems that align with enterprise governance and scale requirements.

How Do Enterprises Measure Success with LLM-as-a-Judge?

At some point, your team will ask a direct question. Is this evaluation layer actually working? Measuring success with LLM-as-a-judge means tracking whether automated scoring improves GenAI quality, reduces risk incidents, shortens review cycles, and supports compliance reporting. The signal is simple. Better control with measurable business outcomes.


How Does Appinventiv Help Enterprises Operationalize LLM-as-a-Judge?

Most enterprises do not struggle with GenAI because they lack models. They struggle because they cannot prove those models behave safely at scale. That gap slows adoption and keeps leadership cautious. A trusted LLM-as-a-judge evaluation layer changes this.

At Appinventiv, we see this challenge across real deployments. In recent enterprise GenAI programs, LLM-as-a-judge systems reduced manual QA cycles by up to 80 percent. Teams moved from reviewing scattered samples to continuous evaluation tied to compliance and risk thresholds. Rollouts became faster, and governance stayed intact.

Our experience comes from delivering 300+ AI-powered solutions through our custom AI development services. We build and deploy GenAI systems, fine-tuned LLMs, and LLM-as-a-judge pipelines across BFSI, healthcare, retail, logistics, and the public sector. Each solution is designed to fit cloud, hybrid, or private environments where data control matters.

We help enterprises:

- Identify high-risk GenAI workflows

- Define evaluation criteria and rubrics

- Build and integrate LLM-as-a-judge pipelines

- Connect judge outputs to quality and risk dashboards

- Set governance and escalation ownership

The outcome is clear. GenAI systems improve faster. Risks surface earlier. Leadership gains confidence to scale automation with control.

If your team is exploring how to bring structure to GenAI evaluation, a short conversation can help clarify the right starting point. Let’s connect.

FAQs

Q. How can LLMs evaluate other AI systems?

A. LLMs can act as evaluators by scoring or comparing outputs from other models against predefined criteria such as accuracy, safety, reasoning quality, tone, or policy alignment. They use structured rubrics designed by your business teams and return judgments that are far more consistent and repeatable at scale than ad-hoc manual review. This turns subjective review into measurable signals leaders can track.

Q. Why should C-Suite leaders pay attention to LLM-as-a-Judge?

A. Because GenAI now influences customers, advisors, and internal decision flows. Without a reliable evaluation layer, leaders risk scaling systems they can’t fully explain or control. LLM-as-a-judge provides the oversight required to manage quality, reduce exposure, and justify large-scale deployment to boards and regulators.

Q. What are the business risks of using LLMs for decision-making?

A. The main business risks of using LLMs for decision-making include hallucinations, policy drift, biased responses, inconsistent reasoning, and compliance violations that only surface after rollout. These failures can lead to reputational damage, regulatory scrutiny, and financial exposure. Evaluation gaps, more than model flaws, usually allow these issues to reach production.

Q. How can enterprises leverage LLM-as-a-Judge while ensuring governance?

A. Start with clear rubrics, risk-tiered evaluation rules, and accountable owners. Integrate judge scores into CI/CD pipelines, release gates, and drift monitoring. Keep human experts involved in calibration and critical decisions. Finally, ensure all judge behavior is versioned, auditable, and aligned with enterprise risk frameworks.

Q. How Do Enterprises Start Implementing LLM-as-a-Judge Without Disrupting Existing AI Systems?

A. Enterprises usually start by adding LLM-as-a-Judge as an evaluation layer alongside existing GenAI applications instead of replacing them. They begin with high-risk or customer-facing AI workflows, define evaluation criteria like accuracy and compliance, and integrate automated scoring into monitoring or release pipelines.


Q. What ethical issues should executives consider before adopting LLM judgment systems?

A. Executives must consider bias propagation, fairness across user groups, transparency of decision logic, and the potential for over-reliance on automated scoring in the LLM-as-a-judge system. They should also evaluate data privacy, consent, and how evaluation outputs influence real-world decisions. Ethical oversight must be designed in, not added later.

Q. How can Appinventiv help evaluate and implement safe LLM judgment frameworks?

A. Appinventiv helps enterprises build structured evaluation using the LLM-as-a-judge system by designing custom rubrics, integrating judge models across cloud and on-prem environments, calibrating scores with domain experts, and embedding governance into existing risk processes. With experience across 300+ AI solutions and 35+ industries, our team ensures your LLM judgment framework is safe, scalable, and aligned with your business and regulatory requirements.
