- What is Enterprise AI Governance?

- What Makes AI Governance a Leadership Problem, Not a Technical One?

- Exercise: AI Presence & Exposure Mapping

- What Effective AI Governance Looks Like in Large Organizations?

- Exercise: Current-State AI Governance Mapping

- How to Define Ownership and Decision Rights Across the AI Lifecycle?

- Exercise: AI Decision Rights Definition

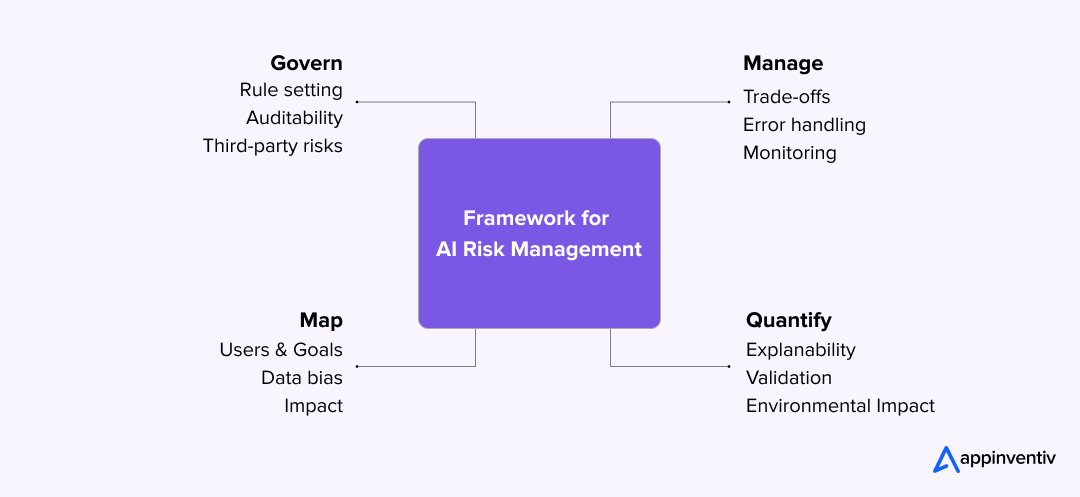

- Managing AI Risk Beyond One-Time Compliance Reviews

- Exercise: AI Risk Prioritization Matrix

- Using Guardrails to Control High-Impact AI Systems

- Exercise: Guardrails Definition Canvas

- When and How to Apply Red-Teaming and Stress Testing?

- Exercise: AI Stress Scenario Review

- Why Observability Is Essential for Ongoing AI Governance?

- Exercise: Observability Expectations Definition

- Auditability, Traceability, and Evidence

- Exercise: AI Audit Walkthrough

- Building Responsible AI Without Moral Fatigue

- Exercise: Values-to-AI Constraints Mapping

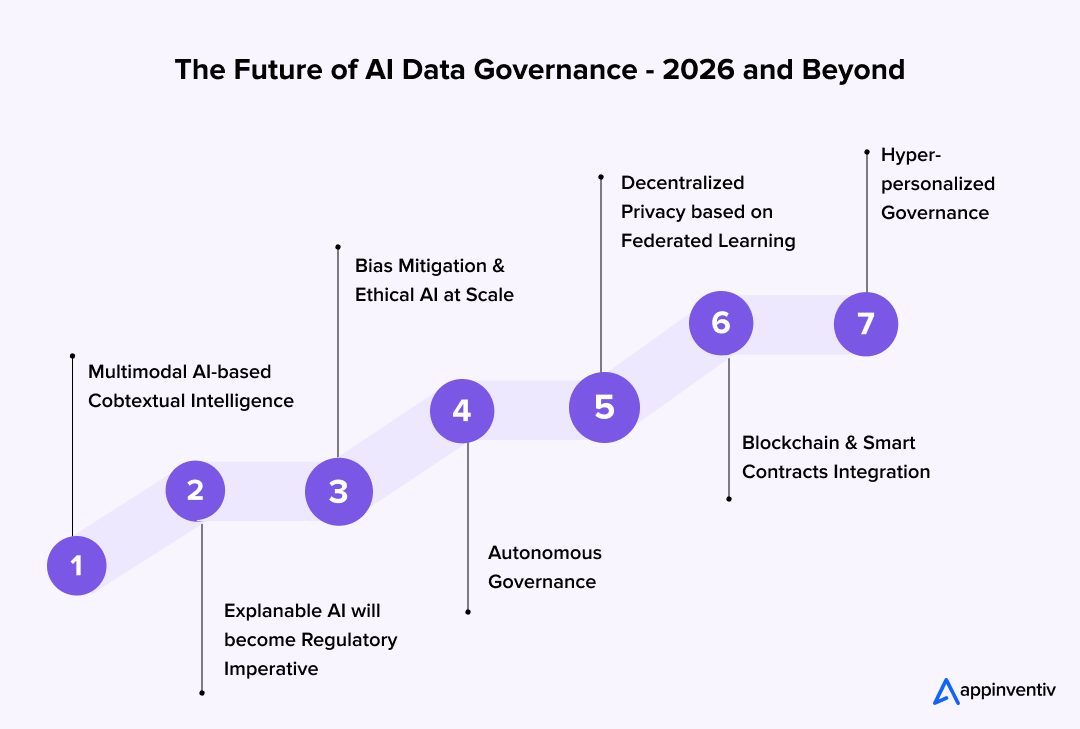

- What Enterprise AI Policies Must Address by 2026?

- Exercise: AI Policy Coverage Review

- Conclusion

- Toolkit for AI Governance Template for Enterprises

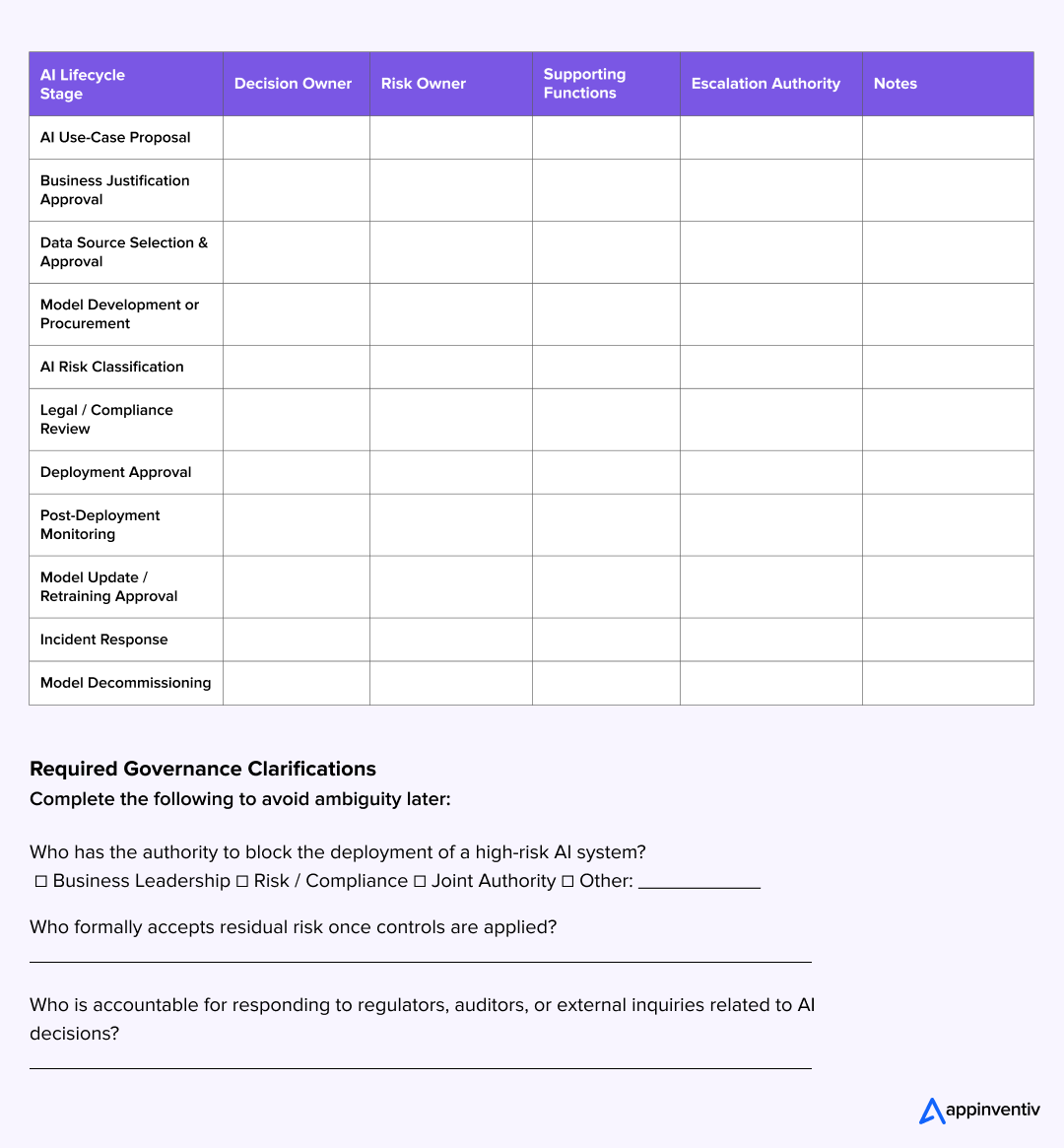

- Template 1: AI Governance Responsibility Matrix

- How to Use This Template

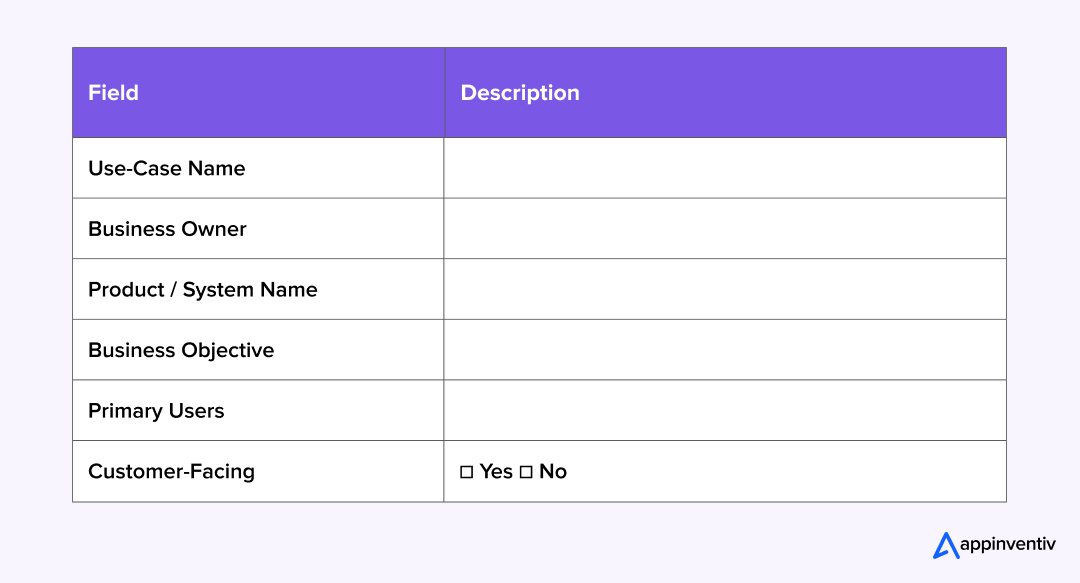

- Template 2: AI Use-Case Intake & Review Template

- When to Use This Template

- Template 3: Enterprise AI Risk Register

- How to Use This Template

- Template 4: Guardrails Definition Template

- When to Use This Template

- AI Guardrails Definition

- Template 5: Red-Team Scenario Library

- How to Use This Template

- Template 6: AI Observability Checklist

- How to Use This AI Risk Management Checklist for Executives Template

- AI Observability Checklist

- Template 7: Model Audit Trail Example

- Model Audit Trail (Example Structure)

- Template 8: AI Policy Starter Pack

- Core AI Policy Set

- Policy Design Principles

- Governance Note

- FAQs

Key takeaways:

- AI governance fails most often due to unclear ownership and decision rights, not missing tools or intent.

- Effective AI governance operates as an enterprise operating model, not a one-time policy or committee structure.

- AI risk must be managed as an ongoing concern, since model behavior, data, and usage evolve after deployment.

- Proportional governance matters: high-impact, autonomous systems require stronger controls than low-risk, advisory use cases.

- Guardrails, observability, and auditability are essential to maintain oversight once AI systems are live.

- Responsible AI becomes sustainable only when values are translated into enforceable constraints and decision rules.

- Organizations that treat AI governance as decision infrastructure scale AI faster, with fewer surprises and lower exposure.

AI adoption inside large organizations didn’t wait for governance, risk, and compliance structures to catch up. The cost of this gap is now measurable: 65% of AI programs fail to scale beyond pilots, and organizations without clear governance frameworks spend 40-60% more on remediation, rework, and incident response than those with structured oversight in place.

Models started showing up in products, workflows, and decision systems across business units, often quietly. Some were built internally. Others came in through third-party tools or vendor platforms. By 2026, the average enterprise has 50-100 AI systems in operation, yet only 30% of CIOs can inventory them all, and fewer than 20% can explain who owns the risk each system creates.

One consequence of this organic adoption is that many organizations do not have a complete view of where AI is actually in use. Models may be embedded in vendor platforms, deployed within individual teams, or repurposed over time without being formally tracked. This creates what is often referred to as “shadow AI” – systems that influence decisions without clear visibility, ownership, or oversight.

In practice, the first step toward effective AI data governance is not policy or tooling, but visibility. A centralized inventory of AI models and AI-enabled systems provides a factual starting point: what exists, where it is used, what decisions it influences, and who owns it. Without this baseline, governance efforts tend to operate on assumptions rather than reality.

Organic adoption works, up to a point. It delivers value quickly. But it also introduces a different kind of risk. AI systems don’t behave like traditional software. They change as data changes. Context matters more than expected. Outputs can be hard to predict and, later, hard to explain. When these systems start influencing customer experiences, employee decisions, or regulated processes, gaps in AI governance and risk management stop being theoretical. They turn into business issues.

At the same time, expectations have shifted. Regulators ask tougher questions. Auditors do the same. Boards are no longer satisfied with high-level assurances. They want to know who approved an AI system, why it was deployed, how it’s being monitored, and what happens if it produces the wrong outcome. Answering those questions consistently is difficult without something more concrete than informal reviews or one-off approvals.

This is where a working risk management framework becomes necessary. Treating AI risk as something that’s resolved at deployment doesn’t match reality. Risk evolves. Data changes. Usage expands. People rely on outputs in ways that weren’t anticipated. Without a framework that accounts for this, organizations end up responding to incidents instead of staying ahead of them.

An effective AI governance framework isn’t a single committee or a policy that lives in a shared folder. It shows up in day-to-day decisions. Who can approve a use case? Who accepts risk when controls aren’t perfect? Who is accountable once a system is live and behaving differently than expected? When those points aren’t clear, governance exists on paper but has very little influence on outcomes.

This executive guide to AI governance is written for organizations that recognize this gap and want to address it without defaulting to heavy-handed control. The intent isn’t to slow teams down or put approval gates in front of every model. It’s to create clarity around decisions, apply proportional risk management, and make accountability workable as AI use spreads across the enterprise.

Rather than focusing on tools or regulations in isolation, the guide takes an enterprise view of AI governance and risk management. It looks at how ownership is actually defined, how risks are prioritized in practice, how guardrails and monitoring are applied to high-impact systems, and how organizations prepare for audit and regulatory scrutiny without turning governance into bureaucracy. The emphasis is on what holds up in real, complex environments, not what looks neat on paper.

The enterprise AI governance guide is structured to support both understanding and action. Each section provides clear context on why a particular aspect of governance matters, followed by a focused exercise designed for cross-functional teams. These exercises are not theoretical discussions, but are meant to produce alignment and tangible outputs that organizations can build on. All supporting AI governance templates for enterprises are consolidated at the end of the guide so they can be shared and adapted internally.

This is neither a compliance checklist nor a technical manual. It is a practical enterprise AI governance guide for leadership teams that want to scale AI responsibly, balancing speed with control, innovation with accountability, and ambition with trust. As expectations around AI governance continue to rise toward 2026 and beyond, the organizations that succeed will be those that treat governance as decision infrastructure, not as an afterthought.

This guide is designed for leadership teams who need to make defensible decisions about AI before regulators, auditors, customers, or boards ask the questions first.


What is Enterprise AI Governance?

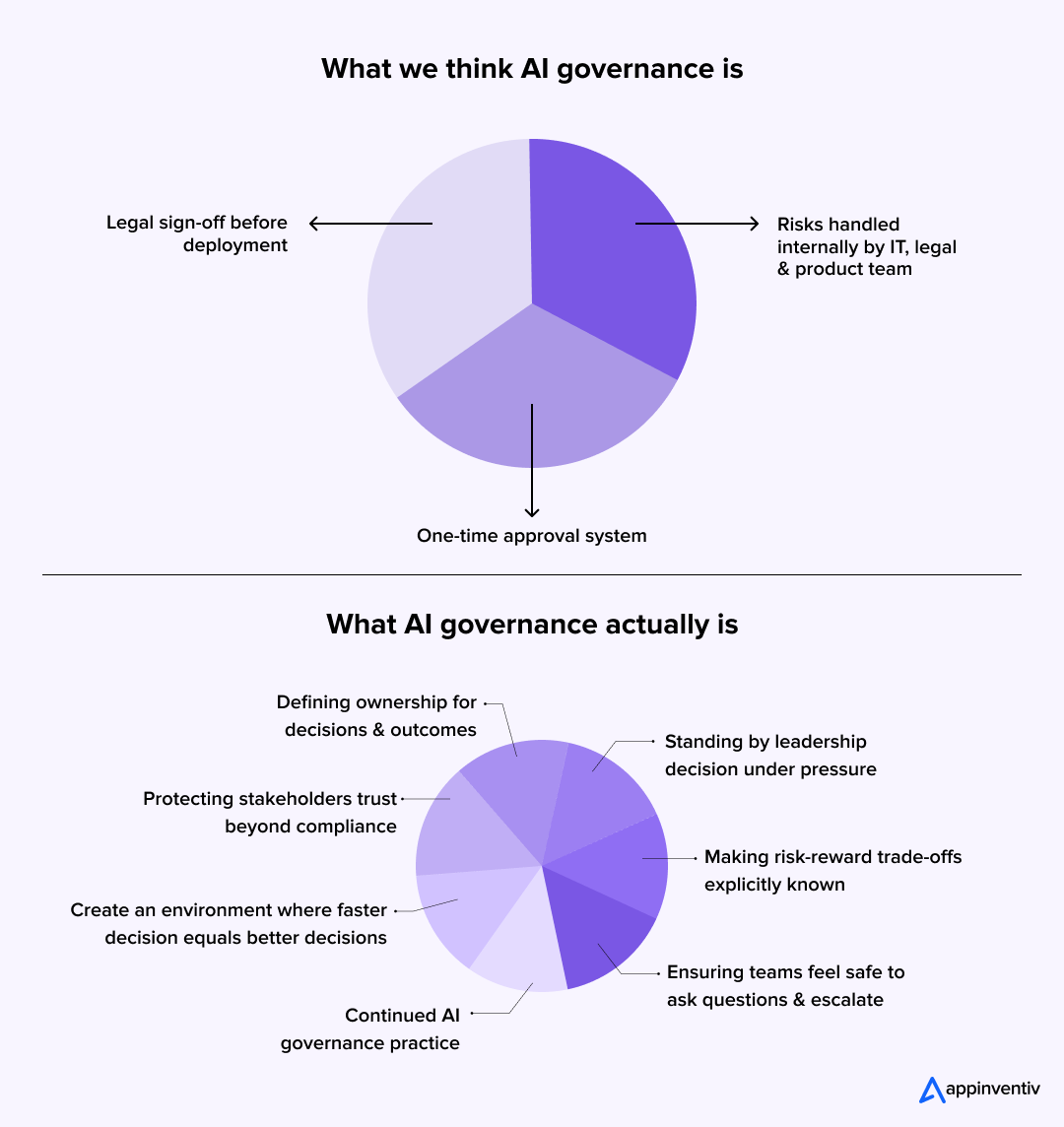

Enterprise AI governance is the set of decision-making structures, controls, and oversight mechanisms that organizations use to manage how AI systems are designed, deployed, monitored, and held accountable at scale. It ensures that AI use aligns with business objectives, risk tolerance, regulatory expectations, and operational realities throughout the AI lifecycle.

Most enterprises today sit somewhere between experimentation and dependence on AI, without having made a deliberate transition in how accountability is managed. AI adoption often begins informally, driven by speed and local problem-solving. Over time, these systems become embedded in critical decisions, long before governance structures are adapted to match their influence.

The shift required is not from “no AI” to “more AI,” but from ad-hoc AI to governed AI. In the ad-hoc state, ownership is unclear, risk is implicit, and accountability is often assigned only after an incident occurs. In a governed state, AI use is intentional: systems are inventoried, decision rights are defined, risk is assessed proportionally, and oversight continues after deployment.

This guide is designed to support that accountability transition. Each section addresses a specific capability required to move from informal adoption to AI that can be confidently explained, defended, and scaled as expectations rise toward 2026.

What Makes AI Governance a Leadership Problem, Not a Technical One?

AI governance is often framed as a technical challenge because AI systems are built, trained, and deployed by engineering teams. In practice, the consequences of AI decisions rarely remain technical. Once models start influencing pricing, eligibility, recommendations, customer communication, hiring, credit assessment, or operational prioritization, their impact is business-critical. These outcomes affect revenue, customer trust, regulatory exposure, and brand reputation, all areas that sit squarely within leadership responsibility.

Traditional governance models were designed for software systems that behaved predictably once deployed, but several C-suite AI governance handbooks highlight how AI systems behave differently. They learn from data, adapt to new inputs, and can produce outputs that are difficult to fully anticipate or explain after the fact. This introduces a new class of risk that cannot be managed solely through code reviews or technical testing. It requires decisions about acceptable behavior, tolerance for error, and accountability when outcomes fall outside expectations.

In many enterprises, AI adoption has not followed a single, deliberate strategy. Instead, it has grown organically: product teams deploy recommendation engines to improve engagement, operations teams use predictive models to optimize workflows, business functions rely on analytics and scoring systems to prioritize leads, tickets, or customers, and vendors increasingly embed AI capabilities into platforms that enterprises adopt for unrelated reasons. Over time, these systems accumulate influence over decisions without being collectively recognized or governed as “AI.”

This is where leadership blind spots emerge. When AI usage is fragmented across teams and tools, accountability becomes unclear, and decisions are influenced by models that no single executive can fully inventory or explain. In the absence of explicit governance, risk is managed implicitly, often only when something goes wrong. By that point, organizations are forced into a reactive mode, responding to incidents, audits, or public scrutiny rather than demonstrating foresight and control.

An effective AI governance framework does not require leaders to understand model architectures or training techniques. It requires clarity on three fundamentals:

- Who decides when AI should be used

- What level of risk is acceptable for different types of decisions

- How the organization ensures ongoing oversight once systems are live

These are leadership decisions, not engineering ones. Just as financial controls or cybersecurity frameworks set boundaries within which teams operate, AI governance and risk management define the conditions under which AI can be safely and confidently scaled.

Without leadership ownership, AI compliance risk management tends to oscillate between two extremes: excessive control that slows delivery, or minimal oversight that allows risk to accumulate unnoticed. The role of leadership is to establish a balanced framework – one that recognizes where AI adds value, where it introduces material risk, and how accountability is maintained across the organization. Building this foundation into your AI risk management checklist for executives is essential before policies, guardrails, or technical controls can be applied meaningfully.

Exercise: AI Presence & Exposure Mapping

As a group, create a simple, shared view of where AI is currently used across the organization. This can be done on a whiteboard, shared document, or slide. Begin with systems that are clearly AI-driven, such as customer chatbots, recommendation engines, forecasting models, or fraud detection tools. Then widen the lens.

Many teams uncover additional examples once the discussion starts: an internal system that ranks leads or support tickets, a marketing platform that automatically segments users, a vendor tool that prioritizes tasks using machine learning, or an HR system that scores candidates or flags profiles.

For each instance, note what decision or outcome the AI influences and whether it operates in an advisory capacity or triggers actions automatically. The aim is not to catalog every model in detail, but to build a credible picture of where AI is shaping outcomes across the business.
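For teams that prefer to capture this view in a structured form rather than on a whiteboard, the mapping could be recorded as a small list of entries. The sketch below is only an illustration: the `AISystemEntry` fields, the `Mode` labels, and the example systems are assumptions made for this example, not a prescribed schema.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    ADVISORY = "advisory"    # a human acts on the output
    AUTOMATED = "automated"  # the system triggers actions itself


@dataclass
class AISystemEntry:
    name: str
    decision_influenced: str
    mode: Mode
    source: str = "internal"  # hypothetical labels: "internal" or "vendor"


# Illustrative entries only; these are not real systems.
inventory = [
    AISystemEntry("Support ticket ranking", "Which tickets are handled first", Mode.ADVISORY),
    AISystemEntry("Fraud detection", "Whether a transaction is blocked", Mode.AUTOMATED, "vendor"),
    AISystemEntry("Candidate scoring", "Which profiles recruiters review", Mode.ADVISORY, "vendor"),
]


def automated_systems(entries):
    """Return the names of systems that trigger actions without human review."""
    return [e.name for e in entries if e.mode is Mode.AUTOMATED]


print(automated_systems(inventory))
```

Keeping the advisory/automated distinction as an explicit field makes it easy to pull out the systems that act on their own, which tend to be the ones that deserve attention first.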

How to measure effectiveness

The exercise is effective if it surfaces AI usage that was not previously visible or explicitly acknowledged. A useful signal is when participants realize that a system they viewed as “just software” is actively influencing decisions, or when they discover automation they were not aware of. If the final list feels broader or more impactful than expected, the mapping has done its job.

Next steps

Use this initial view as the starting point for prioritization. In the next sections, this list will be refined to identify which AI systems carry the highest business and regulatory exposure and therefore require clearer ownership and stronger governance controls.

What Effective AI Governance Looks Like in Large Organizations?

In large organizations, AI governance and risk management usually don’t fail because people don’t care. They fail because governance is treated as something static. Committees exist. Policies are written. Approval steps are defined. But day-to-day AI decisions continue to happen through informal paths that change faster than any framework.

Effective AI governance works as an operating model, not a structure. It is not one committee or one document. It is a set of linked mechanisms that influence how decisions are made. This includes executive direction on risk appetite, named ownership across the AI lifecycle, controls that reflect actual risk, and monitoring after systems are in use. When these elements connect, governance becomes part of normal operations instead of something teams work around.

At enterprise scale, cross-border AI data governance adds another layer of complexity. Large organizations are rarely centralized. They operate across business units, regions, shared platforms, and third-party vendors. Fully centralized governance often creates delays. Fully decentralized governance leads to inconsistency and unmanaged risk. In practice, workable models combine central alignment on standards and accountability with local decision-making based on context.

A common assumption about governing generative AI in the enterprise is that governance slows innovation. What usually slows teams down is uncertainty. When ownership is unclear and approval expectations are vague, work stalls or gets reworked. Clear governance reduces this friction. Teams know which decisions they can make, which ones need review, and what evidence is required. That clarity makes it easier to move forward without reopening the same questions.

Another defining feature of an effective AI governance framework is what happens after deployment. Approval-heavy models assume risk is mostly handled upfront. AI systems don’t behave that way. Data changes. Usage expands. Models get updated.

Governance at scale needs to focus on monitoring, accountability, and review over time. Without that, governance exists on paper but offers little protection.

Understanding what good governance looks like only helps if it reflects reality. Before adding new structures or controls to an AI governance maturity model, organizations need to see how AI decisions are actually being made today. That’s usually where the gap between intent and practice becomes clear.

Exercise: Current-State AI Governance Mapping

Map how AI-related decisions are made in practice today. Start with a recent or typical AI initiative and trace its path from idea to deployment. Capture where the idea originates, who reviews it, when risk or compliance becomes involved (if at all), and who ultimately decides that the system can go live. Include informal steps, side conversations, and exceptions, not just formal approvals. The objective is to document reality, not the process as it is written down.

How to measure effectiveness

The exercise is effective if the group can clearly see inconsistencies, shortcuts, or decision points that are not formally defined. A strong indicator is agreement around statements like, “This isn’t how the process is documented, but it’s how decisions actually happen,” or “This approval depends on who is involved rather than what the system does.”

Next steps

Use this current-state view as the baseline for improvement. In the next section, this understanding will be used to define clear ownership and decision rights across the AI lifecycle, addressing the gaps and ambiguities that surfaced during the mapping.

How to Define Ownership and Decision Rights Across the AI Lifecycle?

AI governance and risk management often fail because ownership is unclear, not because controls are missing. Responsibility is frequently described in broad terms such as “the AI team,” “IT,” or “the business.” That language does not identify who actually makes decisions or who is accountable when outcomes are poor. This ambiguity creates risk well before any technical issue appears.

AI systems move through a set of stages. A use case is proposed. A model is built or purchased. Data is selected. Deployment is approved. The system then operates over time. Each stage requires different decisions. Some decisions are about whether AI should be used at all. Others are about risk acceptance. Others relate to who monitors the system and responds when behavior changes.

When ownership is not defined at each stage, decisions default to convenience. Teams proceed without approval because no decision owner is clear. In other cases, work slows down because several stakeholders believe they share responsibility. This leads to repeated reviews and informal escalation. Over time, this creates delivery friction and governance gaps.

Effective AI governance framework implementation requires separating three roles that are often mixed. One role builds or sources the system. Another approves its use. A third owns the risk created by its outcomes. These roles do not need to sit with the same function, but they must be named. Decision authority also changes over the lifecycle. Approval at the idea stage may sit with business leadership. Risk acceptance at deployment may involve compliance or legal functions. Ongoing accountability often sits with a product or platform owner.

Clear ownership does not increase control. It reduces unnecessary escalation. Teams move faster when they know which decisions they can make and which require review. Leaders have more confidence when accountability is visible. When issues arise, the organization does not have to debate responsibility during the incident.

Defining ownership and decision rights is a basic requirement in any C-suite AI governance handbook. Without it, guardrails, monitoring, and policies remain theoretical. With it, governance can be applied in practice.

Exercise: AI Decision Rights Definition

Create a simple table covering key stages of the AI lifecycle, such as use-case approval, risk sign-off, deployment approval, ongoing monitoring, and incident response. For each stage of the AI governance implementation, name who has decision authority and who owns the associated risk. Use specific roles or functions rather than broad groups. Where disagreements arise, resolve them during the session rather than deferring them.

How to measure effectiveness

The exercise is effective when each lifecycle stage has a clearly named owner, and there is shared agreement across participants. Signals that more work is needed include vague ownership, overlapping authority, or reliance on informal escalation rather than defined decision rights.
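As one possible way to sanity-check the table after the session, the sketch below flags lifecycle stages that have no named owner or that rely on a broad group label. The role names, the `VAGUE_OWNERS` set, and the `find_gaps` helper are hypothetical and exist only for this illustration.

```python
# Lifecycle stages named in the exercise above.
STAGES = [
    "use-case approval",
    "risk sign-off",
    "deployment approval",
    "ongoing monitoring",
    "incident response",
]

# Broad labels the guide warns against; treating them as "vague" is an assumption.
VAGUE_OWNERS = {"the ai team", "it", "the business"}

# Hypothetical assignments: stage -> (decision authority, risk owner).
decision_rights = {
    "use-case approval": ("Head of Product", "Business Unit Lead"),
    "risk sign-off": ("Chief Risk Officer", "Chief Risk Officer"),
    "deployment approval": ("IT", "Business Unit Lead"),
    "ongoing monitoring": ("Platform Owner", "Platform Owner"),
}


def find_gaps(rights):
    """List stages with no named owner or with a vague, group-level owner."""
    gaps = []
    for stage in STAGES:
        if stage not in rights:
            gaps.append((stage, "no owner named"))
            continue
        for role in rights[stage]:
            if role.strip().lower() in VAGUE_OWNERS:
                gaps.append((stage, f"vague owner: {role}"))
    return gaps


for stage, issue in find_gaps(decision_rights):
    print(f"{stage}: {issue}")
```

In this made-up example, deployment approval is flagged because "IT" is a function rather than a named role, and incident response is flagged because nobody was assigned at all.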

Next steps

Use the agreed ownership model to complete a formal responsibility matrix using the toolkit template. This matrix will serve as a reference point for evaluating AI use cases, managing risk, and responding to incidents in later stages of governance implementation.


Managing AI Risk Beyond One-Time Compliance Reviews

AI risk is often treated as a compliance question: does the system meet a defined set of rules at the time of deployment? That approach works for traditional software, but it does not hold up well for AI. AI systems change over time. Data changes. Usage expands. Outputs start influencing decisions more than originally intended. A system that looks low-risk at launch can become higher risk later without any formal change being made.

Treating AI governance in enterprise security as a one-time review creates a false sense of safety. Approval does not mean risk is resolved. It marks the point where risk begins to evolve. Models drift. Data distributions shift. Edge cases appear only after real-world use. In customer-facing or decision-critical systems, these changes can lead to misleading outputs, biased outcomes, automation errors, or unexpected regulatory exposure.

Because of this, AI risk assessment and management cannot be handled as a static compliance exercise. Risk needs to be treated as ongoing and revisited over time. That also means moving away from applying the same controls to every AI system, since uniform controls slow teams down where risk is low and leave gaps where risk is high.

Proportionality is central to an effective AI governance framework. The level of governance applied should reflect both the potential impact of failure and the degree of autonomy the system has. Systems that support low-stakes decisions carry a different risk profile than systems that automate or materially influence customer, financial, or regulated outcomes. Making this distinction allows attention and effort to be focused where it matters most.

This risk-based approach also reflects the direction of regulation, such as the EU AI Act, which differentiates governance and control expectations based on system impact, autonomy, and potential harm, rather than applying uniform requirements across all AI use cases.

AI risk management at scale also requires shared understanding across functions. Business leaders, product teams, and risk stakeholders often perceive AI risk differently. Bringing these perspectives together is essential for prioritizing governance efforts based on real exposure rather than abstract concern. Without this alignment, organizations either over-govern out of caution or under-govern out of optimism.

Exercise: AI Risk Prioritization Matrix

Using the list of AI systems identified earlier, place each system into a simple prioritization view using two factors. The first is the business impact if the system fails or behaves unexpectedly. Consider potential effects on customers, revenue, compliance, or reputation. The second is the level of autonomy the system has. Note whether it only supports human decisions or whether it can trigger or execute decisions on its own.

The purpose is to distinguish systems that require closer governance from those that do not.

Place each system into this view through discussion rather than formal scoring. The value comes from debating placement and surfacing different perspectives, not from precise measurement. If disagreements arise, focus on impact rather than intent or design quality.
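Once placements have been agreed in discussion, they could be recorded in a simple two-by-two view. The following is a minimal sketch, assuming coarse "low"/"high" labels for both factors; the labels, the example systems, and the `build_matrix` helper are illustrative choices, not a scoring method from this guide.

```python
from collections import defaultdict

# Placements agreed in discussion: system -> (business impact, autonomy).
# The coarse "low"/"high" labels are an assumption; entries are illustrative.
placements = {
    "Fraud detection": ("high", "high"),
    "Candidate scoring": ("high", "low"),
    "Support ticket ranking": ("low", "low"),
    "Marketing segmentation": ("low", "high"),
}


def build_matrix(items):
    """Group systems into the four impact/autonomy quadrants."""
    matrix = defaultdict(list)
    for system, (impact, autonomy) in items.items():
        matrix[(impact, autonomy)].append(system)
    return matrix


matrix = build_matrix(placements)

# High impact combined with high autonomy: first in line for governance attention.
print(matrix[("high", "high")])
```

The structure deliberately stores the outcome of the debate rather than computing a score, which keeps it in line with the exercise's emphasis on discussion over precise measurement.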

How to measure effectiveness

The exercise is effective if the group can clearly identify a small number of AI systems that stand out as higher priority for governance attention. If most systems cluster at the same level of risk, revisit assumptions about impact or autonomy until meaningful distinctions emerge.

Next steps

Direct governance effort toward the highest-priority systems first. These systems should be the initial focus for clearer ownership, defined guardrails, enhanced monitoring, and audit readiness in the sections that follow.

Using Guardrails to Control High-Impact AI Systems

As AI use increases, governance is often assumed to mean restriction. Guardrails are sometimes treated as fixed rules that limit system behavior. In practice, guardrails are used to define boundaries so teams do not have to re-interpret expectations every time a decision is made.

Guardrails are not one-size-fits-all. They reflect how much risk is acceptable in a given context. A customer-facing system that influences financial outcomes requires tighter boundaries than an internal analytics model used for exploration. Applying the same controls to both adds effort without reducing risk.

Guardrails also focus on outcomes. They describe what a system is allowed to do, what it must not do, and where human judgment is required. This keeps governance tied to impact rather than implementation details and makes guardrails easier to apply outside technical teams. Guardrails also change over time. As usage expands, data shifts, or models are updated, existing boundaries may no longer hold. High-impact systems require periodic review; treating guardrails as fixed at launch only creates gaps later.

Clear guardrails allow teams to operate without hesitation, while unclear guardrails create delays or unmanaged risk. Ultimately, governance will support delivery only when boundaries are understood.

Exercise: Guardrails Definition Canvas

Select one high-impact AI system. Document its guardrails on a single page. State what the system is designed to do and what outcomes it influences. Define what it must not do. Focus on unacceptable outcomes, not technical failure modes.

Next, note where human intervention is required and what signals would trigger it. These may include unusual outputs, changes in behavior, complaints, or activity outside expected patterns. Do not capture technical detail. The objective is agreement, not implementation.
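If the group wants to hand the one-page canvas to a delivery team in a structured form, it could look something like the sketch below. The `GuardrailCanvas` class, its field names, and the example system are hypothetical; the sketch records what was agreed and does not implement any control.

```python
from dataclasses import dataclass, field


@dataclass
class GuardrailCanvas:
    system: str
    purpose: str
    must_not: list = field(default_factory=list)            # unacceptable outcomes
    intervention_signals: set = field(default_factory=set)  # triggers for human review

    def is_complete(self):
        """A canvas is usable only if every section has been filled in."""
        return bool(self.purpose and self.must_not and self.intervention_signals)

    def needs_human_review(self, observed_signals):
        """Return the observed signals that match an agreed intervention trigger."""
        return sorted(self.intervention_signals & set(observed_signals))


# Illustrative example; not a real system or policy.
canvas = GuardrailCanvas(
    system="Credit limit assistant",
    purpose="Suggests credit limit changes for existing customers",
    must_not=["Reduce a limit without human sign-off"],
    intervention_signals={"customer complaint", "unusual output", "use outside scope"},
)

observed = ["latency spike", "customer complaint"]
print(canvas.is_complete())
print(canvas.needs_human_review(observed))
```

Note that the purely technical signal ("latency spike") is ignored here, reflecting the exercise's focus on unacceptable outcomes and intervention points rather than technical failure modes.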

How to measure effectiveness

The exercise is effective if participants can clearly articulate unacceptable outcomes and intervention points without resorting to vague language or prolonged debate. If the group struggles to agree on what the system must never do, this indicates unresolved risk tolerance that needs further discussion.

Next steps

Use the documented guardrails as a foundation for both technical controls and policy language. These guardrails should inform how the system is built, monitored, and reviewed, and should be revisited as the system evolves or its scope expands.

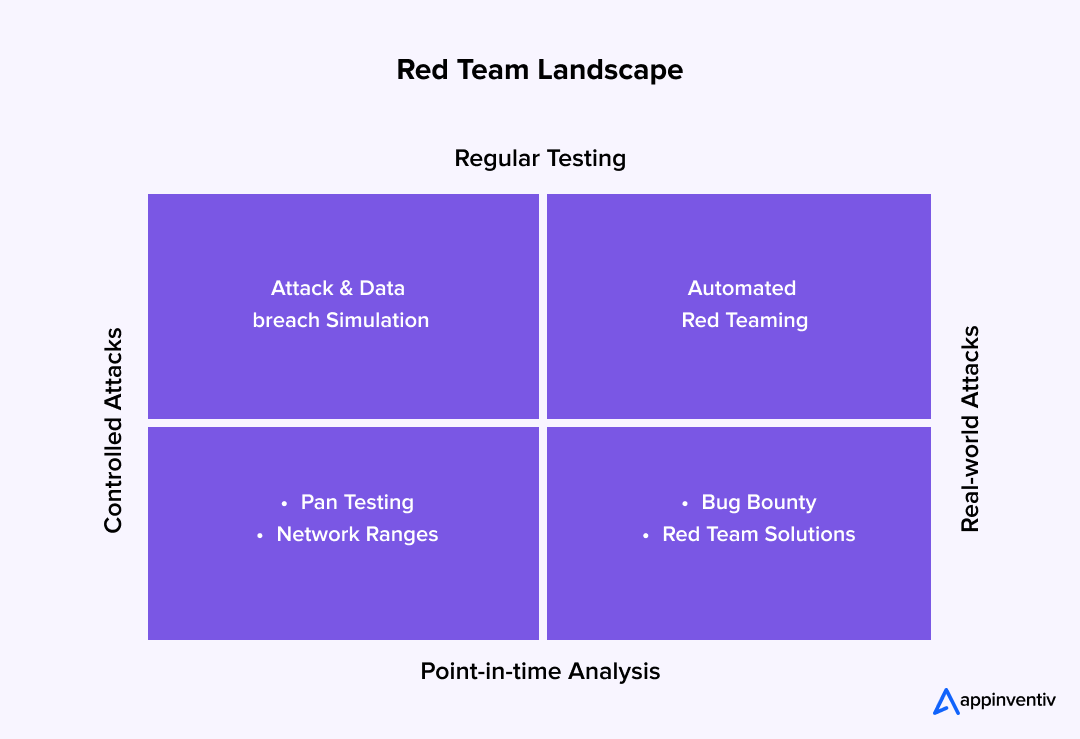

When and How to Apply Red-Teaming and Stress Testing?

Red-teaming and stress testing are used inconsistently across organizations. In some cases, they are done once before deployment. In others, they are applied across all AI systems. Neither approach reflects how risk appears in practice.

Red-teaming is used to identify failure modes that are not visible during design or standard testing. These include misuse, edge cases, unexpected interactions with data, and outcomes that appear only at scale. These issues are more relevant for AI systems that operate with autonomy or affect customers directly.

Not all AI systems require the same level of stress testing. Applying intensive red-teaming to low-impact systems increases effort without reducing material risk. Red-teaming is most effective when applied based on impact and consequence.

In enterprise environments, red-teaming focuses on operational behavior. Typical questions include how a system could be used outside its intended scope, how outputs could be over-relied upon, and which assumptions might fail under pressure. These questions usually cut across technical, business, and risk considerations.

Red-teaming is not intended to remove all risk. Its purpose is to surface blind spots early enough to plan monitoring, escalation, and response.

Exercise: AI Stress Scenario Review

Select one high-impact AI system. List a small number of realistic failure or misuse scenarios. Include incorrect outputs at scale, biased behavior, over-reliance by users, data changes, or misuse by internal or external actors. For each scenario, note how it could occur, how it would be detected, and what the initial response would be.

The aim is to identify gaps. Note where detection would be delayed, ownership unclear, or response dependent on ad hoc action.

How to measure effectiveness

The exercise is effective if the group can clearly identify scenarios that would likely go unnoticed for too long or be handled inconsistently today. If every scenario feels well covered, the scenarios may not be sufficiently realistic or challenging.

Next steps

Use the gaps identified during this review to strengthen monitoring, observability, and incident response processes. These insights should directly inform what is tracked, what triggers escalation, and who is accountable when issues arise.

Why Observability Is Essential for Ongoing AI Governance?

In software governance, visibility is usually treated as an operational matter. Logs and alerts are used to identify outages or performance issues. With AI systems, visibility serves a different function. Observability is required to maintain oversight after a system is deployed.

AI systems often change without failing outright. Outputs can drift. Confidence levels can shift. Bias can appear. Usage can move beyond what was expected at launch. These changes do not always trigger technical alerts, but they can still affect customers, employees, or regulated outcomes. Without observability, systems may continue to be used even when their behavior has changed.

Regulatory and audit expectations now reflect this reality. Approval and documentation at deployment are no longer enough on their own. Organizations are expected to show how AI systems are monitored over time, what signals are reviewed, and how deviations are identified and addressed. Observability in this context is about maintaining oversight.

AI observability starts with defining expectations. Before tools or metrics are selected, organizations need to agree on what acceptable behavior looks like for each system. These expectations vary by context. An internal forecasting model may allow more variation. A customer-facing system that influences financial or eligibility decisions may not. Governance requires these differences to be stated clearly.
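One way to make such expectations concrete is to record a tolerance for each system and compare live output distributions against a baseline. The Python sketch below is illustrative only. It assumes the population stability index (PSI) as the drift signal, and the function names, bin count, system names, and tolerance values are hypothetical choices rather than anything this guide prescribes.

```python
import math
from typing import Sequence


def psi(baseline: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population stability index between two samples of model scores."""
    lo = min(min(baseline), min(live))
    hi = max(max(baseline), max(live))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 when all values are equal

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    base_share, live_share = shares(baseline), shares(live)
    return sum(
        (lv - bs) * math.log(lv / bs) for bs, lv in zip(base_share, live_share)
    )


# Hypothetical tolerances: stricter for the customer-facing system.
DRIFT_TOLERANCE = {"internal_forecasting": 0.25, "customer_eligibility": 0.10}


def needs_review(system: str, baseline: Sequence[float], live: Sequence[float]) -> bool:
    """True when drift exceeds the tolerance agreed for this system."""
    return psi(baseline, live) > DRIFT_TOLERANCE[system]
```

The point of the sketch is the per-system tolerance table, not the specific metric: the same drift value can be acceptable for one system and a trigger for review in another.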

Observability ties accountability to the enterprise AI governance framework. When expectations are clear, it is possible to assign responsibility for monitoring, escalation, and response. When expectations are vague, data may exist, but no one acts on it. Issues then surface late, often through complaints or audits. Governance without observability has little effect. Observability without governance has no authority.

For most enterprises, sustaining AI governance over time requires more than initial frameworks and controls. Models continue to change, data evolves, usage expands, and regulatory expectations shift. This is where governance increasingly overlaps with managed services.

Ongoing AI governance activities, such as monitoring for drift, reviewing high-impact systems, maintaining audit evidence, and updating controls as systems evolve, are inherently operational. Appinventiv supports enterprises in this phase by providing managed AI governance services that help maintain consistent oversight without relying on ad hoc reviews or individual memory, particularly as AI usage scales across teams and vendors.

Exercise: Observability Expectations Definition

Select a high-impact AI system. Agree on what needs to be visible once the system is live. Focus on expectations, not tools. Note what would indicate drift from intended behavior, unexpected outputs, bias, or use beyond the original scope. The aim is to clarify what the organization needs to know to respond.

How to measure effectiveness

The exercise is effective when the group can clearly answer the question: what would we need to see to know this AI is no longer behaving as intended? If participants struggle to articulate this without referencing tools or dashboards, expectations may not yet be clear enough.

Next steps

Use the defined expectations to guide observability tooling, reporting, and escalation processes. These expectations should also feed into audit documentation and ongoing governance reviews for the system.

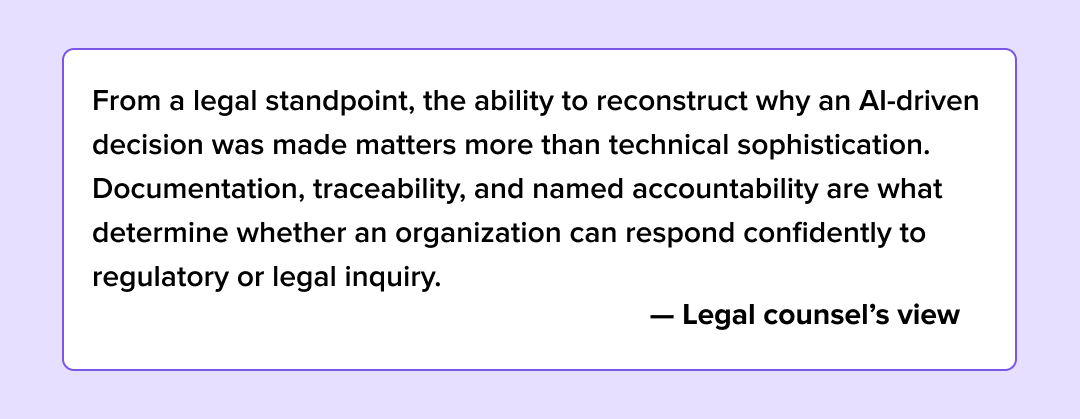

Auditability, Traceability, and Evidence

As AI systems become embedded in core business processes, questions of auditability move from theoretical to immediate. Regulators, internal audit teams, and external reviewers are less concerned with how sophisticated a model is and more concerned with whether its use can be explained, justified, and evidenced after the fact. In many organizations, this is where the AI governance framework is most exposed.

Audit readiness for AI is not about anticipating every possible outcome. It comes down to whether a past decision can be reconstructed without guesswork. That means being able to show why AI was used, what data fed into the decision, what controls existed at the time, and who approved its use. If this information can’t be pulled together reliably, explanations tend to rely on memory instead of evidence, which becomes harder to defend as scrutiny increases.

Traceability is what makes this possible. For AI systems, traceability goes beyond code or model versions. It includes when and why a system was deployed, what assumptions were made about its use, what guardrails were defined, and what signals were reviewed while it was running. Without that context, even systems built with good intent are difficult to explain during audits or investigations.

A common mistake is treating audit documentation as a one-time artifact created at launch. In practice, audit readiness is an ongoing discipline: models get updated, data sources change, and usage expands. Each of these changes alters the risk profile of the system. Governance requires that these shifts be recorded in a way that preserves a clear decision trail, rather than relying on institutional memory or informal handoffs. This also lowers the probability of AI bias in business decisions.

Auditability matters for more than external compliance. Inside the organisation, it’s what allows teams to understand what actually happened when an AI-driven decision led to an issue. If decisions and outcomes can be traced, it’s possible to see where assumptions failed and what needs to change. If they can’t, the same problems tend to repeat because there’s no shared view of the cause.

Exercise: AI Audit Walkthrough

Choose an AI-driven decision or outcome from several months ago that had a real business or customer impact. Reconstruct how the decision was made. Identify why AI was used, who approved it, what data or model version was active at the time, and whether any monitoring or review was in place. Note who would be responsible for responding if this decision were reviewed by an auditor or regulator today.

The purpose is to check whether this information exists in records and systems, or whether it depends on individual recollection.

How to measure effectiveness

The exercise is effective if gaps in documentation, traceability, or ownership become immediately apparent. If reconstructing the decision requires informal explanations or assumptions, audit readiness is limited. If the process is straightforward and evidence is readily available, governance practices are likely mature.

Next steps

Formalize documentation and audit trail practices for AI systems, ensuring that approvals, changes, and monitoring signals are consistently recorded. These practices should align with defined ownership and observability expectations established in earlier sections.

Building Responsible AI Without Moral Fatigue

Responsible AI is often framed in ethical language that feels disconnected from how decisions are actually made inside large organizations. Principles such as fairness, transparency, and accountability are widely endorsed, yet they frequently remain abstract. When responsibility is discussed only in moral terms, it tends to fatigue leadership teams and delivery teams alike, either because it feels theoretical or because it appears to conflict with business pressure and delivery timelines.

In practice, responsible AI is not an ethical posture but an operational discipline. It shows up in how decisions are constrained, how trade-offs are acknowledged, and how accountability is enforced when systems operate at scale. Organizations that succeed in this area do not attempt to encode values directly into models. Instead, they embed values into governance mechanisms that shape how AI is designed, deployed, and overseen.

This requires moving responsibility out of policy statements and into decision-making. Values matter most at points of tension: where automation could replace judgment, where efficiency could override fairness, or where model accuracy could come at the cost of explainability. These are not edge cases; they are recurring moments in AI governance implementation. Treating them explicitly, rather than implicitly, is what helps prevent responsible AI from becoming performative.

Another source of fatigue is the assumption that responsible AI requires universal constraints applied across all systems. This approach often leads to resistance or superficial compliance. In reality, responsibility should be applied proportionally. The expectations placed on a system that influences customer eligibility or pricing should differ from those applied to an internal analytics model used for exploration. Responsible AI checklists, however many are in place, only become sustainable when they are tied to impact, not ideology.

Ultimately, responsibility is reinforced through governance, not intent. When values are translated into concrete limits, oversight requirements, and escalation paths, teams are able to make decisions with clarity rather than hesitation. This approach reduces ambiguity and supports trust, both internally and externally – without turning responsible AI into an exercise in moral signaling.

Exercise: Values-to-AI Constraints Mapping

Start with a small number of organisational values that people actually refer to when decisions get uncomfortable. Not the values that live on posters or internal sites, but the ones that genuinely shape what gets approved and what doesn’t. From there, talk through what those values mean once AI is part of the picture.

This usually forces some uncomfortable but necessary calls. There will be situations where AI simply shouldn’t be used. Others where automation is fine, but only if a human stays in the loop. And there will be trade-offs that need to be acknowledged openly, rather than glossed over because the system “mostly works.”

When teams get into specifics, the conversation changes. A commitment to fairness might mean certain automated decisions always need a second look, or that some data sources are off the table. A commitment to transparency might rule out models that are too hard to explain when the impact is high. The aim isn’t to restate values, but to turn them into limits that people can actually apply when building or approving AI systems.

How to measure effectiveness

The exercise is effective if values result in clear, actionable constraints that can be applied to real AI systems. If discussions remain abstract or symbolic, responsibility has not yet been operationalized.

Next steps

Reflect these constraints in AI policies, guardrails, and governance guidelines. They should inform use-case approval, system design, and oversight expectations in a way that is consistent and defensible.

What Enterprise AI Policies Must Address by 2026?

As AI adoption matures, policies move from being optional guidance to enforceable infrastructure. In many enterprises, however, AI-related expectations are scattered across existing documents – acceptable use policies, data governance standards, vendor management guidelines, and security controls – none of which were written with modern AI risk management for enterprises in mind. This fragmentation creates uncertainty at exactly the moment when clarity is most needed.

AI policies for the next phase of adoption must do more than declare intent. They need to define ownership, establish enforceable boundaries, and connect directly to how decisions are actually made. In a lot of organisations, policies don’t fail because they’re wrong. They fail because no one is quite sure who is supposed to use them. When policies sit outside day-to-day delivery and oversight, they don’t get picked up. They just sit there. At that point, they don’t reduce risk, and they don’t help teams move faster either.

Effective AI policies are specific about scope.

- They make it clear where AI can be used and where it shouldn’t be used at all.

- They call out third-party and vendor-provided AI directly, because risk doesn’t disappear just because a system is bought instead of built.

- They also set expectations around data usage, model updates, and human oversight in ways that reflect how teams actually work, not how workflows look in theory.

Ownership and enforcement are what separate policies people use from policies people work around. An AI policy that doesn’t clearly state who interprets the rules, who enforces them, and who revises them over time is easy to ignore. As regulatory scrutiny increases toward 2026, organisations won’t get much credit for simply having AI policies. They’ll be expected to show how those policies are applied, checked, and updated as systems change.

This shift aligns with emerging standards such as ISO/IEC 42001, which emphasize the need for organizations to establish repeatable management systems for governing AI, rather than relying on static policies or one-time controls.

Rather than trying to predict every future use case, strong AI policies focus on a small set of decision principles that hold up over time. When this is done well, the enterprise AI governance framework reduces guesswork for teams, supports audit readiness, and gives leadership confidence that AI adoption is staying within agreed boundaries.

Exercise: AI Policy Coverage Review

Review existing organizational policies and identify where AI is already addressed, either explicitly or implicitly. Focus on areas such as acceptable use, third-party and vendor AI, data usage and training, accountability for automated decisions, and escalation or incident response. The objective is not to rewrite policies during the session, but to understand where coverage exists, where it overlaps, and where gaps or outdated assumptions remain.

Pay particular attention to policies that were written before widespread AI adoption and may no longer reflect current reality. In many cases, teams discover that AI is governed indirectly, or not at all, through documents that were never intended for this purpose.

How to measure effectiveness

The exercise is effective when the group can clearly identify areas of strong coverage, areas of ambiguity, and areas where no policy guidance exists. If participants struggle to determine which policies apply to AI use today, that uncertainty itself is a meaningful signal.

Next steps

Use the AI policy templates in the toolkit to update existing policies or create AI-specific ones where needed. Assign clear ownership for each policy and define review cycles to ensure they remain relevant as AI usage expands.

Conclusion

An effective AI governance framework isn’t about putting brakes on teams or creating another layer of control. Most enterprises don’t struggle with ambition around AI. What they struggle with is confidence – confidence that AI can be scaled without creating risks they don’t fully understand, and without being caught off guard when questions eventually come up. Governance exists to create that confidence by making ownership clearer, risk more visible, and decisions easier to explain when it actually matters.

As AI becomes more embedded in products, operations, and everyday decision-making, governance stops living in policy documents. It becomes part of how work gets done. In organisations where governance is treated as an afterthought, issues usually surface through incidents, audits, or regulatory reviews. Teams end up responding under pressure. Where governance is treated as decision infrastructure, those moments are far less disruptive. Fewer things come as a surprise, and trust – internally and externally – is easier to maintain.

This shift isn’t mainly technical. It’s organisational. It requires leadership to stay involved beyond the initial rollout and to apply proportional risk mitigation rather than blanket controls. Practical guardrails matter, but so does ongoing visibility into how AI systems behave once they’re live.

There’s also a quieter discipline that tends to get overlooked. Good governance means writing down why decisions were made, revisiting assumptions when systems or data change, and adjusting controls when reality doesn’t match expectations. That discipline is what keeps an AI governance framework useful over time, instead of letting it turn into something that looks reassuring on paper but no longer reflects how AI is actually being used.

This enterprise AI governance guide has focused on making that shift tangible. The intent is not to provide exhaustive rules, but to offer a structured way to think, decide, and act. Organizations that approach AI governance in this way are better positioned not only to meet rising expectations toward 2026 but also to use AI as a durable advantage rather than a growing source of risk.

Toolkit for AI Governance Template for Enterprises

This part of the guide contains practical templates designed for direct internal use. Each template can be shared, adapted, and reused independently across teams. The intent is not to prescribe a single governance model, but to provide structured artifacts that help organizations clarify ownership, plan risk mitigation, and maintain accountability as AI systems scale.

These templates are deliberately tool-agnostic and policy-light. They are meant to support governance committees, compliance teams, and delivery leaders in operationalizing AI governance without introducing unnecessary complexity. Organizations may choose to adopt individual templates based on their immediate needs rather than implementing the entire toolkit at once.

Template 1: AI Governance Responsibility Matrix

What it’s for

Clarifies who owns decisions, risk, and accountability across the AI lifecycle, from initial use-case proposal to decommissioning. The matrix is designed to remove ambiguity, reduce approval friction, and ensure that responsibility is explicit at every stage where AI introduces material risk.

How to Use This Template

- Use roles or functions, not individual names

- Each lifecycle stage must have one clear decision owner

- Risk ownership should be explicit and should not default to “IT” or “the AI team.”

- Escalation authority should be defined in advance, not during incidents

- Review and update this matrix when:

  - New high-impact AI systems are introduced

  - Governance structures change

  - Regulatory or risk expectations materially shift

AI Governance Responsibility Matrix

Download PDF: AI Governance Matrix

Validation Checklist

Before finalizing this matrix, confirm that:

- Every lifecycle stage has a clearly named decision owner

- Risk ownership is not shared by default

- Escalation paths are explicit and realistic

- The matrix reflects how decisions should be made, not how they happened informally in the past
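Once the matrix is stored as data, parts of this checklist can be checked mechanically. The Python sketch below is one possible approach. The row fields (`stage`, `decision_owner`, `risk_owner`, `escalation_authority`), the placeholder list, and the "more than one owner" heuristic are all assumptions made for illustration. The last checklist item still needs human judgment.

```python
from dataclasses import dataclass

# Values that signal a default rather than a deliberate assignment (assumed list).
PLACEHOLDER_OWNERS = {"", "it", "the ai team", "tbd"}


@dataclass(frozen=True)
class MatrixRow:
    stage: str
    decision_owner: str
    risk_owner: str
    escalation_authority: str


def validate_matrix(rows: list[MatrixRow]) -> list[str]:
    """Return human-readable problems; an empty list means the checks pass."""
    problems = []
    for row in rows:
        for field_name in ("decision_owner", "risk_owner", "escalation_authority"):
            value = getattr(row, field_name).strip().lower()
            if value in PLACEHOLDER_OWNERS:
                problems.append(f"{row.stage}: {field_name} is not explicitly assigned")
        # Crude heuristic: a comma suggests several owners were listed.
        if "," in row.decision_owner:
            problems.append(f"{row.stage}: more than one decision owner listed")
    return problems
```

For example, a row whose risk owner is simply "IT" and whose escalation authority is blank would produce two findings for that lifecycle stage.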

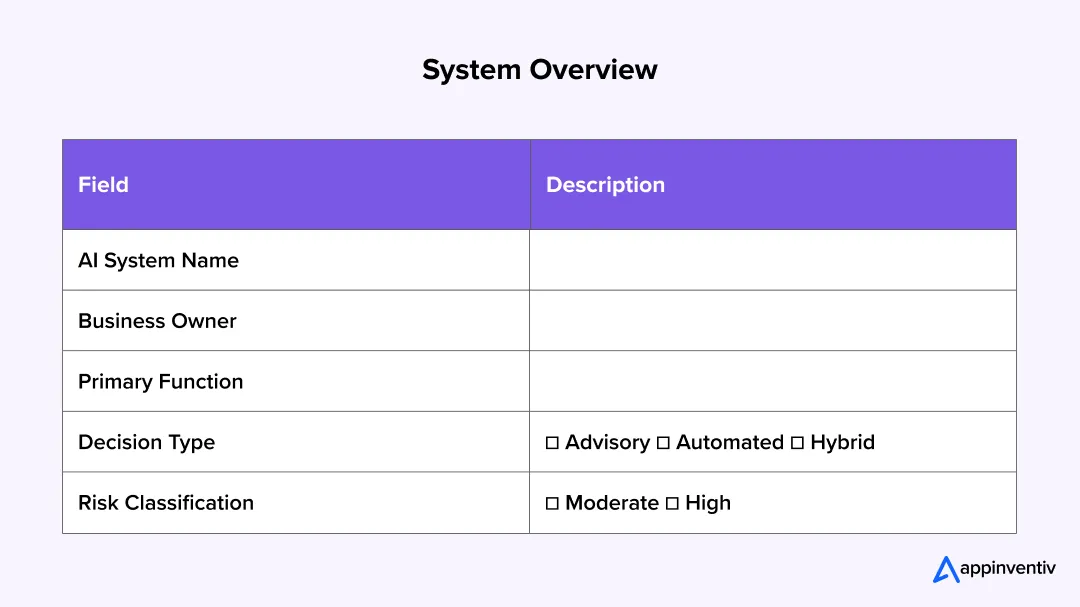

Template 2: AI Use-Case Intake & Review Template

What it’s for

Provides a consistent, structured way to evaluate AI use cases before development or deployment. The template helps organizations identify risk, impact, and governance requirements early, before AI systems become difficult to unwind or explain.

This template is not meant to slow innovation. Its purpose is to surface material considerations upfront so that everyone in the AI governance committee structure knows what level of oversight, controls, and approval is required.

When to Use This Template

Use this template when:

- A new AI use case is proposed

- An existing system is expanded to new users, data, or decisions

- A non-AI system introduces automated or predictive decision-making

- A third-party or vendor tool includes embedded AI capabilities

AI Use-Case Intake & Review

1. Use-Case Overview

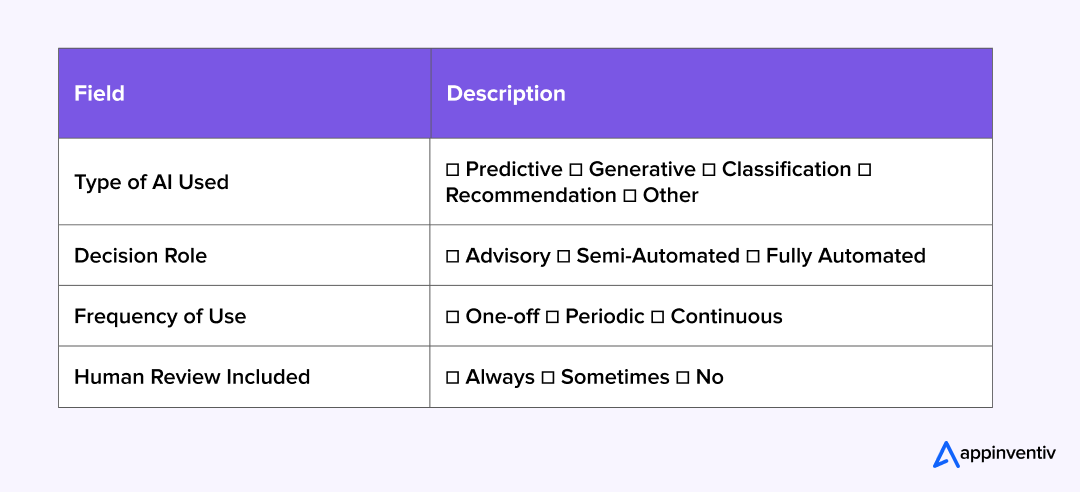

2. AI Characteristics

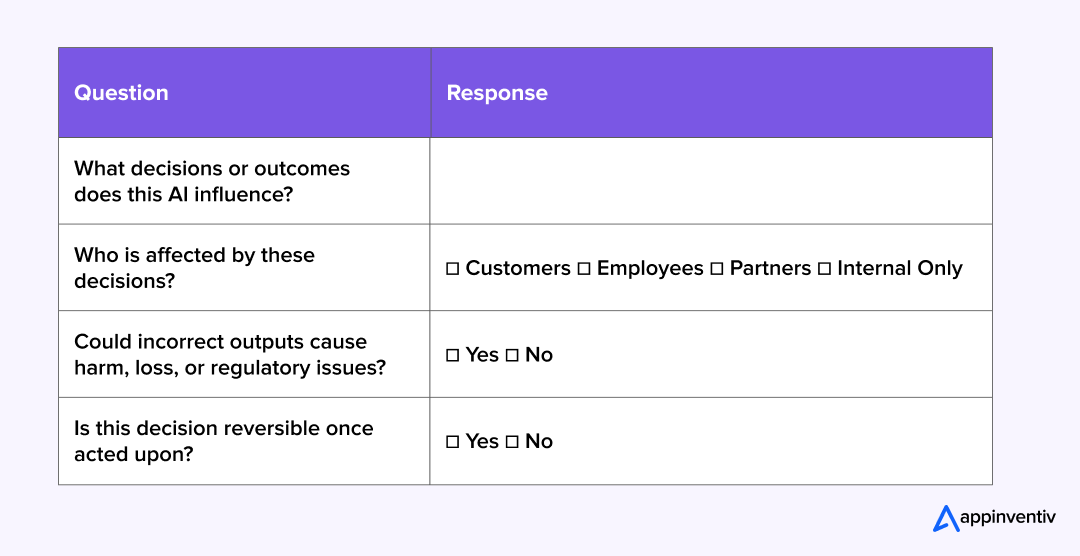

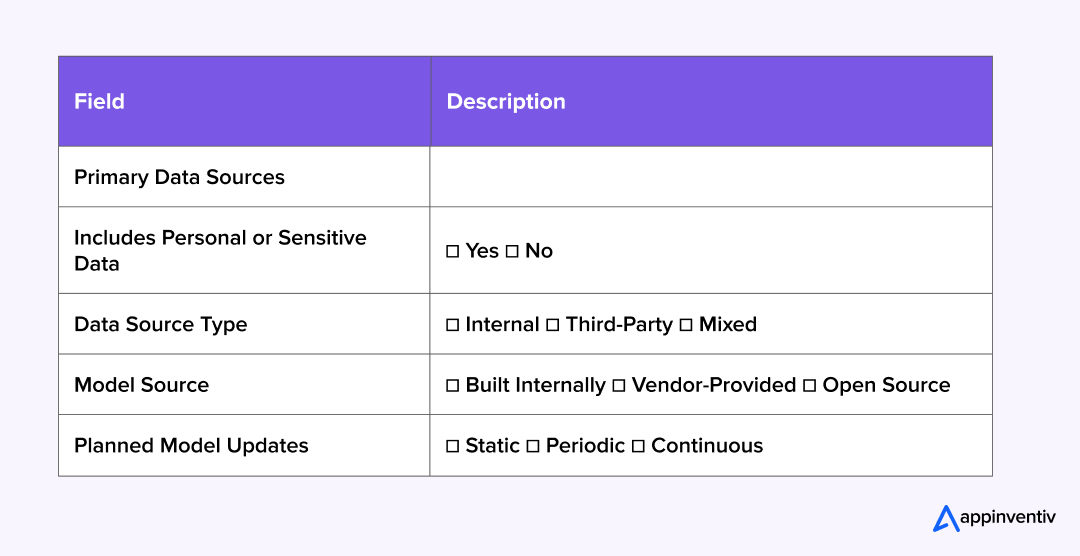

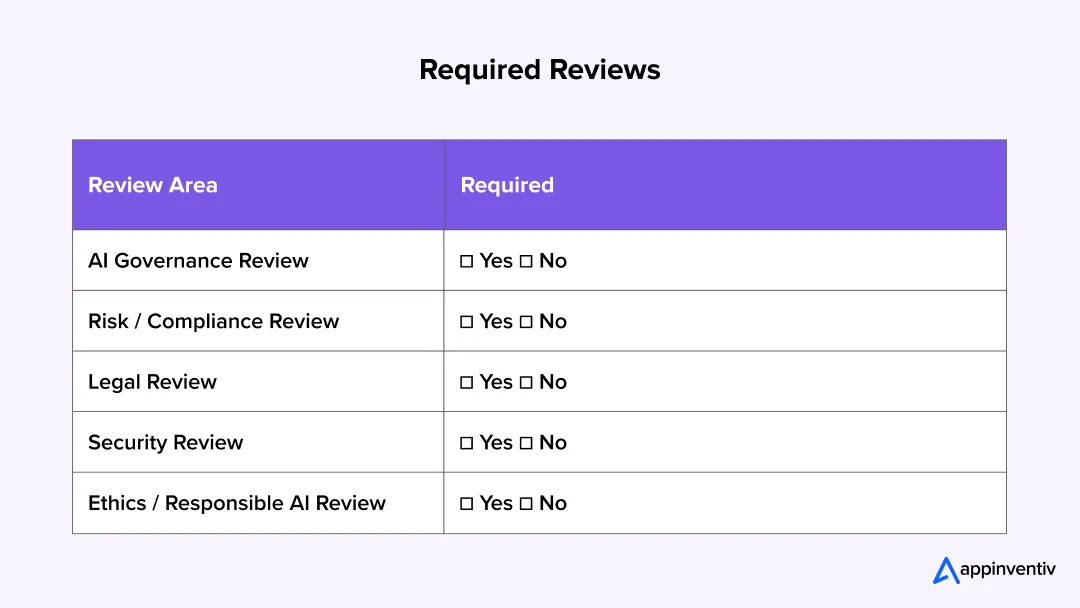

3. Decision Impact Assessment

4. Data & Model Considerations

5. Initial Risk Indicators

Check all that apply:

☐ Customer-facing or externally visible

☐ Influences financial, eligibility, or compliance decisions

☐ Operates with limited or no human oversight

☐ Uses sensitive or regulated data

☐ Embedded in a third-party or vendor system

6. Preliminary Risk Classification

Based on initial assessment:

☐ Low Impact

☐ Moderate Impact

☐ High Impact

(Final classification subject to governance review)
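The indicators in step 5 can feed a suggested starting classification. The template does not define how indicators map to impact levels, so the rule in this Python sketch is purely an assumption: two indicators are treated as sufficient for a High rating, any other checked indicator yields Moderate, and none yields Low. Each organization would need to set its own rule.

```python
from enum import Enum


class Indicator(Enum):
    CUSTOMER_FACING = "customer-facing or externally visible"
    REGULATED_DECISIONS = "influences financial, eligibility, or compliance decisions"
    LIMITED_OVERSIGHT = "operates with limited or no human oversight"
    SENSITIVE_DATA = "uses sensitive or regulated data"
    THIRD_PARTY = "embedded in a third-party or vendor system"


# Assumed rule: either of these indicators alone is enough for a High rating.
HIGH_TRIGGERS = {Indicator.REGULATED_DECISIONS, Indicator.LIMITED_OVERSIGHT}


def preliminary_classification(checked: set[Indicator]) -> str:
    """Suggest a starting classification; governance review makes the final call."""
    if checked & HIGH_TRIGGERS:
        return "High Impact"
    if checked:
        return "Moderate Impact"
    return "Low Impact"
```

Encoding the rule this way keeps the preliminary rating consistent across submissions, while the final classification remains a governance decision.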

7. Required Reviews

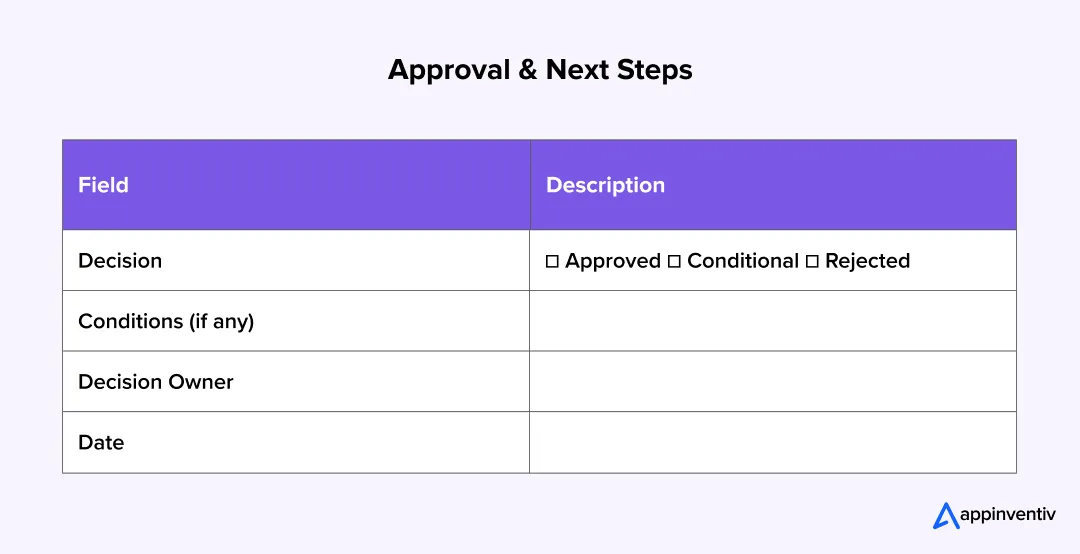

8. Approval & Next Steps

Governance Notes

- Approval does not imply risk elimination; it confirms risk acceptance under defined controls

- High-impact use cases should link directly to:

  - Guardrails definition

  - Observability requirements

  - Audit documentation

- Updates to scope, data, or autonomy level require re-submission

Validation Check

Before closing this intake, confirm:

- The business objective is clearly stated

- Decision impact is understood

- Risk classification is justified

- Ownership and next steps are explicit

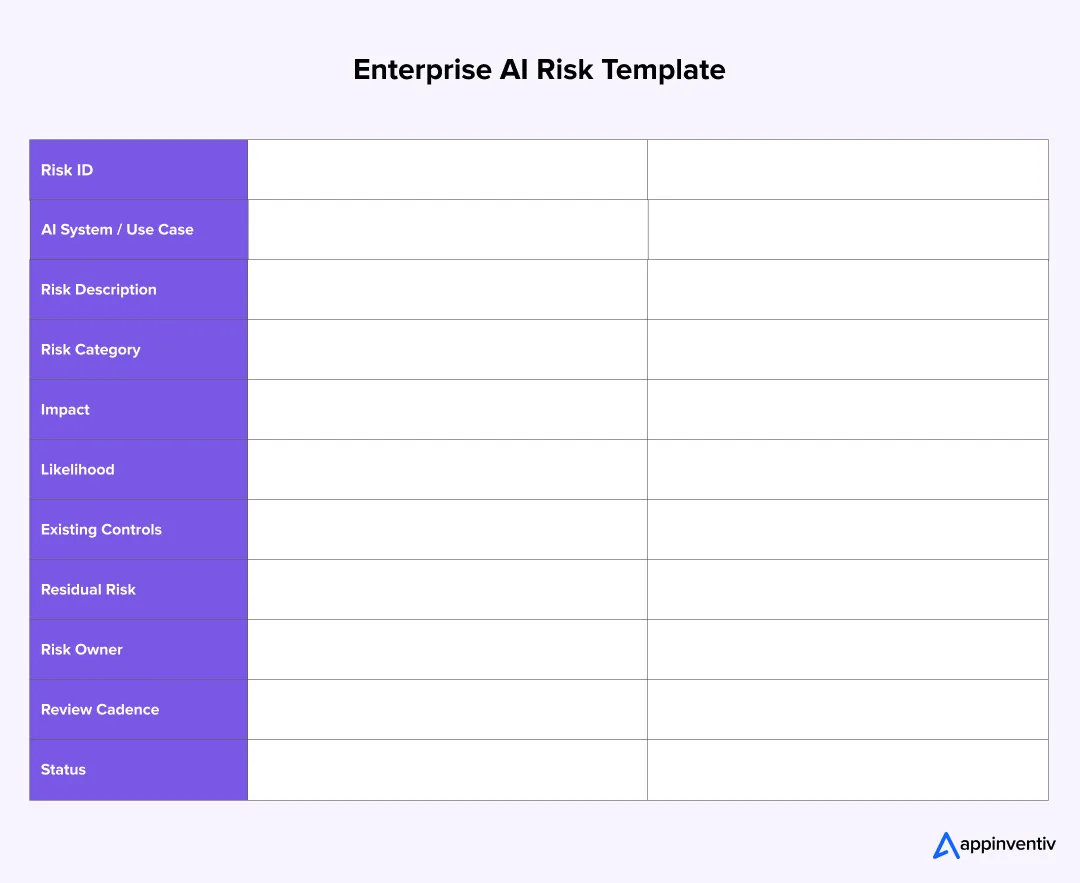

Template 3: Enterprise AI Risk Register

What it’s for

Captures, tracks, and manages AI-specific risks in a structured, auditable format alongside traditional enterprise risks. This register is intended to be a living document that evolves as AI systems, data, and usage change.

Practical note:

Appinventiv’s Risk Discovery Workbook will help enterprises populate an initial AI Risk Register within 48 hours by identifying high-impact systems, mapping risk exposure, and aligning stakeholders on prioritization.

How to Use This Template

- Maintain as a living register, not a one-time artifact

- Link risks to specific AI systems, not abstract categories

- Review periodically and whenever:

  - A model is updated or retrained

  - New data sources are introduced

  - The scope or autonomy of an AI system expands

Enterprise AI Risk Register

Download PDF: Enterprise AI Risk Template

Risk Category Reference

(Select one or more)

- Strategic / Business

- Legal / Regulatory

- Operational

- Ethical / Fairness

- Security

- Data Privacy

- Reputational

Impact Guidance

- High – Customer harm, regulatory breach, financial loss, or reputational damage

- Medium – Operational disruption, limited external exposure

- Low – Internal inefficiency or inconvenience

Governance Notes

- High-impact risks require explicit risk acceptance by the designated owner

- Residual risk must be reviewed, not assumed

- Closed risks should include evidence of mitigation or design change
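For teams that keep the register in a structured form, the governance notes above can be expressed as simple checks on each entry. This Python sketch is hypothetical: the field names and status values are assumptions, and the downloadable template may organize its columns differently.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class RiskEntry:
    risk_id: str
    ai_system: str                      # a specific system, not an abstract category
    description: str
    categories: list[str]               # e.g. ["Legal / Regulatory", "Security"]
    impact: str                         # "High", "Medium", or "Low"
    owner: str
    status: str = "Open"                # "Open" or "Closed"
    accepted_by: Optional[str] = None
    mitigation_evidence: Optional[str] = None
    last_reviewed: Optional[date] = None


def governance_issues(entry: RiskEntry) -> list[str]:
    """Flag entries that do not yet satisfy the governance notes."""
    issues = []
    if entry.impact == "High" and not entry.accepted_by:
        issues.append("high-impact risk lacks explicit acceptance by the owner")
    if entry.status == "Closed" and not entry.mitigation_evidence:
        issues.append("closed risk has no evidence of mitigation or design change")
    if entry.last_reviewed is None:
        issues.append("residual risk has never been reviewed")
    return issues
```

Running such checks before each review meeting gives the group a short list of entries that need a decision, rather than a full read-through of the register.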

Template 4: Guardrails Definition Template

What it’s for

Documents acceptable and unacceptable AI behavior for high-impact systems, translating governance intent into enforceable boundaries.

When to Use This Template

- For customer-facing AI systems

- For systems that automate or materially influence decisions

- For AI systems operating in regulated or high-risk contexts

AI Guardrails Definition

System Overview

Intended Behavior

Describe what the system is designed to do and the outcomes it is expected to support.

Prohibited Outcomes

Clearly state outcomes that must never occur, regardless of intent or performance.

Required Human Oversight

Specify where human intervention is mandatory, such as approvals, overrides, or exception handling.

Escalation Triggers

Define signals that require review or intervention, such as:

- Unusual outputs

- Performance drift

- Complaints or disputes

- Data quality issues

Enforcement Notes

- Guardrails must be enforceable through process, tooling, or controls

- Guardrails should be reviewed when the system’s scope or data changes
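Escalation triggers are easier to enforce when they are written down as thresholds with a named escalation role. The Python sketch below shows one possible shape. The signal names, threshold values, and roles are invented for illustration and would be set by each system's owners.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trigger:
    signal: str        # name of the monitored signal
    threshold: float   # value at or above which review is required
    escalate_to: str   # role with authority to intervene


# Hypothetical guardrail configuration for one system.
TRIGGERS = [
    Trigger("unusual_output_rate", 0.02, "Model Owner"),
    Trigger("performance_drift", 0.10, "Model Owner"),
    Trigger("open_complaints", 5, "Risk and Compliance"),
    Trigger("missing_input_rate", 0.05, "Data Owner"),
]


def fired_triggers(signals: dict[str, float]) -> list[Trigger]:
    """Return triggers whose signal meets or exceeds its threshold.

    Signals absent from the input are skipped rather than treated as zero,
    so a missing data feed should be caught by a separate check.
    """
    return [
        t for t in TRIGGERS
        if t.signal in signals and signals[t.signal] >= t.threshold
    ]
```

Keeping the escalation role next to each threshold means the question of who acts is answered before an incident, not during it.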

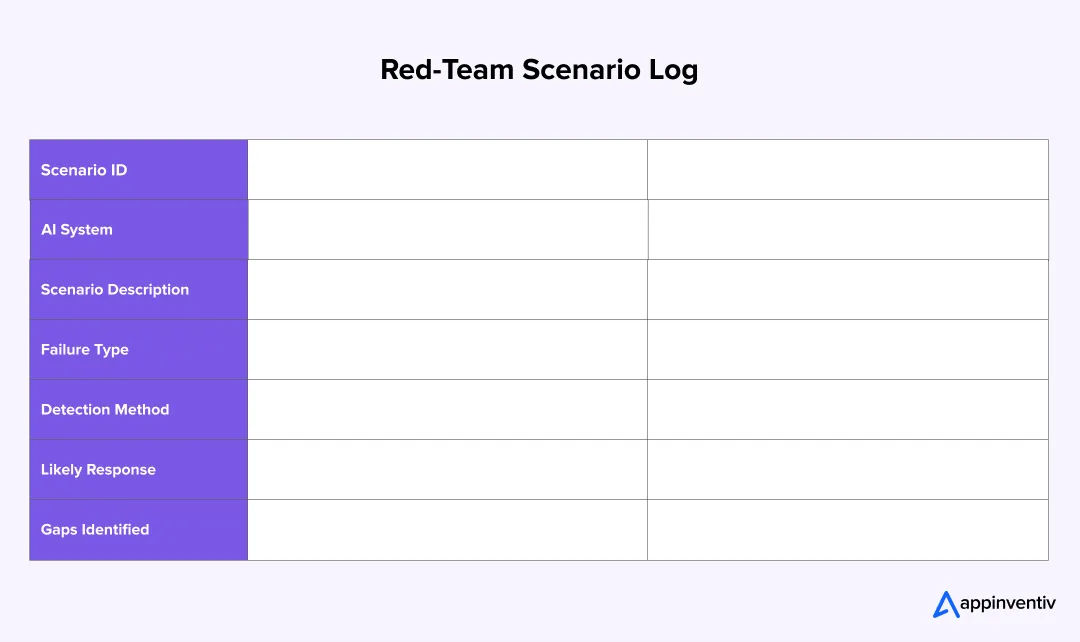

Template 5: Red-Team Scenario Library

What it’s for

Provides a structured set of realistic scenarios to stress-test high-risk AI systems and uncover detection, response, or governance gaps.

How to Use This Template

- Apply selectively to high-impact systems only

- Focus on plausibility, not edge-case extremity

- Capture gaps rather than solving everything in-session

Red-Team Scenario Log

Scenario Categories

- Incorrect outputs at scale

- Bias amplification

- Over-reliance by users

- Data drift or contamination

- Misuse (internal or external)

- Vendor or integration failure

Governance Notes

- Undetectable scenarios indicate observability gaps

- Poor response clarity indicates ownership or escalation issues

Template 6: AI Observability Checklist

What it’s for

Defines what must be monitored to maintain ongoing oversight and compliance for AI systems.

How to Use This AI Risk Management Checklist for Executives Template

- Define expectations before selecting tools

- Apply proportionally based on system impact

- Review regularly for high-impact systems

AI Observability Checklist

Performance & Behavior

☐ Output accuracy or quality

☐ Drift from expected behavior

☐ Confidence degradation

☐ Anomalous patterns

Fairness & Bias

☐ Disparate outcomes across groups

☐ Input sensitivity issues

☐ Feedback loop risks

Usage & Misuse

☐ Unexpected usage patterns

☐ Automation beyond the intended scope

☐ Dependency escalation

Data Integrity

☐ Input data drift

☐ Missing or degraded data

☐ Source changes

Governance Signals

☐ Threshold breaches

☐ Escalation triggers activated

☐ Manual overrides

Monitoring Ownership

- Responsible function: ___________________

- Review frequency: ___________________

- Escalation authority: ___________________

Template 7: Model Audit Trail Example

What it’s for

Demonstrates what defensible, regulator-ready AI documentation looks like in practice.

Model Audit Trail (Example Structure)

System Overview

- AI System Name

- Business Purpose

- Deployment Context

- Customer / User Impact

Approval History

- Use-case approval date and authority

- Risk classification decision

- Legal / compliance sign-off

Model Details

- Model type

- Training data summary

- Key assumptions

- Known limitations

Change Log

Monitoring & Performance

- Key metrics tracked

- Drift events

- Alerts and responses

Incident History

- Incident description

- Impact

- Resolution

- Corrective actions

Governance Notes

- Documentation should be time-bound and versioned

- Audit trails should be retrievable without relying on personal knowledge
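A time-bound, versioned trail makes it possible to answer the core audit question, which is what was live on a given date and who approved it. The Python sketch below is a hypothetical illustration. The record fields and class names are assumptions, and a production audit trail would normally sit in a database or document system with access controls.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ChangeRecord:
    effective: date
    model_version: str
    change_summary: str
    approved_by: str


class AuditTrail:
    """Append-only change log that can answer 'what was live on this date?'."""

    def __init__(self) -> None:
        self._records: list[ChangeRecord] = []

    def append(self, record: ChangeRecord) -> None:
        # Refuse out-of-order entries so history cannot be silently rewritten.
        if self._records and record.effective < self._records[-1].effective:
            raise ValueError("records must be appended in chronological order")
        self._records.append(record)

    def active_on(self, day: date) -> Optional[ChangeRecord]:
        """Return the most recent record effective on or before the given day."""
        dates = [r.effective for r in self._records]
        idx = bisect_right(dates, day)
        return self._records[idx - 1] if idx else None
```

With records like these, reconstructing a decision from several months ago becomes a lookup rather than a conversation with whoever happens to remember.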

Template 8: AI Policy Starter Pack

What it’s for

Outlines the structure of core AI policies enterprises are expected to have as regulatory scrutiny and accountability requirements increase toward 2026.

Core AI Policy Set

1. AI Acceptable Use Policy

Defines permitted and prohibited uses of AI across the organization.

2. Generative AI Usage Policy

Specifies how generative models may be used, including content creation, data handling, and disclosure expectations.

3. Third-Party & Vendor AI Policy

Covers procurement, risk assessment, and accountability for externally provided AI systems.

4. Data Usage & Training Policy

Defines what data can be used for training, fine-tuning, or inference, and under what conditions.

5. Human Oversight & Accountability Policy

Clarifies when human intervention is required and who remains accountable for AI-driven decisions.

Policy Design Principles

- Clear ownership and enforcement authority

- Alignment with enterprise risk appetite

- Regular review and update cycles

- Integration with existing governance frameworks

Governance Note

Policies should guide decisions, not merely exist for compliance. If your AI governance committee cannot apply a policy to real AI use cases, the policy requires revision.

FAQs.

Q. How can AI governance frameworks reduce business risks?

A. AI governance reduces risk mostly by removing ambiguity. In many organisations, AI systems are approved informally, evolve quietly, and only attract attention when something breaks. Governance changes that by making ownership, decision rights, and escalation explicit from the start.

When it’s clear who approved a model, what it’s allowed to influence, and how it’s monitored, risk becomes easier to manage in practice. This is especially important for AI model risk management, where the issue is rarely just model accuracy. It’s whether the organisation can show that models were reviewed, updated, and challenged as their data or usage changed.

Governance also lowers regulatory and reputational risk by creating a paper trail that reflects real decisions, not reconstructed explanations. When questions come from regulators, customers, or auditors, organisations with governance in place can explain not just what an AI system did, but why it was allowed to do so and how it was overseen.

Q. What are the key elements of an AI risk management strategy?

A. A workable AI risk management strategy focuses less on exhaustive rules and more on proportion. Not every AI system needs the same level of control, but high-impact systems need far more attention than they often get today.

At a minimum, this means clear ownership, a way to classify risk based on impact and autonomy, defined guardrails for sensitive use cases, and visibility into how systems behave once they are live. Risk doesn’t end at deployment, and strategies that stop there tend to fail quietly.

Newer use cases make this even harder. Generative AI security and compliance challenges, like unreliable outputs, data leakage, or over-reliance by users, don’t fit neatly into traditional risk models. The organisations that manage this well don’t pretend the risks are fully understood. They integrate AI risk into existing enterprise risk processes, so it’s reviewed alongside financial, operational, and regulatory risks instead of sitting in its own silo.

Q. How does Appinventiv help enterprises implement AI governance?

A. Appinventiv helps enterprises move from abstract AI principles to operational governance. The focus is on designing governance frameworks that align with how large organizations actually work – across business units, platforms, and vendors.

This includes defining governance structures, implementing AI risk and model risk management, designing guardrails and observability requirements, and supporting policy development aligned with standards such as ISO/IEC 42001 for AI management systems. The emphasis is on practical implementation, not theoretical models, so governance enables scale rather than slowing delivery.

Q. What are the benefits of AI governance in financial services?

A. In financial services, AI usually shows up in places where mistakes are expensive. Credit decisions, fraud flags, customer risk scoring, and collections prioritisation – once these systems are live, their outputs tend to get trusted very quickly. That’s where governance starts to matter.

AI governance helps institutions keep control over models that would otherwise fade into the background. It forces basic but important clarity: who approved this model, what it’s allowed to influence, and what happens if it starts behaving differently six months down the line. From a model risk management perspective, this matters more than raw accuracy, because regulators care about how decisions are made, not just whether the numbers look good.

Another very practical benefit is around vendors. Most financial institutions today use platforms where AI is embedded and largely opaque. Governance gives teams a consistent way to assess and question those tools instead of treating them as black boxes. That makes AI vendor risk management less reactive and far easier to defend when regulators start asking uncomfortable questions.

Q. How can businesses ensure AI compliance while managing risks?

A. The biggest mistake organisations make with AI compliance is treating it like a legal sign-off at the end of a project. That approach works poorly with AI, because risk doesn’t stop once a system goes live.

In practice, compliance improves when governance is built into everyday decisions. Teams know which use cases need extra scrutiny, who can approve higher-risk deployments, and what evidence needs to exist if something is challenged later. This shifts the focus from “are we compliant?” to “can we explain what we did and why?”

This becomes especially important with newer use cases. Generative AI compliance challenges don’t always fit into existing rules, particularly around content accuracy, data leakage, or over-reliance by users. Governance helps here by giving organisations a way to adapt controls over time, instead of pretending the risks are already fully understood. Traceability and accountability end up doing more work than rigid rules ever could.

Q. How does AI governance contribute to business continuity planning?

A. Most organisations don’t realise how dependent they are on AI until something breaks. Models that started as decision support slowly become operationally critical, and by then, there’s often no clear plan for what happens if they fail.

AI governance reduces that risk by making dependencies visible. When systems are governed properly, it’s clear which models matter most, who owns them, and how they’re monitored. That makes it much easier to think through failure scenarios before they happen, rather than during an incident.

Governance also pushes teams to define human intervention points and fallback options early. If a model drifts, a vendor system goes down, or outputs stop making sense, there’s already agreement on how decisions are handled. Over time, this makes AI part of business continuity planning in a very practical way – not as a technical add-on, but as something the organisation knows how to live without, if it has to.
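One way to picture agreed intervention points and fallbacks is a small routing function that decides, for each case, whether the model, a fallback rule, or a person handles it. The sketch below is an assumption-laden illustration: `route_decision`, the 0.5 threshold, and the outcome labels are hypothetical, and real drift detection would come from the organisation's own monitoring.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str
    source: str  # "model", "fallback_rule", or "human_review"


def route_decision(case, model, fallback_rule, drift_detected=False):
    """Return a Decision, falling back when the model is unavailable or untrusted."""
    # Agreed intervention point: if monitoring has flagged drift, a person decides.
    if drift_detected:
        return Decision(outcome="needs_review", source="human_review")

    # If the model or vendor system fails, use the pre-agreed fallback rule.
    try:
        score = model(case)
    except Exception:
        return Decision(outcome=fallback_rule(case), source="fallback_rule")

    # Outputs that stop making sense (here: outside 0..1) also go to a person.
    if not 0.0 <= score <= 1.0:
        return Decision(outcome="needs_review", source="human_review")

    # Illustrative threshold only; a real cut-off is a business decision.
    outcome = "approve" if score >= 0.5 else "decline"
    return Decision(outcome=outcome, source="model")


def vendor_outage(case):
    raise RuntimeError("vendor system unavailable")


normal = route_decision({"id": 1}, lambda c: 0.9, lambda c: "manual_queue")
outage = route_decision({"id": 2}, vendor_outage, lambda c: "manual_queue")
```

The useful part is less the code than the fact that every branch has an owner and an outcome written down before an incident, and that each decision records its `source` for later audit.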
