- Why Traditional MLOps Fails at Enterprise Scale

- Inside an Enterprise-Grade LLMOps Framework

- The Data and Governance Layer: Building a Trustworthy Foundation

- The Model Optimization Layer: Continuous Learning, Controlled Costs

- The Deployment and Feedback Layer: Operationalizing Intelligence

- From Technical Architecture to Business Capability

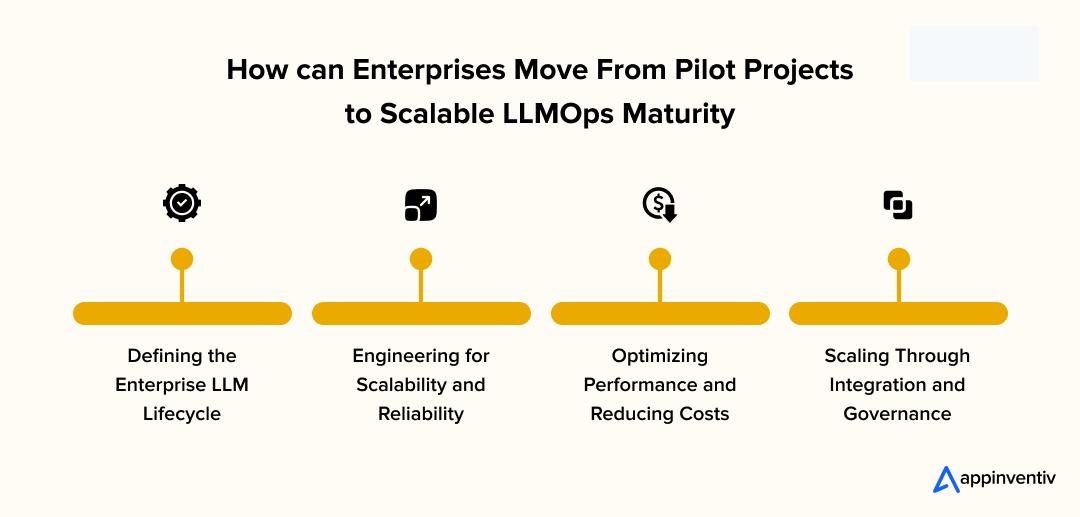

- How Can Enterprises Move From Pilot Projects to Scalable LLMOps Maturity?

- 1. Defining the Enterprise LLM Lifecycle

- 2. Engineering for Scalability and Reliability

- 3. Optimizing Performance and Reducing Costs

- 4. Scaling Through Integration and Governance

- The Maturity Outcome

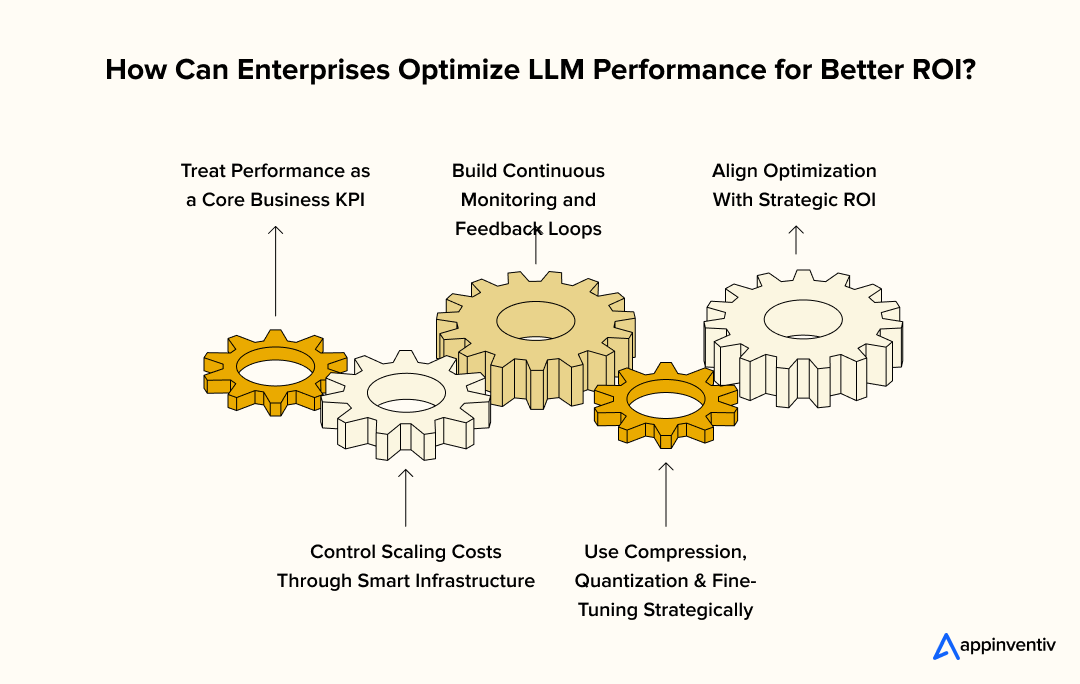

- How Can Enterprises Optimize LLM Performance for Better ROI?

- 1. Treat Performance as a Core Business KPI

- 2. Control Scaling Costs Through Smart Infrastructure

- 3. Use Compression, Quantization & Fine-Tuning Strategically

- 4. Build Continuous Monitoring and Feedback Loops

- 5. Align Optimization With Strategic ROI

- What Are the Real-World Enterprise Use Cases of LLMOps?

- 1. BFSI & Legal Operations - Smarter Document and Contract Management

- 2. Healthcare & Life Sciences - Reliable, Compliant Decision Support

- 3. HR, Finance & Procurement - Process-Level AI Integration

- 4. Energy & Manufacturing - Knowledge Retrieval and Predictive Insights

- Why This Matters

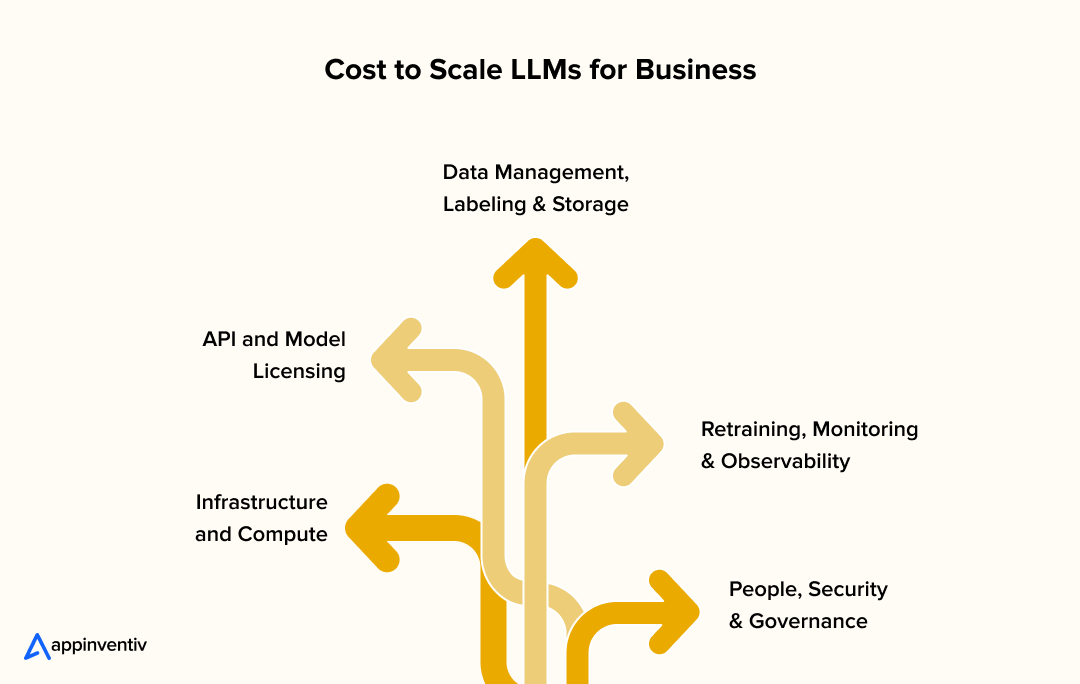

- How Much Does It Really Cost to Scale LLMs for Business?

- 1. Infrastructure and Compute

- 2. API and Model Licensing

- 3. Data Management, Labeling & Storage

- 4. Retraining, Monitoring & Observability

- 5. People, Security & Governance

- Sample Cost Architecture: 3-Year TCO for Enterprise LLM Scaling

- Smarter Budgets, Not Bigger Bills

- How Can Enterprises Build Governance, Manage Risk, and Scale Responsibly?

- Governance Built-In, Not Bolted-On

- Global Standards as Guardrails

- Continuous Oversight Through Infrastructure

- Responsible AI as an Enterprise Differentiator

- Why Appinventiv is the Enterprise LLMOps Partner You Can Trust

- Turning Large Language Models into Long-Term Enterprise Value

- FAQs

Key takeaways:

- Scaling LLMs goes beyond model training. The real challenge lies in managing costs, latency, governance, and consistency across enterprise-wide deployments.

- Traditional MLOps can’t handle LLM-scale complexity. Enterprises need automation in retraining, versioning, and monitoring to keep large language models efficient and compliant.

- LLMOps turns experiments into enterprise systems. It brings structured governance, feedback loops, and observability that ensure scalability without spiraling costs or risks.

- Optimized LLMOps reduces costs and downtime. Enterprises adopting it report 25–40% infrastructure savings and faster retraining cycles across departments and regions.

- Governed, responsible scaling drives real ROI. With frameworks aligned to ISO and NIST standards, LLMOps transforms AI from R&D trials into sustainable business value.

Two years ago, most enterprises were just experimenting with large language models – a few internal copilots, chatbot pilots, maybe a summarization engine tucked into an analytics tool. Today, those same pilots are running headfirst into reality.

Costs are ballooning. Response latencies spike under load. Governance teams can’t explain why a model’s output changed overnight. And the C-suite, once sold on AI’s promise, now asks a harder question: How do we scale without chaos?

The truth is, building and deploying one LLM is easy – scaling fifty across business functions, geographies, and compliance regimes is not. Enterprises are learning that model performance doesn’t fail at inference; it fails at operations – in monitoring, retraining, data drift, or cost management.

Before implementing an LLMOps framework, enterprises must first decide between private and public LLM deployments based on their data governance requirements, compliance needs, and operational constraints.

This is where LLMOps for enterprise applications becomes indispensable. It’s not just another process layer; it’s the missing operating system that turns raw model power into sustainable enterprise capability. By applying engineering discipline to every stage – from training pipelines to post-deployment observability – LLMOps turns innovation from an R&D experiment into an operational engine that leaders can trust, measure, and govern.

For CxOs planning the next phase of enterprise LLM deployment, the question is no longer “Can we build it?” but “Can we scale it responsibly?”

Why Traditional MLOps Fails at Enterprise Scale

At first glance, managing large language models might seem like any other machine learning challenge – data in, model trained, output delivered. But in practice, traditional MLOps practices collapse under the scale and complexity of LLMs.

Consider these real trends:

- Gartner projects that at least 30% of generative AI projects will be abandoned after proof of concept by 2025 due to costs, governance issues, or unclear value.

- Enterprise surveys report that only 48% of AI projects actually make it to production, with many stalling in pilot stages.

These numbers expose the strain of scaling: early models work, but once deployment pressure hits, gaps in pipelines, drift control, and cost management start to show.

What goes wrong in MLOps at enterprise scale:

- Unpredictable inference costs, especially when workloads spike unexpectedly.

- Model drift, hallucinations, and output inconsistency, because the model remains disconnected from real-time feedback loops.

- Data silos and governance gaps, especially in highly regulated sectors where context-rich LLM data must comply with privacy and traceability standards.

- Slow, manual deployment cycles that cannot keep pace with evolving business needs.

Enter LLMOps for enterprise applications – not as a buzzword, but as an operational backbone. Large Language Model Operations extends MLOps by automating retraining, version control, monitoring, and feedback loops specifically for language models. It lets enterprises scale LLMs while preserving performance, cost control, and compliance.

When organizations adopt LLMOps for scaling large language models, they build systems that adapt and evolve, so enterprise model operations keep working under pressure instead of limping along.

Inside an Enterprise-Grade LLMOps Framework

As large language models move from experiments to enterprise-scale adoption, organizations are discovering that the real complexity isn’t the model itself – it’s everything that keeps it running. Data needs to stay compliant, retraining cycles must be automated, and teams require visibility into what the model is doing in production. That’s the role of an enterprise-grade LLMOps framework for organizations – the operational backbone that keeps innovation from descending into chaos.

Unlike traditional MLOps, which was built for smaller, static models, LLMOps for enterprise applications brings structure to dynamic, evolving systems that depend on governance and adaptability. A well-architected framework combines pipelines, monitoring, automation, and feedback loops to keep the process of scaling large language models for business predictable, auditable, and cost-effective.

The Data and Governance Layer: Building a Trustworthy Foundation

Enterprises cannot scale AI responsibly without disciplined data operations. In LLM environments, data flows from multiple, often unstructured sources – chat logs, customer data, documents, APIs – all of which must conform to enterprise security and compliance standards.

A strong LLMOps framework for organizations begins with clear data lineage and validation. This means every token used in training can be traced back to its origin, enabling explainability and audit readiness. In one banking deployment, integrating governance-driven data audits reduced model drift by 40% and cut compliance review time in half – showing how governance, when built into design, accelerates scale instead of slowing it down.
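A minimal sketch of what “traceable back to its origin” can look like in practice, assuming each training record is fingerprinted with a SHA-256 content hash and stored alongside its source metadata. The `LineageRecord` fields, the `audit_ready` rule, and the source names are hypothetical illustrations, not any specific product’s schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LineageRecord:
    record_id: str       # SHA-256 of the record text: same text, same ID
    source_system: str   # hypothetical system name, e.g. "contracts-dms"
    source_uri: str      # where the raw record was pulled from
    ingested_at: str     # ISO-8601 UTC timestamp
    pii_checked: bool    # whether the record passed a PII scan


def make_lineage_record(text: str, source_system: str, source_uri: str,
                        pii_checked: bool) -> LineageRecord:
    """Fingerprint one training example and attach its provenance."""
    return LineageRecord(
        record_id=hashlib.sha256(text.encode("utf-8")).hexdigest(),
        source_system=source_system,
        source_uri=source_uri,
        ingested_at=datetime.now(timezone.utc).isoformat(),
        pii_checked=pii_checked,
    )


def audit_ready(records: list[LineageRecord]) -> bool:
    """Illustrative audit rule: every record has a known source and passed the PII scan."""
    return all(bool(r.source_uri) and r.pii_checked for r in records)


record = make_lineage_record("Clause 4.2: early repayment terms", "contracts-dms",
                             "dms://contracts/2024/0042", pii_checked=True)
print(json.dumps(asdict(record), indent=2))
```

Because the ID is derived from the content, re-ingesting the same text yields the same ID, which makes duplicates and unexplained changes easy to spot during an audit.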

In short, the first principle of LLM operations for enterprise is control. It ensures that innovation happens inside the boundaries of governance, not in spite of it.

The Model Optimization Layer: Continuous Learning, Controlled Costs

Once the data layer is stable, the next challenge is speed. At scale, hundreds of models may need fine-tuning, validation, and redeployment – often across teams and regions. This is where an LLMOps framework proves its value: it lets teams iterate quickly without losing precision.

Organizations that adopt structured retraining pipelines can reduce model downtime by more than 50%, using automated evaluation and promotion systems that decide which version goes live based on performance metrics, not assumptions. This type of adaptive automation is at the heart of scaling LLMs with LLMOps – enabling systems to evolve with changing business conditions while keeping costs predictable.
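The automated promotion step can be sketched as a simple gate, assuming each model version has already been scored on a held-out evaluation set and a load test. The metric names and tolerance values below are illustrative assumptions; real gates usually add safety, bias, and regression-suite checks.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    model_version: str
    accuracy: float              # accuracy on a held-out eval set, 0-1
    p95_latency_ms: float        # 95th-percentile latency from a load test
    cost_per_1k_requests: float  # USD


def should_promote(candidate: EvalResult, production: EvalResult,
                   min_accuracy_gain: float = 0.0,
                   max_latency_regression: float = 0.10,
                   max_cost_regression: float = 0.05) -> bool:
    """Promote only if the candidate is at least as accurate as production and
    does not regress latency or cost beyond the (illustrative) tolerances."""
    accuracy_ok = candidate.accuracy >= production.accuracy + min_accuracy_gain
    latency_ok = (candidate.p95_latency_ms
                  <= production.p95_latency_ms * (1 + max_latency_regression))
    cost_ok = (candidate.cost_per_1k_requests
               <= production.cost_per_1k_requests * (1 + max_cost_regression))
    return accuracy_ok and latency_ok and cost_ok


production = EvalResult("v12", accuracy=0.871, p95_latency_ms=820.0, cost_per_1k_requests=1.40)
candidate = EvalResult("v13", accuracy=0.884, p95_latency_ms=860.0, cost_per_1k_requests=1.42)
print(should_promote(candidate, production))  # True: more accurate, within latency/cost tolerance
```

Encoding the decision as code means the promotion rule itself can be versioned and reviewed, rather than living in someone’s judgment on release day.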

When teams begin treating retraining as a continuous process rather than a periodic project, the entire enterprise benefits – decision cycles shorten, insights improve, and AI systems stay relevant longer.

The Deployment and Feedback Layer: Operationalizing Intelligence

Deployment is no longer the end of the AI journey – it’s the midpoint. Enterprises must monitor their models in real time, collect feedback, and feed that intelligence back into retraining loops. This is where LLMOps integration delivers the most visible business value.

By integrating continuous monitoring and feedback channels, organizations can detect degradation early, forecast compute demand, and refine model behavior based on live interactions. Multi-cloud deployment strategies add resilience and flexibility, while cost telemetry ensures that scaling decisions become data-driven instead of reactive.
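One common way to detect degradation early is to compare the distribution of a live signal (for example, response length or a retrieval relevance score) against a baseline. The sketch below computes the Population Stability Index (PSI) in plain Python; the choice of signal, the 10-bin layout, and the synthetic data are assumptions. A widely used rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample,
    using equal-width bins over the combined range."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # all values identical: avoid dividing by zero

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        # a small floor keeps empty bins from producing log(0)
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [float(i % 50) for i in range(1000)]  # e.g. last month's response lengths
live = [v + 20 for v in baseline]                # the same signal, shifted upward
print(round(psi(baseline, live), 2))
```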

For most enterprises, this phase represents the maturity of enterprise LLM deployment – where AI becomes an operational system, not an R&D artifact.

From Technical Architecture to Business Capability

When structured correctly, LLMOps for scaling large language models becomes more than an engineering function – it turns AI into an accountable, revenue-supporting capability. Enterprises that invest in operational governance and automation typically see 25 to 40% cost reductions, improved model reliability, and a marked increase in organizational confidence in AI decisions.

It’s a clear signal of where the enterprise ecosystem is heading: models may power AI, but operations will define who scales it successfully.

How Can Enterprises Move From Pilot Projects to Scalable LLMOps Maturity?

For most organizations, deploying a single large language model feels like success – until it’s time to scale. What begins as a promising pilot often stalls when costs rise, governance falters, or systems fail to integrate with enterprise workflows.

This is where the discipline of LLMOps for enterprise applications bridges the gap between experimentation and impact. It takes what works in R&D and transforms it into a repeatable, monitored, and compliant system that drives real business outcomes.

Scaling responsibly calls for both LLMOps best practices and an enterprise-grade LLMOps strategy – not just more compute. The goal is straightforward: ensure every model performs consistently across departments, languages, and user contexts while staying auditable and cost-efficient.

1. Defining the Enterprise LLM Lifecycle

A successful framework for implementing LLMs in enterprise applications begins by mapping out the entire model lifecycle – from ideation to iteration.

- Use Case Prioritization: Identify which AI initiatives will create measurable value before committing to any infrastructure spend.

- Infrastructure Readiness: Prepare a modular LLMOps platform that connects data pipelines, cloud storage, and multiple deployment environments.

- Feedback Integration: Design continuous monitoring systems that track usage, bias, and drift across live deployments.

Enterprises that master this cycle treat AI as an operational system – not an experiment.

2. Engineering for Scalability and Reliability

Scaling large language models for business demands foresight. Teams must balance speed, reliability, and governance without trading one for another. Through LLMOps integration, enterprises can automate repetitive tasks – retraining, version control, and prompt tuning – while applying techniques that prevent downtime and improve efficiency.

Key engineering practices include:

- Multi-model orchestration to handle multiple LLM variants for different departments.

- Latency-aware inference routing to minimize response delays.

- Cost management built directly into pipelines to align with business KPIs.
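Latency-aware inference routing, in its simplest form, sends each request to the endpoint with the lowest recent tail latency. This is a minimal sketch assuming a fixed list of endpoints (the region names are hypothetical) and latency samples reported back after each call; production routers typically also weigh cost, capacity, and data-residency rules.

```python
from collections import deque
from statistics import quantiles


class LatencyAwareRouter:
    """Send each request to the endpoint with the lowest recent p95 latency."""

    def __init__(self, endpoints: list[str], window: int = 200):
        # keep only the most recent `window` samples per endpoint
        self.samples = {name: deque(maxlen=window) for name in endpoints}

    def record(self, endpoint: str, latency_ms: float) -> None:
        self.samples[endpoint].append(latency_ms)

    def p95(self, endpoint: str) -> float:
        data = self.samples[endpoint]
        if len(data) < 2:
            return 0.0  # too little history: rank as fast so it gets tried
        return quantiles(data, n=20)[-1]  # last of 19 cut points = 95th percentile

    def choose(self) -> str:
        return min(self.samples, key=self.p95)


router = LatencyAwareRouter(["us-east", "eu-west"])
for _ in range(50):
    router.record("us-east", 900.0)
    router.record("eu-west", 300.0)
print(router.choose())  # eu-west
```

Using p95 rather than the average keeps a few slow outliers from hiding behind a healthy mean, which is what users actually feel under load.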

This is where LLMOps in business shows its real value: it converts unstructured AI experiments into predictable enterprise processes.

3. Optimizing Performance and Reducing Costs

Scaling brings costs – but also opportunities. Enterprises often overspend because their models are over-provisioned, under-monitored, or misaligned with actual usage demand. By optimizing LLM performance for the enterprise, teams can monitor inference patterns, reduce idle compute, and identify cost-efficient retraining windows.

Well-structured LLMOps for enterprise AI environments deliver measurable ROI. Enterprises adopting this model have reported up to 35% savings in infrastructure cost and faster model iteration cycles, all without sacrificing performance or security.

This continuous optimization mindset turns AI from a one-time project into a self-sustaining engine – evolving as business priorities change.

4. Scaling Through Integration and Governance

No enterprise LLM deployment succeeds in isolation. Integration with ERP, CRM, and communication platforms ensures models add value across business lines, while governance layers enforce compliance and traceability. A well-implemented LLMOps foundation weaves these integrations together – connecting technology, compliance, and business intent into one operational flow.

At this stage, scaling LLMs with LLMOps becomes more than technical growth; it’s strategic alignment. The enterprise’s AI ecosystem matures – from a collection of models to a cohesive operational platform with governance baked into every stage.

The Maturity Outcome

When enterprises move beyond pilots and build structured LLMOps practices, they shift from experimentation to enterprise-scale AI reliability. LLMs stop being isolated assets and become part of a company’s operational DNA – measurable, governed, and continuously improving.

This is the true promise of LLM operations for enterprise – the ability to scale intelligently, optimize cost predictably, and maintain trust across every AI-driven workflow.

How Can Enterprises Optimize LLM Performance for Better ROI?

Once a large language model moves from prototype to production, performance becomes a business metric – not just a technical one. Latency, compute waste, and scaling inefficiencies can silently burn six-figure budgets each quarter. The goal for enterprises isn’t just “faster models”; it’s predictable efficiency that compounds ROI over time.

This is where LLMOps for enterprise applications comes in – creating the operational discipline that transforms experimentation into sustained enterprise value.

1. Treat Performance as a Core Business KPI

When an AI system underperforms, the cost shows up everywhere: delayed responses, idle agents, and missed opportunities.

Even a 300-millisecond response delay can cut user engagement by 12%, according to Google’s latency research.

In regulated sectors, such as BFSI and healthcare, every second of lag translates into real operational losses.

That’s why optimizing LLM performance for enterprise means treating latency, uptime, and throughput as financial KPIs. Under a mature LLMOps for enterprise AI program, performance telemetry – token usage, model drift, inference time – feeds directly into governance dashboards tied to business goals.
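As an illustration of turning telemetry into KPIs, the sketch below rolls per-request logs up into p50/p95 latency, SLO attainment, and cost per 1,000 requests. The 1,000 ms SLO is an assumption, and the default token prices are GPT-4 Turbo’s list prices ($0.01 and $0.03 per 1K input and output tokens); replace both with your own targets and contract terms.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class RequestLog:
    latency_ms: float
    input_tokens: int
    output_tokens: int


def kpi_summary(logs: list[RequestLog], slo_ms: float = 1000.0,
                usd_per_1k_in: float = 0.01, usd_per_1k_out: float = 0.03) -> dict:
    """Roll per-request telemetry up into latency, SLO, and cost KPIs."""
    latencies = [r.latency_ms for r in logs]
    cuts = quantiles(latencies, n=100)  # 99 cut points: cuts[49] = p50, cuts[94] = p95
    total_cost = sum(r.input_tokens / 1000 * usd_per_1k_in
                     + r.output_tokens / 1000 * usd_per_1k_out for r in logs)
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "slo_attainment": sum(x <= slo_ms for x in latencies) / len(latencies),
        "usd_per_1k_requests": total_cost / len(logs) * 1000,
    }


logs = [RequestLog(float(ms), 500, 200) for ms in range(100, 1300, 10)]
print(kpi_summary(logs))
```

A dictionary like this is the kind of payload a governance or finance dashboard can consume directly, which is what puts latency and cost on the same page as business goals.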

2. Control Scaling Costs Through Smart Infrastructure

Uncontrolled inference is one of the top challenges in scaling LLMs for enterprise. Gartner has warned that organizations that don’t understand how their AI costs scale can make errors of 500 to 1,000% in their cost calculations.

By applying LLMOps best practices, enterprises can reduce cost-performance waste dramatically:

- Dynamic scaling policies (auto-right-sizing compute) lower GPU waste by 30 to 50%.

- Multi-model endpoints in platforms like AWS SageMaker have cut inference costs by up to 80% while maintaining accuracy.

- Epoch’s analysis found that the cost of achieving GPT-4-level performance dropped 40× per year between 2020 and 2024, driven by model optimization and hardware maturity.

In short, scaling efficiency is no longer theoretical – it’s measurable. Properly managed LLM infrastructure for enterprise can yield 35 to 60% total cost reductions within the first optimization cycle.
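Dynamic right-sizing usually comes down to a ratio like the one below: provision enough replicas for peak load at a target utilization, not for a worst-case guess. This is a minimal sketch; the per-replica throughput, 70% target utilization, GPU price, and 730 hours per month are assumptions. Kubernetes’ Horizontal Pod Autoscaler applies a similar ratio-and-ceiling rule to its observed metrics.

```python
import math


def replicas_needed(peak_rps: float, rps_per_replica: float,
                    target_utilization: float = 0.7,
                    min_replicas: int = 1, max_replicas: int = 64) -> int:
    """Smallest replica count that serves peak load at the target utilization,
    clamped to the pool's configured bounds."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    raw = peak_rps / (rps_per_replica * target_utilization)
    return max(min_replicas, min(max_replicas, math.ceil(raw)))


def monthly_gpu_cost(replicas: int, gpus_per_replica: int,
                     usd_per_gpu_hour: float, hours: float = 730.0) -> float:
    """Always-on cost of a pool at a flat hourly GPU rate."""
    return replicas * gpus_per_replica * usd_per_gpu_hour * hours


n = replicas_needed(peak_rps=40, rps_per_replica=4)
print(n, monthly_gpu_cost(n, gpus_per_replica=1, usd_per_gpu_hour=4.0))
```

With a peak of 40 requests per second and 4 requests per second per replica, the pool needs 15 replicas, which at one GPU per replica and $4 per GPU-hour comes to $43,800 per month. Scaling the same formula down off-peak is where most of the idle-GPU savings come from.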

3. Use Compression, Quantization & Fine-Tuning Strategically

Larger models are not always smarter. For many enterprise use cases, compact architectures (e.g., distilled BERT models, quantized GPT variants) deliver 80-90% of the accuracy at 25-40% of the cost.

Model compression – pruning redundant weights and distilling knowledge – is a cornerstone for scaling large language models for business.

For example, a 6B-parameter model pruned to 3B parameters and then quantized can cut inference latency by around 45% and memory footprint by 60%. That means faster response times and lower infrastructure bills, provided accuracy is re-validated after compression so reliability doesn’t slip.
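To make the mechanics concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python, plus a weights-only memory estimate. It is an illustration, not a production quantizer: real toolkits use per-channel scales, calibration data, and optimized kernels, and the memory figure ignores the KV cache and activations.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q,
    with q an integer in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # all-zero tensor: any scale works
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [scale * v for v in q]


def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone (no KV cache, activations, or runtime overhead)."""
    return n_params * bits_per_param / 8 / 1e9


weights = [0.42, -1.27, 0.003, 0.9, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, round(max_error, 5))
```

By this estimate, a 6B-parameter model in fp16 needs 12 GB for its weights (`weight_memory_gb(6e9, 16)`), while a 3B-parameter int8 model needs 3 GB (`weight_memory_gb(3e9, 8)`). The rounding error per weight is bounded by half the scale, which is why accuracy must still be checked after compression.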

4. Build Continuous Monitoring and Feedback Loops

Optimization without feedback is a dead end. A production-grade LLMOps platform embeds monitoring hooks that track data drift, token anomalies, and user satisfaction metrics. Once a degradation threshold is hit, automated retraining or rollback is triggered, keeping downtime to a minimum.

This continuous feedback system is central to LLMOps integration, turning static deployments into adaptive ecosystems.

Enterprise systems that implemented real-time retraining loops saw model drift incidents fall by 35 to 45% and service availability rise above 99.9%.
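The threshold-triggered behavior described above can be sketched as a small monitor that rolls back only after quality stays below a threshold for several consecutive windows, and schedules retraining when input drift is detected but quality is still holding. The threshold, the `patience` value, and the action names are hypothetical; in practice the returned action would call your deployment and training pipelines.

```python
from collections import deque


class DegradationMonitor:
    """Recommend an action from per-window quality scores and a drift flag."""

    def __init__(self, threshold: float, patience: int = 3):
        self.threshold = threshold
        self.patience = patience
        self.recent = deque(maxlen=patience)  # last `patience` window scores

    def observe(self, window_score: float, drift_detected: bool = False) -> str:
        self.recent.append(window_score)
        sustained_drop = (len(self.recent) == self.patience
                          and all(s < self.threshold for s in self.recent))
        if sustained_drop:
            return "rollback"          # quality stayed low: revert to last good version
        if drift_detected:
            return "schedule_retrain"  # inputs shifted, but quality still holding
        return "ok"


monitor = DegradationMonitor(threshold=0.8, patience=3)
for score, drift in [(0.85, False), (0.75, False), (0.70, True), (0.72, False)]:
    print(score, monitor.observe(score, drift))
```

Requiring several consecutive bad windows is a deliberate trade-off: it avoids rolling back on a single noisy batch at the cost of reacting a few windows later.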

5. Align Optimization With Strategic ROI

The true value of optimization lies in alignment. When engineering, finance, and business teams share visibility into cost and output metrics, performance tuning becomes a business accelerator, not a technical afterthought.

Mature large language model operations ensure that every optimization – from token truncation to prompt caching – directly maps to ROI and compliance goals.
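Prompt caching is one of the easier optimizations to map to cost, because every cache hit is a model call you don’t pay for. A minimal LRU sketch follows, assuming responses are safe to reuse (for example, temperature-0 completions of repeated queries); `call_model` is a hypothetical stand-in for whatever client your stack uses.

```python
from collections import OrderedDict
from typing import Callable


class PromptCache:
    """LRU cache for model responses that are safe to reuse."""

    def __init__(self, capacity: int = 10_000):
        self.capacity = capacity
        self.store = OrderedDict()  # key -> response, least recently used first
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # collapse whitespace so trivially different prompts share an entry
        return model + "\x00" + " ".join(prompt.split())

    def get_or_call(self, model: str, prompt: str,
                    call_model: Callable[[str, str], str]) -> str:
        key = self._key(model, prompt)
        if key in self.store:
            self.hits += 1
            self.store.move_to_end(key)  # mark as most recently used
            return self.store[key]
        self.misses += 1
        response = call_model(model, prompt)
        self.store[key] = response
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict the least recently used entry
        return response


def fake_model(model: str, prompt: str) -> str:
    return prompt.upper()  # stand-in for a real API call


cache = PromptCache(capacity=2)
cache.get_or_call("m", "reset my  password", fake_model)
cache.get_or_call("m", "reset my password", fake_model)
print(cache.hits, cache.misses)  # 1 1
```

Tracking hits and misses is what lets finance see the saving: hit rate multiplied by average cost per call is money not spent.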

At this stage, scaling LLMs for enterprise stops being about compute and starts being about competitiveness.

What Are the Real-World Enterprise Use Cases of LLMOps?

Every enterprise deploying large language models eventually learns a hard truth: building the model is the easy part – scaling it sustainably is what separates early adopters from long-term winners.

That’s where LLM deployment best practices prove their worth – transforming experiments into integrated, measurable systems that work across departments and industries alike.

From HR workflows to finance analytics and patient communication, organizations are increasingly applying LLMOps for scaling large language models to ensure performance, compliance, and visibility in production.

1. BFSI & Legal Operations – Smarter Document and Contract Management

Banking and insurance institutions handle a constant flow of contracts, policy documents, and regulatory filings. Through structured LLM implementation in enterprise applications, these firms have started automating data extraction, risk flagging, and contract summarization.

Instead of running multiple standalone models, a unified LLMOps framework for organizations manages model drift, document versioning, and approval workflows under one governed process. This shift doesn’t just improve efficiency – it ensures audit readiness and consistent accuracy across thousands of transactions.

2. Healthcare & Life Sciences – Reliable, Compliant Decision Support

In healthcare systems, the balance between automation and ethics is delicate.

Hospitals applying LLMOps frameworks use LLMs to power multilingual triage assistants, claims summarization tools, and clinician support systems – all while maintaining traceability and HIPAA alignment.

LLMOps introduces continuous monitoring loops and human-in-the-loop checks that prevent over-reliance on automated outcomes – a safeguard essential for patient trust and compliance integrity.
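A human-in-the-loop check can be as simple as a routing rule applied before any output is released. This sketch assumes each output carries a confidence score (from the model or a separate verifier) and a category label; the 0.85 threshold and the always-review category set are illustrative, not clinical guidance.

```python
from dataclasses import dataclass


@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed score in [0, 1] from the model or a verifier
    category: str      # e.g. "triage", "claims_summary"


# Illustrative policy: categories that always go to a clinician, regardless of score
ALWAYS_REVIEW = {"triage"}


def route(output: ModelOutput, min_confidence: float = 0.85) -> str:
    """Decide whether an output can be released automatically."""
    if output.category in ALWAYS_REVIEW:
        return "human_review"
    if output.confidence < min_confidence:
        return "human_review"
    return "auto_release"


print(route(ModelOutput("Claim 1182: duplicate billing suspected", 0.91, "claims_summary")))
```

Keeping the policy in a small, explicit rule makes it auditable: compliance teams can read exactly which outputs bypass review and why.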

3. HR, Finance & Procurement – Process-Level AI Integration

Beyond industry-specific uses, LLMOps is increasingly applied to internal enterprise functions:

- HR: Automating employee query handling, policy search, and learning content creation.

- Finance: Drafting reports, reconciling invoices, and detecting anomalies in transactional datasets.

- Procurement: Evaluating bids and comparing vendor terms without manual review fatigue.

These real-world applications of LLMOps rely heavily on strategic integration to handle sensitive data securely, enforce approval hierarchies, and ensure explainability in automated outputs. It’s where the LLMOps infrastructure mindset shifts from “supporting IT” to “enabling every team.”

4. Energy & Manufacturing – Knowledge Retrieval and Predictive Insights

In sectors like energy and manufacturing, efficiency often depends on how fast field teams can access historical data. As they scale LLMs across the enterprise, companies are deploying AI knowledge assistants that retrieve plant maintenance records, SOPs, and troubleshooting guides in seconds.

Large Language Model Operations ensures these retrieval systems are monitored, retrained, and localized continuously – reducing downtime and ensuring operational consistency even in multilingual environments.

Why This Matters

These use cases – spanning industries and enterprise functions – prove that an enterprise LLMOps strategy isn’t just a tech framework; it’s an operational model for how AI fits responsibly within enterprise ecosystems. By embedding governance, observability, and iteration into every layer, enterprise-grade LLMOps makes AI scalable, secure, and measurable across the organization.

How Much Does It Really Cost to Scale LLMs for Business?

For most enterprises, scaling large language models isn’t expensive because the models are complex – it’s expensive because they’re not managed strategically. Without a disciplined LLMOps for enterprise applications framework, costs compound across compute, data management, retraining, and monitoring.

But with the right foundation, the cost of scaling LLMs for business becomes predictable, measurable, and even self-optimizing over time.

1. Infrastructure and Compute

This is the single biggest line item in any enterprise AI budget. LLMs need high-performance GPUs (A100s, H100s) or TPU clusters. Cloud providers such as AWS, Azure, and GCP bill per instance-hour, with prices that vary by usage and demand.

- Cloud GPU costs: $2-$10 per GPU-hour for standard workloads.

- Training large models: $100K-$400K, depending on dataset size and fine-tuning cycles.

- Inference scaling: $20K-$80K per month per sustained multi-region workload.

Well-implemented LLMOps practices (like dynamic scaling and GPU pooling) can trim 20 to 40% of these costs without sacrificing performance.

2. API and Model Licensing

If enterprises rely on proprietary APIs (OpenAI, Anthropic, or Cohere), token-based pricing adds up quickly. OpenAI’s GPT-4 Turbo, for instance, costs around $0.01 per 1K input tokens and $0.03 per 1K output tokens. For queries of a few hundred tokens each, 50,000 to 150,000 daily queries translate into roughly $15K-$50K per month in API calls alone, and millions of daily queries push the bill into the hundreds of thousands.
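Token-based pricing is easy to estimate before it shows up on an invoice. The sketch below uses the GPT-4 Turbo prices quoted above as defaults; the 500-input/200-output token profile and the 30-day month are assumptions to replace with your own traffic data.

```python
def monthly_api_cost(queries_per_day: float, input_tokens: int, output_tokens: int,
                     usd_per_1k_input: float = 0.01, usd_per_1k_output: float = 0.03,
                     days: int = 30) -> float:
    """Estimated monthly spend for a token-priced API at a steady daily volume."""
    per_query = (input_tokens / 1000 * usd_per_1k_input
                 + output_tokens / 1000 * usd_per_1k_output)
    return per_query * queries_per_day * days


for volume in (50_000, 150_000, 1_000_000):
    print(f"{volume:>9,} queries/day: ${monthly_api_cost(volume, 500, 200):,.0f}/month")
```

Under those assumptions, each query costs about $0.011, so 50,000 queries per day come to roughly $16,500 per month and 1,000,000 per day to roughly $330,000, which is why the hybrid open-source/commercial mix below matters at volume.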

Enterprises adopting hybrid models – blending open-source LLMs (like Llama 3 or Mistral) with commercial APIs – can strike the right balance between flexibility and cost. That hybrid design is a cornerstone of LLM deployment best practices.

3. Data Management, Labeling & Storage

Model training isn’t the only data expense – maintaining high-quality, compliant datasets is equally resource-intensive. Data engineering, annotation, and lineage tracking contribute $50K-$150K annually, depending on enterprise size.

By embedding automated validation pipelines via LLMOps integration, organizations can drastically reduce rework costs and data errors downstream.

4. Retraining, Monitoring & Observability

Every time data drifts, models need retraining – which means new compute cycles, new QA, and governance reviews. For a typical enterprise, annual retraining can add $100K-$250K, especially if compliance audits are involved.

Continuous monitoring through an LLMOps platform prevents unnecessary retraining cycles by catching drift early – optimizing cost per iteration.

5. People, Security & Governance

Beyond tech, human oversight and compliance are real costs. You will need ML engineers, DevOps, data scientists, and governance leads – salaries ranging from $120K to $250K per year per expert in mature markets. Add cybersecurity audits, data localization checks, and documentation – roughly 15-20% of total program cost.

A structured LLMOps for enterprise AI approach embeds these governance controls automatically, reducing human overhead and compliance friction.

Sample Cost Architecture: 3-Year TCO for Enterprise LLM Scaling

| Cost Element | Annual Range (USD) | 3-Year Estimate (USD) | Optimization Levers (LLMOps Impact) |
|---|---|---|---|
| Infrastructure (GPU, Cloud Infra) | $150K-$400K | $450K-$1.2M | Auto-scaling, GPU pooling, cost routing |
| API / Model Licensing | $50K-$200K | $150K-$600K | Hybrid LLM use (open + proprietary) |
| Data Management & Labeling | $50K-$150K | $150K-$450K | Automated validation & lineage checks |
| Retraining & Observability | $100K-$250K | $300K-$750K | Early drift detection, retrain triggers |
| People & Governance | $200K-$500K | $600K-$1.5M | Role automation, compliance templates |
| Estimated Total | $550K-$1.5M | $1.65M-$4.5M | 25-40% savings with optimized LLMOps |
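The table’s totals can be reproduced, and savings scenarios explored, with a few lines of arithmetic. This sketch copies the annual ranges from the cost table and applies a flat savings rate to the whole budget, which is a simplifying assumption; in practice each lever affects a different line item.

```python
# Annual (low, high) ranges in USD, copied from the cost table
ANNUAL_RANGES = {
    "Infrastructure": (150_000, 400_000),
    "API / Model Licensing": (50_000, 200_000),
    "Data Management & Labeling": (50_000, 150_000),
    "Retraining & Observability": (100_000, 250_000),
    "People & Governance": (200_000, 500_000),
}


def tco(years: int = 3, savings: float = 0.0) -> tuple[float, float]:
    """Total cost over `years`, with a flat savings rate applied to every line item."""
    low = sum(pair[0] for pair in ANNUAL_RANGES.values()) * years
    high = sum(pair[1] for pair in ANNUAL_RANGES.values()) * years
    return low * (1 - savings), high * (1 - savings)


for rate in (0.0, 0.25, 0.40):
    lo_total, hi_total = tco(savings=rate)
    print(f"savings {rate:.0%}: ${lo_total / 1e6:.2f}M - ${hi_total / 1e6:.2f}M")
```

Summing the annual ranges gives $1.65M to $4.5M over three years; a flat 25% saving brings that to roughly $1.24M-$3.38M, and 40% to $0.99M-$2.7M.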

Smarter Budgets, Not Bigger Bills

Scaling isn’t about spending more; it’s about spending strategically. Enterprises adopting LLMOps platforms as a governance-first discipline typically report 25 to 40% total cost reductions compared to unmanaged deployments.

That’s the power of an operational mindset – one that turns scaling from a cost problem into a business advantage.

How Can Enterprises Build Governance, Manage Risk, and Scale Responsibly?

Every conversation about LLMOps for enterprise applications eventually reaches one point: trust.

You can optimize inference time, fine-tune accuracy, and build state-of-the-art pipelines – but without auditability, governance, and ethical oversight, even the most powerful system becomes a liability.

Governance Built-In, Not Bolted-On

For most enterprises, the governance challenge begins when scaling LLMs for enterprise – multiple models, fragmented datasets, and uneven access controls. The fix isn’t a new document or compliance checklist. It’s embedding governance directly into the enterprise LLMOps strategy itself – where model versioning, role-based data access, and decision traceability are engineered into every workflow.

This approach transforms Large Language Model Operations from an engineering process into a system of accountability.
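Role-based access and decision traceability can be engineered into the request path itself. A minimal sketch, assuming a static role-to-dataset map and an in-memory audit log (both hypothetical; a real deployment would use your identity provider and an append-only store): every query is checked and logged with the model version that served it, whether or not it is allowed.

```python
import json
from datetime import datetime, timezone

# Hypothetical role-to-dataset permissions
PERMISSIONS = {
    "claims_analyst": {"claims_docs"},
    "ml_engineer": {"claims_docs", "training_corpus"},
}

AUDIT_LOG: list[str] = []  # stand-in for an append-only audit store


def authorized_query(user: str, role: str, dataset: str,
                     model_version: str, prompt: str) -> bool:
    """Check role-based access and record the decision either way."""
    allowed = dataset in PERMISSIONS.get(role, set())
    AUDIT_LOG.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "dataset": dataset,
        "model_version": model_version,
        "prompt_chars": len(prompt),  # log size, not content, to limit data exposure
        "allowed": allowed,
    }))
    return allowed
```

Logging denied requests as well as allowed ones is the point: an auditor can reconstruct who tried to reach which data, through which model version, and what the system decided.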

Global Standards as Guardrails

A future-ready governance setup aligns with global frameworks like ISO/IEC 42001:2023, the EU AI Act, and NIST’s AI Risk Management Framework. Each provides a structural backbone – fairness metrics, human oversight policies, explainability requirements – that enterprises can incorporate directly into their LLMOps framework.

These standards do not slow innovation; they simply allow it to scale securely across jurisdictions.

Continuous Oversight Through Infrastructure

In a compliant ecosystem, risk management isn’t a quarterly review – it’s continuous. A modern LLMOps platform monitors every stage of the lifecycle, flagging anomalies such as drift, bias, or unusual model behavior as they occur.

The underlying LLMOps infrastructure gives enterprise compliance teams real-time visibility into the “why” behind each output, ensuring that regulatory and ethical integrity do not become afterthoughts.

Responsible AI as an Enterprise Differentiator

Compliance has evolved from a checkbox to a competitive advantage. Enterprises that invest early in explainable, ethically aligned AI gain more than risk protection – they win stakeholder confidence. A mature enterprise LLMOps ecosystem builds that confidence by making responsibility operational, not optional.

This is the new standard for LLMOps for enterprise AI – not just scaling language models effectively, but scaling them responsibly.

Why Appinventiv is the Enterprise LLMOps Partner You Can Trust

Enterprises today need more than AI pilots – they need an enterprise-grade LLMOps strategy and execution that scale reliably, securely, and cost-efficiently. That’s exactly where Appinventiv’s AI development services stand apart.

Our approach to LLMOps for enterprise applications focuses on bridging innovation with operational control – helping Fortune 500s and fast-scaling enterprises deploy, govern, and optimize large language models with measurable ROI.

Proven Infrastructure and Scaling Expertise

Our engineering teams specialize in architecting LLMOps platforms that help you handle enterprise-grade workloads across your hybrid and multi-cloud environments. From elastic GPU orchestration to token-efficient inference routing, every component of our LLMOps infrastructure is built to reduce latency, cut operational costs, and enable global deployment.

This is what defines our strength in LLMOps for scaling large language models – we turn experimentation into production-grade reliability.

Enterprise-Grade Data Governance

Our LLM operations for enterprise frameworks embed compliance from day one.

We design pipelines aligned with ISO/IEC 42001, SOC 2, and NIST AI RMF, ensuring every stage – from data ingestion to retraining – meets governance, privacy, and audit standards. That’s how we help clients operationalize responsible AI at scale, not just adopt it.

Continuous Optimization, Long-Term ROI

The deployment phase is just the beginning. Through real-time monitoring, drift detection, and adaptive retraining loops, our post-launch systems keep models efficient, compliant, and business-aligned – turning every AI initiative into sustained enterprise value.

As an AI consulting company, we follow a guidance-first delivery model that ensures LLMOps in business isn’t just about technology; it’s about outcomes. That’s what makes Appinventiv a leading choice for enterprise-grade LLMOps delivery across BFSI, healthcare, energy, and manufacturing.

Turning Large Language Models into Long-Term Enterprise Value

Scaling large language models is no longer an experiment – it’s an enterprise necessity. But sustainable AI doesn’t come from more compute or bigger models; it comes from operational maturity.

That’s the true power of LLMOps for enterprise applications – it transforms fragmented innovation into a continuous, compliant, and scalable system of intelligence. When enterprises treat LLMOps as a core business discipline, they unlock faster time-to-market, stronger governance, and smarter cost control – the pillars of long-term AI ROI.

At Appinventiv, our role isn’t just building pipelines; it’s helping you optimize LLM performance for enterprise scale – aligning technology, governance, and capital to deliver measurable business outcomes. Whether your goal is compliance, efficiency, or competitive advantage, we architect systems that evolve seamlessly alongside your enterprise.

FAQs

Q. How can LLMOps be used to scale language models in real-world applications?

A. Think of LLMOps as the backbone that keeps your AI running smoothly once it leaves the lab. In real-world applications, teams use automated data pipelines, feedback loops, and monitoring tools to scale models for customer service, knowledge management, and internal automation without performance drops. It’s what makes scaling LLMs practical for enterprise applications, not just research demos.

Q. What are the key considerations when implementing LLMOps for language model scaling?

A. Start with data – make sure it’s clean, compliant, and relevant.

Next, design your architecture around flexibility: retraining, monitoring, and versioning should all be part of your LLMOps best practices.
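Versioning in LLMOps usually covers more than model weights: the prompt template and the data a release was tuned or evaluated on matter too. The sketch below is a hypothetical, in-memory registry. The `ModelRegistry` and `ModelVersion` names and fields are illustrative assumptions, not a specific product’s API. It records each release and supports rolling back to the previously promoted version.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ModelVersion:
    """One immutable release: what was deployed and what it was built from."""
    version: str
    base_model: str       # hypothetical model identifier
    prompt_template: str  # prompts are versioned alongside the model
    dataset_hash: str     # fingerprint of the fine-tuning / evaluation data
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ModelRegistry:
    """Minimal in-memory registry (hypothetical): register, promote, roll back."""

    def __init__(self) -> None:
        self._versions: dict[str, ModelVersion] = {}
        self._history: list[str] = []  # promotion order, newest last

    def register(self, mv: ModelVersion) -> None:
        # Versions are immutable: re-registering the same tag is an error.
        if mv.version in self._versions:
            raise ValueError(f"version {mv.version} already registered")
        self._versions[mv.version] = mv

    def promote(self, version: str) -> None:
        # Only registered versions can go live.
        if version not in self._versions:
            raise KeyError(version)
        self._history.append(version)

    @property
    def live(self) -> ModelVersion | None:
        return self._versions[self._history[-1]] if self._history else None

    def rollback(self) -> ModelVersion:
        # Revert to the version that was live before the current one.
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._versions[self._history[-1]]
```

In practice this role is usually played by a persistent registry service; the point of the sketch is that every deployed version stays traceable and reversible.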

Finally, align every process with governance – that’s the only way to make scaling sustainable and secure across departments.

Q. How do I implement LLMOps for large language models in my organization?

A. You begin small – identify one high-value use case, like automating policy research or customer documentation.

Then, set up your LLMOps framework for continuous integration and deployment. From there, it’s about refining the pipelines and observability layer until your enterprise LLM operations run like the rest of your IT infrastructure: stable, predictable, and scalable.
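One way to make continuous deployment safe for LLMs is an evaluation gate. Before a candidate model or prompt version is promoted, the pipeline scores it on a fixed evaluation set and compares it with the current production version. A minimal sketch follows; the metrics, the `passes_gate` function, and the tolerance values are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class EvalResult:
    accuracy: float              # share of evaluation cases answered correctly (0-1)
    p95_latency_ms: float        # 95th-percentile response latency
    cost_per_1k_requests: float  # estimated serving cost in dollars


def passes_gate(candidate: EvalResult, baseline: EvalResult,
                max_accuracy_drop: float = 0.01,
                max_latency_increase: float = 0.10,
                max_cost_increase: float = 0.10) -> Tuple[bool, List[str]]:
    """Hypothetical promotion gate: block the candidate if it regresses.

    Accuracy may drop by at most `max_accuracy_drop` (absolute); latency and
    cost may rise by at most the given fractions of the baseline.
    """
    reasons: List[str] = []
    if candidate.accuracy < baseline.accuracy - max_accuracy_drop:
        reasons.append(f"accuracy {candidate.accuracy:.3f} vs {baseline.accuracy:.3f}")
    if candidate.p95_latency_ms > baseline.p95_latency_ms * (1 + max_latency_increase):
        reasons.append(f"p95 latency {candidate.p95_latency_ms:.0f} ms "
                       f"vs {baseline.p95_latency_ms:.0f} ms")
    if candidate.cost_per_1k_requests > baseline.cost_per_1k_requests * (1 + max_cost_increase):
        reasons.append(f"cost ${candidate.cost_per_1k_requests:.2f} "
                       f"vs ${baseline.cost_per_1k_requests:.2f} per 1k requests")
    return (not reasons, reasons)
```

A CI job could run the evaluation, call `passes_gate`, and exit with a non-zero status when it returns `False`, which blocks the deployment step and records why.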

Q. What are the challenges of scaling LLMs for enterprise use?

A. The biggest challenges in scaling LLMs for enterprise use aren’t technical – they’re operational. Data silos, inference costs, model drift, and governance gaps usually slow things down.

That’s exactly why LLMOps for enterprise applications exists – to make sure AI models don’t just launch, but actually stay compliant, optimized, and cost-effective over time.

Q. How much does it cost to deploy LLMs in enterprise applications?

A. It varies by scale and setup. For small teams experimenting with APIs, costs might start around $20K–$50K annually.

A full enterprise LLM deployment, with private infrastructure, data governance, and monitoring, can reach $250K+ depending on the use case.

The real value comes from how efficiently your enterprise LLMOps setup manages retraining, drift control, and infrastructure usage.
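For API-based deployments, much of the variation in these ranges comes from traffic and token volume. As a back-of-envelope sketch, assume per-million-token pricing and that cached responses cost nothing (a simplification). The prices used in the example are placeholders, not any vendor’s current rates.

```python
def monthly_api_cost(requests_per_day: int,
                     input_tokens: int,
                     output_tokens: int,
                     input_price_per_m: float,
                     output_price_per_m: float,
                     cache_hit_rate: float = 0.0,
                     days: int = 30) -> float:
    """Rough monthly API spend in dollars (hypothetical cost model).

    Prices are per million tokens. A share of requests equal to
    `cache_hit_rate` is assumed to be served from cache at zero cost.
    """
    if not 0.0 <= cache_hit_rate < 1.0:
        raise ValueError("cache_hit_rate must be in [0, 1)")
    billable_requests = requests_per_day * days * (1 - cache_hit_rate)
    cost_per_request = (input_tokens * input_price_per_m
                        + output_tokens * output_price_per_m) / 1_000_000
    return billable_requests * cost_per_request


if __name__ == "__main__":
    # Placeholder inputs: 10,000 requests/day, 1,500 input + 300 output tokens,
    # $3 / $15 per million input / output tokens.
    base = monthly_api_cost(10_000, 1_500, 300, 3.0, 15.0)
    cached = monthly_api_cost(10_000, 1_500, 300, 3.0, 15.0, cache_hit_rate=0.3)
    print(f"Monthly: ${base:,.0f} without caching, ${cached:,.0f} with 30% cache hits")
```

With those placeholder inputs, the estimate is about $2,700 a month, and a 30% cache hit rate brings it to about $1,890. It covers API fees only, not infrastructure, people, or governance, which is where most of the gap to full enterprise budgets comes from.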

Q. How can Appinventiv help with LLMOps and enterprise AI deployment?

A. We act as your enterprise LLMOps strategy partner – helping you plan, build, and optimize systems that actually scale.

From architecture setup to post-deployment optimization, we’ve helped global clients operationalize LLMOps across their businesses – making their AI systems more efficient, compliant, and future-proof.
