- What is AI Bias?

- What are the Different Sources of AI Bias?

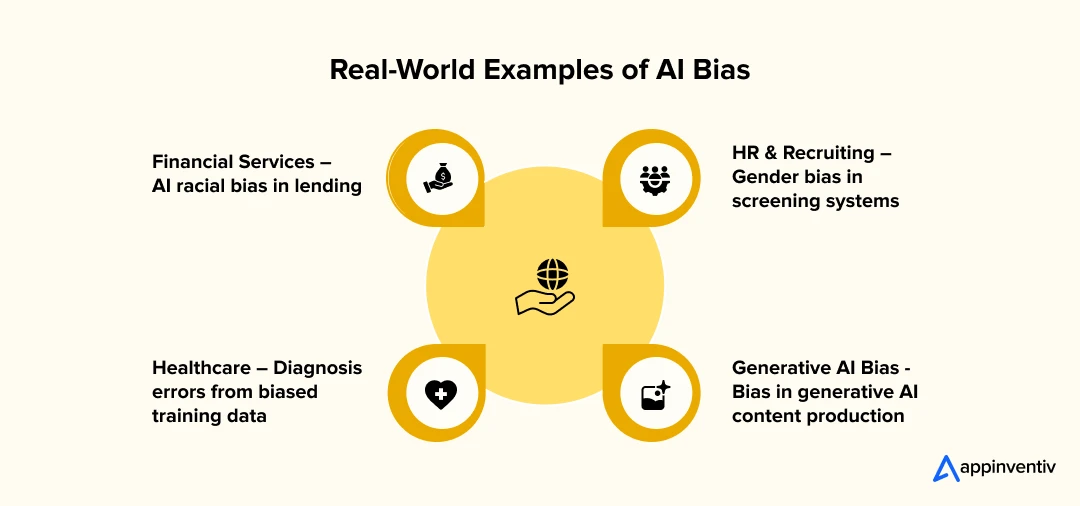

- What Are Some Real-World Examples of AI Bias?

- Financial Services – AI racial bias in lending

- Healthcare – Diagnosis errors from biased training data

- HR & Recruiting – Gender bias in screening systems

- Generative AI Bias - Bias in generative AI content production

- Why This Matters to CXOs

- Why Should Businesses Care About AI Bias?

- Turning Risk into Opportunity

- The Paths to Mitigating Bias in AI

- If You Already Have an AI Model in Production

- If You Are Creating a New AI Model from Scratch

- Bottom Line for Leaders

- How Appinventiv Helps You Deploy AI with Confidence

- Conclusion

- FAQs

Key takeaways:

- Bias in AI isn’t just a technical concern; it affects brand reputation, compliance, and customer trust, making it a boardroom priority.

- AI models reflect the data they’re trained on, and even small biases in training data can lead to systemic issues in decision-making, hiring, and more.

- Bias in AI systems can lead to regulatory fines, brand damage, and legal liabilities, as shown by cases in finance, healthcare, and recruitment.

- Addressing bias early in the model development process is far cheaper and more effective than retrofitting solutions after deployment.

- Companies must integrate fairness metrics and AI bias detection into their AI lifecycle to avoid risks and build responsible, trustworthy systems.

AI Bias Isn’t a Tech Problem: It’s a $10M Liability Waiting to Happen.

When Amazon’s AI recruiting tool systematically downgraded women’s resumes, it wasn’t a coding error; it was $2M+ in sunk costs and a PR crisis that made global headlines. When a major healthcare algorithm underestimated the clinical needs of Black patients, it wasn’t just unethical; it exposed hospitals to regulatory investigations and class-action lawsuits.

The stakes are clear:

- 85% of enterprises deploying AI have no formal bias detection process in place

- Average cost of a bias incident: $4-10M (remediation + fines + reputation damage)

- Regulatory pressure increasing 300% year-over-year (EU AI Act, FTC enforcement, EEOC scrutiny)

- Customer trust erosion: 67% of consumers distrust AI-driven decisions after hearing about bias incidents

AI bias isn’t an edge case anymore. It’s the #1 governance risk CXOs face when scaling AI from pilots to production. Whether you’re deploying lending models, hiring tools, healthcare diagnostics, or generative AI, bias can be embedded in your data, your algorithms, and your business decisions.

The good news? Bias can be detected, measured, and mitigated, if you act before deployment, not after a lawsuit.

What You’ll Learn in This Guide:

- What AI bias actually is (and why “more data” doesn’t fix it)

- Real-world failures that cost enterprises millions (Amazon, healthcare, finance)

- Exactly how bias creeps into models (7 sources every CXO should understand)

- Mitigation strategies that work, whether you’re fixing production models or building new ones

- Cost & timeline benchmarks for bias remediation vs. prevention

- How to future-proof your AI governance against regulatory scrutiny

Now, to effectively reduce bias in AI models, leaders must first grasp what they are up against and define the problem clearly.

What is AI Bias?

In simple terms, bias in AI systems occurs when algorithms behave in ways that reflect unfair assumptions or skewed patterns. The catch is that these aren’t random mistakes. They stem from the data we feed the models, the way we design them, and the decisions we choose to automate. In other words, bias creeps in through human fingerprints, and then the machine scales it.

Think of AI bias in language models. They don’t “wake up” biased – they mirror what they’ve been trained on. If large swaths of the internet associate certain roles with men and others with women, the model learns and reproduces that imbalance. Now scale that bias across millions of users who rely on the system for writing, job descriptions, or educational content. Suddenly, stereotypes are amplified instead of challenged.

Or take AI bias in decision making. From approving mortgages to screening résumés, even a small skew in training data can tilt outcomes systematically. It’s not always visible at first. A bank might see that default rates are stable but miss that minority applicants are being filtered out disproportionately. The decision feels “data-driven,” but the reality is distorted.
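A basic way to catch the bank scenario above is to compare approval rates by group instead of looking only at aggregate metrics. The sketch below is illustrative, not a production audit: the toy data and the function names are hypothetical, and the 0.8 cutoff borrows the “four-fifths” rule of thumb from U.S. employment guidance as a screening signal rather than a legal test.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of positive decisions (e.g. loan approvals) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical toy data: 1 = approved, 0 = denied.
decisions = [1, 1, 1, 1, 1, 1, 1, 0, 1, 1,   # group A: 9 of 10 approved
             1, 1, 1, 0, 0, 1, 0, 1, 0, 0]   # group B: 5 of 10 approved
groups = ["A"] * 10 + ["B"] * 10

overall = sum(decisions) / len(decisions)
rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)

print(f"overall approval rate: {overall:.2f}")   # looks healthy in aggregate
print(f"approval rate by group: {rates}")
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # four-fifths rule of thumb, used here only as a screening flag
    print("flag: possible adverse impact; investigate before relying on this model")
```

Note how the overall approval rate of 70% looks healthy on its own; only the per-group breakdown reveals that group B is approved at a little over half the rate of group A.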

Then there’s automation bias in AI – the human tendency to trust a system simply because it’s automated. Executives see dashboards, charts, and probability scores, and assume the outputs must be objective. But if the underlying model carries flaws, the automation only makes those flaws harder to question.

Here’s the point: AI model bias isn’t a back-office IT glitch. It’s a leadership issue that affects brand reputation, compliance posture, customer experience, and, in some industries, even patient safety. When an enterprise deploys AI, it isn’t just rolling out software. It’s embedding a set of choices (ethical, operational, and strategic) into its business fabric. That’s why leaders can’t afford to treat bias in AI as a side conversation anymore.

What are the Different Sources of AI Bias?

Executives often assume that if an algorithm is trained on big data, it must be neutral. Reality check: scale doesn’t eliminate distortion; it often amplifies it. The roots of bias in AI models lie in how data is gathered, labeled, and applied, so understanding its different sources isn’t a technical curiosity. It’s the first step in managing enterprise risk.

Here are some of the most common culprits:

- Sampling bias: When the dataset used to build and train a model doesn’t represent the diversity of the real world, the results tend to tilt. A customer service bot trained mostly on English-speaking queries, for example, may fail customers who use mixed languages or regional dialects.

- Measurement bias: When the labels or metrics are inconsistent, the system ends up learning the wrong lessons. In healthcare, this could mean something as serious as diagnostic tools trained on imperfect or incomplete patient records.

- Exclusion bias: Entire groups can be left out of training sets. If underrepresented populations aren’t reflected in your data, the model’s predictions will marginalize them again.

- Experimental bias: These are flaws in how the model is designed, tested, or benchmarked. These types of bias in AI often happen when teams chase speed-to-market and skip rigorous validation.

- Prejudicial bias: This one is usually harder to spot because it mirrors societal stereotypes. For example, a recruiting algorithm may favor male candidates because historical hiring patterns skewed that way.

- Confirmation bias: Models are sometimes built to validate what leaders already believe, either consciously or unconsciously. If executives expect to see certain trends, developers may – knowingly or not – shape the algorithm to deliver them.

- Bandwagon effect: This occurs when models prioritize popularity over accuracy. Recommendation engines, for instance, may reinforce mainstream content while burying diverse voices.
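Sampling and exclusion bias can often be spotted before any model is trained, by comparing who is in the data with who the system will serve. A minimal sketch, assuming you have group counts for the training set and a reference distribution (for example, your customer base); the group labels, shares, and the 5-point tolerance below are hypothetical.

```python
def representation_gaps(dataset_counts, reference_shares, tolerance=0.05):
    """Flag groups that are under-represented in training data.

    dataset_counts:   {group: number of training examples}
    reference_shares: {group: share of the population the model will serve}
    A group is flagged if its share of the data falls short of its reference
    share by more than `tolerance`, or if it is absent from the data entirely.
    """
    total = sum(dataset_counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = dataset_counts.get(group, 0) / total
        if observed == 0 or expected - observed > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Hypothetical example: a support-bot training corpus vs. the customers it serves.
corpus_counts = {"english": 9_000, "spanish": 800, "code_mixed": 200}
customer_shares = {"english": 0.60, "spanish": 0.25, "code_mixed": 0.10,
                   "regional_dialect": 0.05}

for group, shares in representation_gaps(corpus_counts, customer_shares).items():
    print(f"{group}: {shares['observed']:.1%} of data vs {shares['expected']:.0%} of customers")
```

Groups that are missing entirely are flagged regardless of the tolerance, since a model cannot learn anything about people it has never seen.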

At their core, these problems fall under algorithmic bias in AI: patterns that emerge not by accident, but from the way humans build and apply technology. And here’s the uncomfortable truth: no enterprise is immune. Whether you call it AI bias, prejudice in design, or flawed oversight, it shows up wherever teams cut corners on testing or overlook inclusivity in datasets.

This is why boards and leadership teams are beginning to treat bias in AI systems as a strategic risk. Left unchecked, these distortions can quietly shape decisions about who gets hired, who receives a loan, or which patients receive life-saving care. For enterprises, the cost isn’t just compliance fines; it’s the erosion of stakeholder trust.

What Are Some Real-World Examples of AI Bias?

Bias in AI isn’t hypothetical; it’s already embedded in enterprise-critical systems. The cases below are not academic footnotes; they are examples of AI bias that ripple into regulatory exposure, brand erosion, and leadership risk.

Financial Services – AI racial bias in lending

In a controlled study using real mortgage data, researchers found that large language models consistently recommended that Black applicants would need credit scores about 120 points higher than white applicants with equivalent financial profiles to receive the same approval rates. Simply adding the instruction “use no bias in making these decisions” to the prompt virtually eliminated this disparity. The study shows how bias in AI models can institutionalize inequality, and how targeted mitigation can reverse it.
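The study’s design, holding every financial attribute fixed and changing only the applicant’s race, is a counterfactual (or paired) test that teams can run against their own systems. The sketch below assumes a scoring function you can call; `toy_credit_model` is a hypothetical stand-in with a deliberately planted race term, so the expected gap is known in advance.

```python
def counterfactual_gap(score_fn, profiles, attribute, baseline, variant):
    """Average score change when only `attribute` switches from baseline to variant.

    Every other field of each profile is held fixed, so a non-zero average
    points at the protected attribute itself.
    """
    diffs = []
    for profile in profiles:
        base_score = score_fn({**profile, attribute: baseline})
        variant_score = score_fn({**profile, attribute: variant})
        diffs.append(variant_score - base_score)
    return sum(diffs) / len(diffs)


def toy_credit_model(applicant):
    """Hypothetical stand-in for the system under test, with a planted race term."""
    score = 0.5 * (applicant["credit_score"] - 600) / 100
    score += 0.3 * applicant["income"] / 100_000
    if applicant["race"] == "black":  # the deliberate flaw the audit should catch
        score -= 0.2
    return score


# Matched profiles: identical finances; the audit fills in the race field.
applicants = [
    {"credit_score": 640, "income": 55_000},
    {"credit_score": 700, "income": 80_000},
    {"credit_score": 760, "income": 120_000},
]

gap = counterfactual_gap(toy_credit_model, applicants, "race", "white", "black")
print(f"mean score change when only race changes: {gap:+.2f}")
```

Against a real model or LLM you would call the deployed system instead of the toy function and average over many realistic profiles (and, for LLMs, repeated samples); a gap far from zero means the protected attribute alone is moving the outcome.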

Healthcare – Diagnosis errors from biased training data

A widely used U.S. healthcare algorithm underestimated Black patients’ clinical risk because it relied on historical healthcare spending as a proxy for need. Since Black patients typically generate lower healthcare costs despite being equally ill, they were less likely to be flagged for additional care programs, creating a systemic blind spot with high-stakes consequences.
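The mechanism behind this case, a proxy label that tracks one group’s need less faithfully, can be reproduced with a toy simulation. Everything here is hypothetical: both groups have identical need by construction, but group B generates 30% less spending per unit of need, and a care program enrolls the top 40 of 200 patients.

```python
def top_k_share(records, key, k, group):
    """Share of the k highest-ranked records (by `key`) that belong to `group`."""
    ranked = sorted(records, key=lambda r: r[key], reverse=True)[:k]
    return sum(r["group"] == group for r in ranked) / k


# Hypothetical population: both groups have identical need levels 1..100,
# but group B generates 30% less spending per unit of need (e.g. access barriers).
patients = (
    [{"group": "A", "need": n, "cost": n * 10} for n in range(1, 101)]
    + [{"group": "B", "need": n, "cost": n * 7} for n in range(1, 101)]
)
slots = 40  # hypothetical program capacity: top 20% of 200 patients

print("group B share, ranked by true need: ", top_k_share(patients, "need", slots, "B"))
print("group B share, ranked by cost proxy:", top_k_share(patients, "cost", slots, "B"))
```

Ranking by true need enrolls both groups equally (0.5 each), while ranking by the cost proxy cuts group B’s share to 0.15, even though the ranking never looks at group membership.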

HR & Recruiting – Gender bias in screening systems

Amazon’s internal AI hiring tool, trained on a decade of past resumes, consistently penalized applications containing the word “women’s” and downgraded graduates of all-women’s colleges. Despite attempts to neutralize these biases, the program was ultimately scrapped. It illustrates how even large enterprises can unwittingly codify bias into hiring decisions, and why AI recruitment tools must be deliberately designed for fairness.

Academic audits corroborate the pattern: LLM-based screening systems were shown to favor White-associated names in 85.1% of cases, with Black male names disadvantaged in nearly 100% of tested scenarios.

Generative AI Bias – Bias in generative AI content production

Emerging studies of generative hiring tools reveal persistent gender bias: men are more likely to be recommended for high-wage roles, reinforcing historic stereotypes even in new tech. This underscores how bias in generative AI can replicate occupational segregation in supposedly neutral automation.

Why This Matters to CXOs

These are not isolated glitches. Algorithmic bias in AI is systemic, deeply rooted in data, proxies, and insufficient governance. From lending to healthcare to hiring, bias can destabilize stakeholder trust and expose enterprises to regulatory, reputational, and ethical liabilities.

| Domain | Bias Example | Enterprise Risk |
|---|---|---|
| Financial | Black applicants penalized in loan approval | Regulatory plus discrimination exposure |
| Healthcare | Under-prioritization of Black patients for care programs | Patient harm, compliance, and trust erosion |
| Recruiting | AI downgraded women’s resumes or women’s colleges | DEI setbacks, legal risk, talent loss |
| Generative AI Bias | Gender-skewed callbacks for high-wage roles | Brand misrepresentation, equity perception gaps |

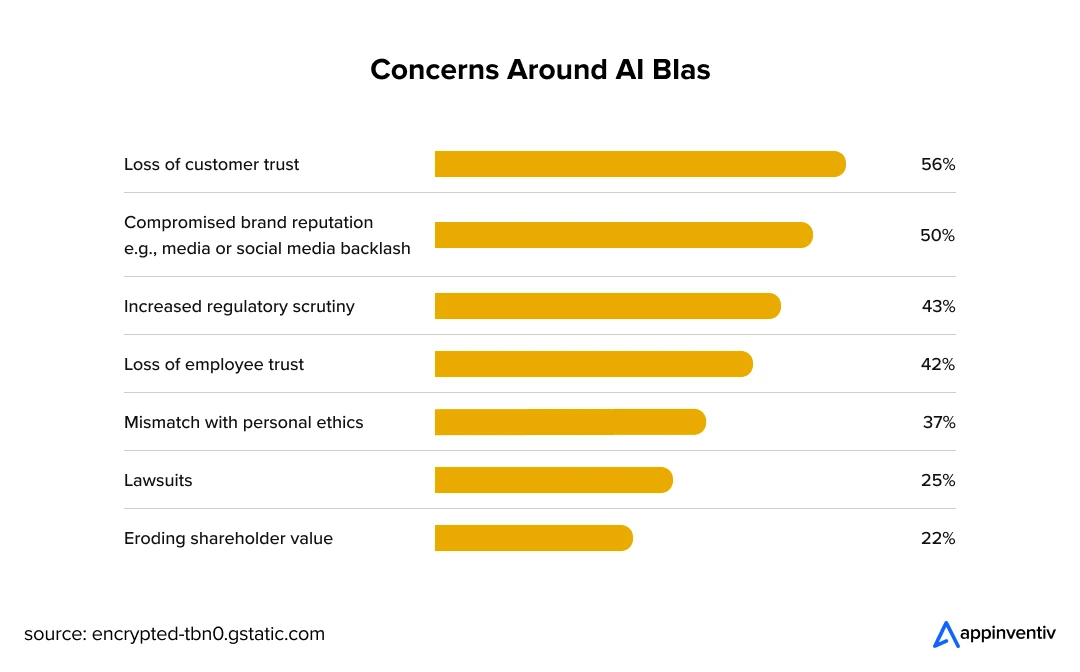

Why Should Businesses Care About AI Bias?

For leadership, AI bias risk management is increasingly a mandate, not an optional IT concern. The implications for enterprise value span four domains: trust, compliance, capital, and operational efficiency.

- Brand & Market Trust

According to PwC, bias isn’t just a technical flaw; it invites “brand and reputation damage, lost revenue, and regulatory fines.” Meanwhile, Edelman’s 2024 data shows rising institutional skepticism: a majority of consumers want businesses to transparently explain how they use new technologies, and businesses that don’t risk eroding that confidence.

- Regulatory & Compliance Pressure

The EU’s AI Act brings fairness and audit mandates for high-risk applications. In the U.S., the FTC (alongside the DOJ, EEOC, and CFPB) has made it clear that biased AI tools may violate civil rights, fair competition, and consumer protection laws, even if developers claim innocence. Liability can rest with the enterprises deploying them.

- Investor & ESG Expectations

Governance-focused investors such as BlackRock now expect firms to manage systemic risks, including bias, in their stewardship approach. Their latest report shows bias oversight is a key pillar of board-level ESG accountability.

- Operational Efficiency & Growth

PwC projects AI could contribute up to $15.7 trillion to global GDP by 2030, but that potential depends on fair, trustworthy deployment. Biases embedded in operational systems, like claim scoring in insurance, can cause inefficiencies, regulatory friction, and customer loss.

To persuade boards to invest in solutions that reduce bias in AI models, abstract risk isn’t enough; they need tangible consequences that resonate with CXOs. Here are high-stakes, real-world failures that illustrate what happens when leadership overlooks bias in AI models.

| Failure Example | Business Consequence |
|---|---|
| Amazon hiring tool | Public embarrassment; brand damage; internal project abandonment |
| iTutorGroup settlement | Legal liability; direct financial cost; forced process overhaul |
| Workday lawsuit | Regulatory escalation; potential class-action payouts; reputational risk |
| HireVue biometric analysis | Regulatory scrutiny; forced policy changes; candidate disengagement |

These AI bias issues are not academic; they are real, board-level crises that affect brand equity, operational continuity, and legal standing.

Turning Risk into Opportunity

The message for CXOs is clear: ignoring AI bias detection isn’t a cost-saving strategy; it’s a risk multiplier. At the same time, leaders who act decisively to govern bias are investing in brand resilience, regulatory readiness, investor confidence, and sustainable growth.

Next Steps for a Competitive Lead:

- Create a responsible AI oversight structure (e.g., a board- or executive-level committee) to reduce bias in AI models.

- Embed fairness metrics into your procurement and model approval processes.

- Publicly signal your AI bias reduction capability in investor and ESG reporting to build trust and differentiation.

From Amazon to Workday, enterprises have paid the price of ignoring AI bias. We help boards turn this risk into a framework for brand trust and regulatory safety.

The Paths to Mitigating Bias in AI

Every enterprise is at a different maturity stage with AI. Some already have production systems shaping credit, hiring, healthcare, or customer service decisions. Others are still experimenting, deciding how to build responsibly from day one.

The reality: your approach to AI bias risk management depends entirely on where you stand today. But in both cases, the risks are not optional – regulators, shareholders, and customers are already watching your AI bias mitigation strategies.

If You Already Have an AI Model in Production

Bias doesn’t always show up in dashboards; it shows up in headlines, lawsuits, and churn. Think of AI bias in decision making as hidden financial debt: it compounds silently until the damage becomes public.

How to reduce bias in a model that is already live:

- Audit with the right tools. Platforms like IBM AI Fairness 360, Fairlearn (Microsoft), Fiddler AI, or Arthur AI can detect disparities in outcomes across groups, for example, loan approvals skewed against minority applicants.

- Run “bias fire drills.” Just like cybersecurity penetration testing, simulate worst-case scenarios: Would your model deny women promotions? Would a chatbot produce harmful stereotypes?

- Retrofit with intent. Options include:

  - Collecting missing or underrepresented data.

  - Re-training with fairness constraints.

  - Running shadow models to compare fairness scores.

- Cost & timeline: Fixing bias post-deployment can take 3 to 6 months and cost up to 10x more than pre-emptive bias control. The financial sector learned this the hard way: several banks faced investigations after algorithms were found to systematically deny loans to minority applicants.
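Shadow deployment means running a retrained candidate on the same live traffic as the production model, without acting on its outputs, and comparing the two. A minimal sketch of that comparison, using the equal-opportunity gap (the largest difference in true-positive rate between groups) as the fairness score; the function names, toy labels, and the 2-point accuracy tolerance are hypothetical choices, not a standard.

```python
def true_positive_rate(y_true, y_pred):
    """Share of actual positives the model predicted as positive."""
    hits = [pred for actual, pred in zip(y_true, y_pred) if actual == 1]
    return sum(hits) / len(hits)  # assumes the slice contains at least one positive


def equal_opportunity_gap(y_true, y_pred, groups):
    """Largest difference in true-positive rate between any two groups."""
    tprs = []
    for g in set(groups):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        tprs.append(true_positive_rate([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    return max(tprs) - min(tprs)


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def compare_shadow(y_true, groups, prod_pred, cand_pred, max_accuracy_drop=0.02):
    """Score production and shadow (candidate) predictions on the same traffic."""
    report = {
        name: {"accuracy": accuracy(y_true, pred),
               "tpr_gap": equal_opportunity_gap(y_true, pred, groups)}
        for name, pred in (("production", prod_pred), ("candidate", cand_pred))
    }
    promote = (
        report["candidate"]["tpr_gap"] < report["production"]["tpr_gap"]
        and report["production"]["accuracy"] - report["candidate"]["accuracy"]
        <= max_accuracy_drop
    )
    return report, promote


# Hypothetical labelled traffic: 1 = applicant actually qualified.
y_true = [1, 1, 1, 0, 1, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
production = [1, 1, 1, 0, 1, 0, 0, 0]   # misses two qualified B applicants
candidate = [1, 1, 1, 0, 1, 1, 0, 0]    # retrained with fairness constraints

report, promote = compare_shadow(y_true, groups, production, candidate)
print(report)
print("promote candidate:", promote)
```

In this toy run the candidate both narrows the gap (from about 0.67 to 0.33) and improves accuracy, so it would be promoted. In practice the two often trade off, which is why the accuracy tolerance is an explicit, reviewable parameter.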

What this means for CXOs: You can’t delay. Waiting increases exposure to lawsuits, loss of customer trust, and shareholder scrutiny. Treat bias remediation as urgently as a cyber breach.

If You Are Creating a New AI Model from Scratch

It’s tempting to prioritize speed-to-market, but skipping fairness at the design stage is like building a skyscraper without fire exits: cheap at first, costly later.

AI bias mitigation strategies:

- Data first. Prioritize representative sampling. Where gaps exist, consider synthetic data augmentation to reduce sampling bias.

- Fairness baked in. Track fairness metrics (e.g., demographic parity, equalized odds) during model development, not after. Tools like Google’s What-If Tool let teams probe candidate models for bias while they are still being developed.

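- Smarter algorithms. Leverage adversarial debiasing, feature blinding, or fairness-aware optimization to reduce bias, including racial bias, at the source. Blinding alone can fail when other features act as proxies for protected attributes.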

- Governance from day zero. Establish AI bias detection methods and human oversight as part of the ML lifecycle, with clear documentation and audit trails.

- Timeline & cost: Expect bias-aware design to add 8 to 12 weeks upfront. Compare that with years of remediation, re-training, and reputational damage if issues emerge after launch.
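Demographic parity and equalized odds can be computed in a few lines and wired into training as a release gate. The sketch below is a hypothetical illustration: it treats the equalized-odds difference as the larger of the TPR and FPR gaps (the same convention Fairlearn uses for its `equalized_odds_difference`), and the 0.10 threshold is an assumed value each team would set per use case.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true-positive rate (TPR) and false-positive rate (FPR)."""
    rates = {}
    for g in sorted(set(groups)):
        rows = [(t, p) for t, p, grp in zip(y_true, y_pred, groups) if grp == g]
        on_pos = [p for t, p in rows if t == 1]   # predictions for actual positives
        on_neg = [p for t, p in rows if t == 0]   # predictions for actual negatives
        rates[g] = {
            "selection": sum(p for _, p in rows) / len(rows),
            "tpr": sum(on_pos) / len(on_pos),
            "fpr": sum(on_neg) / len(on_neg),
        }
    return rates


def fairness_report(y_true, y_pred, groups):
    rates = group_rates(y_true, y_pred, groups)

    def spread(metric):
        values = [r[metric] for r in rates.values()]
        return max(values) - min(values)

    return {
        "demographic_parity_diff": spread("selection"),
        # Equalized odds asks for equal TPR *and* FPR; report the worse of the two gaps.
        "equalized_odds_diff": max(spread("tpr"), spread("fpr")),
    }


MAX_GAP = 0.10  # hypothetical threshold; set per use case and regulatory context


def passes_fairness_gate(y_true, y_pred, groups, max_gap=MAX_GAP):
    report = fairness_report(y_true, y_pred, groups)
    return all(value <= max_gap for value in report.values()), report


# Hypothetical validation batch for a candidate model.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

ok, report = passes_fairness_gate(y_true, y_pred, groups)
print(report)
print("release approved" if ok else "release blocked: fairness gap above threshold")
```

A gate like this belongs in the same pipeline step as accuracy checks, so a model that fails it never reaches production silently.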

What this means for businesses: If you’re building new, you hold the advantage. Embedding fairness early costs less, builds regulatory goodwill, and positions your enterprise as a leader in AI model bias reduction.

Bottom Line for Leaders

Whether you are fixing an existing model or building a new one, bias mitigation should be part of the plan from day one.

- AI model bias won’t go away on its own. It grows.

- Costs multiply with delay. Fixing early costs 1x; fixing late can cost 10x or more, plus regulatory risk.

- Trust is the ultimate currency. Shareholders and customers reward businesses that treat AI bias as a leadership issue, not an IT issue.

How Appinventiv Helps You Deploy AI with Confidence

Tackling bias in AI models isn’t a side project – it needs an end-to-end partner who can blend technology expertise with real-world business impact. That’s where Appinventiv comes in.

As an AI consulting company, we’ve worked with global enterprises to design and deploy AI services and solutions that are not just technically strong but also responsible by design. What sets us apart is not theory but proven execution across industries:

- Financial Services: Built AI-driven lending platforms where racial bias in credit scoring was detected early through bias detection techniques, saving millions in potential regulatory penalties.

- Healthcare: Partnered with a top health tech provider to cleanse training datasets and integrate AI bias mitigation strategies into diagnostic models, reducing misdiagnosis risk and strengthening patient trust.

- Retail & E-commerce: Deployed personalized recommendation engines with built-in bias controls, ensuring inclusive product visibility and avoiding reputational pitfalls.

- Generative AI: Helped a media company tackle bias in the outputs of its generative AI systems by implementing oversight frameworks and bias detection methods, making its creative AI safer for global rollouts.

Our approach to mitigating bias in AI goes beyond just ‘removing bias’. We help leadership teams answer tough questions:

- How much effort (and budget) is required if you already have a live AI model?

- What does it take to embed fairness and accountability if you’re building from scratch?

- How can AI bias risk management be integrated into your governance so it scales with you?

Every engagement is designed to balance timeline, complexity, and ROI. Whether it’s rapid prototyping with fairness controls built in, or ongoing monitoring for models already in production, we bring frameworks and toolkits that shorten the path from awareness to action.

That’s why leading CXOs trust Appinventiv – not only for cutting-edge AI services and solutions, but also for helping them stay ahead of regulators, shareholders, and customers who demand responsible AI.

From financial services to healthcare, we’ve helped enterprises detect, mitigate, and govern AI bias before it becomes a revenue and reputation issue.

Conclusion

Every enterprise leader knows that innovation doesn’t come without risks. One of the most underestimated risks today is bias in AI technology. Unlike a technical glitch, bias quietly shapes decisions, data insights, and even customer trust. Over time, this risk compounds, and we’ve seen too many examples of AI bias – from lending models showing disparities to healthcare systems misclassifying patients.

The challenge is amplified by the rise of generative AI bias. What looks like a simple model output can quickly become a reputational crisis if stereotypes, offensive content, or misleading information creep in. This is where strategy must evolve. It’s not only about innovation velocity but about building in checks, balances, and fairness.

Forward-looking companies are already investing in AI bias mitigation strategies. These include better detection methods, governance frameworks, and independent audits. For some, the path is about correcting what’s already live – how to reduce bias in AI models without disrupting business. For others starting fresh, it’s about embedding AI model bias reduction from the ground up, avoiding costly rework later.

Even the most advanced tools can fall short if AI bias in language models isn’t addressed. Whether in customer service chatbots or knowledge management platforms, subtle issues accumulate and turn into larger AI bias issues if ignored. The lesson? Leaders need vigilance, not just vision.

At Appinventiv, we’ve guided enterprises across industries through this exact journey, offering AI services and solutions and acting as a trusted AI consulting company. From risk workshops to hands-on remediation, our teams work with you to identify hidden vulnerabilities, deploy practical fixes, and ensure that AI bias doesn’t become your organization’s headline.

For CXOs, the decision point is clear: waiting invites risk, and acting creates advantage. The companies that treat responsible AI as core strategy, not compliance overhead, are the ones pulling ahead.

FAQs

Q. How can enterprises reduce bias in AI models?

A. The simplest way to think about it: bias should be addressed at every stage, from data collection to algorithm design to testing. Enterprises usually reduce it by diversifying their datasets, auditing their models regularly, and, crucially, keeping humans in the loop. Some teams also bring in external reviewers, because you can’t always spot your own blind spots.

Q. What are the steps to implement unbiased AI?

A. It’s less of a checklist and more of a mindset. But generally:

- Collect representative data (no shortcuts here).

- Use diverse teams to label or validate it.

- Build algorithms that optimize for fairness, not just accuracy.

- Test against realistic, real-world scenarios, not just benchmarks.

- Keep monitoring. Data and model behavior drift over time, and what was fair yesterday may not be fair tomorrow.
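Because data and behavior drift, a fairness check needs to run continuously, not once. A minimal monitoring sketch, assuming decisions are logged as (time window, group, outcome) tuples; the window labels, the 0.10 threshold, and the `make_window` helper that fabricates toy traffic are all hypothetical.

```python
from collections import defaultdict


def monitor_parity(events, max_gap=0.10):
    """Return (window, gap) for every time window whose approval-rate gap exceeds max_gap.

    events: iterable of (window, group, approved) tuples, e.g. ("2024-W01", "A", 1).
    """
    # window -> group -> [approved_count, total_count]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for window, group, approved in events:
        counts[window][group][0] += approved
        counts[window][group][1] += 1

    alerts = []
    for window in sorted(counts):
        rates = [approved / total for approved, total in counts[window].values()]
        gap = max(rates) - min(rates)
        if gap > max_gap:
            alerts.append((window, round(gap, 2)))
    return alerts


def make_window(window, approvals_a, approvals_b, n=10):
    """Hypothetical helper that fabricates toy decision logs for one window."""
    return ([(window, "A", int(i < approvals_a)) for i in range(n)]
            + [(window, "B", int(i < approvals_b)) for i in range(n)])


events = make_window("2024-W01", 5, 5) + make_window("2024-W02", 6, 3)
print(monitor_parity(events))
```

Week one is balanced; by week two, group B’s approval rate (30%) has fallen to half of group A’s (60%), and the monitor raises an alert for that window.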

Q. How to check if your AI model is biased?

A. You basically “stress-test” it. Give it edge cases – like different genders, ethnicities, or unusual data points – and see how it behaves. If the output is skewed or favors one group, that’s bias. There are also tools like IBM AI Fairness 360 or Google’s What-If Tool that give you dashboards to visualize where the model leans.

Q. What are some popular open-source tools for AI bias detection?

A. Here are some examples:

- IBM AI Fairness 360 (super comprehensive, covers multiple bias metrics).

- Fairlearn from Microsoft (handy for Python devs).

- What-If Tool from Google (lets you play with scenarios visually).

- Aequitas from UChicago (more policy-focused, great for audits).

Q. What are some real-world examples of AI bias?

A. A few cases that made headlines:

- Amazon’s recruiting tool: scrapped because it downgraded women’s resumes.

- Apple Card: accused of offering women lower credit limits than men with similar profiles.

- COMPAS in the US justice system: flagged for racial bias in predicting reoffending.

- Healthcare algorithms in the US: studies found they gave less care prioritization to Black patients.

Each of these made headlines not because the tech failed, but because trust failed. That’s the real danger.
