- Do You Really Need an AI Development Partner?

  - Look Honestly at Internal Readiness

  - Choose Between Hiring, Outsourcing, or Partnering Based on Reality

  - Notice the Signs That Signal External Support Is Needed

- What an AI Development Partner Actually Does

  - Beyond “Building Models”: Enterprise Delivery Responsibilities

  - Strategic Validation, Planning, and Governance Facilitation

  - End-to-End Delivery Versus Piecemeal Support

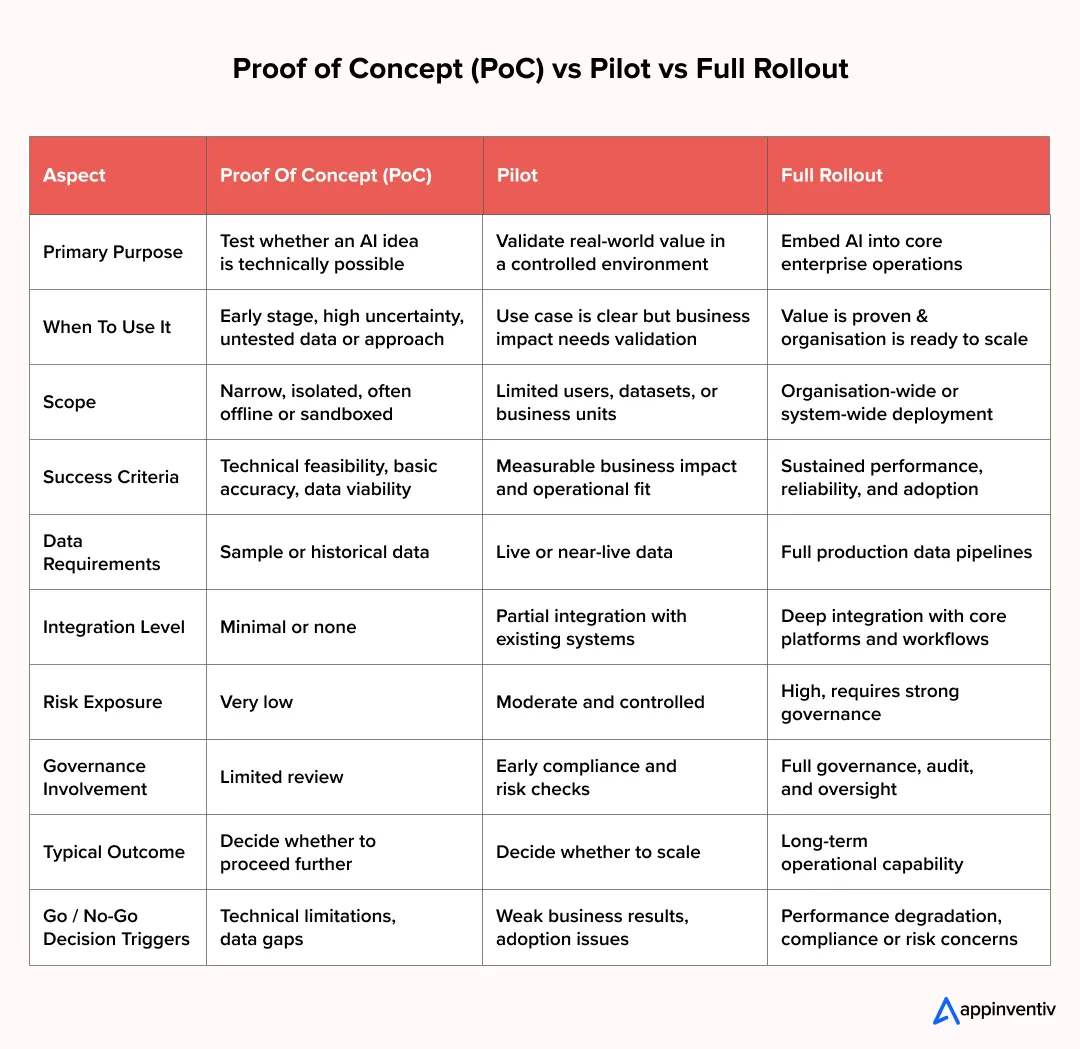

- How to Define Your AI Initiative Scope

  - Start With the Business Outcome, Not the Technology

  - Choose Opportunities That Balance Impact With Reality

  - Plan Beyond the Pilot From Day One

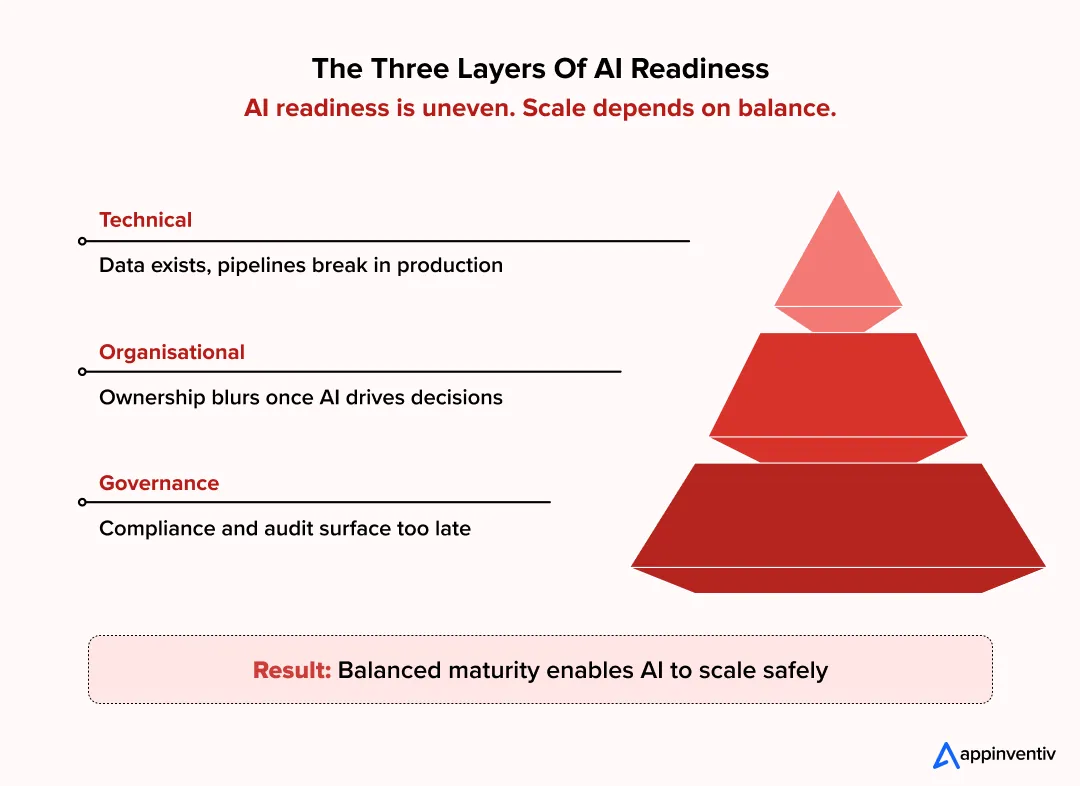

- A Maturity Framework for AI Readiness

  - Technical Readiness: Data, Infrastructure, and Tooling

  - Organisational Readiness: Stakeholders and Ownership

  - Governance Readiness: Risk, Compliance, and Audit Expectations

- A Step-by-Step Checklist for Hiring an AI Development Partner in the UK

  - Readiness Assessment

  - Shortlisting And Vendor Evaluation

  - Technical And Commercial Scorecards

  - Final Negotiation And Contracting

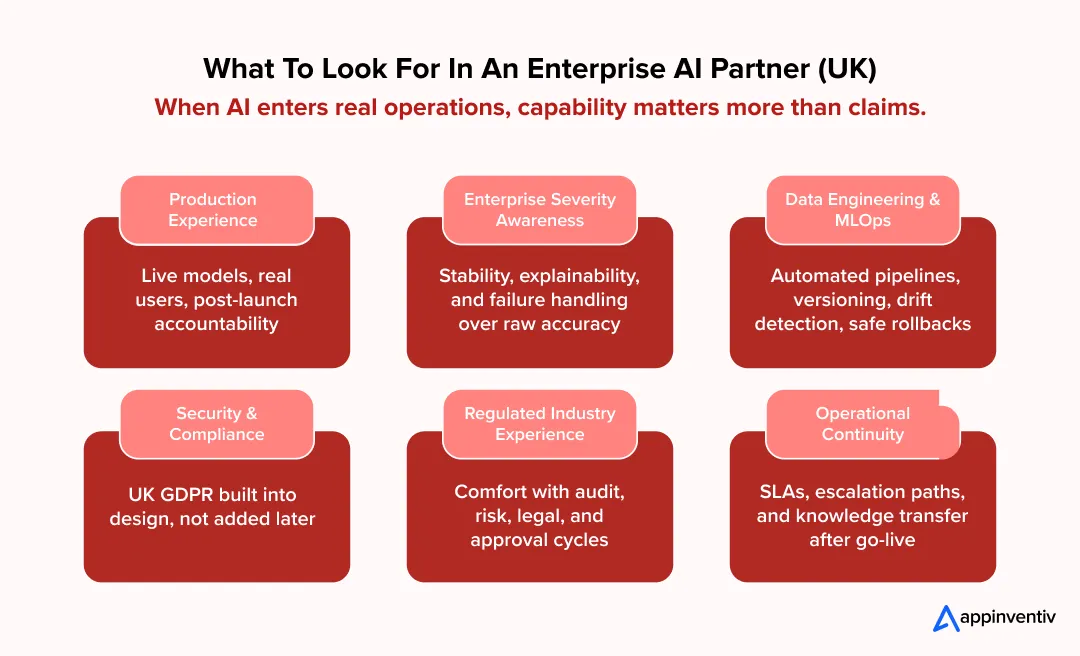

- Key Capabilities to Look for in an Enterprise AI Development Partner in the UK

  - Applied AI and Production Experience

  - Data Engineering and MLOps Maturity

  - Security, Cloud, and Scalable Architecture

  - Experience With UK and Regulated Sectors

  - Responsibility for Operational Continuity

- Choosing the Right Engagement Model

  - Dedicated Teams vs Hybrid Delivery Models

- Red Flags When Hiring an AI Development Partner

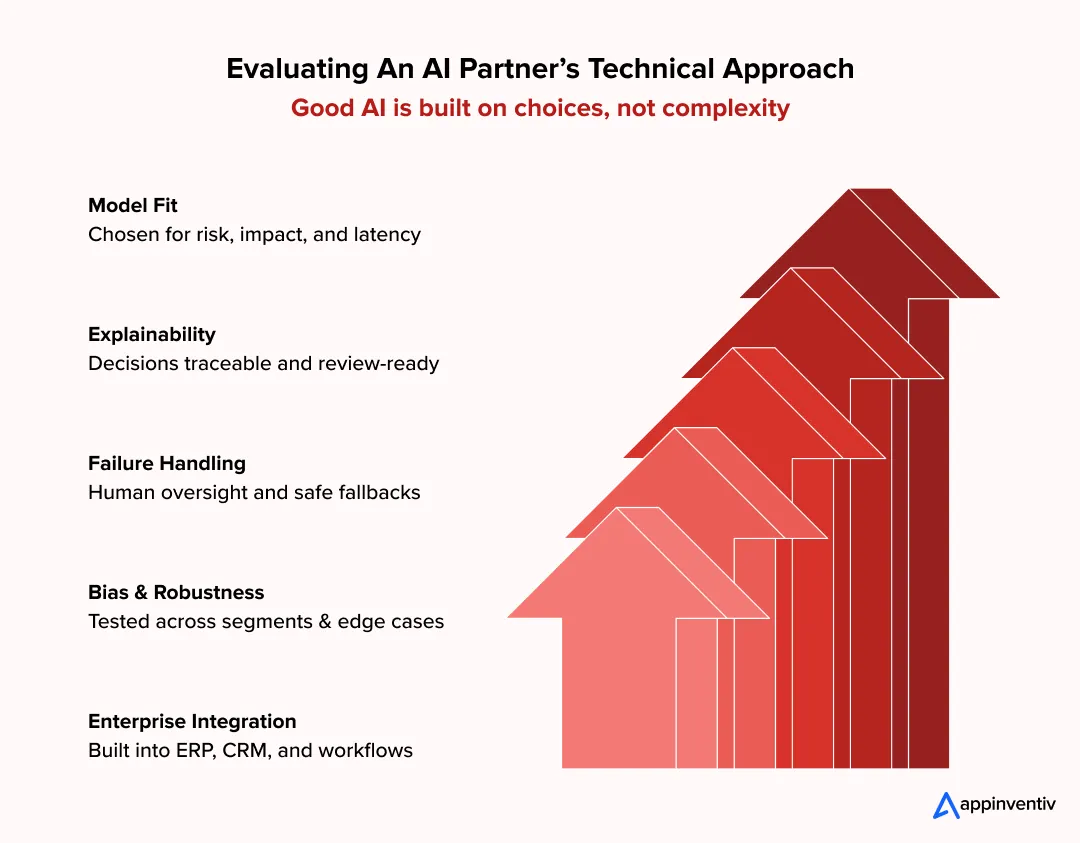

- Evaluating Technical Approach of Your AI Technology Partner in the UK

  - Model Selection Based on Business and Risk Context

  - Explainability and Decision Transparency

  - Risk Controls and Failure Handling

  - Data Bias Identification and Mitigation

  - Robustness and Stress Testing

  - Integration Strategy With Enterprise Systems

- Technologies And Tools Used In AI Development

- Cost Structures and Budget Planning

  - What Influences AI Partner Cost

  - UK Cost Benchmarks And Hidden Cost Categories

  - How To Build Realistic Budgets And Contingency Buffers

- Security, Compliance And Governance Considerations

  - UK GDPR, Algorithmic Fairness, And Documentation Needs

  - Audit Capabilities And Explainability Expectations

  - Risk Escalation Frameworks And Governance Checkpoints

- Common Mistakes And How To Avoid Them

  - Over-Engineering Before Validating Business Value

  - Treating AI As a Standalone System

  - Underestimating Data Preparation Effort

  - Ignoring Governance Until Late In The Process

  - No Clear Ownership After Go-Live

- Post-Selection Onboarding And Transition

  - Internal Preparation For Partner Collaboration

  - Knowledge Transfer And Documentation Expectations

  - Setting Performance Reviews And KPIs

- Making A Confident, Long-Term AI Partner Decision

- FAQs

Key takeaways:

- AI initiatives fail more often due to delivery, governance, and integration gaps than model limitations.

- Choosing the right AI partner is a business decision, not just a technical one.

- Production experience, data engineering maturity, and UK regulatory awareness matter more than innovation claims.

- Clear scope, realistic budgeting, and early governance involvement reduce rework and stalled pilots.

- The right AI partner helps turn experimentation into reliable, long-term enterprise capability.

“AI sounds decisive in boardrooms. It feels far less certain on the ground.”

Many UK organisations invest in AI with clear intent, yet struggle to turn it into something that genuinely changes how teams work. Deloitte’s UK AI research shows that while adoption continues to rise, a large number of initiatives stall at the pilot stage because operating models, data readiness, and delivery capability are not built for scale. In most cases, the technology works. The organisation around it does not.

In the UK, this challenge shows up early. Legal teams raise questions about data use. Risk teams ask who owns automated decisions. IT teams struggle to integrate models into systems that were never designed for AI. UK GDPR obligations, legacy platforms, and cautious governance slow momentum, often forcing teams to pause or rethink projects that looked strong on paper.

This is why hiring an AI development partner in the UK is a business decision, not just a technical one. This guide supports UK AI development partner selection by focusing on execution reality, regulatory confidence, and long-term delivery, so AI initiatives move beyond experimentation and into dependable enterprise use.

88% of organisations report measurable value from AI, yet most struggle to scale it across the enterprise.

Do You Really Need an AI Development Partner?

This question usually comes up when progress starts to slow. Teams may have tested an idea, built a small model, or explored a tool, and then realised that moving forward feels harder than expected. It is rarely because the team lacks interest. More often, the work demands skills, time, and ownership that stretch what is already in place.

Look Honestly at Internal Readiness

Many organisations have capable engineers and analysts, but real-world AI use cases demand more than isolated expertise. Common gaps include:

- Data is spread across systems and not easy to prepare

- No clear process exists for deploying or monitoring models

- Ownership becomes unclear once something goes live

- Security and compliance reviews introduce delays

When these gaps appear, internal teams often end up maintaining workarounds instead of building momentum.

Choose Between Hiring, Outsourcing, or Partnering Based on Reality

Each path has trade-offs, and none is a default answer.

- Hiring takes time and works best when AI is a long-term, core capability

- Outsourcing can help with specific tasks but rarely solves end-to-end delivery

- Partnering suits situations where delivery, integration, and accountability need to move together

For many UK enterprises, a partner provides structure without forcing an immediate organisational overhaul.

Notice the Signs That Signal External Support Is Needed

Certain patterns tend to repeat when organisations try to do everything alone.

- Pilots that never move beyond testing

- Difficulty connecting AI outputs to real workflows

- Ongoing concerns from legal, risk, or IT teams

- No single owner responsible for outcomes

When these issues persist, hiring an AI development partner in the UK often helps shift the focus from experimentation to execution, making progress feel achievable again.

What an AI Development Partner Actually Does

In enterprise environments, AI delivery is rarely a single task. It is a chain of decisions, handovers, approvals, and integrations that must hold together over time. This is where the role of an AI development partner becomes clearer. Their responsibility is not just to build intelligence, but to carry it safely from idea to day-to-day operations.

A custom AI solutions partner in the UK must adapt delivery to existing systems rather than forcing generic implementations.

Beyond “Building Models”: Enterprise Delivery Responsibilities

Model development is often the most visible part of AI, but it is not where most effort goes. In practice, partners spend far more time dealing with system constraints and operational realities.

- Translating business goals into AI use cases that can actually be deployed

- Designing data pipelines that remain stable as data volume and sources change

- Integrating AI into existing platforms such as ERP, CRM, analytics, or workflow tools

- Ensuring performance, security, and reliability once systems are under real load

Without this work, even strong models struggle to gain adoption across teams.

Strategic Validation, Planning, and Governance Facilitation

Before development starts, experienced partners help enterprises slow down in the right places. This stage is critical for avoiding rework later.

- Validating whether proposed AI use cases are feasible given data, timelines, and constraints

- Helping define success in business terms, not just technical metrics

- Supporting conversations around ownership, accountability, and escalation

- Aligning AI initiatives with internal governance and regulatory expectations

This planning phase often determines whether a project scales smoothly or repeatedly hits internal roadblocks.

End-to-End Delivery Versus Piecemeal Support

One of the biggest differences between suppliers becomes visible over time. Some focus on isolated tasks. Others take responsibility for the full lifecycle.

| Area | Piecemeal Support | End-to-End AI Partner |
|---|---|---|
| Scope | Limited to model building or experiments | Covers strategy, build, deployment, and optimisation |
| Ownership | Handoffs between multiple teams | Clear accountability throughout the lifecycle |
| Integration | Often left to internal teams | Designed and delivered as part of the solution |
| Governance | Treated as a later concern | Embedded from the start |
| Long-term value | Declines after delivery | Improves as systems mature |

For enterprises, end-to-end delivery reduces coordination risk and avoids gaps between teams.

In practice, a strong AI development partner acts as an extension of the organisation. They help translate ambition into systems that survive internal scrutiny, integrate cleanly into existing operations, and continue delivering value long after the initial implementation is complete.

How to Define Your AI Initiative Scope

Scope is often where AI initiatives quietly go off track. Teams start with good intent, but without clear boundaries the work expands, priorities shift, and momentum fades. In enterprise settings, discipline at this stage saves far more time than it costs.

Start With the Business Outcome, Not the Technology

AI works best when it is tied to something concrete.

- What decision needs to be made?

- Where is time, cost, or risk building up today?

If those questions cannot be answered clearly, AI is unlikely to deliver meaningful results.

Choose Opportunities That Balance Impact With Reality

Some ideas look valuable on paper but are hard to execute.

- Favour use cases where data already exists and teams can act on the output

- Be cautious of initiatives that depend on major system changes or unclear ownership

- Think through regulatory or reputational implications early

Progress comes faster when the first use case is manageable as well as valuable.

Plan Beyond the Pilot From Day One

Pilots often prove that something is possible, but not that it is sustainable.

- Decide upfront what would justify scaling

- Make sure systems, data, and governance will support growth

- Avoid building something that only works in isolation

When scope is set with these realities in mind, AI initiatives are more likely to mature into something the business can rely on rather than another short-lived experiment.

A Maturity Framework for AI Readiness

Before bringing an AI partner into the picture, it helps to take an honest look inward. Most organisations are not uniformly “ready” for AI. Some teams are technically prepared, others are still figuring out ownership, and governance often lags behind both. Seeing AI readiness as a maturity curve rather than a checklist makes gaps easier to address before choosing a partner.

Technical Readiness: Data, Infrastructure, and Tooling

This is usually where confidence is highest and where assumptions are most common.

- Data may exist, but not always in a form that models can use reliably

- Pipelines often work for analytics but struggle with real-time or production workloads

- Tooling may support experimentation but lack support for deployment, monitoring, or version control

Enterprises that underestimate this layer often find themselves rebuilding foundations mid-project.

Organisational Readiness: Stakeholders and Ownership

AI introduces shared responsibility, which can slow progress if roles are unclear.

- Decisions span IT, data teams, business owners, and risk functions

- Ownership can become blurred once models start influencing outcomes

- Progress depends on whether leaders stay engaged beyond initial approval

When accountability is weak, even technically sound initiatives tend to lose momentum.

Governance Readiness: Risk, Compliance, and Audit Expectations

This is where many AI initiatives pause unexpectedly.

- Risk teams need clarity on how automated decisions are controlled and reviewed

- Compliance requirements shape what data can be used and how outputs are explained

- Audit and documentation expectations often emerge late if not planned upfront

Building strong AI guardrails for governance does not slow operations down. It reduces rework and builds the confidence to scale.

Looking at readiness through these three lenses helps organisations move forward with fewer surprises. It shifts AI from an aspirational goal to something that can be delivered, defended, and sustained over time.

A Step-by-Step Checklist for Hiring an AI Development Partner in the UK

Choosing an AI partner tends to go wrong when decisions are rushed or driven by surface-level impressions. Effective UK AI development partner selection depends on structure, not speed. This checklist breaks the hiring process into four stages.

Readiness Assessment

Before speaking to vendors, most of the work needs to happen internally. This step is about getting everyone aligned.

- Be clear on what problem the business wants to solve and why AI is being considered

- Check whether the data needed actually exists and who controls it

- Agree on who will make decisions when trade-offs appear

- Set realistic expectations around budget, timing, and risk

This groundwork saves time later and narrows the field quickly.

Shortlisting And Vendor Evaluation

Shortlists should reflect relevance, not brand familiarity.

- Look for partners who have worked on similar problems or in similar environments

- Pay attention to how openly vendors talk about challenges, not just outcomes

- Notice whether answers are practical or overly generic

Partners who understand the work tend to ask probing questions, not just answer them.

Technical And Commercial Scorecards

Scorecards help teams compare options without relying on instinct alone.

- Technical criteria might include data handling, deployment approach, and operational readiness

- Commercial criteria should cover pricing structure, flexibility, and ongoing support

- Weight each area based on what matters most to the business

This makes trade-offs visible and discussions more objective.
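To make the weighting step concrete, here is a minimal Python sketch of a weighted vendor scorecard. The criterion names, weights, the 1 to 5 scoring scale and the two vendors are all hypothetical placeholders; a real panel would substitute the criteria and weights it has agreed on.

```python
from dataclasses import dataclass

# Hypothetical criteria and weights agreed by the evaluation panel (must sum to 1.0).
WEIGHTS = {
    "data_handling": 0.25,
    "deployment_approach": 0.20,
    "operational_readiness": 0.20,
    "pricing_structure": 0.15,
    "flexibility": 0.10,
    "ongoing_support": 0.10,
}


@dataclass
class VendorScores:
    name: str
    scores: dict[str, int]  # each criterion scored from 1 (weak) to 5 (strong)


def weighted_total(vendor: VendorScores, weights: dict[str, float]) -> float:
    """Weighted score for one vendor, on the same 1-5 scale as the inputs."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(vendor.scores)
    if missing:
        raise ValueError(f"{vendor.name} has no score for: {sorted(missing)}")
    return sum(weights[c] * vendor.scores[c] for c in weights)


def rank(vendors: list[VendorScores], weights: dict[str, float]) -> list[tuple[str, float]]:
    """Vendors ordered from highest to lowest weighted score."""
    totals = [(v.name, round(weighted_total(v, weights), 2)) for v in vendors]
    return sorted(totals, key=lambda item: item[1], reverse=True)


# Hypothetical example scores.
vendor_a = VendorScores("Vendor A", {
    "data_handling": 4, "deployment_approach": 5, "operational_readiness": 3,
    "pricing_structure": 3, "flexibility": 4, "ongoing_support": 4,
})
vendor_b = VendorScores("Vendor B", {
    "data_handling": 4, "deployment_approach": 4, "operational_readiness": 4,
    "pricing_structure": 5, "flexibility": 4, "ongoing_support": 4,
})
print(rank([vendor_a, vendor_b], WEIGHTS))
```

Rejecting missing scores, rather than treating them as zero, keeps a vendor from being quietly penalised because one evaluator skipped a row.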

Final Negotiation And Contracting

Contracts should reflect how AI work unfolds in reality, not how it looks in proposals.

- Allow room for iteration without constant renegotiation

- Be explicit about IP ownership, data usage, and confidentiality

- Agree on how issues are escalated and resolved

- Match service levels to business impact, not just delivery milestones

A clear agreement at this stage reduces friction later and sets the tone for a productive working relationship. For organisations wondering how to choose an AI development partner in the UK, evaluating technical and delivery capabilities is the most dependable basis for every stage of this checklist.

Key Capabilities to Look for in an Enterprise AI Development Partner in the UK

Once AI starts touching real operations, enterprises stop asking what could work and start asking what will hold up. At this stage, capability is not about innovation claims. It is about whether your AI technology partner in the UK has already dealt with complexity, scrutiny, and failure in real environments.

Applied AI and Production Experience

Many custom artificial intelligence developers can show a working demo. Fewer can explain what happened after launch.

- Models in live use, not only in demos

Look for partners who have supported AI models after they went live. That usually means dealing with changing data, unexpected behaviour, and real users rather than static test sets.

- Understanding enterprise severity, not just accuracy

In enterprise settings, a small error can trigger financial loss, compliance issues, or customer impact. Mature partners design for stability, response time, and AI explainability, and they plan what happens when the model is unsure or wrong.

The difference shows in how comfortably a partner talks about edge cases and failure, not just performance scores.

Data Engineering and MLOps Maturity

This is where AI either becomes dependable or quietly starts to decay.

- Pipeline automation, versioning, and drift management

Strong partners treat data pipelines as long-term systems. They automate ingestion and preparation, track model versions, and watch for data or behaviour changes that affect output quality.

- Deployment automation and rollback controls

Models should be deployed with the same care as enterprise software. That includes controlled releases, testing in live environments, and the ability to roll back quickly when results drift from expectations.

Without these controls, confidence in AI systems erodes fast.
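Drift management is easier to discuss with a concrete check. The sketch below computes a Population Stability Index (PSI) between a reference sample and live data for one numeric feature. PSI is only one common drift measure; the 10 equal-width bins and the 0.1 / 0.2 warn and alert levels are widely used rules of thumb rather than standards, and the function names are hypothetical.

```python
import math
from typing import Sequence


def psi(reference: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a live sample.

    Equal-width bins span the reference data's min to max; live values outside
    that range are clipped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, live_p = proportions(reference), proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


def drift_status(score: float, warn: float = 0.1, alert: float = 0.2) -> str:
    """Map a PSI score to an action level using rule-of-thumb thresholds."""
    if score >= alert:
        return "alert"  # e.g. pause automated use; consider rollback or retraining
    if score >= warn:
        return "warn"   # e.g. investigate before the next release
    return "stable"


reference = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in reference]
print(drift_status(psi(reference, reference)), drift_status(psi(reference, shifted)))
```

In a pipeline, a check like this would typically run per feature on a schedule, with an "alert" result feeding the rollback or retraining controls described above.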

Security, Cloud, and Scalable Architecture

In the UK, security questions tend to surface early and stay central.

- UK GDPR compliance baked into design

Partners should be clear about how personal data is collected, used, and retained, and how those obligations shape integration. Explainability, consent, and traceability need to be designed into the system rather than documented after the fact.

- Secure cloud deployment and data residency plans

Enterprises expect clarity on where data sits, who can access it, and how it is protected. Scalability matters, but not at the expense of control or compliance.

If these points are vague, approval for production is often delayed or denied.

Experience With UK and Regulated Sectors

Delivery in regulated environments follows a different rhythm.

- Working with audit, legal, procurement, and risk teams

Partners need to handle reviews, documentation requests, and approval gates without bringing delivery to a halt.

- Familiarity with sector-specific governance expectations

Financial services, healthcare, and public-sector organisations impose additional checks. Partners who have worked in these environments tend to anticipate them rather than react late.

This experience reduces friction and avoids last-minute redesigns.

Responsibility for Operational Continuity

AI systems do not end at go-live. That is often when the real work begins.

- Post-launch support, SLAs, and escalation paths

Enterprises need to know who responds when performance drops or incidents occur, and how quickly issues are addressed.

- Training and handover to internal teams

Long-term success depends on internal understanding. Good partners document decisions, explain system behaviour, and help teams take ownership over time.

In practice, the right UK AI development partner is one who plans for life after launch. They build systems that can be questioned, maintained, and trusted, not just delivered.

Choosing the Right Engagement Model

Once an organisation commits to AI, delivery decisions start to matter more than intent. In many UK enterprises, projects slow down not because the model is wrong, but because the engagement model does not match internal constraints, risk appetite, or technical reality. Choosing how you work with a partner is as important as choosing who that partner is.

Build, Buy, or Partner: Understanding the Real Trade-Offs

Each approach places a different burden on the organisation.

- Building internally gives full control over architecture, data, and IP, but it also means carrying the full weight of hiring specialist roles, setting up custom MLOps, maintaining infrastructure, and meeting governance expectations. For teams without mature data platforms or AI operations experience, timelines often stretch far beyond initial plans.

- Buying off-the-shelf solutions can accelerate early adoption, especially for narrow use cases, but these tools are often opinionated. Integration with existing systems, custom workflows, and UK-specific compliance requirements can become limiting factors over time.

- Partnering is typically chosen when enterprises need production-grade delivery without rebuilding internal structures. A strong partner brings established engineering practices, deployment pipelines, and governance experience while sharing responsibility for outcomes.

In regulated UK environments, partnering often reduces delivery risk while preserving enough control to satisfy compliance and audit teams.

Fixed-Scope Contracts vs Outcome-Based Engagements

The commercial model directly affects how technical decisions are made.

- Fixed-scope contracts work when requirements are stable and well understood, such as system integration or data pipeline build-outs. However, AI initiatives often surface unknowns around data quality, model behaviour, or integration complexity once work begins.

- Outcome-based engagements allow scope to evolve as learning emerges. These models focus on agreed business metrics, such as reduction in manual effort or improvement in decision accuracy, rather than predefined tasks.

From a technical perspective, outcome-based models better support iterative development, model tuning, and architecture adjustments without constant renegotiation.

Dedicated Teams vs Hybrid Delivery Models

Team structure influences both speed and long-term sustainability.

- Dedicated partner teams bring focus and continuity. They are well suited when AI systems are core to business operations and require deep context around data, workflows, and risk tolerance.

- Hybrid delivery models combine partner specialists with internal engineers, data teams, and product owners. This setup supports knowledge transfer, shared ownership, and smoother handover over time.

Ultimately, the right engagement model reflects how much control, flexibility, and accountability the organisation needs at its current stage. When chosen carefully, it enables AI initiatives to move forward with fewer blockers and far less rework as complexity increases.

Red Flags When Hiring an AI Development Partner

Certain warning signs tend to appear early in conversations, long before delivery begins. Spotting them upfront can save months of rework and avoid stalled initiatives.

- Overpromising outcomes: Claims of near-perfect accuracy, guaranteed ROI, or rapid enterprise rollout usually signal a lack of real production experience.

- Vague answers on data and integration: If a partner cannot clearly explain how they will handle your data, integrate with legacy systems, or manage data quality, delivery risk is high.

- Weak governance and compliance awareness: Limited understanding of UK GDPR, EU Data Act, explainability requirements, or audit expectations often leads to late-stage blockers.

- No plan beyond go-live: Partners who focus only on build and deployment, without discussing monitoring, retraining, or support, rarely deliver long-term value.

- Generic case studies: Examples that lack detail on scale, constraints, or lessons learned are often a sign of shallow experience.

Understanding these warning signs is a critical part of choosing an AI development partner in the UK without relying on surface-level signals.

Evaluating Technical Approach of Your AI Technology Partner in the UK

When AI moves from idea to implementation, technical approach is where differences between partners become clear. A credible AI technology partner in the UK makes trade-offs explicit rather than hiding complexity.

This is not about using advanced models. It is about how decisions are made when accuracy, risk, explainability, and integration start pulling in different directions.

Model Selection Based on Business and Risk Context

A strong partner does not default to the most complex model available. They start by understanding where and how the output will be used.

- Model choice should reflect decision criticality, latency needs, and tolerance for error

- Simpler models are often preferred in regulated or high-impact workflows because they are easier to explain and govern

- Partners should be able to justify why a specific model type is suitable for the use case

This shows whether the partner designs for real-world use rather than technical novelty.

Explainability and Decision Transparency

Explainability is not a documentation task. It is part of system design.

- AI outputs should be interpretable by business, risk, and audit teams

- Decisions that affect customers or operations should be traceable back to inputs and logic

- Partners should plan for explainability early, not retrofit it after deployment

In UK enterprises, lack of transparency is a common reason AI systems fail internal reviews.
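One way to make outputs interpretable by non-specialists is to use a model whose score splits cleanly into per-input contributions. The sketch below assumes a simple logistic scoring model with hypothetical feature names, intercept and weights on standardised inputs, and returns each input's contribution alongside the score so a reviewer can see what drove it. More complex models need dedicated explanation techniques; this only illustrates the idea of designing traceability in.

```python
import math

# Hypothetical model: intercept and per-feature weights on standardised inputs.
INTERCEPT = -1.0
MODEL_WEIGHTS = {"missed_payments": 1.2, "account_age_years": -0.4, "utilisation": 0.8}


def explain(features: dict[str, float]) -> dict:
    """Score one case and return each feature's contribution to the logit."""
    contributions = {name: w * features[name] for name, w in MODEL_WEIGHTS.items()}
    logit = INTERCEPT + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    # Strongest drivers first, whether they pushed the score up or down.
    drivers = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {"probability": probability, "logit": logit, "drivers": drivers}


result = explain({"missed_payments": 1.0, "account_age_years": 2.0, "utilisation": 0.5})
print(result["drivers"])
```

Because the contributions add up exactly to the logit, the explanation shown to a risk or audit reviewer is the model's actual reasoning rather than an approximation of it.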

Risk Controls and Failure Handling

Enterprise AI must assume that models will be wrong at times.

- Confidence scoring and thresholds help flag uncertain predictions

- Human-in-the-loop workflows reduce exposure where automation carries risk

- Fallback logic ensures systems degrade safely rather than fail silently

Partners who design for failure tend to deliver systems that earn long-term trust.
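The three controls above can be combined in a small routing function. This is a minimal sketch, assuming a model exposed as a hypothetical `predict(case)` callable that returns a label and a confidence between 0 and 1; the 0.90 and 0.60 thresholds and the fallback outcome name are illustrative assumptions to be agreed with risk owners.

```python
import logging
from dataclasses import dataclass
from typing import Callable, Optional

logger = logging.getLogger("decisioning")


@dataclass
class Decision:
    outcome: str
    route: str                   # "automated", "human_review" or "fallback"
    confidence: Optional[float]  # None when the model could not be called


def decide(
    predict: Callable[[dict], tuple[str, float]],
    case: dict,
    auto_threshold: float = 0.90,    # hypothetical: act automatically at or above this
    review_threshold: float = 0.60,  # hypothetical: below this, ignore the model output
    fallback_outcome: str = "refer_to_manual_process",
) -> Decision:
    """Route one prediction by confidence and degrade safely if the model fails."""
    try:
        label, confidence = predict(case)
    except Exception:
        # Log loudly instead of failing silently, then use the existing manual path.
        logger.exception("model call failed; using fallback")
        return Decision(fallback_outcome, "fallback", None)
    if confidence >= auto_threshold:
        return Decision(label, "automated", confidence)
    if confidence >= review_threshold:
        # Human-in-the-loop: the model's suggestion goes to a reviewer for sign-off.
        return Decision(label, "human_review", confidence)
    return Decision(fallback_outcome, "fallback", confidence)
```

The key design choice is that every path returns an explicit route, so downstream reporting can show how often the system acted alone, deferred to people, or fell back.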

Data Bias Identification and Mitigation

Bias is rarely obvious during early testing. It tends to emerge over time and at scale.

- Partners should assess training data for imbalance and hidden patterns

- Outputs should be reviewed across different segments to identify skew

- AI bias mitigation techniques should be applied before and after deployment

This discipline protects both outcomes and reputation.
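Reviewing outputs across segments can start with something as simple as comparing positive-outcome rates. The sketch below assumes decisions are available as hypothetical `(segment, outcome)` pairs and flags any segment whose rate falls below a chosen fraction of the best-performing segment's rate. The 0.8 ratio is an illustrative assumption, not a UK legal test, and a real review would also look at error rates and sample sizes.

```python
from collections import defaultdict


def positive_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per segment, from (segment, outcome) pairs."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for segment, outcome in records:
        totals[segment] += 1
        positives[segment] += int(outcome)
    return {s: positives[s] / totals[s] for s in totals}


def flag_skew(records: list[tuple[str, bool]], min_ratio: float = 0.8) -> list[str]:
    """Segments whose positive rate is below min_ratio times the best segment's rate."""
    rates = positive_rates(records)
    best = max(rates.values())
    if best == 0:
        return []  # no positive outcomes anywhere, so no relative skew to measure
    return sorted(s for s, r in rates.items() if r / best < min_ratio)


# Hypothetical sample: segment A approved 8 of 10, segment B approved 5 of 10.
records = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(positive_rates(records), flag_skew(records))
```

Running the same check before deployment on validation data and afterwards on live decisions covers both halves of the mitigation point above.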

Robustness and Stress Testing

Enterprise systems face conditions that test environments rarely capture.

- Models should be tested against edge cases and abnormal inputs

- Performance should be assessed as data distributions change

- Stress testing helps reveal brittleness before it reaches production

Robust systems survive change. Fragile ones do not.
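A basic stress harness covers two of the points above: stability under small input noise, and behaviour on abnormal inputs. This is a sketch under stated assumptions: the model is a hypothetical `predict` callable over a list of numeric features, and the 5% noise, 0.1 tolerance and edge cases are placeholders. Here any exception or non-finite output counts as a failure; a production system might instead expect a clear validation error for inputs such as NaN.

```python
import math
import random
from typing import Callable


def stress_test(
    predict: Callable[[list[float]], float],
    baseline_inputs: list[list[float]],
    noise: float = 0.05,      # hypothetical: up to +/-5% relative noise per feature
    max_shift: float = 0.1,   # hypothetical: largest acceptable change in output
    seed: int = 0,
) -> dict:
    """Measure prediction stability under noise and check abnormal inputs."""
    rng = random.Random(seed)  # seeded so the test is repeatable
    unstable = 0
    for x in baseline_inputs:
        perturbed = [v * (1 + rng.uniform(-noise, noise)) for v in x]
        if abs(predict(perturbed) - predict(x)) > max_shift:
            unstable += 1

    width = len(baseline_inputs[0])
    edge_cases = {
        "zeros": [0.0] * width,
        "huge": [1e12] * width,
        "negative": [-1.0] * width,
        "nan": [math.nan] * width,
    }
    edge_failures = []
    for name, x in edge_cases.items():
        try:
            if not math.isfinite(predict(x)):
                edge_failures.append(name)
        except Exception:
            edge_failures.append(name)
    return {"unstable_share": unstable / len(baseline_inputs), "edge_failures": edge_failures}


report = stress_test(lambda x: sum(x) / len(x), [[0.5, 0.5], [0.2, 0.4]])
print(report)
```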

Integration Strategy With Enterprise Systems

AI creates value only when it fits into existing operations.

- Models should integrate with core platforms such as ERP, CRM, and data layers

- Outputs must appear where decisions are made, not in separate dashboards

- Latency, security, and access controls should be designed upfront

When integration is treated as an afterthought, adoption usually suffers.

A machine learning development partner’s methodology is revealed through these choices. The right approach balances performance with reliability, transparency, and fit within the enterprise ecosystem rather than optimising for models alone.

In short, a strong AI implementation partner in the UK plans for failure, not just deployment.

Technologies And Tools Used In AI Development

The tools an AI partner uses matter less than how they are applied. In enterprise environments, technology choices are typically shaped by security, scalability, explainability, and integration requirements rather than preference alone.

Most AI development partners work across a flexible stack that includes:

- Foundation Models And Platforms

Providers and platforms such as OpenAI, Anthropic, and Google Vertex AI are commonly used for large language model workloads, depending on governance, hosting, and data control needs.

- Model Development Frameworks

Libraries like PyTorch and TensorFlow are widely adopted for training, fine-tuning, and deploying machine learning models at scale.

- Orchestration And Application Layers

Tools such as LangChain enable structured workflows, tool calling, and retrieval-based reasoning in production-grade AI applications.

- Retrieval And Knowledge Augmentation

Retrieval-augmented generation (RAG), supported by vector databases, is used to ground AI outputs in enterprise data while maintaining control over accuracy and provenance.

- Developer Productivity And Experimentation Tools

Platforms including Cursor.ai and Windsurf are often used to accelerate prototyping and developer workflows, particularly during early-stage experimentation.

In mature enterprise delivery, these tools are interchangeable. What differentiates a strong AI partner is the ability to select, combine, and govern them in ways that align with security policies, data residency requirements, and long-term maintainability.
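To illustrate the retrieval step of RAG without tying it to any vector database or model provider, the sketch below ranks document chunks by cosine similarity to a question and builds a prompt that keeps source ids for provenance. The `embed` function is a deliberately crude stand-in (lower-cased word counts); a real system would call an embedding model and store vectors in a vector database. All names and the sample policy text are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: dict[str, str], k: int = 2) -> list[tuple[str, float]]:
    """Ids and similarity scores of the k chunks closest to the query."""
    q = embed(query)
    scored = [(doc_id, cosine(q, embed(text))) for doc_id, text in chunks.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


def build_prompt(query: str, chunks: dict[str, str], k: int = 2) -> str:
    """Ground the question in retrieved chunks, keeping their ids for citation."""
    context = "\n".join(f"[{doc_id}] {chunks[doc_id]}" for doc_id, _ in retrieve(query, chunks, k))
    return f"Answer using only the sources below and cite their ids.\n{context}\n\nQuestion: {query}"


chunks = {
    "policy-1": "Refunds are issued within 14 days of a return.",
    "policy-2": "Staff holiday requests need manager approval.",
    "policy-3": "Returns must include the original receipt.",
}
print(build_prompt("How many days until refunds are issued?", chunks, k=1))
```

Keeping the chunk ids in the prompt is what lets answers be traced back to specific enterprise documents later.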

Cost Structures and Budget Planning

Cost is often discussed late in AI initiatives, but in practice it shapes almost every delivery decision. For UK enterprises, budgeting for AI is less about finding the lowest number and more about understanding AI partner cost in the UK early to prevent budget shock later.

What Influences AI Partner Cost

AI project costs vary widely because the work behind them does too.

- Project size and scope: Narrow use cases with limited data and integration needs sit at the lower end, while multi-system, enterprise-wide initiatives push costs up quickly.

- Data maturity: Clean, well-governed data reduces effort. Fragmented or low-quality data increases time spent on engineering and validation.

- Integration complexity: Connecting AI to ERP, CRM, legacy systems, or real-time workflows adds both engineering and testing overhead.

- Governance and security requirements: Regulated environments require more documentation, controls, and review cycles, which increases delivery effort.

UK Cost Benchmarks And Hidden Cost Categories

For most UK enterprises, AI partner costs typically range between $40,000 and $400,000, depending on complexity and ambition.

Hidden costs often appear when:

- Data preparation is underestimated

- Internal teams need more support than planned

- Security, compliance, or audit requirements expand mid-project

- Ongoing monitoring and optimisation are not budgeted upfront

These costs are rarely visible in initial proposals but have a real impact on timelines and outcomes.

How To Build Realistic Budgets And Contingency Buffers

AI initiatives benefit from budgets that expect change rather than resist it.

- Allocate contingency for data issues and integration rework

- Budget separately for post-launch monitoring and optimisation

- Avoid committing the full budget before early milestones are validated

Enterprises that plan for uncertainty tend to make better decisions under pressure. A realistic budget does not just fund delivery. It protects momentum when assumptions are challenged and adjustments become necessary.
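The arithmetic behind these three points can be sketched in a few lines. Every percentage below (data and integration contingency, annual run cost as a share of build, and the milestone split) is a hypothetical input for illustration, not a benchmark.

```python
def plan_budget(
    build_estimate: float,
    data_contingency: float = 0.15,         # hypothetical buffer for data issues
    integration_contingency: float = 0.10,  # hypothetical buffer for integration rework
    annual_run_share: float = 0.20,         # hypothetical yearly monitoring/optimisation cost
    milestone_weights: tuple[float, ...] = (0.2, 0.3, 0.5),
) -> dict:
    """Add contingency, budget run costs separately, and stage funding by milestone."""
    if abs(sum(milestone_weights) - 1.0) > 1e-9:
        raise ValueError("milestone weights must sum to 1.0")
    contingency = build_estimate * (data_contingency + integration_contingency)
    delivery_total = build_estimate + contingency
    # Post-launch monitoring and optimisation sit outside the delivery budget.
    annual_run = build_estimate * annual_run_share
    # Funds are released in tranches, each tied to a validated milestone.
    tranches = [round(delivery_total * w, 2) for w in milestone_weights]
    return {
        "contingency": round(contingency, 2),
        "delivery_total": round(delivery_total, 2),
        "annual_run": round(annual_run, 2),
        "tranches": tranches,
    }


print(plan_budget(100_000))
```

Staging the tranches means only the first slice of the delivery budget is committed before early assumptions about data and integration have been tested.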

Security, Compliance And Governance Considerations

For an enterprise AI development partner in the UK, governance is part of delivery, not a final review step. Security, compliance, and governance shape whether an AI initiative is approved, deployed, and allowed to scale inside the enterprise.

UK GDPR, Algorithmic Fairness, And Documentation Needs

UK GDPR places clear obligations on how data is used, processed, and explained in automated systems. This becomes critical when AI influences customer outcomes, pricing, eligibility, or risk decisions.

From a technical standpoint, enterprises should expect:

- Clear data lineage showing where training and inference data comes from

- Controls around data minimisation, retention, and lawful processing

- Design choices that support fairness testing and bias monitoring over time

- Documentation that explains model purpose, inputs, limitations, and intended use

Well-documented systems are easier to defend internally and externally when questions arise.
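Documentation is easier to keep current when it lives alongside the code. The sketch below is a minimal, hypothetical model-card record covering purpose, inputs, limitations and intended use, plus data lineage, lawful basis and retention. The field names and example values are assumptions for illustration, not a regulatory template.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    name: str
    version: str
    purpose: str
    intended_use: str
    inputs: list[str]
    training_data_sources: list[str]  # lineage: where training data came from
    lawful_basis: str                 # as recorded by the data protection team
    retention: str
    limitations: list[str] = field(default_factory=list)
    fairness_checks: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Fields left empty, so gaps surface before review rather than during it."""
        return [k for k, v in asdict(self).items() if not v]

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    name="churn-risk",
    version="1.3.0",
    purpose="Prioritise retention calls",
    intended_use="Advisory only; staff make the final decision",
    inputs=["tenure_months", "support_tickets"],
    training_data_sources=["CRM accounts snapshot"],
    lawful_basis="",
    retention="24 months",
)
print(card.missing_fields())
```

A check like `missing_fields` can run in the release pipeline, so a model cannot ship with blank documentation fields.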

Audit Capabilities And Explainability Expectations

AI systems increasingly fall within audit scope, especially in regulated industries. Explainability is not just a regulatory concept. It is a practical requirement for review and accountability.

Strong technical practices include:

- Model explainability techniques appropriate to risk level and use case

- Decision traceability that links outputs back to inputs and logic

- Logs that support retrospective review without manual reconstruction

- The ability to answer why a decision was made, not just what the output was

When explainability is weak, deployment often stalls regardless of model performance.
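Decision traceability usually comes down to writing a structured record for every automated decision. The Python sketch below assumes a JSON-style log. It also assumes that feature attributions (`top_factors`) already come from whatever explainability method the team has chosen. The function names and fields are illustrative only. Hashing the canonicalised inputs gives a compact fingerprint, which can match a decision to the exact input payload held in other systems.

```python
import hashlib
import json
from datetime import datetime, timezone


def log_decision(model_version, inputs, output, top_factors, reviewer=None):
    """Build one audit record linking an output to its inputs and reasons.

    top_factors is assumed to come from the team's chosen explainability
    method (for example, per-feature attributions); here it is a plain dict.
    """
    canonical = json.dumps(inputs, sort_keys=True)
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_hash": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "inputs": inputs,
        "output": output,
        "top_factors": top_factors,  # records why, not just what
        "human_reviewer": reviewer,  # None when no person was involved
    }


def explain(record, n=2):
    """Render the n strongest factors as a plain-language reason."""
    ranked = sorted(record["top_factors"].items(), key=lambda kv: abs(kv[1]), reverse=True)
    reasons = ", ".join(f"{name} ({weight:+.2f})" for name, weight in ranked[:n])
    return f"{record['output']} (model {record['model_version']}): driven mainly by {reasons}"


record = log_decision(
    "v1.3",
    {"income": 42000, "missed_payments": 3},
    "refer",
    {"missed_payments": 0.61, "income": -0.22, "tenure": 0.05},
)
print(json.dumps(record))  # one line per decision, e.g. appended to a JSON Lines file
print(explain(record))
```

Storing the model version with each decision is what makes retrospective review possible after retraining. Without it, nobody can say which model produced a given output.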

Risk Escalation Frameworks And Governance Checkpoints

Enterprise AI must assume that things will go wrong at some point. Governance defines how the organisation responds when they do.

Effective governance frameworks typically include:

- Defined thresholds for acceptable performance and risk

- Clear escalation paths when models behave unexpectedly

- Human oversight points for high-impact or sensitive decisions

- Regular governance checkpoints tied to deployment, scaling, and retraining

From a delivery perspective, these controls reduce uncertainty. They allow AI systems to operate within known boundaries rather than relying on informal judgement.
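Escalation paths are easier to follow when the thresholds are written down as code or configuration rather than left to judgement. Here is a minimal Python sketch. It assumes hypothetical threshold values and a simple accuracy-plus-drift view of model health. Real values would come from the organisation's own governance forum, and the action names are illustrative.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    HUMAN_REVIEW = "route to human review"
    ESCALATE = "escalate to model owner"
    ROLLBACK = "roll back to previous version"


# Hypothetical thresholds; real values are agreed through governance, not hard-coded.
THRESHOLDS = {
    "min_accuracy": 0.85,       # below this, escalate
    "critical_accuracy": 0.75,  # below this, roll back
    "max_drift": 0.2,           # drift score above this triggers escalation
}


def governance_action(accuracy, drift, high_impact, thresholds=THRESHOLDS):
    """Map monitored metrics and decision sensitivity to a predefined response."""
    if accuracy < thresholds["critical_accuracy"]:
        return Action.ROLLBACK
    if accuracy < thresholds["min_accuracy"] or drift > thresholds["max_drift"]:
        return Action.ESCALATE
    if high_impact:
        return Action.HUMAN_REVIEW  # sensitive decisions keep a human in the loop
    return Action.CONTINUE


print(governance_action(accuracy=0.91, drift=0.05, high_impact=False).value)
print(governance_action(accuracy=0.82, drift=0.05, high_impact=False).value)
```

The checks are ordered from most to least severe, so a failing model is never routed to routine human review when it should be rolled back.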

In UK enterprises, strong security and governance do not slow AI down. They make it possible to deploy with confidence and sustain trust as systems grow in influence and reach.

Common Mistakes And How To Avoid Them

Most AI initiatives that stall do so for reasons that are easy to recognise in hindsight. The same patterns appear across industries and delivery models. Breaking them down clearly helps teams spot risk early and correct course before cost and confidence erode.

Over-Engineering Before Validating Business Value

This mistake often comes from good intentions. Teams want to build something robust, advanced, and future-ready from day one.

What typically goes wrong:

- Models are optimised for accuracy without linking results to business outcomes

- Engineering effort grows before stakeholders agree on what success means

- Complexity makes systems harder to explain, govern, or adapt

How to avoid it

Start with the simplest model that can answer a real business question. Let proven impact justify additional sophistication, not the other way around.
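A simple way to apply this is to measure every candidate model against the simplest possible reference, then express the difference in business terms. The Python sketch below assumes a classification problem and uses a baseline that always predicts the most common outcome. `value_per_correct` and `extra_cost` are hypothetical figures agreed with stakeholders, and the function names are illustrative.

```python
from collections import Counter


def majority_baseline(labels):
    """Accuracy of always predicting the most common outcome: the floor to beat."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def correct_count(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred))


def complexity_pays_off(y_true, simple_pred, heavy_pred, value_per_correct, extra_cost):
    """True only if the extra correct decisions are worth more than the extra cost.

    value_per_correct and extra_cost are assumed business inputs,
    not model metrics.
    """
    extra_correct = correct_count(y_true, heavy_pred) - correct_count(y_true, simple_pred)
    return extra_correct * value_per_correct > extra_cost


y_true = [1, 0, 0, 0, 1, 0, 0, 0]
simple = [1, 0, 0, 0, 0, 0, 0, 0]  # e.g. a rules-based or linear model
heavy = [1, 0, 0, 0, 1, 0, 0, 0]   # e.g. a more complex model with higher upkeep
print(majority_baseline(y_true))
print(complexity_pays_off(y_true, simple, heavy, value_per_correct=100, extra_cost=500))
```

In this toy case the heavier model gets one more decision right, but that is not enough to cover its extra running cost. The simpler model stays in place until the evidence changes.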

Treating AI As a Standalone System

AI that lives outside core operations rarely survives for long.

Common symptoms include:

- Outputs delivered through separate tools or dashboards

- Manual steps required to act on predictions

- Confusion over who owns outcomes once decisions are automated

How to avoid it

Design AI around existing workflows. Integration with ERP, CRM, and operational systems should be part of the initial scope, not a later enhancement.

Underestimating Data Preparation Effort

Data challenges are often assumed to be temporary. They rarely are.

Typical issues:

- Inconsistent data formats across systems

- Poor data quality only discovered during training

- Unclear ownership delaying access or approvals

How to avoid it

Treat data engineering as a core workstream. Validate data availability, quality, and ownership before committing to timelines.
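Basic data readiness checks can be automated cheaply before timelines are agreed. The following Python sketch assumes rows arrive as dictionaries and uses an illustrative 5% missing-value tolerance. It reports missing values, format problems, and fields with no accountable owner. The function names, the tolerance, and the ISO date rule are all assumptions for illustration.

```python
from datetime import datetime


def is_iso_date(value):
    """True if value is a YYYY-MM-DD string (one example format rule)."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False


def data_readiness(records, required_fields, owners, validators=None, max_missing_rate=0.05):
    """Summarise completeness, format consistency, and ownership per field.

    owners maps field -> accountable owner; validators maps field -> check function.
    """
    validators = validators or {}
    issues = []
    n = len(records)
    for name in required_fields:
        present = [r.get(name) for r in records if r.get(name) not in (None, "")]
        missing_rate = 1 - len(present) / n if n else 1.0
        if missing_rate > max_missing_rate:
            issues.append(f"{name}: {missing_rate:.0%} missing")
        check = validators.get(name)
        if check:
            bad = sum(1 for v in present if not check(v))
            if bad:
                issues.append(f"{name}: {bad} value(s) in an unexpected format")
        if not owners.get(name):
            issues.append(f"{name}: no accountable data owner")
    return {"rows": n, "ready": not issues, "issues": issues}


rows = [
    {"id": 1, "signup": "2024-01-05"},
    {"id": 2, "signup": "05/01/2024"},  # a different system's date format
    {"id": 3, "signup": None},
    {"id": 4, "signup": "2024-02-10"},
]
report = data_readiness(rows, ["id", "signup"], owners={"id": "CRM team"},
                        validators={"signup": is_iso_date})
print(report)
```

Running a check like this against real extracts turns "data should be fine" into a list of specific issues that can be owned and scheduled.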

Ignoring Governance Until Late In The Process

Governance rarely blocks AI at the start. It blocks it at scale.

Late-stage problems often include:

- Legal or risk teams raising concerns after development

- Explainability gaps preventing approval

- Audit requirements forcing redesign

How to avoid it

Involve governance stakeholders early. Build explainability, documentation, and escalation paths into the delivery plan from the beginning.

No Clear Ownership After Go-Live

Once AI systems are live, accountability can quickly blur.

This usually shows up as:

- No owner for performance degradation

- Delays in responding to incidents

- Uncertainty around retraining or updates

How to avoid it

Define ownership clearly. Decide who monitors performance, who approves changes, and how issues are escalated before launch.

Avoiding these mistakes does not require slowing delivery. It requires sequencing decisions correctly. When teams validate value early, plan for integration, and treat governance as part of delivery, AI initiatives are far more likely to reach dependable enterprise use.

Post-Selection Onboarding And Transition

The role of an AI implementation partner in the UK becomes most visible after contracts are signed. Once the paperwork is done, attention often jumps straight to delivery, but the early transition period is what sets expectations on both sides. Taking a little more care here usually saves a lot of time later.

Internal Preparation For Partner Collaboration

Before work gathers pace, it helps to be clear about how the relationship will actually run.

- Someone needs to own decisions, not just tasks

- Stakeholders should agree on priorities so the partner is not pulled in different directions

- Access to data, systems, and people should be ready early, not requested mid-sprint

- Communication should follow a predictable cadence rather than ad-hoc calls

When this groundwork is missing, the first few weeks are often spent clearing blockers instead of making progress.

Knowledge Transfer And Documentation Expectations

AI systems become difficult to manage when understanding sits with only one group. Knowledge needs to move steadily, not all at once at the end.

- Ask for explanations of why choices were made, not just what was built

- Keep a simple record of key decisions, changes, and assumptions

- Use walkthroughs to let internal teams see how the system behaves in practice

- Treat documentation as something that evolves alongside the work

This makes it easier for teams to step in, ask the right questions, and make changes later.

Setting Performance Reviews And KPIs

Once systems are live, it is easy for them to fade into the background. Regular check-ins keep them grounded in reality.

- Review outcomes that matter to the business, not only technical indicators

- Look at performance as data and usage patterns shift

- Be clear about when retraining, tuning, or escalation is needed
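Many teams make "performance as data shifts" measurable by tracking distribution drift for key inputs. The Python sketch below computes the population stability index (PSI) between training and live values using equal-width bins. The bin count, the small floor for empty bins, and the 0.1 / 0.25 action bands are commonly used rules of thumb rather than fixed standards. Treat them as assumptions to tune.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a feature's training values (expected) and live values (actual).

    Uses equal-width bins over the training range; live values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # small floor avoids log(0) and division by zero for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def review_action(psi):
    """Map PSI to an agreed response (assumed bands, tune per use case)."""
    if psi < 0.1:
        return "no action"
    if psi < 0.25:
        return "investigate at next review"
    return "escalate and assess retraining"


training = [float(x) for x in range(100)]
live = [x + 50 for x in training]  # a clear upward shift
psi = population_stability_index(training, live)
print(round(psi, 3), review_action(psi))
```

Agreeing on the action bands at onboarding means that the periodic review only has to confirm a response, not debate one.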

Handled well, onboarding and transition turn delivery into a shared effort rather than a handover. That early clarity often makes the difference between a partnership that feels reactive and one that feels dependable.


Making A Confident, Long-Term AI Partner Decision

Choosing the right partner is often the difference between an AI initiative that stays experimental and one that becomes part of everyday operations. For UK enterprises, the decision to hire an AI development partner carries long-term implications for risk, scalability, and trust. The most effective choices are usually grounded in delivery maturity, governance readiness, and the ability to operate under real enterprise constraints.

At Appinventiv, our work as an enterprise AI development partner in the UK is shaped by this reality. We focus on helping organisations move beyond pilots by designing AI systems that integrate into existing platforms, meet regulatory expectations, and continue to perform as conditions change. Our experience delivering complex, data-driven systems for regulated and high-scale environments, including platforms like the Flynas airline app, reflects this execution-first approach.

Ultimately, selecting an AI partner is not about finding the most advanced model or the loudest promise. It is about choosing a team that understands how AI behaves in production, how enterprises manage risk, and how value is sustained over time. When those elements align, AI stops being an initiative and starts becoming a dependable capability.

If you are evaluating your next steps, a structured conversation with our experienced team can help clarify feasibility, risk, and the most practical path forward.

FAQs

Q. How Do I Choose the Right AI Development Partner in the UK?

A. Choosing the right partner starts with understanding delivery capability, not model sophistication. UK enterprises should look for partners who have taken AI systems into production, worked under regulatory constraints, and supported systems post-launch.

The strongest indicator is whether the partner can explain trade-offs clearly, including where AI may not be appropriate. That candour is central to choosing an AI development partner in the UK whose work holds up beyond pilots.

Q. What Factors Should I Consider Before Hiring an AI Development Company in the UK?

A. Before hiring, assess readiness on three fronts: data maturity, internal ownership, and governance expectations. Many organisations move too quickly without validating these basics.

It also helps to understand whether you need a vendor or an AI technology integration partner who can embed AI into existing platforms, workflows, and operating models rather than delivering isolated components.

Q. What Questions Should I Ask an AI Development Partner Before Signing a Contract?

A. The most useful questions are practical rather than technical.

Ask how the partner:

- Handles data quality issues

- Manages model performance over time

- Supports explainability and audit requests

- Responds when models underperform

Clear answers here reveal whether you are dealing with a delivery partner or a short-term AI solutions provider focused only on the build.

Q. How Do I Evaluate the Technical Expertise of an AI Development Partner?

A. Technical evaluation should focus on systems, not algorithms. Look for evidence of production deployments, MLOps practices, and integration depth.

A structured approach to comparing AI development services in the UK helps here, using scorecards that weigh data engineering, deployment maturity, security, and governance alongside model capability.

Q. What Makes a Good AI Development Partner for Enterprise Projects?

A. For enterprise environments, a good partner understands scale, scrutiny, and continuity. They design for failure, explainability, and long-term operation rather than one-off success.

The best AI development partner in the UK is usually the one who is most realistic about constraints and most comfortable operating within them.

Q. What Are the Risks of Choosing the Wrong AI Development Partner?

A. The biggest risk is stalled progress. Poor partner choices often lead to pilots that never scale, repeated rework, or late-stage governance blockers.

Other risks include hidden technical debt, weak security practices, and long-term dependency without knowledge transfer, all of which increase cost and operational exposure.

Q. What Are the Common Mistakes Companies Make When Hiring an AI Partner?

A. Common mistakes include over-engineering early, underestimating data preparation, and treating AI as a standalone system.

Another frequent issue is focusing on credentials rather than delivery evidence, especially when selecting a machine learning development partner without verifying real-world deployment experience.

Q. How Do UK Regulations Impact AI Development Partnerships?

A. UK regulations influence how AI systems are designed, approved, and audited. UK GDPR requirements around transparency in automated decision-making, lawful data use, and accountability shape both technical and governance decisions.

Partners must be able to support AI project governance and compliance discussions from the outset rather than reacting late in the process.

Q. How Do AI Partners Ensure Scalability and Long-Term Maintainability?

A. Scalability depends on architecture and operations, not just model performance. Strong partners build automated pipelines, monitoring, retraining workflows, and rollback mechanisms.

They also align delivery with the organisation's AI roadmap, ensuring systems can evolve as data, regulation, and business needs change.

Q. How Do AI Development Partners Handle Data Security and Compliance in the UK?

A. Security and compliance are built into design, not added later. This includes encryption, access control, audit logging, and clear data residency practices.

Partners experienced in AI compliance and integration ensure AI systems can pass internal security reviews and external audits without redesign, making long-term operation viable.
