- How Are Enterprises Scaling Generative AI Adoption Beyond Pilots?

- What Does an Enterprise Generative AI Implementation Roadmap Look Like?

- Phase 1: Discover

- Phase 2: Prioritize

- Phase 3: Architect

- Phase 4: Pilot

- Phase 5: Scale and Optimize

- What Architecture Enables Scalable LLM and Generative AI Implementation?

- Why Are Data and Governance Critical to Generative AI Strategy Implementation?

- How Can CFOs Measure Generative AI ROI for Enterprises?

- What Are the True Generative AI Implementation Costs for Enterprises?

- What Challenges in Generative AI Implementation Prevent Enterprise Scale?

- What Are the Best Practices for Generative AI Adoption in Enterprises?

- How Should Enterprises Choose a Partner for Generative AI Strategy Implementation?

- How Does Appinventiv Deliver Enterprise Generative AI ROI?

- Frequently Asked Questions

Key takeaways:

- Enterprise generative AI implementation rates now exceed 80%, yet fewer than 35% of programs deliver board-defensible ROI.

- The 2026 shift is from chatbots to autonomous AI agents embedded inside core enterprise workflows.

- High-performing organizations achieve 6–12 month payback by combining RAG architectures, LLMOps cost governance, and human-in-the-loop controls.

- Generative AI strategy implementation succeeds when business owners, not IT, own the outcome metrics.

- CFO-ready programs track token costs, inference spend, automation yield, and financial KPIs.

The board wants to hear one thing next quarter: “How is generative AI changing our numbers?”

Here’s the problem: Enterprise generative AI implementation rates now exceed 80%, yet fewer than 35% of programs deliver board-defensible ROI. Most executives can’t answer whether their $2-5M AI investment improved margins, reduced costs, or accelerated revenue.

The gap costs real money: Organizations without clear ROI tracking waste 40-60% of their AI budget on disconnected pilots that never scale. Meanwhile, high-performing enterprises achieve 6-12 month payback by combining RAG architectures, LLMOps cost governance, and human-in-the-loop controls.

This guide shows you how to join the winning 35%.

You’ll discover:

- A 5-phase implementation framework that moves from pilot to production in 6-12 months

- CFO-ready ROI formulas tracking token costs, automation yield, and financial KPIs

- Architecture patterns that prevent the #1 killer of AI programs: uncontrolled inference costs

- Real cost ranges for enterprise deployments (3-5 use cases: $700K-$2M Year 1)

- How to avoid the 7 structural barriers that stall 65% of AI initiatives

In short: Moving from “We’re trying AI” to “AI improved our EBITDA by $4.7M this quarter.”


How Are Enterprises Scaling Generative AI Adoption Beyond Pilots?

AI is already in motion across enterprises. Pilots are alive. Teams are testing new tools. With around 80% of companies already implementing generative AI, adoption is no longer a question of if. It is a question of how and where to scale.

Yet many programs still move in isolation. Use cases live inside departments. Ownership is unclear. Data readiness differs. Governance often arrives late. This leaves enterprise adoption of generative AI active in effort, but uneven in results.

That is the reality of generative AI enterprise adoption today.

Leaders making progress treat adoption as an operating shift. They tie initiatives to P&L goals, assign accountability early, and build a defensible AI roadmap. These generative AI enterprise adoption trends separate scattered pilots from execution that delivers generative AI ROI.

Use the checklist below to see where you stand.

| Area | Diagnostic Question |
|---|---|
| Strategy & Value Themes | Do we have 3–5 P&L-linked value themes for generative AI? |
| Use Case Portfolio | Have we prioritized high-value, high-feasibility initiatives? |
| Business Ownership | Does a business leader own every use case? |
| Data Readiness | Are the required data sources clean, governed, and accessible? |
| Tech & Integration | Can we integrate LLM and generative AI into core systems? |
| Governance & Risk | Do we have risk tiers and guardrails defined? |
| People & Change | Is workforce enablement planned for AI-assisted workflows? |
| Measurement | Have we defined generative AI ROI for enterprises? |
| Roadmap | Do we have a documented enterprise generative AI roadmap? |

Most enterprises reviewing this checklist discover gaps in at least two areas — usually governance, ROI tracking, or business ownership. Identifying these early prevents late-stage implementation resets.


What Does an Enterprise Generative AI Implementation Roadmap Look Like?

A generative AI strategy for business implementation succeeds only when execution is clear and aligned. Many enterprises stall as teams move in different directions, technology outpaces data readiness, and governance follows experimentation.

With generative AI enterprise adoption trends showing 90% of tech workers using AI tools and global AI investment up over 40% last year, momentum is undeniable.

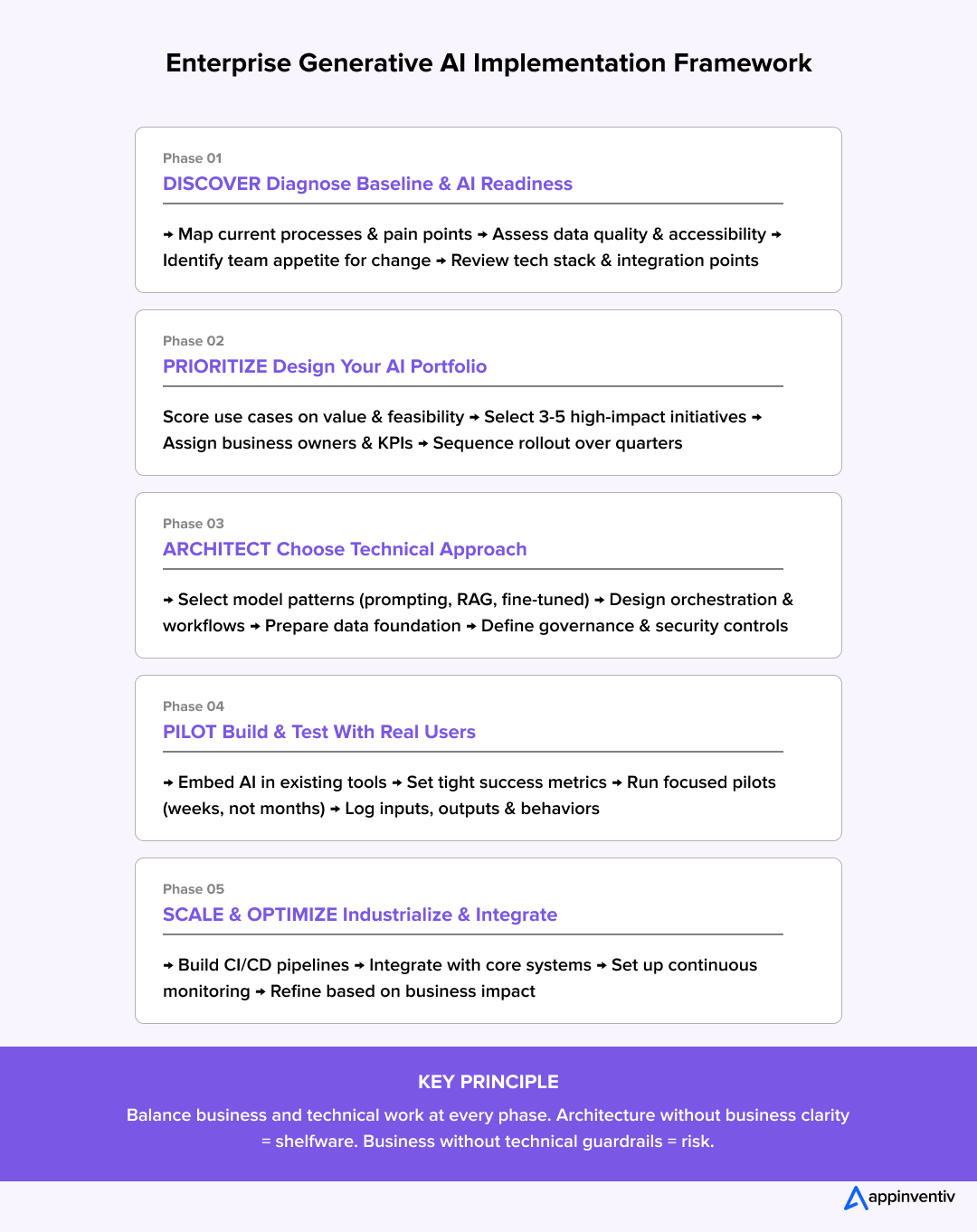

This generative AI implementation framework consolidates execution into five disciplined phases. Each phase combines business alignment, technical readiness, and governance design to ensure scalable enterprise generative AI adoption and measurable ROI.

Many enterprise AI initiatives fail during this stage because teams begin building before diagnosing data maturity, business accountability, or workflow readiness. The result is technically impressive pilots that never reach operational scale.

Phase 1: Discover

Key Focus: Diagnose Baseline, Value Gaps, and AI Readiness

Effective generative AI strategy implementation starts with an honest understanding of current operations, data maturity, and organizational readiness.

This phase maps where value leaks today and what prevents transformation. Enterprises examine key processes through three lenses:

- What volume and cost sit in the process

- How structured or unstructured the data is

- How dependent the process is on human judgment

On the technical side, teams map core systems, data stores, integration patterns, existing analytics assets, API availability, and security constraints.

Equally important is people readiness. Identifying which teams are already experimenting with AI and where change appetite exists prevents selecting use cases that lack a natural operational home.

Outcome: A baseline diagnostic covering process value gaps, data readiness, technical constraints, and organizational maturity. This forms the foundation for the disciplined implementation of generative AI.

Phase 2: Prioritize

Key Focus: Design the Generative AI Portfolio and Business Cases

With the baseline established, enterprises move from scattered ideas to a structured portfolio. Not every promising use case deserves immediate investment.

Initiatives are scored across:

- Value potential, measured by revenue, cost, or risk impact

- Feasibility, measured by data availability, technical complexity, and regulatory friction

- Time to value, measured by the speed of pilot results

- Change impact, measured by the degree of workflow disruption

For shortlisted initiatives, teams develop a one-page enterprise generative AI business case defining target users, workflows, required data sources, and expected outcomes. This forces clarity before engineering begins.
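The scoring step above can be sketched as a simple weighted model. This is a minimal illustration, not a prescribed method: the weights, the 1-5 scale, the inversion of change impact, and the two sample use cases are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical weights; the article names the four criteria but not their weighting.
WEIGHTS = {"value": 0.4, "feasibility": 0.3, "time_to_value": 0.2, "change_impact": 0.1}


@dataclass
class UseCase:
    name: str
    value: int           # 1-5: revenue, cost, or risk impact
    feasibility: int     # 1-5: data availability, complexity, regulatory friction
    time_to_value: int   # 1-5: higher means faster pilot results
    change_impact: int   # 1-5: higher means MORE workflow disruption

    def score(self) -> float:
        # Disruption counts against a use case, so invert it (5 -> 1, 1 -> 5).
        ease_of_change = 6 - self.change_impact
        total = (
            WEIGHTS["value"] * self.value
            + WEIGHTS["feasibility"] * self.feasibility
            + WEIGHTS["time_to_value"] * self.time_to_value
            + WEIGHTS["change_impact"] * ease_of_change
        )
        return round(total, 2)


def rank(use_cases: list[UseCase]) -> list[UseCase]:
    """Return use cases ordered from highest to lowest weighted score."""
    return sorted(use_cases, key=lambda u: u.score(), reverse=True)


# Sample portfolio with made-up ratings.
portfolio = [
    UseCase("Support agent assist", value=4, feasibility=4, time_to_value=5, change_impact=2),
    UseCase("Contract review", value=5, feasibility=2, time_to_value=2, change_impact=4),
]

for use_case in rank(portfolio):
    print(f"{use_case.name}: {use_case.score()}")
```

In practice the weights would be agreed with the business owners before scoring begins, so that the ranking reflects the portfolio's priorities rather than the scorer's.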

Outcome: A sequenced generative AI implementation roadmap containing a focused set of high-impact use cases, assigned business owners, and defined investment envelopes.

Phase 3: Architect

Key Focus: Select Technical Approaches, Data Foundations, Platforms, and Governance

This phase defines how each use case will be built safely, scalably, and cost-effectively. In 2026, the difference between successful LLM and generative AI implementation and stalled pilots is an architecture that enables AI agency, not model wrappers.

Not every use case requires the same technical approach. Enterprises balance:

- Prompting foundation models for low-risk tasks

- Retrieval-augmented generation (RAG) for governed enterprise knowledge access

- Fine-tuned or domain-specific models for regulated and specialized workflows

Architectural design extends beyond model selection. It includes agentic orchestration layers that allow AI to reason through tasks, call enterprise APIs, and execute supervised workflows across ERP, CRM, and data platforms.

Cost control is built into the architecture through model routing logic. Simple queries are directed to lower-cost models. Complex strategic tasks are routed to high-performance models. This prevents token spend escalation while maintaining output quality.
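As a rough sketch of the routing logic described above, the example below sends short, simple prompts to a cheaper tier and everything else to a larger one. The tier names, prices, keyword hints, and word-count threshold are all hypothetical; a production router would more likely use a classifier or evaluation data than keyword matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                 # placeholder label, not a real model ID
    usd_per_1k_tokens: float  # illustrative price, not a vendor quote


SMALL = ModelTier("small-model", 0.0005)
LARGE = ModelTier("large-model", 0.01)

# Hypothetical markers of multi-step or high-stakes work.
COMPLEX_HINTS = ("analyze", "compare", "forecast", "draft contract", "reconcile")


def route(prompt: str, max_simple_words: int = 60) -> ModelTier:
    """Pick the cheaper tier unless the prompt looks long or complex."""
    text = prompt.lower()
    looks_complex = any(hint in text for hint in COMPLEX_HINTS)
    is_long = len(text.split()) > max_simple_words
    return LARGE if (looks_complex or is_long) else SMALL


def estimated_cost(tier: ModelTier, tokens: int) -> float:
    """Cost in USD for a request of the given token count."""
    return tier.usd_per_1k_tokens * tokens / 1000


print(route("What is our refund policy?").name)
print(route("Compare Q3 churn across regions and forecast Q4").name)
```

Keeping the routing rule in one function makes it easy to log which tier handled each request and to tune the threshold as quality data accumulates.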

Memory persistence is established using vector databases. This preserves user and workflow context across sessions, enabling enterprise-grade AI experiences.

In parallel, data foundations and governance are implemented:

- Identifying authoritative data sources

- Cleaning and normalizing documents

- Designing metadata, access controls, and retention policies

- Defining how data enters knowledge bases or vector stores

- Embedding auditability, permissioning, and compliance controls

Platform selection follows clear criteria:

- Integration with cloud, identity, and logging stacks

- Support for current and future model strategies

- Isolation of prompts, data, and outputs across business units

- Built-in monitoring, evaluation, and incident response

Outcome: A production-ready generative AI implementation framework combining model strategy, data pipelines, agentic orchestration, platform selection, and governance controls that scale enterprise adoption and protect ROI.

Phase 4: Pilot

Key Focus: Build, Deploy, and Validate with Real Users and Real Metrics

With strategy, architecture, and data aligned, enterprises build focused pilots embedded into real workflows.

Pilots are designed around specific user journeys, such as agents handling customer interactions or analysts preparing reports. AI capabilities are integrated directly into existing tools to drive adoption.

Technical focus areas include:

- Prompt design and rapid iteration

- Guardrails for sensitive tasks

- Logging of inputs, outputs, and system events

Before launch, teams define tight success metrics:

- Time saved per task

- Error rate reduction

- Backlog or throughput improvements

- User satisfaction and adoption
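To make these success metrics concrete, the sketch below compares a baseline period with a pilot period. All figures are invented for illustration, and the adoption measure (active users over licensed users) is one assumed definition among several possible ones.

```python
def pct_change(baseline: float, pilot: float) -> float:
    """Relative change from baseline to pilot, as a percentage."""
    return (pilot - baseline) / baseline * 100


# Hypothetical measurements taken before and during the pilot.
baseline = {"minutes_per_task": 12.0, "error_rate": 0.08, "tasks_per_day": 40}
pilot = {"minutes_per_task": 9.0, "error_rate": 0.05, "tasks_per_day": 52}

time_saved = baseline["minutes_per_task"] - pilot["minutes_per_task"]
# A drop in error rate is reported as a positive reduction.
error_reduction = -pct_change(baseline["error_rate"], pilot["error_rate"])
throughput_gain = pct_change(baseline["tasks_per_day"], pilot["tasks_per_day"])
adoption = 34 / 50  # assumed: active users divided by licensed users

print(f"Time saved per task: {time_saved:.1f} min")
print(f"Error rate reduction: {error_reduction:.1f}%")
print(f"Throughput improvement: {throughput_gain:.1f}%")
print(f"Adoption: {adoption:.0%}")
```

Capturing the baseline before launch is the important part: without it, none of these deltas can be defended later.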

Pilots run long enough to reveal performance patterns, but short enough to support a timely scale-or-stop investment decision.

Outcome: Validated use cases with proven KPIs, confirmed feasibility, and defensible assumptions for generative AI ROI for enterprises.

Phase 5: Scale and Optimize

Key Focus: Industrialize, Integrate, and Operationalize LLMOps

When pilots demonstrate value, scaling begins. This is where generative AI implementation becomes an enterprise capability.

Scaling activities include:

- Integrating AI services into core systems through APIs or event streams

- Hardening security, authentication, and authorization

- Establishing CI/CD pipelines for prompts, configurations, and model versions

- Defining ownership for incident handling, change approvals, and support

Continuous monitoring ensures sustained performance:

- Technical metrics such as latency, error rates, token usage, and model health

- Functional metrics such as output quality, policy alignment, and escalation frequency

- Business metrics based on KPIs defined in the business case
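One way to track the token-usage metric above is a small meter that attributes spend to each use case and flags when a budget threshold is approached. This is a sketch under stated assumptions: the prices, the model labels, the `UsageMeter` class, and the 80% alert threshold are hypothetical.

```python
from collections import defaultdict

# Illustrative prices in USD per 1,000 tokens; not real vendor pricing.
PRICE_PER_1K = {"small-model": 0.0005, "large-model": 0.01}


class UsageMeter:
    """Accumulates inference spend per use case against a monthly budget."""

    def __init__(self, monthly_budget_usd: float):
        self.monthly_budget_usd = monthly_budget_usd
        self.spend_by_use_case = defaultdict(float)

    def record(self, use_case: str, model: str, tokens: int) -> float:
        """Add one request's cost to the use case and return that cost."""
        cost = PRICE_PER_1K[model] * tokens / 1000
        self.spend_by_use_case[use_case] += cost
        return cost

    def total_spend(self) -> float:
        return sum(self.spend_by_use_case.values())

    def near_budget(self, threshold: float = 0.8) -> bool:
        """True once total spend reaches the given share of the budget."""
        return self.total_spend() >= threshold * self.monthly_budget_usd


meter = UsageMeter(monthly_budget_usd=100.0)
meter.record("support-assist", "large-model", 4_000_000)
print(meter.near_budget())
meter.record("doc-extraction", "large-model", 4_500_000)
print(meter.near_budget())
```

Attributing spend to a use case rather than to a team or API key keeps the run cost comparable with the KPIs defined in that use case's business case.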

Regular review cycles refine prompts, update training data, adjust guardrails, and retire underperforming use cases. A formal LLMOps or AI operations capability institutionalizes governance, cost control, and lifecycle management.

Outcome: A repeatable, governed, continuously improving generative AI capability that scales enterprise adoption, controls run-cost risk, and sustains ROI.

The five-phase generative AI implementation framework defines execution steps. Scalable success depends on an enterprise architecture that governs models, data access, security, and operating cost.

What Architecture Enables Scalable LLM and Generative AI Implementation?

LLM and generative AI implementation requires coordinated model orchestration, retrieval grounding, and cost governance. Simple prompting cannot meet enterprise-grade privacy, auditability, and hallucination-control requirements.

Enterprise-Grade Architecture Pattern

- Data Layer: Vector databases grounding models in governed enterprise knowledge

- Retrieval-Augmented Generation (RAG): Reduces hallucinations and enforces data access controls

- Orchestration Layer: LangChain or Semantic Kernel managing multi-step agent workflows

- LLMOps: Versioned prompts, drift monitoring, evaluation pipelines

- Token Governance: Routing low-complexity tasks to Small Language Models (SLMs) to reduce inference cost

This technical foundation enables generative AI implementation best practices at scale.
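The Data Layer and RAG items in the pattern above can be illustrated with a toy in-memory retriever that applies role-based permissions before ranking by similarity. The three-dimensional embeddings are hand-written stand-ins; a real deployment would use an embedding model and a vector database, and the role names here are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    embedding: list[float]        # toy vector; normally produced by an embedding model
    allowed_roles: frozenset[str]  # roles permitted to retrieve this chunk


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: list[float], user_role: str, store: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return the top-k chunks that the user's role is permitted to see."""
    # Filter BEFORE ranking so restricted text never reaches the prompt.
    visible = [c for c in store if user_role in c.allowed_roles]
    return sorted(visible, key=lambda c: cosine(query, c.embedding), reverse=True)[:k]


store = [
    Chunk("Refund policy: 30 days", [0.9, 0.1, 0.0], frozenset({"support", "finance"})),
    Chunk("Q3 margin by product line", [0.1, 0.9, 0.2], frozenset({"finance"})),
    Chunk("Office holiday schedule", [0.0, 0.2, 0.9], frozenset({"support", "finance"})),
]

query = [0.3, 0.9, 0.1]  # pretend embedding of a question about Q3 margins
print([c.text for c in retrieve(query, "finance", store, k=1)])
print([c.text for c in retrieve(query, "support", store, k=1)])
```

The same query returns different context for different roles, which is the property that lets retrieval enforce data access controls rather than relying on the model to withhold information.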

Executive Insight:

Without retrieval grounding, orchestration layers, and cost routing, generative AI often produces impressive demonstrations but unreliable enterprise systems.


Why Are Data and Governance Critical to Generative AI Strategy Implementation?

Scalable generative AI strategy implementation starts with strong data and governance foundations. Enterprises that embed control mechanisms early avoid compliance risk, inconsistent outputs, and stalled AI adoption later.

Governance delays are now the leading cause of enterprise AI rollback. Programs that add compliance controls late often face adoption resistance, legal scrutiny, or restricted production usage.

Core governance and data principles:

- Data readiness first: Clean, permissioned, continuously updated enterprise data feeding RAG pipelines

- Human-in-the-loop controls: Mandatory review for high-impact or regulated decisions

- Auditability by design: Prompt and output logging for traceability and accountability

- Access enforcement: Role-based data permissions embedded into AI retrieval layers

- Regulatory alignment: EU AI Act, GDPR, and industry compliance built into the architecture

- Risk-tiered workflows: Clear approval paths based on use case risk classification
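Several of these principles (human-in-the-loop controls, auditability, and risk-tiered workflows) can be combined in a single gate that logs every exchange and holds higher-risk outputs for review. The sketch below is illustrative only: the three tiers, the review policy, and the in-memory log are assumptions, and a real system would write to durable, access-controlled storage.

```python
import json
from datetime import datetime, timezone
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical policy: which tiers need a human reviewer before release.
REQUIRES_REVIEW = {RiskTier.LOW: False, RiskTier.MEDIUM: True, RiskTier.HIGH: True}

# In-memory stand-in for an append-only audit store.
audit_log: list[str] = []


def handle_output(use_case: str, tier: RiskTier, prompt: str, output: str) -> str:
    """Log the exchange, then release it or hold it for human review."""
    status = "pending_review" if REQUIRES_REVIEW[tier] else "released"
    audit_log.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "use_case": use_case,
        "risk_tier": tier.value,
        "prompt": prompt,
        "output": output,
        "status": status,
    }))
    return status


print(handle_output("faq-bot", RiskTier.LOW, "Store hours?", "9am to 6pm"))
print(handle_output("credit-memo", RiskTier.HIGH, "Summarize exposure", "Draft summary"))
```

Logging happens before the release decision, so held outputs are still traceable even if a reviewer later rejects them.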

In 2026, the cost of inaction has shifted to the cost of bad governance. Enterprises that operationalize governance early scale generative AI faster, safer, and with sustained executive trust.


How Can CFOs Measure Generative AI ROI for Enterprises?

CFOs do not invest in innovation narratives. They invest in measurable financial outcomes. For generative AI to secure sustained funding, ROI must be calculated, tracked, and defended at the portfolio level.

This requires a standardized financial model that links generative AI implementation directly to efficiency, revenue, and cost governance.

The 2026 Enterprise AI ROI Formula

We track the Efficiency Multiplier to justify year-one generative AI investment and ensure financial accountability across scaled deployments.

$$
\text{ROI}_{\text{GenAI}} = \frac{(\text{Total Operational Savings} + \text{Total Revenue Lift}) - (\text{Token Costs} + \text{LLMOps Overhead})}{\text{Total Implementation Capital}}
$$

- Automation Yield: Percentage of tasks completed without human intervention.

- Token Efficiency: Cost per successful AI outcome compared to equivalent human labor cost.
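A minimal sketch of these calculations follows. It reads the ROI formula as net benefit (savings plus revenue lift, minus token costs and LLMOps overhead) divided by implementation capital, which is an assumption about the intended grouping. The function names and all dollar figures are hypothetical.

```python
def genai_roi(savings, revenue_lift, token_costs, llmops_overhead, implementation_capital):
    """Net benefit divided by implementation capital (assumed grouping of the formula)."""
    net_benefit = (savings + revenue_lift) - (token_costs + llmops_overhead)
    return net_benefit / implementation_capital


def automation_yield(tasks_without_human, total_tasks):
    """Share of tasks completed with no human intervention."""
    return tasks_without_human / total_tasks


def token_efficiency(ai_spend, successful_outcomes, human_cost_per_outcome):
    """AI cost per successful outcome relative to human cost; below 1.0 favors AI."""
    return (ai_spend / successful_outcomes) / human_cost_per_outcome


# Invented year-one figures for a single portfolio.
roi = genai_roi(
    savings=1_800_000,
    revenue_lift=600_000,
    token_costs=150_000,
    llmops_overhead=250_000,
    implementation_capital=1_000_000,
)
print(f"ROI: {roi:.2f}x")
print(f"Automation yield: {automation_yield(7_200, 10_000):.0%}")
print(f"Token efficiency: {token_efficiency(150_000, 60_000, 10.0):.2f}")
```

With these inputs the net benefit is $2.0M on $1.0M of capital. Whether run costs belong in the numerator or the denominator is a modeling choice to settle with finance before reporting.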

This financial model allows executives to defend AI investments using language finance teams already trust: cost efficiency, margin improvement, and capital productivity.

Target Payback Benchmarks

- Mid-market enterprises: under 9 months

- Global 2000 enterprises: under 14 months
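To check a program against these benchmarks, a simple payback calculation is usually enough. The sketch below assumes a flat monthly net benefit, which real programs rarely have, and the investment figures are made up for illustration.

```python
# Benchmarks from the list above, in months.
BENCHMARK_MONTHS = {"mid_market": 9, "global_2000": 14}


def payback_months(upfront_investment, monthly_net_benefit):
    """Months to recover the investment, or None if the benefit never covers it."""
    if monthly_net_benefit <= 0:
        return None
    return upfront_investment / monthly_net_benefit


def meets_benchmark(segment, upfront_investment, monthly_net_benefit):
    """True if payback falls under the benchmark for the given segment."""
    months = payback_months(upfront_investment, monthly_net_benefit)
    return months is not None and months < BENCHMARK_MONTHS[segment]


months = payback_months(900_000, 120_000)
print(f"Payback: {months:.1f} months")
print(meets_benchmark("mid_market", 900_000, 120_000))
```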

KPI Chains Linking AI to Financial Impact

- Customer Service AI Agents: AI suggestions → lower handle time → more cases per agent → reduced cost per case

- Document Automation: Auto-extraction → fewer manual checks → faster cycle times → SLA compliance → reduced operational cost
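The customer service chain above can be walked through with simple arithmetic. The hourly cost, handle times, and case volume below are hypothetical inputs chosen to keep the numbers easy to follow.

```python
def cost_per_case(agent_cost_per_hour: float, handle_minutes: float) -> float:
    """Labor cost of one case at a given average handle time."""
    return agent_cost_per_hour * handle_minutes / 60


AGENT_COST_PER_HOUR = 30.0   # assumed fully loaded cost
ANNUAL_CASES = 500_000       # assumed volume

# Step 1: AI suggestions lower average handle time from 10 to 8 minutes.
before = cost_per_case(AGENT_COST_PER_HOUR, handle_minutes=10.0)
after = cost_per_case(AGENT_COST_PER_HOUR, handle_minutes=8.0)

# Step 2: shorter handle time means more cases per agent-hour.
cases_per_hour_before = 60 / 10.0
cases_per_hour_after = 60 / 8.0

# Step 3: the per-case saving, multiplied by volume, is the financial impact.
annual_saving = (before - after) * ANNUAL_CASES

print(f"Cost per case: ${before:.2f} -> ${after:.2f}")
print(f"Cases per agent-hour: {cases_per_hour_before:.1f} -> {cases_per_hour_after:.1f}")
print(f"Annual labor saving: ${annual_saving:,.0f}")
```

This figure is gross of token and LLMOps costs, so it feeds the savings term of the ROI calculation rather than replacing it.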

This measurement framework makes generative AI ROI for enterprises transparent and defensible, enabling leadership teams to improve ROI with generative AI across business portfolios with financial clarity and board-level accountability.

What Are the True Generative AI Implementation Costs for Enterprises?

Generative AI implementation costs usually fall into a few predictable buckets. Below is an indicative year-one view for a mid to large enterprise running three to five meaningful use cases.

| Cost Component | Typical Year-One Range (USD) |
|---|---|
| Strategy & Discovery | $50K – $150K |
| Data & Integration Foundation | $100K – $400K |
| Platform & Infrastructure Setup | $75K – $250K |
| Build & Pilots (3–5 Use Cases) | $200K – $700K |
| Scaling & Change Management | $100K – $300K |
| Run-Rate Optimization | $100K – $250K |
| Post-Launch LLMOps & Token Governance | $75K – $200K |

Note: All figures are directional, in USD, and will vary by region, vendor choices, and scope.
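As a quick cross-check, the component ranges in the table can be summed to give an overall year-one envelope. The sketch below simply adds the low and high ends as listed; the variable names are illustrative.

```python
# (low, high) in thousands of USD, copied from the table above.
YEAR_ONE_COSTS_USD_K = {
    "Strategy & Discovery": (50, 150),
    "Data & Integration Foundation": (100, 400),
    "Platform & Infrastructure Setup": (75, 250),
    "Build & Pilots (3-5 Use Cases)": (200, 700),
    "Scaling & Change Management": (100, 300),
    "Run-Rate Optimization": (100, 250),
    "Post-Launch LLMOps & Token Governance": (75, 200),
}

total_low = sum(low for low, _ in YEAR_ONE_COSTS_USD_K.values())
total_high = sum(high for _, high in YEAR_ONE_COSTS_USD_K.values())

print(f"Year-one total: ${total_low}K to ${total_high / 1000:.2f}M")
```

The low end sums to $700K and the high end to $2.25M, slightly above the rounded $700K-$2M range quoted earlier in this guide. Few programs land at the top of every component at once, so the rounded figure remains a reasonable planning range.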

Run-cost drift after deployment is now the primary risk area in the implementation of generative AI.

While implementation costs are predictable, uncontrolled inference and token consumption are now the fastest-growing hidden expense in scaled AI deployments.


What Challenges in Generative AI Implementation Prevent Enterprise Scale?

Most enterprise AI initiatives stall not because the technology underperforms but because execution ownership, governance, and measurement models are unclear.

Even with budget, talent, and intent in place, challenges in generative AI implementation are rarely technical. They are more often structural, rooted in how enterprises select, design, and operationalize AI inside real workflows. Addressing them early determines whether AI scales or stalls.

Here are a few key generative AI implementation challenges:

- No Clear Ownership or Value Hypothesis

Many initiatives start as model experiments with no accountable business owner. Without defined KPIs and an enterprise generative AI business case, pilots fail to survive budget scrutiny. Successful programs assign ownership of outcomes before development begins.


- Fragmented Data and Legacy Infrastructure

Scattered data and aging systems cause hallucinations, latency, and fragile integrations. Enterprises must build data foundations first: governed data catalogs, API layers, and RAG pipelines that securely ground LLM outputs in enterprise knowledge.

- Underestimated Change Management

Generative AI reshapes workflows, roles, and decision paths. Without frontline co-design, training, and AI-assisted workflow adoption plans, resistance quietly undermines deployment.

- No Standard ROI Measurement Framework

Tracking prompt volume instead of financial impact leaves CFOs unconvinced. High-performing enterprises implement unified KPI chains linking AI activity to cost reduction, revenue uplift, and risk mitigation.


- Missing or Late Governance

Uncontrolled model access, unlogged prompts, and the absence of human-in-the-loop reviews expose enterprises to compliance and reputational risk. Embedding auditability, HITL controls, and risk-tiered governance from Phase 1 is now mandatory.

These generative AI implementation challenges define the gap between experimentation and scalable enterprise value.


What Are the Best Practices for Generative AI Adoption in Enterprises?

There is no universal generative AI implementation guide, but consistent best practices separate scaled enterprise programs from stalled pilots.

More than one-third of organizations applying best practices for generative AI adoption now operate centralized AI Centers of Excellence, reducing fragmentation and accelerating generative AI adoption across business units.

- Design for autonomous AI agents at scale. Start with narrow, high-impact use cases, but architect for future expansion. Build data pipelines, access controls, RAG layers, and observability as shared infrastructure to avoid disposable pilots.

- Tie every use case to a named business owner. Each initiative must carry a defined metric and an accountable leader. Without ownership, implementation of generative AI drifts from ROI into experimentation.

- Put data quality on the critical path. RAG pipelines, vector databases, and governed data catalogs must be production-ready before model deployment. Poor data surfaces immediately as hallucinations or compliance risk.

- Build cross-functional delivery squads. Effective generative AI implementation best practices require product, data, engineering, security, risk, and business teams jointly designing workflows and guardrails.

- Maintain HITL in high-risk workflows. Human-in-the-loop review remains mandatory for regulated, financial, or safety-critical decisions.

- Operationalize continuous evaluation through LLMOps. Version prompts, monitor drift, track token usage, and route workloads to cost-efficient models. Standardized LLMOps are now core to best practices for generative AI adoption.

Applied together, these practices turn generative AI implementation from isolated pilots into a governed, scalable enterprise capability.

How Should Enterprises Choose a Partner for Generative AI Strategy Implementation?

Enterprises rarely fail because they selected the wrong AI model. They fail because they selected partners that could build prototypes but could not operationalize enterprise-scale systems.

Enterprises selecting partners for generative AI strategy business implementation must prioritize operational execution over experimentation. The goal is not model deployment. It is a scalable, governed, and ROI-producing transformation.

The right partner connects enterprise generative AI roadmaps directly to P&L impact by delivering production-grade systems rather than isolated pilots.

Key evaluation criteria:

- Proven experience in LLM and generative AI implementation within regulated, complex enterprise environments

- Ability to deliver end-to-end generative AI implementation frameworks, from value discovery through scaled deployment

- Deep expertise in RAG architectures, workflow orchestration, and LLMOps cost governance

- Integration capability across core enterprise platforms such as ERP, CRM, data lakes, and identity systems

- Built-in human-in-the-loop controls, audit logging, and compliance alignment

- Shared accountability for generative AI ROI for enterprises, not just project delivery

The right partner does more than build solutions. They operationalize governance, control run-cost drift, and institutionalize best practices for implementing generative AI.

This is how enterprise generative AI business case execution moves from roadmap to measurable financial outcomes, securely, scalably, and board-ready.


How Does Appinventiv Deliver Enterprise Generative AI ROI?

At Appinventiv, we treat generative AI as a business instrument, not a lab experiment. As a generative AI development services provider, we start every engagement with your P&L priorities, then move into a focused portfolio of high-impact use cases that can prove or disprove ROI in months, not years.

Our teams have delivered 300+ AI-powered solutions across 35+ industries, giving us a clear view of where GenAI actually shifts unit economics and where it remains hype.

Behind that is a deep engineering bench: 200+ data scientists and AI engineers, 150+ custom AI models trained and deployed, and 50+ bespoke LLMs fine-tuned for specific domains and workloads.

We have also led 75+ enterprise AI integrations into CRMs, ERPs, core banking stacks, logistics platforms, and healthcare systems, so new capabilities land inside the tools your teams already use.

Our focus is not AI experimentation. It is production-grade AI systems that integrate with enterprise operations, financial measurement frameworks, and governance models from day one.

If your organization is running multiple generative AI implementation pilots but still lacks a clear ROI story, this is the inflection point to reset. Our generative AI development company can help you audit current initiatives, define a practical generative AI strategy, design the right architecture, and build production-grade solutions that your CFO, CIO, and business heads can all stand behind.

Frequently Asked Questions

Q. What are the business benefits of implementing a Generative AI strategy?

A. Generative AI drives measurable improvements in revenue, cost reduction, and risk management. Benefits include reduced operational costs through automation, faster decision-making, improved customer satisfaction, shorter cycle times, enhanced employee productivity, and creation of reusable data assets that compound value across the enterprise.

Q. How do you know if your enterprise is ready to scale generative AI?

A. A generative AI consulting partner usually uncovers these signals when evaluating whether AI investments are positioned to deliver measurable business ROI at scale.

- Multiple pilots running but no ROI baseline

- Token or inference cost unpredictability

- AI outputs disconnected from core systems

- Business teams using AI without governance controls

Q. What ROI can executives expect from Generative AI adoption?

A. Executives should target 6–12 month payback for operational use cases. Typical returns include 30-50% reduction in process time, 20-40% cost savings per task, improved conversion rates, higher CSAT/NPS scores, and reduced error rates. Strategic initiatives may require longer horizons but build lasting capabilities.

Q. What are the main challenges in implementing generative AI?

A. Key challenges in the implementation of generative AI include experimentation without clear ownership, fragmented and poor-quality data, legacy infrastructure constraints, underestimated change management needs, skills gaps, lack of standardized ROI measurement frameworks, and governance that either blocks progress or arrives too late to prevent risk.

Q. How should enterprises approach generative AI strategy implementation?

A. Start your generative AI strategy implementation by diagnosing the current baseline and value gaps. Prioritize 3-5 use cases tied to P&L impact using a scoring model. Design architecture and governance upfront. Run focused pilots with real users and metrics. Scale what works through industrialization. Measure continuously and optimize based on business outcomes.

Q. How does Appinventiv help enterprises implement Generative AI for ROI?

A. Appinventiv starts with P&L priorities, not technology. With 300+ AI solutions across 35+ industries, 200+ data scientists, and 75+ enterprise integrations, they design ROI-driven portfolios, build production-grade solutions, and integrate AI into existing systems such as CRMs and ERPs to drive measurable business impact.

Q. How can I implement Generative AI into my business?

A. Begin with an honest assessment of data readiness and value opportunities. Identify high-impact, high-feasibility use cases with clear business owners. Start small but design for scale. Build cross-functional teams including business, tech, and governance. Run disciplined pilots with defined metrics, then industrialize what proves ROI.
