- What AI Tokenization Means Today

- Why 2026 Matters for AI Tokenization in Asset Ownership

- How AI Strengthens Tokenization Systems

- Automated valuation and pricing

- Identity intelligence and verification

- Smart contract automation

- Risk scoring and fraud detection

- Continuous compliance and monitoring

- Enterprise Guide to Developing an AI Tokenization Platform

- Real-World Use Cases of Asset AI Tokenization

- 1. Real estate: Inveniam + Cushman & Wakefield

- 2. Banking and institutional finance: HSBC

- 3. Renewable energy and carbon credits: Powerledger

- 4. Asset management and digital securities: SIX Digital Exchange (SDX)

- How Tokenization Rules Differ Around the World

- United States

- Europe

- Middle East

- Asia Pacific

- Challenges of AI in Tokenization and How to Solve Them

- Legal classification and asset validity

- Data quality and valuation accuracy

- Smart contract risk and security

- Identity management and fraud

- Regulatory changes

- Liquidity and secondary market fragmentation

- Future Outlook for AI Tokenization in 2026 and Beyond

- Partner With Appinventiv for AI Tokenization

- FAQs

By 2026, AI tokenization has moved beyond early-stage experiments and pilot projects. Tokenizing real-world assets has become a serious commercial strategy for financial institutions, supply chain operators and technology-driven enterprises. A 2025 report by the World Economic Forum in collaboration with Accenture highlights tokenization as a key mechanism for value exchange in modern financial markets.

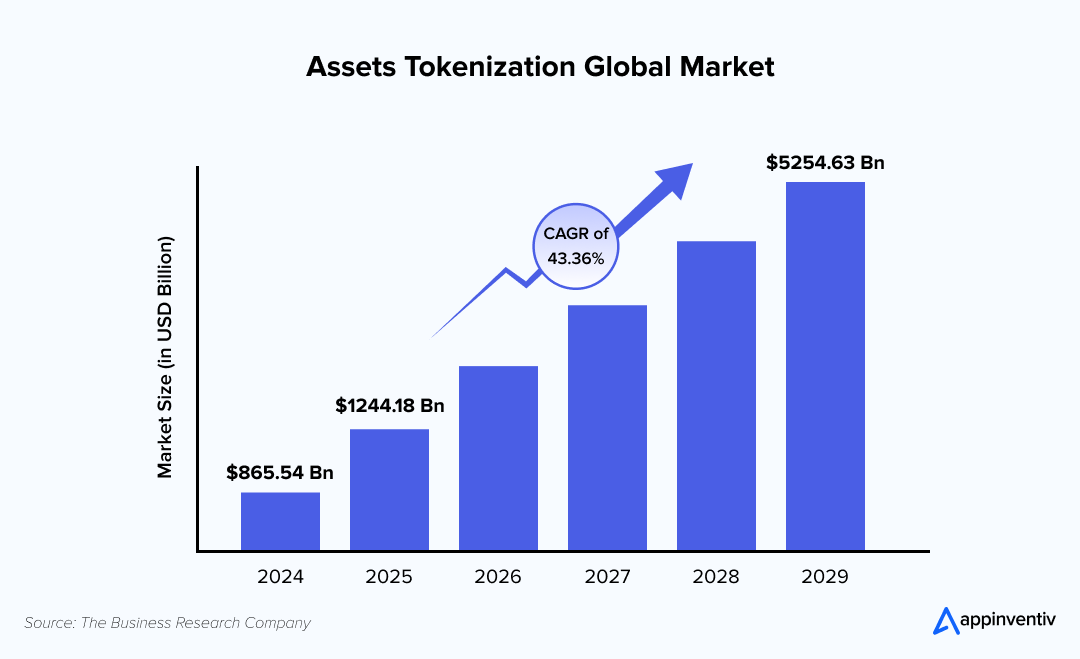

The overall market is expanding fast. Research from Coinlaw projects that the global asset tokenization market could reach USD 5,254.63 billion (about USD 5.25 trillion) by 2029 as businesses adopt digital forms of ownership, trading and asset management.

In this changing landscape, adding artificial intelligence to tokenized systems makes the idea of digital ownership more practical. AI tokenization for asset ownership brings automated valuation, identity intelligence, fraud prevention and continuous compliance to tokenized assets. These capabilities improve security and create more transparent asset ownership for enterprises and users.

This article explains how AI-powered tokenization works today, where it is headed in 2026 and why the model offers a future-ready foundation for secure digital ownership.

What AI Tokenization Means Today

AI tokenization is the process of converting physical or digital assets into blockchain-based tokens and adding intelligent automation to the way those tokens are valued, verified and tracked. While traditional tokenization focuses on recording ownership, AI tokenization for asset ownership introduces active decision-making and continuous monitoring.

AI agents in asset tokenization help platforms handle tasks that once needed manual review or third-party approval. This includes validating asset data, spotting irregular transactions and keeping ownership records updated.

Here are simple elements that define modern tokenization systems:

- Smart classification of assets using machine learning in asset tokenization

- Automated pricing models powered by real data

- Audit trails for transparent record keeping

- Permissioned access for compliance-based workflows

- Continuous checks for fraud and risk scoring

A practical AI tokenization example is dynamic real estate valuation. Instead of fixing a static value, AI uses data inputs like location, rental demand, historical pricing and risk indicators to update token values over time. This creates a more realistic way to represent and manage property on a digital ledger.

AI-powered asset tokenization is already changing how enterprises monitor valuations, track ownership data and control risk. By combining AI models with blockchain integrity, organizations can create systems that update themselves based on real-world conditions.

Why 2026 Matters for AI Tokenization in Asset Ownership

The next phase of AI tokenization in 2026 is shaped by enterprise adoption, regulatory clarity and the need for transparent digital ownership. Businesses are no longer experimenting with isolated pilots. They are looking for systems that support compliance, liquidity and long-term asset management.

Research from multiple financial reports suggests tokenized assets will continue moving into mainstream portfolios, driven by risk reduction and faster settlement cycles. This shift supports AI integration with tokenization as a practical business strategy rather than a technical concept.

The benefits of using AI tokenization for asset ownership go beyond automation. Better pricing insight, faster verification and reductions in operating risk are pushing more investors toward AI-driven tokenization markets. Key reasons 2026 matters:

- Regulators in major markets are drafting clearer rules for digital assets

- Institutional investors are funding AI-powered tokenized ownership products

- Secondary markets are forming for tokenized real estate, energy credits and intellectual property

- Enterprises want faster, data-driven verification with less manual review

The growth of AI-driven tokenization markets creates a new layer of competition in financial technology. Organizations that move early gain access to tools that improve valuation accuracy, support real-time monitoring and help manage asset risk at scale.

Together these changes set the stage for smarter, more secure ownership models and mark a meaningful step toward the future of tokenization and AI integration.

How AI Strengthens Tokenization Systems

AI adds intelligence on top of blockchain-based records. Instead of simply storing ownership data, AI turns tokenized assets into living digital entities that can react to changes, manage risk and update value over time. From a development perspective, this means designing systems that combine data engineering, machine learning and secure smart contracts in one structure.

Automated valuation and pricing

Most traditional tokenization platforms assign fixed values to assets at the time of issuance. This approach becomes outdated quickly.

With machine learning in asset tokenization, valuation models take in large volumes of data including market trends, asset condition, geographic risk and historical performance.

This is also where AI-based asset tokenization supports continuous price discovery. Developers can train valuation models on historical contracts, regional demand data and market signals, then re-run them as new data arrives so token values track current conditions.
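As a rough illustration of continuous price discovery, the sketch below re-prices a tokenized property from a few market signals. The `MarketSignals` fields, the `reprice` function and the weights are hypothetical and hand-set for readability; a production system would replace the weighted sum with a model trained on historical data.

```python
from dataclasses import dataclass


@dataclass
class MarketSignals:
    rental_demand_index: float    # 1.0 = baseline demand (hypothetical scale)
    regional_price_change: float  # e.g. 0.04 = +4% since the last valuation
    risk_score: float             # 0.0 (low risk) to 1.0 (high risk)


def reprice(last_value: float, s: MarketSignals, total_tokens: int) -> tuple[float, float]:
    """Return (asset_value, value_per_token) after applying the signals."""
    # Illustrative hand-set weights; a trained model would replace this step.
    adjustment = (
        0.5 * s.regional_price_change
        + 0.1 * (s.rental_demand_index - 1.0)
        - 0.05 * s.risk_score
    )
    asset_value = last_value * (1.0 + adjustment)
    return asset_value, asset_value / total_tokens


value, per_token = reprice(
    1_000_000.0, MarketSignals(1.2, 0.04, 0.2), total_tokens=10_000
)
print(round(value, 2), round(per_token, 2))
```

Keeping the per-token value as a derived figure means a new valuation never requires re-issuing tokens; only the reference price changes.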

Identity intelligence and verification

Identity has always been one of the biggest challenges in digital asset systems. AI helps detect fake identities, unusual login behavior and mismatched credential patterns. This supports stronger AI integration with tokenization and reduces human intervention in validation workflows. Implementation often includes identity graph analysis and multi-factor verification with a machine learning layer.

Smart contract automation

Developers can design smart contracts that trigger actions using AI signals. Examples include adjusting pricing based on risk scores or blocking transfers when fraud is likely. These contracts form the foundation of AI-powered asset tokenization because they allow the system to take action without waiting for manual approval.

- Event-based triggers

- Adaptive contract clauses

- Post-transaction review and logging

These structures are evolving into AI-powered tokenized ownership frameworks where transactions become conditional, adaptive and transparent.
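The trigger pattern described above can be sketched off-chain in a few lines. This is plain Python for illustration, not contract code: the `TransferGate` class and both thresholds are assumptions, and in a real deployment the fraud score would have to reach the contract through some trusted channel such as an oracle.

```python
from dataclasses import dataclass, field

BLOCK_THRESHOLD = 0.8   # hypothetical cut-off: block the transfer
REVIEW_THRESHOLD = 0.5  # hypothetical cut-off: allow, but flag for review


@dataclass
class TransferGate:
    log: list = field(default_factory=list)  # post-transaction review log

    def decide(self, token_id: str, sender: str, receiver: str, fraud_score: float) -> str:
        """Event-based trigger: map an AI fraud score to an action."""
        if fraud_score >= BLOCK_THRESHOLD:
            action = "blocked"
        elif fraud_score >= REVIEW_THRESHOLD:
            action = "allowed_pending_review"
        else:
            action = "allowed"
        # Every decision is logged, including the allowed ones.
        self.log.append((token_id, sender, receiver, fraud_score, action))
        return action


gate = TransferGate()
print(gate.decide("PROP-1", "alice", "bob", 0.12))
print(gate.decide("PROP-1", "bob", "carol", 0.91))
```

Logging allowed transfers alongside blocked ones is what makes post-transaction review possible later.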

Risk scoring and fraud detection

Tokenized systems that handle valuable assets need protection against malicious activity. With AI’s risk management capabilities, models can examine transaction flows, asset behavior and network history to detect patterns that look suspicious. This is a core part of implementing AI tokenization for enterprises because it supports audit readiness and reduces legal exposure.

Practical AI tokenization example: If a tokenized property suddenly changes owners multiple times in a short period, risk scoring models can freeze transfers, alert administrators or request additional verification.
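A simple version of that rapid-resale check might look like the following sketch. The limit of three transfers and the 24-hour window are made-up values, and `should_freeze` is a hypothetical helper; a real risk model would combine this signal with many others.

```python
from datetime import datetime, timedelta

MAX_TRANSFERS = 3             # hypothetical limit
WINDOW = timedelta(hours=24)  # hypothetical look-back window


def should_freeze(transfer_times: list[datetime], now: datetime) -> bool:
    """Freeze when more than MAX_TRANSFERS ownership changes fall inside WINDOW."""
    recent = [t for t in transfer_times if now - WINDOW <= t <= now]
    return len(recent) > MAX_TRANSFERS


now = datetime(2026, 1, 15, 12, 0)
# Four transfers in the last day, plus one older transfer outside the window.
history = [now - timedelta(hours=h) for h in (1, 3, 5, 7, 40)]
print(should_freeze(history, now))
```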

Continuous compliance and monitoring

Legal environments around tokenization are still developing. AI enables automated compliance checks based on jurisdiction, asset class and risk category. Developers can integrate rule-based logic with evolving datasets to support:

- AML checks

- KYC procedures

- Cross-border transfer rules

- Documentation requirements

This helps build AI tokenization platforms that remain aligned with regulatory standards without frequent manual oversight.
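The rule-based part of such a compliance layer can be sketched as a lookup table plus a checker. Everything here is a placeholder: the region names, the rule flags and the investor fields are invented for illustration and do not reflect the actual requirements of any jurisdiction.

```python
# Hypothetical rule table; real rules come from legal review, not from this sketch.
RULES = {
    "REGION_A": {"kyc_required": True, "accredited_only": True},
    "REGION_B": {"kyc_required": True, "accredited_only": False},
}


def compliance_failures(investor: dict, jurisdiction: str) -> list[str]:
    """Return the failed checks; an empty list means the transfer may proceed."""
    rules = RULES.get(jurisdiction)
    if rules is None:
        return ["unsupported_jurisdiction"]
    failures = []
    if rules["kyc_required"] and not investor.get("kyc_passed", False):
        failures.append("kyc")
    if investor.get("on_sanctions_list", False):
        failures.append("aml_sanctions")
    if rules["accredited_only"] and not investor.get("accredited", False):
        failures.append("accreditation")
    return failures


investor = {"kyc_passed": True, "accredited": False, "on_sanctions_list": False}
print(compliance_failures(investor, "REGION_A"))
print(compliance_failures(investor, "REGION_B"))
```

Returning the list of failed checks, rather than a bare yes or no, gives compliance teams a reason they can record for each rejected transfer.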

AI development in tokenization systems is a multi-layer process that combines storage, analysis and execution. The objective is to create secure token ownership for the long term while supporting systematic updates based on real-world conditions.

Enterprise Guide to Developing an AI Tokenization Platform

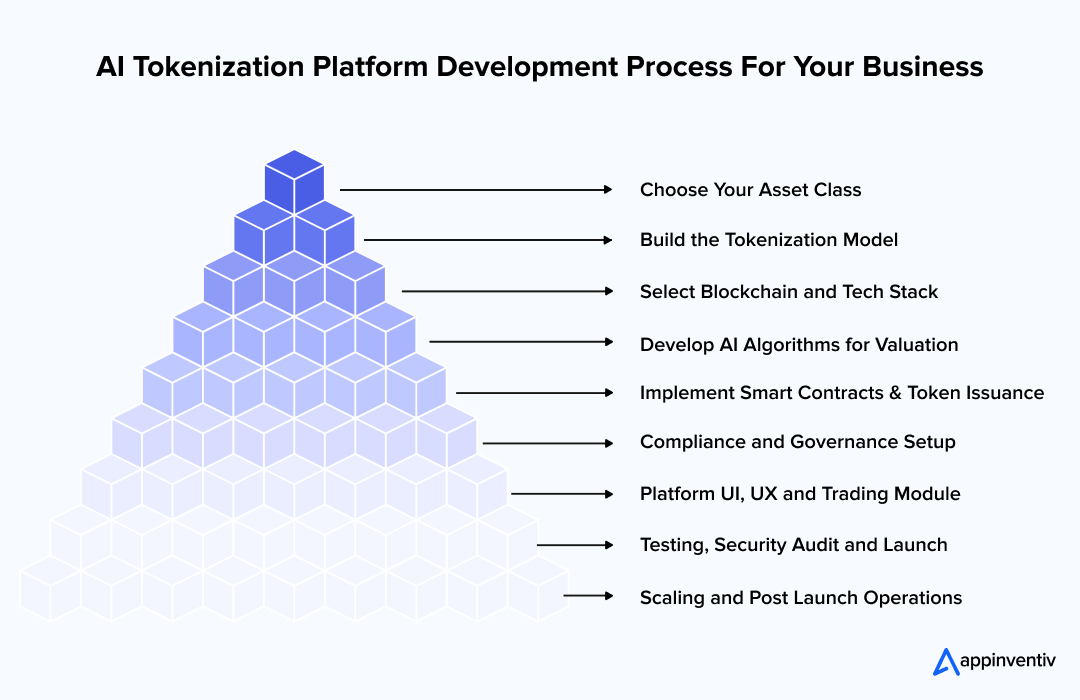

Enterprises need a clear roadmap when building an AI tokenization platform. Below is a structured plan showing the core development stages, from basic prototype to full-scale deployment.

- Choose Your Asset Class

Select what you want to tokenize. This can include real estate portfolios, corporate bonds, carbon credits, trade finance instruments, supply chain inventory, or enterprise data models. Your asset class determines compliance, valuation logic and technical complexity.

- Build the Tokenization Model

Define your objective and prepare a clear tokenization strategy. At this point, an enterprise can work with an AI tokenization development company that understands asset structuring, digital registries and on-chain logic.

- Select Blockchain and Tech Stack

Choose the underlying blockchain framework, token standard, wallet integrations and smart contract language. ERC-20 is the common standard for fungible tokens, while ERC-3643 is designed for permissioned security tokens with built-in identity checks. Enterprises should also plan for identity modules, audit logging and secure storage.

- Develop AI Algorithms for Valuation

Collect data, select machine learning techniques, and build models that track value, detect unusual transfers and monitor risk. Your AI models should support continuous price discovery and maintain a clear record of how valuations change over time.

- Implement Smart Contracts and Token Issuance

Create logic for token minting, ownership transfer and redemption. Ensure compatibility with enterprise identity systems, existing databases and any intended secondary markets. Smart contract testing is vital to prevent lockups or faulty transfers.

- Compliance and Governance Setup

Integrate automated KYC and AML checks, set user roles and support regulator-friendly reporting. Large enterprises should work with legal advisors early so regional and industry-specific rules are baked in from the start.

- Platform UI, UX and Trading Module

Design dashboards for asset issuers, investors and compliance teams. Include modules for transaction history, analytics and trade matching. Focus on straightforward navigation so adoption does not slow down operational teams.

- Testing, Security Audit and Launch

Conduct performance testing, penetration testing and manual code reviews before going live. Use external smart contract auditors to identify vulnerabilities and plan a secure staging deployment before the official release.

- Scaling and Post Launch Operations

Monitor platform performance, address user feedback and update AI models. Enterprise teams may later expand into new asset categories or support multi-region deployments as regulatory clarity improves.
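To make the smart contract stage of this roadmap more concrete, here is a minimal in-memory sketch of the mint, transfer and redeem lifecycle with an identity gate. `TokenLedger` is a hypothetical stand-in written in Python for readability; it is not an implementation of ERC-20 or ERC-3643, and real issuance logic would live in audited contract code.

```python
class TokenLedger:
    """In-memory stand-in for a permissioned token contract (illustrative only)."""

    def __init__(self) -> None:
        self.balances: dict[str, int] = {}
        self.verified: set[str] = set()  # holders that passed identity checks

    def verify(self, holder: str) -> None:
        self.verified.add(holder)

    def mint(self, to: str, amount: int) -> None:
        self._require_verified(to)
        if amount <= 0:
            raise ValueError("mint amount must be positive")
        self.balances[to] = self.balances.get(to, 0) + amount

    def transfer(self, sender: str, receiver: str, amount: int) -> None:
        # Check the receiver first so a rejected transfer changes nothing.
        self._require_verified(receiver)
        self._debit(sender, amount)
        self.balances[receiver] = self.balances.get(receiver, 0) + amount

    def redeem(self, holder: str, amount: int) -> None:
        self._debit(holder, amount)  # redeemed tokens leave circulation

    def total_supply(self) -> int:
        return sum(self.balances.values())

    def _debit(self, holder: str, amount: int) -> None:
        if amount <= 0 or self.balances.get(holder, 0) < amount:
            raise ValueError("invalid amount or insufficient balance")
        self.balances[holder] -= amount

    def _require_verified(self, holder: str) -> None:
        if holder not in self.verified:
            raise PermissionError(f"{holder} has not passed verification")


ledger = TokenLedger()
ledger.verify("issuer")
ledger.verify("alice")
ledger.mint("issuer", 1000)
ledger.transfer("issuer", "alice", 250)
ledger.redeem("alice", 50)
print(ledger.balances, ledger.total_supply())
```

Exercising paths like a transfer to an unverified holder in tests is one way to catch the lockups and faulty transfers mentioned in the smart contract stage.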

Real-World Use Cases of Asset AI Tokenization

AI tokenization now supports real asset ownership across finance, property, energy and digital rights. These examples show how adoption has moved from conceptual pilot programs to working systems with regulated institutions and global enterprises.

1. Real estate: Inveniam + Cushman & Wakefield

Inveniam works with global real estate services firm Cushman & Wakefield to tokenize commercial property data and support automated valuation through blockchain. The platform processes valuation data to support institutional real estate tokenization.

Development perspective: AI tokenization systems here use pricing engines and real-time valuation models fed by transactional data, occupancy rates and market history.

2. Banking and institutional finance: HSBC

HSBC uses a digital asset custody platform for tokenized securities, including digitally issued bonds and tokenized investment products. This shows that regulated infrastructure for real-world tokenization is already in place.

Development perspective: AI agents in asset tokenization help identify anomalies, flag suspicious transfers and support real-time risk scoring for large portfolios.

3. Renewable energy and carbon credits: Powerledger

Powerledger is tokenizing renewable energy credits so users can buy and sell solar energy units on decentralized marketplaces. Their most recent whitepaper describes tokenized clean energy trading across Australia, India and Japan.

Development perspective: AI models evaluate energy production forecasts, pricing behavior and regional demand to update token values dynamically.

4. Asset management and digital securities: SIX Digital Exchange (SDX)

The Swiss exchange SIX received approval from FINMA to launch the SIX Digital Exchange, which supports tokenized structured products and digital bond issuances. This adds regulated infrastructure for tokenized securities.

Development perspective: AI supports exposure analysis, compliance automation and transaction monitoring across tokenized securities.

These examples indicate that AI agents in asset tokenization are being deployed at scale, especially in highly regulated sectors like banking and energy. More enterprises are planning pilots in 2026, which makes the future of AI agents in asset tokenization a priority for development teams.

How Tokenization Rules Differ Around the World

Tokenization is global, but regulation is not. Every region is moving at a different pace with different rules for digital ownership, custody, transaction monitoring and disclosure. Organizations building AI tokenization platforms need to understand how these frameworks shape design, data processing, compliance workflows and asset governance.

United States

Regulators in the United States are focused on defining digital assets through existing financial laws rather than creating an entirely new category. The SEC has issued guidance on how tokenized securities must meet registration, disclosure and investor protection requirements. Federal frameworks focus on securities classification, while state treatment varies.

Developers working on AI tokenization models in the United States need to design systems that support:

- Know your customer checks

- Transaction reporting

- Custody controls

- Clear asset classification

Europe

Europe has taken a structured approach with MiCA, the Markets in Crypto-Assets Regulation. MiCA is creating standardized rules for issuing and trading tokenized assets across EU member states.

For data-linked tokenization, GDPR plays a major role. Any AI tokenization system handling personal data must use encryption, explicit consent and limited data retention. Systems should also track where data flows to remain compliant with cross-border data laws.

Middle East

Governments in the Gulf region are investing in regulated tokenized asset markets. Dubai’s Virtual Asset Regulatory Authority (VARA) and Abu Dhabi Global Market (ADGM) have created digital asset frameworks that allow token issuance and custody for institutional clients. Saudi initiatives around digital asset sandboxes are helping organizations test tokenization safely.

Enterprises exploring tokenization in this region often look for a mobile app development company in Dubai to build solutions that match local requirements and multilingual environments.

Asia Pacific

In Singapore, the Monetary Authority of Singapore (MAS) has issued clear guidelines for tokenized securities, stablecoin regulation and custody standards. These rules provide predictable compliance paths for projects.

Japan has updated its crypto asset regulation to support institutional tokenized securities and custody networks for financial products. Developers in Asia Pacific often incorporate rule-based compliance layers to support trading, reporting and security standards across borders.

Many global projects now rely on AI and tokenization development services to meet regional standards. Selecting the right asset tokenization platform development company becomes essential when systems need multilingual support, identity layers and scalable API integrations.

Challenges of AI in Tokenization and How to Solve Them

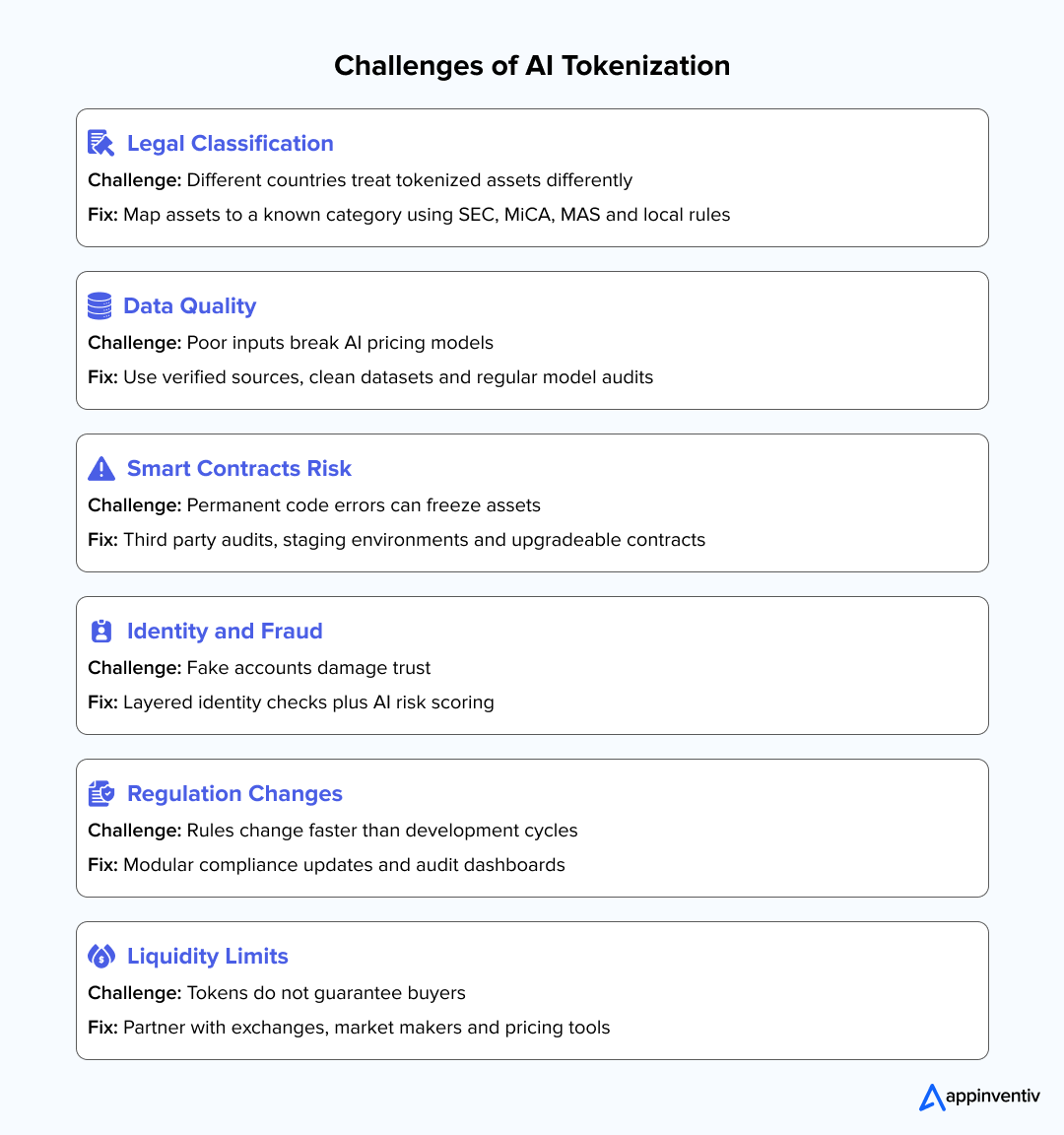

AI tokenization offers strong benefits for valuation, monitoring and transparent ownership, but it also introduces technical and regulatory hurdles. The most successful systems address these issues early, combining secure development practices with ongoing compliance updates.

Legal classification and asset validity

Tokenized assets are not always recognized in the same way across jurisdictions. Some countries treat them as securities, some as commodities and some as digital records with no clear legal standing. This creates uncertainty for AI-based asset tokenization projects.

How to overcome: Work with a compliance framework that maps the asset to a known category. Developers can implement asset classification modules that follow SEC, MiCA, MAS and local regulatory guidelines. Legal consultation during early design saves time later.

Data quality and valuation accuracy

AI valuation models need reliable data. Poor quality inputs create inaccurate pricing and unstable token value. This is one of the biggest challenges of AI in asset tokenization because valuation depends on external data feeds.

How to overcome: Use verified data sources, clean historical sets and validation rules. Development teams should run periodic model audits and maintain version control on pricing algorithms so updates remain transparent.
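Validation rules of this kind can be quite small. The sketch below rejects pricing records with missing fields, non-positive prices or implausible jumps before they reach a valuation model. The field names, the `validate_feed` helper and the 25% jump limit are assumptions chosen for illustration, and the function assumes `last_price` is positive.

```python
def validate_feed(record: dict, last_price: float, max_jump: float = 0.25) -> list[str]:
    """Return validation errors for one pricing record; an empty list means usable."""
    errors = []
    # Required fields (hypothetical schema).
    for key in ("asset_id", "price", "source"):
        if record.get(key) in (None, ""):
            errors.append(f"missing_{key}")
    price = record.get("price")
    if isinstance(price, (int, float)):
        if price <= 0:
            errors.append("non_positive_price")
        elif abs(price - last_price) / last_price > max_jump:
            # Large moves are held back for review rather than silently applied.
            errors.append("price_jump_exceeds_limit")
    return errors


good = {"asset_id": "PROP-1", "price": 105.0, "source": "feed_a"}
spike = {"asset_id": "PROP-1", "price": 140.0, "source": "feed_a"}
print(validate_feed(good, last_price=100.0))
print(validate_feed(spike, last_price=100.0))
```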

Smart contract risk and security

Smart contract code is generally immutable once deployed on a chain unless an upgrade mechanism is built in. A coding error can freeze assets, leak funds or allow unwanted transfers. This affects AI tokenization platforms because automation relies on contract triggers.

How to overcome: Perform third-party smart contract audits before deployment. Maintain a secure staging environment and use upgradeable contract structures where possible. Routine penetration testing should continue after launch.

Identity management and fraud

Token systems need reliable identity verification. Fraudulent accounts or abnormal transfers can harm user trust. This impacts AI tokenization for asset management especially when institutional investors are involved.

How to overcome: Apply layered identity controls such as biometrics, risk scoring and document verification. AI agents in fraud detection can track unusual patterns, but human review should confirm high-risk transactions.

Regulatory changes

Regulations evolve faster than development cycles. New rules may require updates in token transfer, custody handling or reporting. Developers need a way to update systems without rewriting core code.

How to overcome: Separate logic into modular components so compliance modules can be updated independently. Include reporting dashboards to generate audit-ready records automatically.
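One way to picture this separation is a registry of versioned compliance modules that the transfer path calls without knowing their contents. The `ComplianceRegistry` class, the rule name and the amount limits below are all hypothetical; the point of the sketch is only that a rule can be swapped without touching `evaluate`, and that each evaluation leaves an audit record.

```python
from typing import Callable

Check = Callable[[dict], bool]  # takes a transfer record, returns True if it passes


class ComplianceRegistry:
    def __init__(self) -> None:
        self._checks: dict[str, tuple[str, Check]] = {}
        self.audit_log: list[dict] = []

    def register(self, name: str, version: str, check: Check) -> None:
        """Add or replace a module; the calling transfer code does not change."""
        self._checks[name] = (version, check)

    def evaluate(self, transfer: dict) -> bool:
        results = {
            name: {"version": version, "passed": check(transfer)}
            for name, (version, check) in self._checks.items()
        }
        # Record which rule versions were applied, for audit-ready reporting.
        self.audit_log.append({"transfer": transfer, "results": results})
        return all(r["passed"] for r in results.values())


registry = ComplianceRegistry()
registry.register("amount_limit", "v1", lambda t: t["amount"] <= 10_000)
transfer = {"from": "alice", "to": "bob", "amount": 8_000}
print(registry.evaluate(transfer))

# A rule change is handled by swapping the module, not by editing evaluate().
registry.register("amount_limit", "v2", lambda t: t["amount"] <= 5_000)
print(registry.evaluate(transfer))
```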

Liquidity and secondary market fragmentation

Tokenizing an asset does not guarantee liquidity. If there are no secondary markets or buyers, tokens may sit idle. This limits growth for AI-powered tokenized ownership models.

How to overcome: Develop partnerships with regulated exchanges and marketplaces. Include automated investor matching and pricing discovery features for better liquidity. Market makers can support healthy trade activity.
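Automated investor matching can take many forms. As one simple possibility, the sketch below pairs the highest bids with the lowest asks and executes at the ask price. The `match_orders` function, the order tuple layout and the party names are illustrative assumptions, and the code assumes positive quantities; regulated venues apply far more detailed matching rules.

```python
Order = tuple[str, float, int]  # (party, price, quantity)


def match_orders(bids: list[Order], asks: list[Order]) -> list[tuple[str, str, float, int]]:
    """Match highest bids with lowest asks; returns (buyer, seller, price, quantity)."""
    bids = sorted(bids, key=lambda o: -o[1])
    asks = sorted(asks, key=lambda o: o[1])
    trades = []
    i = j = 0
    bid_left = bids[0][2] if bids else 0
    ask_left = asks[0][2] if asks else 0
    # Keep matching while the best bid still meets the best ask.
    while i < len(bids) and j < len(asks) and bids[i][1] >= asks[j][1]:
        qty = min(bid_left, ask_left)
        trades.append((bids[i][0], asks[j][0], asks[j][1], qty))  # trade at ask price
        bid_left -= qty
        ask_left -= qty
        if bid_left == 0:
            i += 1
            bid_left = bids[i][2] if i < len(bids) else 0
        if ask_left == 0:
            j += 1
            ask_left = asks[j][2] if j < len(asks) else 0
    return trades


bids = [("fund_a", 101.0, 50), ("fund_b", 99.0, 30)]
asks = [("holder_x", 100.0, 20), ("holder_y", 100.5, 40)]
for trade in match_orders(bids, asks):
    print(trade)
```

In this example `fund_b` stays unmatched because its bid is below every ask, which is the kind of gap a market maker would be expected to narrow.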

Building AI tokenization systems means planning beyond technical architecture. Addressing data reliability, regulatory shifts and security risks early helps organizations launch token platforms that are secure, compliant and sustainable.

Future Outlook for AI Tokenization in 2026 and Beyond

Tokenization will shift from experiments to standardized systems. Industry analysts expect AI tokenization to focus on data quality, modular architecture and risk scoring improvements rather than only on new asset types. The next few years will center on building stable, predictable infrastructure that supports compliance and real market activity.

- Hybrid AI-blockchain infrastructure: Development teams will combine on-chain records with off-chain data processing. This includes AI engines that manage pricing and fraud detection while the blockchain stores ownership history.

- Predictive compliance: AI will monitor evolving regulations and detect when a transaction may violate regional rules. Systems will generate alerts before a potential compliance issue occurs, reducing manual review.

- Interoperable blockchain identity standards: Identity frameworks will move toward shared credentials that work across exchanges and custodians. This supports faster onboarding and reduces risk around identity fraud in token transfers.

- Tokenized data ownership for AI models: Data used to train AI models will be tokenized so owners can track usage, grant access or monetize contributions. This supports transparent data exchanges for enterprises and research teams.

- ESG reporting linked through tokenization: Sustainability metrics will be stored as tokenized units. Organizations will track carbon credits, energy production and emissions through verifiable digital records.

- Tokenization combined with digital credentials: Credentials for licenses, certifications and access rights will be stored as secure tokenized assets. AI agents in asset tokenization models can verify authenticity without sharing raw data.

These possibilities are shaping the future of AI agents in asset tokenization and changing how enterprises plan their long-term digital ownership strategies.

Partner With Appinventiv for AI Tokenization

Building secure token systems requires more than blockchain. It calls for expertise in AI architecture, data governance, and compliance-aware development. Appinventiv supports enterprises that want to move from concept to production with AI tokenization solutions built for scalability and long-term asset ownership management.

Our team works across valuation engines, identity verification models, transaction risk scoring, and hybrid on-chain infrastructure. We provide full-stack support using AI development services to streamline asset workflows, digitize ownership rules, and support real-time operational visibility.

To further extend automation and monitoring, our AI agent development services help organizations build intelligent systems that track asset behavior, enforce smart contract logic, and alert teams to unusual activity. These agent-driven tools support continuous compliance and reduce operational risk.

Why enterprises choose Appinventiv

- Blockchain solutions deployed: 150+

- Smart contracts audited: 10+

- Years in blockchain research and development: 8+

- Partnerships with leading protocols: 8+

Appinventiv helps organizations design token models, integrate AI valuation systems and implement smart contract security. We assist with regulatory mapping, compliance automation and data privacy controls. For teams looking to tokenize assets responsibly, we deliver development support that protects users and aligns with industry standards.

To explore AI tokenization for your organization, connect with our specialists and discuss how Appinventiv can support your next build.

FAQs

Q. What is AI tokenization, and why does it matter for businesses?

A. AI tokenization converts physical or digital assets into blockchain-based tokens and adds intelligence through AI models. It matters because it improves transparency, pricing accuracy, fraud prevention and overall efficiency in digital asset ownership.

Q. What are the examples of AI agents in asset tokenization?

A. Common examples include AI agents for valuation, identity verification, transaction monitoring, risk scoring and compliance alerts. These agents are found in real estate platforms, energy trading systems and institutional digital asset custody.

Q. How does AI tokenization ensure secure asset ownership in 2026?

A. AI models track identity patterns, detect suspicious transfers and score risk before a transaction goes live. These features support secure asset ownership by reducing fraud, improving audit readiness and applying continuous compliance checks.

Q. What are the benefits of AI tokenized asset ownership for enterprises?

A. AI tokenization helps enterprises unlock value from existing assets, reduce operational effort, and improve the transparency of ownership records. It allows organizations to adopt smarter, data-backed asset strategies at scale.

Key benefits include:

- Access to high value assets through fractional ownership models

- Improved liquidity via secondary markets and smaller trading units

- Transparent ownership records backed by blockchain logs

- Lower transaction costs using automated valuation and smart contracts

- Stronger portfolio diversification across multiple asset categories

This supports enterprise growth by reducing operational bottlenecks and making asset management faster, more secure, and data-driven.

Q. How is AI integration helping to solve tokenization challenges?

A. AI integration helps fix data quality issues, improve valuation accuracy, identify risk behavior and streamline compliance workflows. Machine learning supports better pricing models, smarter smart contract triggers and automated regulatory reporting.

Q. What is the future of tokenization and AI integration?

A. Over the next few years, tokenization will focus on hybrid infrastructure, predictive compliance, standardized identity frameworks and tokenized data for AI models. The future of tokenization and AI integration points to more real-world use cases beyond finance.

Q. How much does it cost to build an AI tokenization platform?

A. Costs depend on scope, asset class, smart contract complexity and regulatory requirements. Basic platforms with a narrow scope need a smaller development budget, while enterprise systems with advanced features require a larger investment in design, AI models and security.

Q. How do you build an AI tokenization platform for your business?

A. Start by identifying asset types, designing valuation models, planning smart contract logic and preparing compliance documentation. Development usually includes data integration, identity systems, backend infrastructure, security controls and testing.

Q. Can Appinventiv assist with tokenization services?

A. Yes, Appinventiv provides AI and tokenization development services that cover architecture planning, valuation engine design, smart contract audits and scalability support.

Q. How can Appinventiv assist in implementing AI tokenization for enterprises?

A. Appinventiv helps with token model design, AI deployment, risk scoring systems, compliance workflows and secure platform development. The team supports long term growth with monitoring tools, privacy controls and custom integrations.
