- Understanding RNN Architecture - Their Advantages and Limitations

- The Limitations of RNNs

- The Emergence of Transformers in NLP - A Triumph Over RNN

- Working Mechanism of Transformer Architecture - A Perk Over RNN

- Comparative Analysis: Transformer vs RNN

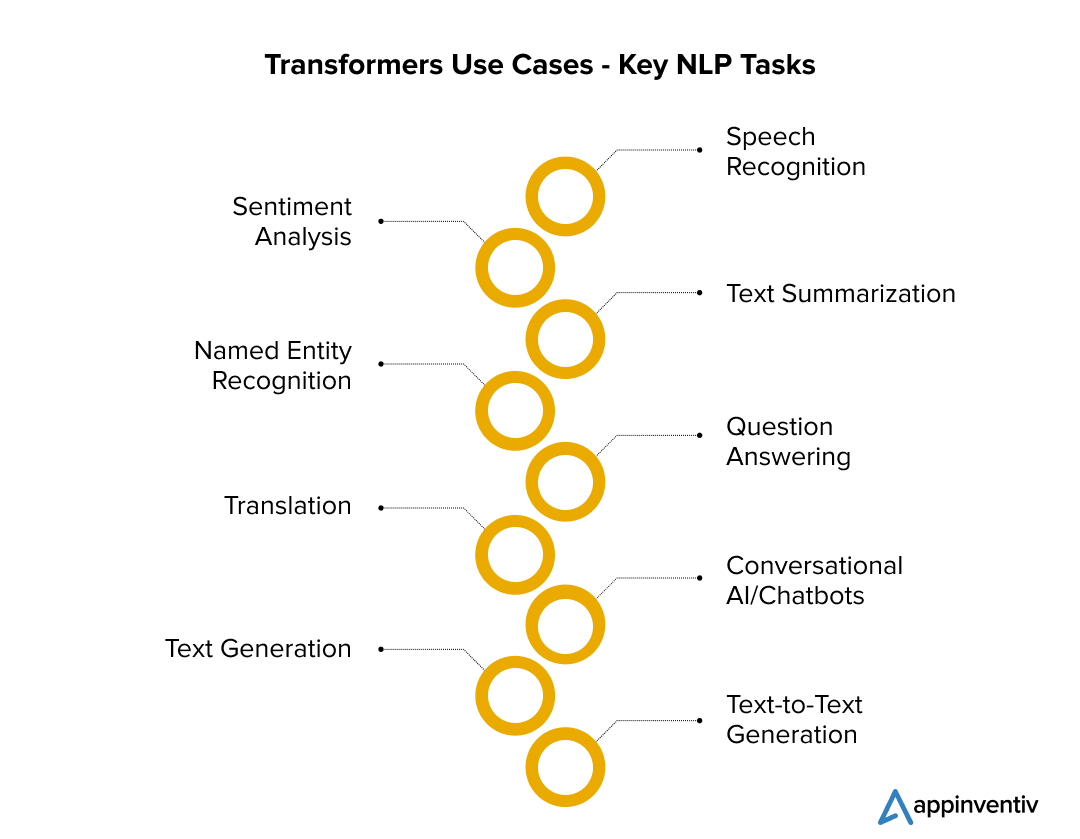

- NLP Transformers in Action: A World of Applications

- Speech Recognition

- Sentiment Analysis

- Text Summarization

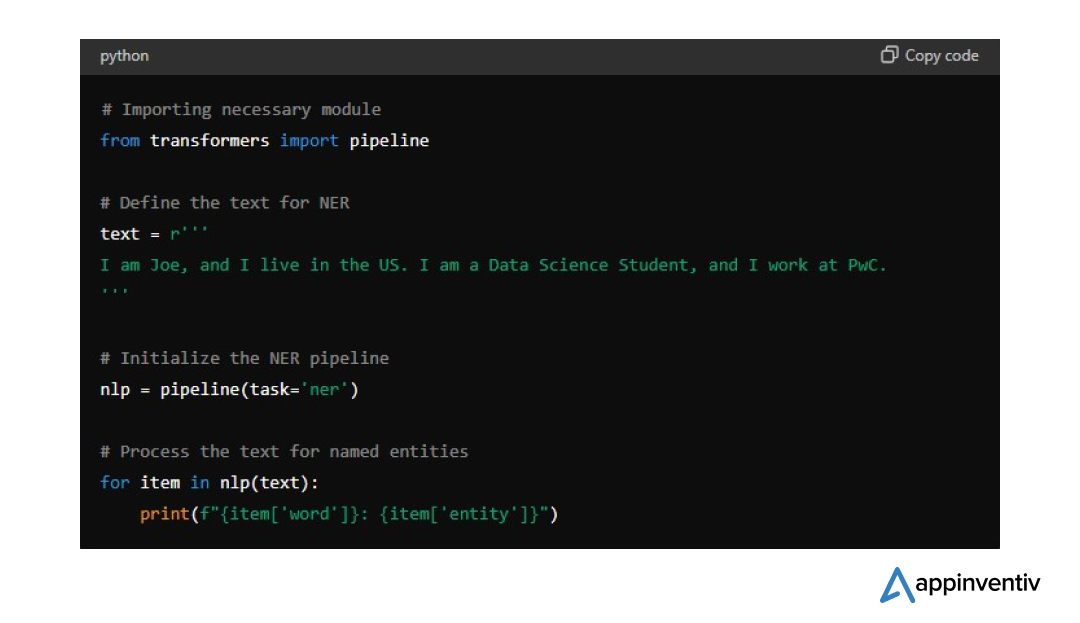

- Named Entity Recognition (NER)

- Question-Answering (QA)

- Machine Translation

- Conversational AI/Chatbots

- Text Generation

- Text-to-Text Generation

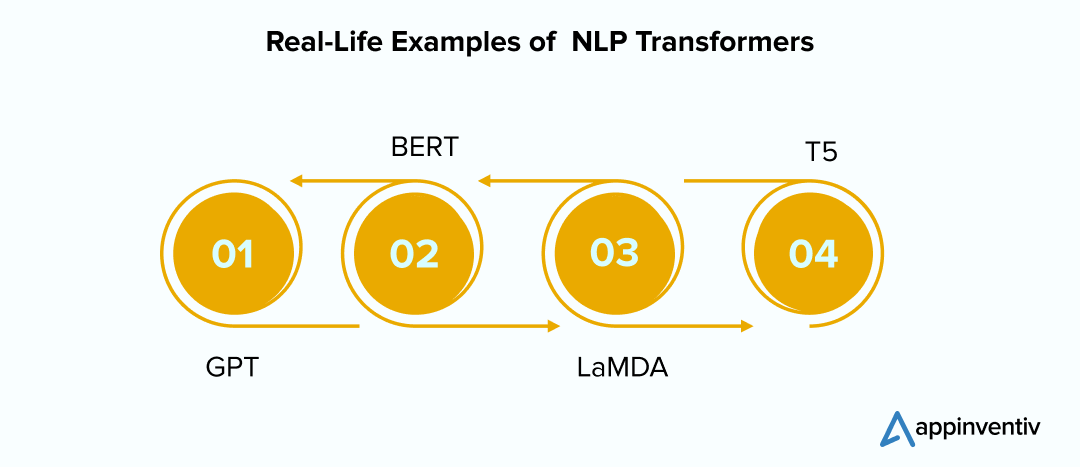

- Examples of Transformer NLP Models

- GPT and ChatGPT

- BERT

- LaMDA

- T5

- Future of NLP Transformers - Redefining the AI Era

- Enter into the Golden Era of NLP with Appinventiv

- FAQs

A major goal for businesses in the current era of artificial intelligence (AI) is to make computers comprehend and use language the way humans do. Many advances have contributed to this goal, and Natural Language Processing (NLP) plays a central role in achieving it.

NLP, a key part of AI, centers on helping computers and humans interact using everyday language. This field has seen tremendous advancements, significantly enhancing applications like machine translation, sentiment analysis, question-answering, and voice recognition systems. As our interaction with technology becomes increasingly language-centric, the need for advanced and efficient NLP solutions has never been greater.

Recurrent Neural Networks (RNNs) have traditionally played a key role in NLP due to their ability to process and maintain contextual information over sequences of data. This has made them particularly effective for tasks that require understanding the order and context of words, such as language modeling and translation. However, over the years of NLP’s history, we have witnessed a transformative shift from RNNs to Transformers.

The rise of Transformers over RNNs was not random. RNNs, designed to process information step by step, encountered several challenges. In contrast, Transformers in NLP have consistently outperformed RNNs across various tasks and address their challenges in language comprehension, text translation, and context capturing.

The emergence of Transformers has revolutionized the field of NLP. This new model in AI-town redefines how NLP tasks are processed in a way that no traditional machine learning algorithm could ever do before. Let’s dive into the details of Transformer vs. RNN to enlighten your artificial intelligence journey.

Understanding RNN Architecture – Their Advantages and Limitations

RNNs are a class of neural networks designed to handle sequential data. Unlike traditional feedforward neural networks, RNNs have connections that form directed cycles, allowing them to maintain a memory of previous inputs. This makes RNNs particularly suited for tasks where context and sequence order are essential, such as language modeling, speech recognition, and time-series prediction.

For example, in language modeling, RNNs can predict the next word in a sentence based on the words that came before it, thanks to their ability to capture temporal dependencies.
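
To make the idea of "memory of previous inputs" concrete, here is a minimal sketch of a single recurrent cell in plain Python. The weights are hand-picked toy values (an assumption for illustration, not a trained model), and the function names are hypothetical. The hidden state `h` is the only thing carried from one step to the next.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    hidden_size = len(h_prev)
    h_new = []
    for i in range(hidden_size):
        total = b_h[i]
        total += sum(w_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(w_hh[i][j] * h_prev[j] for j in range(hidden_size))
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(inputs, w_xh, w_hh, b_h):
    """Feed a sequence through the cell one element at a time."""
    h = [0.0] * len(b_h)
    for x in inputs:  # strictly sequential: step t needs the result of step t-1
        h = rnn_step(x, h, w_xh, w_hh, b_h)
    return h

# Toy, hand-picked weights (illustrative only, not trained).
W_XH = [[0.5, -0.3], [0.1, 0.8]]
W_HH = [[0.2, 0.0], [0.0, 0.2]]
B_H = [0.0, 0.0]

seq_a = [[1.0, 0.0], [0.0, 1.0]]
seq_b = [[0.0, 1.0], [1.0, 0.0]]  # same inputs, reversed order
final_a = run_rnn(seq_a, W_XH, W_HH, B_H)
final_b = run_rnn(seq_b, W_XH, W_HH, B_H)
```

Because each step depends on the previous hidden state, feeding the same inputs in a different order gives a different final state, which is how the cell encodes sequence order.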

The Limitations of RNNs

Even though RNNs offer several advantages in processing sequential data, they also have some limitations.

Vanishing and Exploding Gradient Problems

One of the significant challenges with RNNs is the vanishing and exploding gradient problem. During training, the gradients used to update the network’s weights can become very small (vanish) or very large (explode), making it difficult for the network to learn effectively.
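
A rough way to see why this happens: during backpropagation through time, the gradient is multiplied by a similar factor at every time step. The sketch below replaces that factor with a single scalar, which is a deliberate simplification (in a real network it is a matrix product that depends on the weights and activations); the function name is hypothetical.

```python
def gradient_scale(per_step_factor, num_steps):
    """Size of a gradient after being multiplied by the same factor at every step."""
    scale = 1.0
    for _ in range(num_steps):
        scale *= per_step_factor
    return scale

vanishing = gradient_scale(0.5, 50)  # a factor below 1 shrinks the gradient toward zero
exploding = gradient_scale(1.5, 50)  # a factor above 1 makes the gradient blow up
```

Over just 50 steps, a factor of 0.5 leaves almost nothing of the original signal, while a factor of 1.5 inflates it by many orders of magnitude.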

Long-Term Dependency Issues

The vanishing and exploding gradient problem hampers RNNs when it comes to capturing long-range dependencies in sequences, a key aspect of language understanding. This limitation makes it challenging for the models to handle tasks that require understanding relationships between distant elements in the sequence.

Computational Inefficiency

RNNs process sequences sequentially, which can be computationally expensive and time-consuming. This sequential processing makes it difficult to parallelize training and inference, limiting the scalability and efficiency of RNN-based models.

Let’s understand the limitations of RNNs in NLP through an example. Suppose an RNN has to translate the English sentence “Appinventiv is a leading app development company, formed in 2014” into French. The RNN would process this input word by word and, in a naive setup, sequentially produce the French equivalent of each word. Such output can contain irrelevant wording and grammatical errors because word order differs between languages, and in any language the sequence of words matters when forming a sentence.

These limitations of RNN models led to the development of the Transformer as an answer to RNN challenges.

The Emergence of Transformers in NLP – A Triumph Over RNN

The Transformer architecture, introduced in the groundbreaking 2017 paper “Attention Is All You Need” by Vaswani et al., has revolutionized the field of Natural Language Processing. Unlike previously dominant RNN-based models like LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit), Transformers do not rely on recurrence or convolution; instead, they use a self-attention mechanism that allows for more efficient processing of sequential data.

This innovation has led to significant improvements in both the performance and scalability of NLP models, making Transformers the new standard in the AI town.

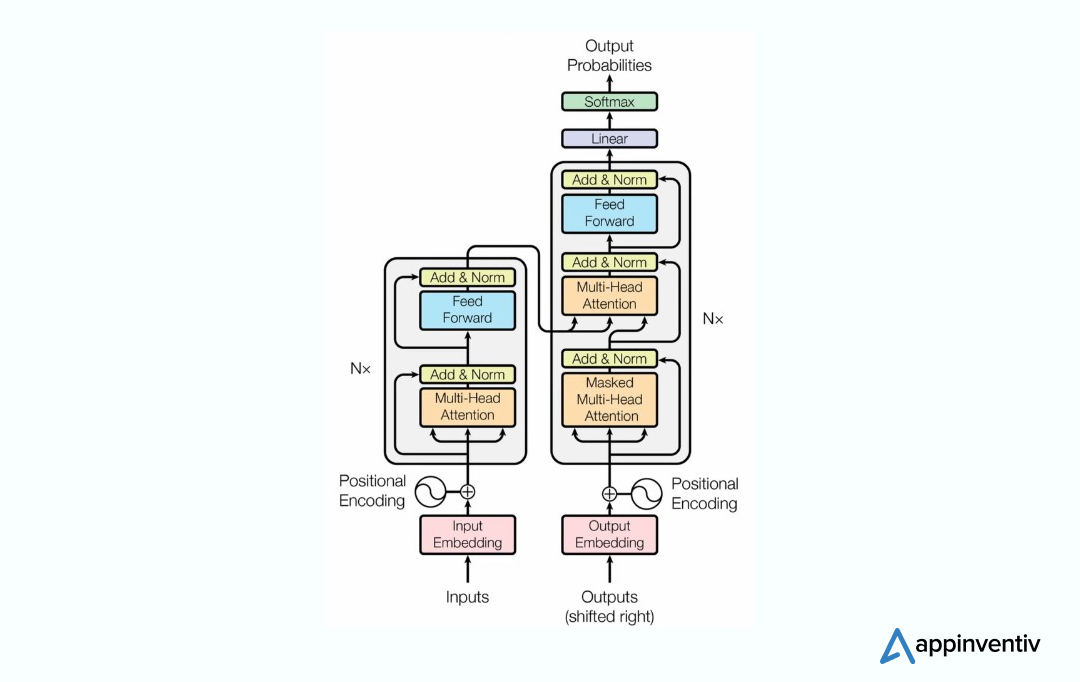

Working Mechanism of Transformer Architecture – A Perk Over RNN

Transformers’ self-attention mechanism enables the model to weigh the importance of every other word in a sequence while it is processing a given word. Because attention scores are computed over the entire sequence, the model can capture relationships between distant words directly. This capability addresses one of the key limitations of RNNs, which struggle with long-term dependencies due to the vanishing gradient problem.

Additionally, Transformers can process all positions of a sequence in parallel rather than one step at a time. This parallel processing capability drastically reduces the time required for training, and often for inference, making Transformers much more efficient, especially for large datasets.
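
The following is a minimal sketch of scaled dot-product self-attention in plain Python. It makes one simplifying assumption: queries, keys, and values are the input vectors themselves, whereas real Transformer layers first apply learned projections. The toy embeddings and function names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors.

    Simplification: no learned query, key, or value projections are applied.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this position against every position in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # The output is a weighted mix of all value vectors.
        mixed = [sum(w * v[d] for w, v in zip(weights, vectors))
                 for d in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Toy embeddings; the first and last tokens are identical.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(tokens)
```

In `weights[0]`, the first token attends to the last token just as strongly as to itself, even though they sit at opposite ends of the sequence: distance plays no role in the score. Each iteration of the outer loop is also independent of the others, which is what makes the computation easy to parallelize.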

Based on their applications and working mechanisms, Transformers can be divided into three main categories: Encoder, Decoder, and Encoder-Decoder. Here is a table illustrating these three types of Transformers in NLP, along with representative examples:

| Category | Description | Examples |
|---|---|---|
| Encoder | Encode input data into contextual representations to understand the entire input sequence. | BERT, RoBERTa, ALBERT |
| Decoder | Generate text by predicting the next word in the sequence based on previous words. | GPT-1, GPT-2, GPT-3, GPT-4, Transformer-XL |
| Encoder-Decoder | Transform one sequence into another by encoding the input and decoding it into an output sequence. | Original Transformer, T5, BART |
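
As a rough illustration of the Decoder category, the sketch below shows greedy autoregressive generation: predict the most likely next word, append it, and repeat. The score table is a hypothetical stand-in for a trained decoder, and for brevity it looks only at the last word, whereas a real Transformer decoder attends to the whole prefix.

```python
# Hypothetical next-word scores standing in for a trained decoder's predictions.
NEXT_WORD_SCORES = {
    "<start>": {"transformers": 0.9, "models": 0.1},
    "transformers": {"process": 0.7, "are": 0.3},
    "process": {"text": 0.8, "data": 0.2},
    "text": {"<end>": 1.0},
}

def next_word_scores(prefix):
    """Toy model: receives the full prefix but uses only its last word."""
    return NEXT_WORD_SCORES.get(prefix[-1], {"<end>": 1.0})

def generate(max_words=10):
    """Greedy autoregressive decoding over the toy model."""
    words = ["<start>"]
    for _ in range(max_words):
        candidates = next_word_scores(words)
        next_word = max(candidates, key=candidates.get)  # pick the top-scoring word
        if next_word == "<end>":
            break
        words.append(next_word)
    return " ".join(words[1:])

sentence = generate()
```

Production systems often sample from the predicted distribution or use beam search instead of always taking the top-scoring word; greedy decoding is used here only because it is the simplest variant to read.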

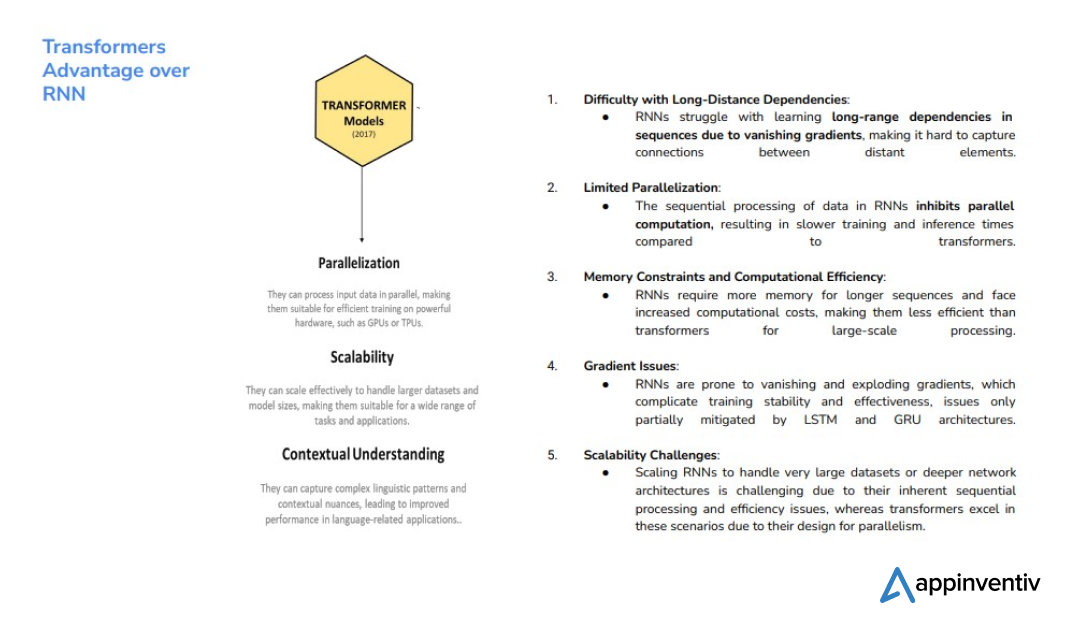

Comparative Analysis: Transformer vs RNN

To understand what the Transformer brings to NLP and how it outperforms the RNN, it helps to compare it directly with the previously dominant RNN model.

Here is a brief table outlining the key differences between RNNs and Transformers.

| Aspect | RNNs | Transformers |
|---|---|---|
| Efficiency and Performance | Sequential processing; computationally expensive | Parallel processing; highly efficient |
| Training Time and Resources | Longer training time; cannot be parallelized across time steps; less resource-intensive | Faster training on large datasets; higher memory usage (for example, GPT-3 drew on about 45TB of raw text before filtering) |
| Handling of Dependencies | Struggles with long-term dependencies | Excels at capturing long-range dependencies |
| Model Size and Scalability | Smaller models; difficult to scale; limited by sequential nature | Larger models; highly scalable; handle large datasets well |
| Adaptability to Various NLP Tasks | Good for specific tasks (e.g., language modeling) | Versatile; excels in diverse NLP applications |
| Parallelization | Limited parallelization due to sequential nature | Excellent parallelization capabilities, leading to faster training and inference |
| Scalability | Low scalability due to sequential processing and efficiency issues | Highly scalable, able to handle large models and datasets efficiently |
| Contextual Understanding | Inefficient in capturing context over long sequences | Superior contextual understanding, especially for long-range dependencies |
| Attention Mechanism | Lacks inherent attention mechanism; requires additional layers for attention | Built-in self-attention mechanism, enhancing the model’s ability to focus on relevant parts of the input |

NLP Transformers in Action: A World of Applications

Still unsure about the advantages and applications of Transformer vs. RNN? If the comparison above has not settled the question, the wide array of applications below will provide a deeper understanding. Transformers have revolutionized NLP with their ability to handle complex language tasks. Here are some key applications and use cases of Transformers for natural language processing:

Speech Recognition

Speech recognition, also known as speech-to-text, involves converting spoken language into written text. Transformer-based architectures like Wav2Vec 2.0 improve the accuracy of this task, which is essential for voice assistants, transcription services, and any application where spoken input needs to be converted into text accurately. Google Assistant and Apple Siri are prominent examples of products that rely on speech recognition.

Sentiment Analysis

Transformers for natural language processing can also help improve sentiment analysis by determining the sentiment expressed in a piece of text. Transformers like BERT, ALBERT, and DistilBERT can identify whether the sentiment is positive, negative, or neutral, which is valuable for market analysis, brand monitoring, and analyzing customer feedback to improve products and services.

Here is an example sentence to illustrate the process:

“The coffee at the cafe was good, but the service was awful.”

This sentence expresses mixed sentiment: positive about the coffee and negative about the service. Without the proper context, some language models may struggle to determine the sentiment correctly, whereas Transformers in NLP capture context more effectively.

Compare this with a second sentence: “The ambiance of the cafe was nice, but the coffee was awful; I wouldn’t go back.” Here, the overall sentiment is clearly negative.

Text Summarization

Text summarization involves creating a concise summary of a longer text while retaining its key information. Transformer models such as BART, T5, and Pegasus are particularly effective at this. This application is crucial for news summarization, content aggregation, and summarizing lengthy documents for quick understanding.

Named Entity Recognition (NER)

Named Entity Recognition (NER) is the process of identifying and classifying entities such as names, dates, and locations within a text. When performing NER, we assign specific entity labels (such as I-MISC, I-PER, I-ORG, I-LOC, etc.) to tokens in the text sequence. This helps extract meaningful information from large text corpora, enhance search engine capabilities, and index documents effectively. Transformers, with their high accuracy in recognizing entities, are particularly useful for this task.

The output of an NER model pairs each word in the text with its assigned entity label, such as person (PER), location (LOC), or organization (ORG), demonstrating how Transformers for natural language processing can effectively recognize and classify entities in text.
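
To show what such labeled output looks like and how it is typically consumed, here is a minimal sketch that groups per-token labels into entity spans. The predictions are hard-coded, hypothetical values standing in for a real model’s output, and `group_entities` is an illustrative helper rather than part of any library.

```python
def group_entities(tagged_tokens):
    """Merge consecutive tokens that share an entity label into (text, label) spans.

    Tokens labeled "O" are outside any entity and are skipped.
    """
    entities = []
    current_words, current_label = [], None
    for word, label in tagged_tokens:
        entity_type = label.split("-")[-1] if label != "O" else None
        # A change of label closes the span collected so far.
        if entity_type != current_label and current_words:
            entities.append((" ".join(current_words), current_label))
            current_words = []
        current_label = entity_type
        if entity_type is not None:
            current_words.append(word)
    if current_words:
        entities.append((" ".join(current_words), current_label))
    return entities

# Hypothetical per-token predictions, hard-coded in place of a real model's output.
predictions = [
    ("Ada", "I-PER"), ("Lovelace", "I-PER"), ("visited", "O"),
    ("Google", "I-ORG"), ("in", "O"), ("London", "I-LOC"),
]
entities = group_entities(predictions)
# entities == [("Ada Lovelace", "PER"), ("Google", "ORG"), ("London", "LOC")]
```

One limitation of this simple grouping: with `I-` labels alone, two adjacent entities of the same type would be merged into a single span.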

Question-Answering (QA)

QA systems use NLP with Transformers to provide precise answers to questions based on contextual information. This is essential for search engines, virtual assistants, and educational tools that require accurate and context-aware responses.

Transformer models like BERT, RoBERTa, and T5 are widely used in QA tasks due to their ability to comprehend complex language structures and capture subtle contextual cues. They enable QA systems to accurately respond to inquiries ranging from factual queries to nuanced prompts, enhancing user interaction and information retrieval capabilities in various domains.

Machine Translation

Transformers have significantly improved machine translation (the task of translating text from one language to another). Models like the original Transformer, T5, and BART can handle this by capturing the nuances and context of languages. They are used in translation services like Google Translate and multilingual communication tools, which we often use to convert text into multiple languages.

Here is an example to illustrate the process:

In the phrase ‘She has a keen interest in astronomy,’ the term ‘keen’ carries subtle connotations. A standard language model might mistranslate ‘keen’ as ‘intense’ (intenso) or ‘strong’ (fuerte) in Spanish, altering the intended meaning significantly.

A transformer model excels in accurately translating such nuances, ensuring that ‘keen’ is correctly interpreted as ‘gran’ in Spanish to convey the precise meaning: “Ella tiene un gran interés en la astronomía.”

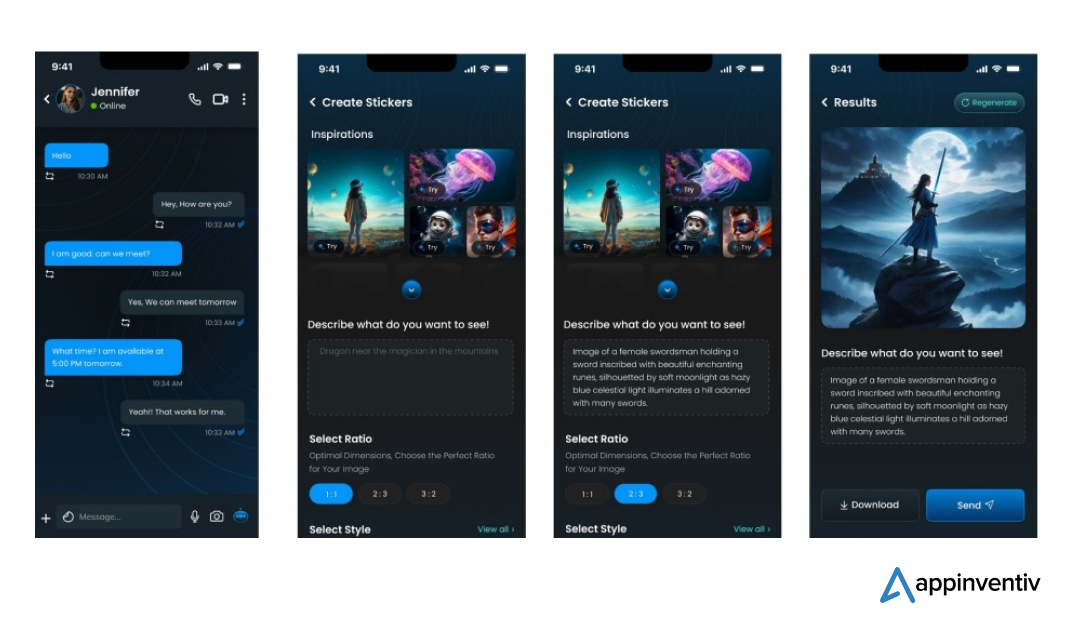

Conversational AI/Chatbots

Transformers power many advanced conversational AI systems and chatbots, providing natural and engaging responses in dialogue systems. These chatbots leverage machine learning and NLP models trained on extensive datasets containing a wide array of commonly asked questions and corresponding answers. The primary objective of deploying chatbots in business contexts is to promptly address and resolve typical queries. If a query remains unresolved, these chatbots redirect the questions to customer support teams for further assistance.

Text Generation

Transformer models like GPT-2, GPT-3, and GPT-4 can generate creative, human-like text in many formats, such as scripts, poems, code, musical pieces, emails, and letters. They are highly suitable for creative writing, generating dialogue for conversational agents, and completing text based on a given prompt.

Text-to-Text Generation

Transformers like T5 and BART can convert one form of text into another, such as paraphrasing, text rewriting, and data-to-text generation. This is useful for tasks like creating different versions of a text, generating summaries, and producing human-readable text from structured data.

Examples of Transformer NLP Models

When weighing Transformer vs. RNN, the benefits of Transformers far outweigh those of traditional NLP models. This is why, in recent years, an increasing number of businesses across industries have come to rely on transformer-based models to perform various NLP tasks, address RNN challenges, and redefine their operations. Here are some of the most notable examples of Transformer NLP models:

GPT and ChatGPT

OpenAI’s GPT (Generative Pre-trained Transformer) and ChatGPT are advanced NLP models known for their ability to produce coherent and contextually relevant text. GPT-1, the initial model launched in June 2018, set the foundation for subsequent versions. GPT-3, introduced in 2020, represents a significant leap with enhanced capabilities in natural language generation.

These models excel across various domains, including content creation, conversation, language translation, customer support interactions, and even coding assistance.

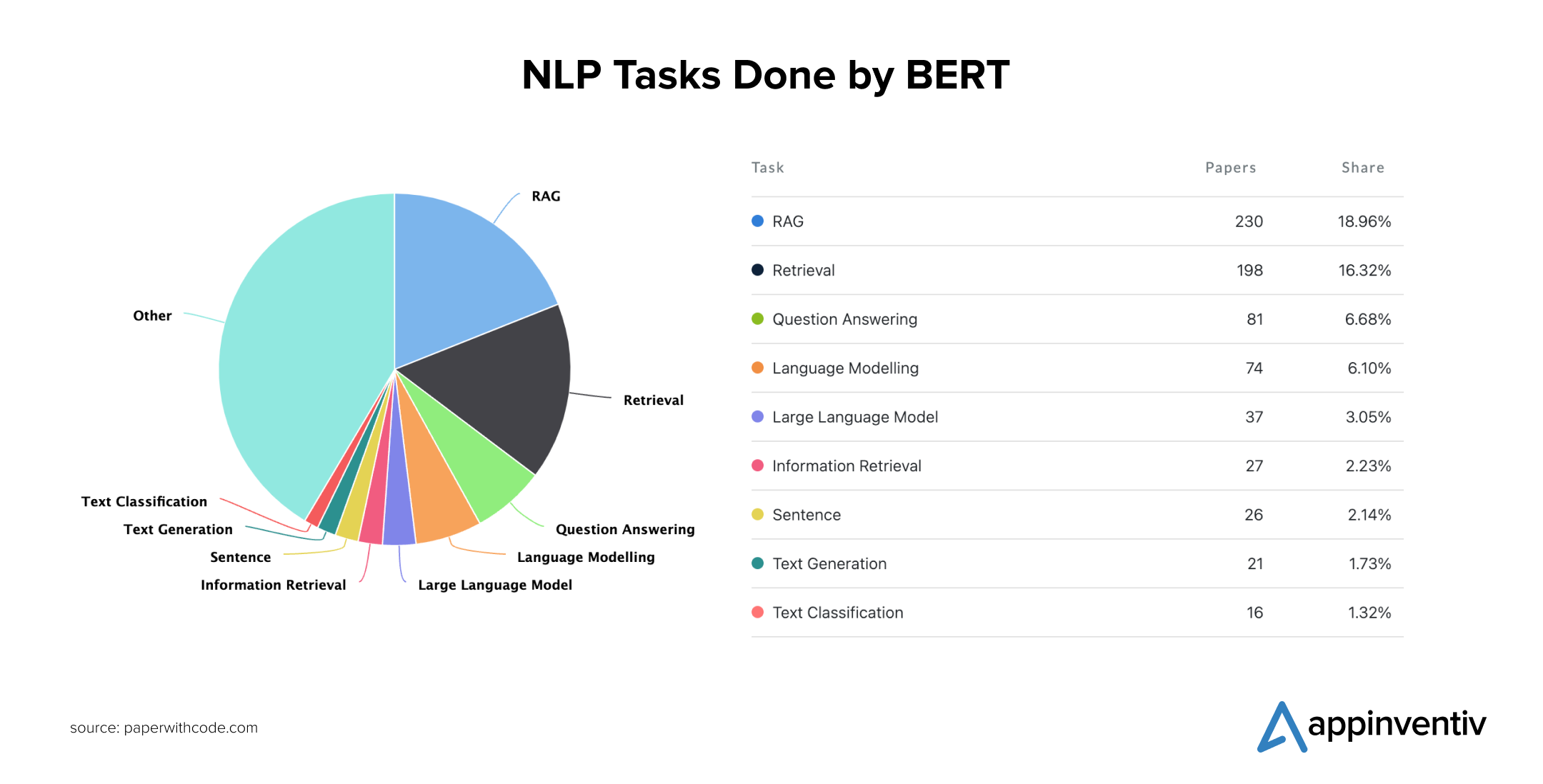

BERT

Introduced by Google in 2018, BERT (Bidirectional Encoder Representations from Transformers) is a landmark model in natural language processing. It revolutionized language understanding by leveraging bidirectional training to capture intricate linguistic context, which improves accuracy on complex language understanding tasks.

BERT’s versatility extends to various applications such as sentiment analysis, named entity recognition, and question answering.

LaMDA

In 2021, Google introduced LaMDA (Language Model for Dialogue Applications), a language model aimed specifically at enhancing dialogue applications and conversational AI systems. This language model represents Google’s advancement in natural language understanding and generation technologies.

Unlike RNN, this model is tailored to understand and respond to specific queries and prompts in a conversational context, enhancing user interactions in various applications.

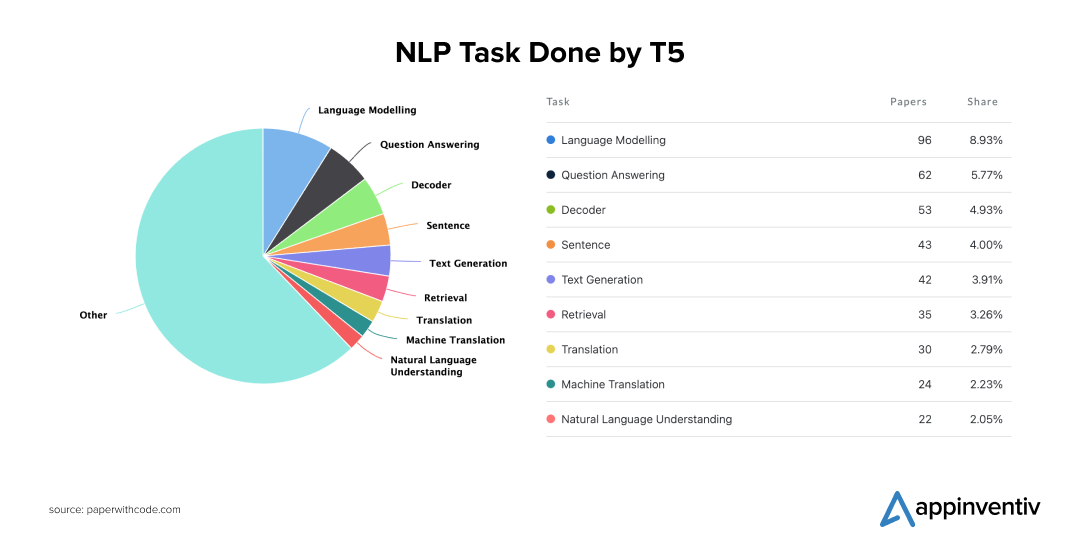

T5

T5 (Text-To-Text Transfer Transformer) is another versatile model designed by Google AI in 2019. It is known for framing all NLP tasks as text-to-text problems, which means that both the inputs and outputs are text-based. This approach allows T5 to handle diverse functions like translation, summarization, and classification seamlessly.
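
The text-to-text framing can be sketched as follows: every task becomes a plain string in and a plain string out, with a short prefix telling the model which task to perform. The prefixes below follow the style used by T5, but the exact strings a given checkpoint expects may differ, and `to_text_to_text` is a hypothetical helper.

```python
# Task prefixes in the style used by T5; treat the exact strings as assumptions.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
}

def to_text_to_text(task, text):
    """Turn a (task, input) pair into the single input string a text-to-text model reads."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text

translation_input = to_text_to_text("translate_en_de", "The house is wonderful.")
summary_input = to_text_to_text("summarize", "Transformers process sequences in parallel.")
```

Because the task is encoded in the input text itself, one model with one output format can serve translation, summarization, and classification without task-specific heads.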

T5 can be applied in industries such as retail and eCommerce to generate high-quality translations, concise summaries, reviews, and product descriptions.

Future of NLP Transformers – Redefining the AI Era

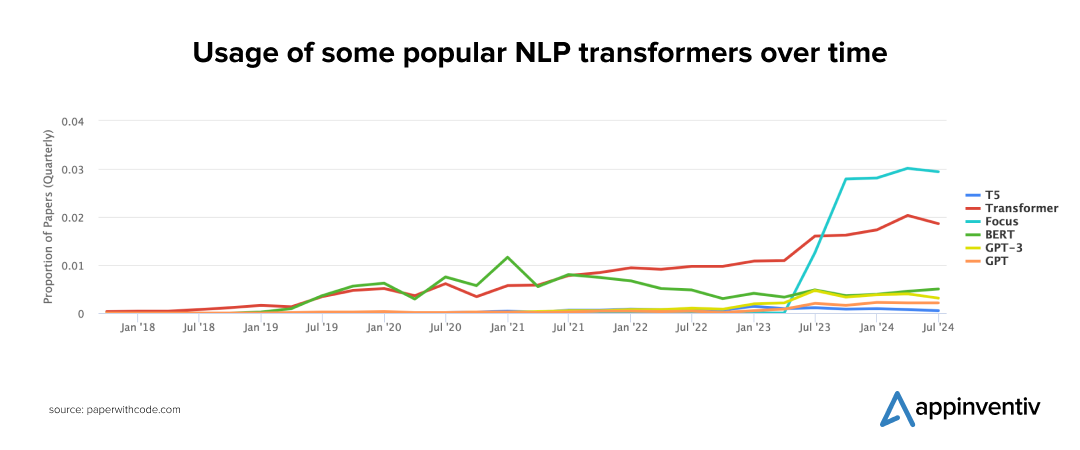

In the contest of RNN vs. Transformer, the latter has won the trust of technologists, continuously pushing the boundaries of what is possible and revolutionizing the AI era. While Transformers are currently used for the common NLP tasks mentioned above, researchers are discovering new applications every day.

Accordingly, the future of Transformers looks bright, with ongoing research aimed at enhancing their efficiency and scalability, paving the way for more versatile and accessible applications.

For instance, the ever-increasing advancements in popular transformer models such as Google’s PaLM 2 or OpenAI’s GPT-4 indicate that the use of transformers in NLP will continue to rise in the coming years.

Advances in NLP with Transformers facilitate their deployment in real-time applications such as live translation, transcription, and sentiment analysis. Additionally, integrating Transformers with multiple data types—text, images, and audio—will enhance their capability to perform complex multimodal tasks.

Transformers will also see increased use in domain-specific applications, improving accuracy and relevance in fields like healthcare, finance, and legal services. Furthermore, efforts to address ethical concerns, break down language barriers, and mitigate biases will enhance the accessibility and reliability of these models, facilitating more inclusive global communication.

Put simply, research is a driving force behind the rapid advancements in NLP Transformers, unveiling new use cases at an unprecedented pace and shaping the future of these models. These ongoing advancements in NLP with Transformers across various sectors will redefine how we interact with and benefit from artificial intelligence.

Enter into the Golden Era of NLP with Appinventiv

As businesses strive to adopt the latest in AI technology, choosing between Transformer and RNN models is a crucial decision. In the ongoing evolution of NLP and AI, Transformers have clearly outpaced RNNs in performance and efficiency.

Their ability to handle parallel processing, understand long-range dependencies, and manage vast datasets makes them superior for a wide range of NLP tasks. From language translation to conversational AI, the benefits of Transformers are evident, and their impact on businesses across industries is profound.

Interested in integrating these cutting-edge models into your operations? Let’s dive into this exciting adventure with Appinventiv. As a leading AI development company, we excel at developing and deploying Transformer-based solutions, enabling businesses to enhance their AI initiatives and take their businesses to the next level.

Gurushala, Mudra, Tootle, JobGet, Chat & More, HouseEazy, YouComm, and Vyrb are some of our esteemed clients who have leveraged our AI capabilities to build next-gen applications and unlock new possibilities in today’s tech world.

Still unsure about transformer vs. recurrent neural network capability? Get in touch with us to uncover more and learn how you can leverage transformers for natural language processing in your organization.

FAQs

Q. What are transformers in NLP?

A. Transformers in NLP are a type of deep learning model specifically designed to handle sequential data. They use self-attention mechanisms to weigh the significance of different words in a sentence, allowing them to capture relationships and dependencies without sequential processing like in traditional RNNs.

Q. How is a Transformer different from an RNN with attention?

A. Transformers and RNNs both handle sequential data but differ in their approach, efficiency, performance, and many other aspects. For instance, Transformers utilize a self-attention mechanism to evaluate the significance of every word in a sentence simultaneously, which lets them handle longer sequences more efficiently.

This “looking at everything at once” approach means transformers are more parallelizable than RNNs, which process data sequentially. This parallel processing capability gives natural language processing with Transformers a computational advantage and allows them to capture global dependencies effectively.

Thus, when comparing RNN vs. Transformer, we can say that RNNs are effective for some sequential tasks, while transformers excel in tasks requiring a comprehensive understanding of contextual relationships across entire sequences.

Q. What are the advantages of Transformers in NLP?

A. There are many advantages of using Transformers in NLP, such as:

- Transformers excel at capturing relationships between words across long distances, which is crucial for understanding context in NLP tasks.

- They can process and analyze input data in parallel, making them faster and more efficient than sequential models like RNNs.

- The parallelizable self-attention mechanism of the Transformer architecture enables it to scale up efficiently, handling large datasets and complex tasks without compromising performance.

- Pre-trained transformer models (like BERT and GPT) can be fine-tuned for specific tasks with relatively small amounts of task-specific data, offering improved performance and faster deployment.

- Natural language processing with transformers has achieved state-of-the-art results in various NLP benchmarks. It demonstrates their effectiveness in tasks such as language translation, text classification, and summarization.

These game-changing benefits lead businesses to choose Transformers when evaluating Transformer vs. RNN.
